ANOVA (Analysis of variance) – Formulas, Types, and Examples

Analysis of Variance (ANOVA)

Analysis of Variance (ANOVA) is a statistical method used to test differences between two or more means. It is similar to the t-test, but the t-test is generally used for comparing two means, while ANOVA is used when you have more than two means to compare.

ANOVA is based on comparing the variance (or variation) between the data samples to the variation within each particular sample. If the between-group variance is high and the within-group variance is low, this provides evidence that the means of the groups are significantly different.

ANOVA Terminology

When discussing ANOVA, there are several key terms to understand:

- Factor : This is another term for the independent variable in your analysis. In a one-way ANOVA, there is one factor, while in a two-way ANOVA, there are two factors.

- Levels : These are the different groups or categories within a factor. For example, if the factor is ‘diet’ the levels might be ‘low fat’, ‘medium fat’, and ‘high fat’.

- Response Variable : This is the dependent variable or the outcome that you are measuring.

- Within-group Variance : This is the variance or spread of scores within each level of your factor.

- Between-group Variance : This is the variance or spread of scores between the different levels of your factor.

- Grand Mean : This is the overall mean when you consider all the data together, regardless of the factor level.

- Treatment Sums of Squares (SS) : This represents the between-group variability. It is the sum of the squared differences between the group means and the grand mean.

- Error Sums of Squares (SS) : This represents the within-group variability. It’s the sum of the squared differences between each observation and its group mean.

- Total Sums of Squares (SS) : This is the sum of the Treatment SS and the Error SS. It represents the total variability in the data.

- Degrees of Freedom (df) : The degrees of freedom are the number of values that have the freedom to vary when computing a statistic. For example, if you have ‘n’ observations in one group, then the degrees of freedom for that group is ‘n-1’.

- Mean Square (MS) : Mean Square is the average squared deviation and is calculated by dividing the sum of squares by the corresponding degrees of freedom.

- F-Ratio : This is the test statistic for ANOVAs, and it’s the ratio of the between-group variance to the within-group variance. If the between-group variance is significantly larger than the within-group variance, the F-ratio will be large and likely significant.

- Null Hypothesis (H0) : This is the hypothesis that there is no difference between the group means.

- Alternative Hypothesis (H1) : This is the hypothesis that there is a difference between at least two of the group means.

- p-value : This is the probability of obtaining a test statistic at least as extreme as the one actually observed, assuming that the null hypothesis is true. If the p-value is less than the significance level (usually 0.05), the null hypothesis is rejected in favor of the alternative hypothesis.

- Post-hoc tests : These are follow-up tests conducted after an ANOVA when the null hypothesis is rejected, to determine which specific groups’ means (levels) are different from each other. Examples include Tukey’s HSD, Scheffe, Bonferroni, among others.

Types of ANOVA

Types of ANOVA are as follows:

One-way (or one-factor) ANOVA

This is the simplest type of ANOVA, which involves one independent variable. For example, comparing the effect of different types of diet (vegetarian, pescatarian, omnivore) on cholesterol level.

Two-way (or two-factor) ANOVA

This involves two independent variables. It allows you to test the effect of each independent variable on the dependent variable, as well as whether there is an interaction effect between the independent variables on the dependent variable.

Repeated Measures ANOVA

This is used when the same subjects are measured multiple times under different conditions, or at different points in time. This type of ANOVA is often used in longitudinal studies.

Mixed Design ANOVA

This combines features of both between-subjects (independent groups) and within-subjects (repeated measures) designs. In this model, one factor is a between-subjects variable and the other is a within-subjects variable.

Multivariate Analysis of Variance (MANOVA)

This is used when there are two or more dependent variables. It tests whether changes in the independent variable(s) correspond to changes in the dependent variables.

Analysis of Covariance (ANCOVA)

This combines ANOVA and regression. ANCOVA tests whether certain factors have an effect on the outcome variable after removing the variance for which quantitative covariates (interval variables) account. This allows the comparison of one variable outcome between groups, while statistically controlling for the effect of other continuous variables that are not of primary interest.

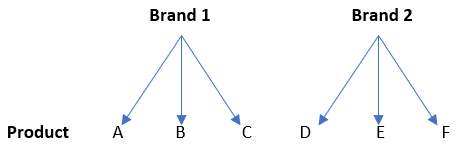

Nested ANOVA

This model is used when the groups can be clustered into categories. For example, if you were comparing students’ performance from different classrooms and different schools, “classroom” could be nested within “school.”

ANOVA Formulas

ANOVA Formulas are as follows:

Sum of Squares Total (SST)

This represents the total variability in the data. It is the sum of the squared differences between each observation and the overall mean.

$$SST = \sum_{i} (y_i - \bar{y})^2$$

- $y_i$ (yi) represents each individual data point

- $\bar{y}$ (y_mean) represents the grand mean (mean of all observations)

Sum of Squares Within (SSW)

This represents the variability within each group or factor level. It is the sum of the squared differences between each observation and its group mean.

$$SSW = \sum_{i} \sum_{j} (y_{ij} - \bar{y}_i)^2$$

- $y_{ij}$ (yij) represents each individual data point within a group

- $\bar{y}_i$ (y_meani) represents the mean of the ith group

Sum of Squares Between (SSB)

This represents the variability between the groups. It is the sum of the squared differences between the group means and the grand mean, each multiplied by the number of observations in that group.

$$SSB = \sum_{i} n_i (\bar{y}_i - \bar{y})^2$$

- $n_i$ (ni) represents the number of observations in the ith group

- $\bar{y}_i$ (y_meani) represents the mean of the ith group

- $\bar{y}$ (y_mean) represents the grand mean

Degrees of Freedom

The degrees of freedom are the number of values that have the freedom to vary when calculating a statistic.

For within groups (dfW):

$$df_W = N - k$$

For between groups (dfB):

$$df_B = k - 1$$

For total (dfT):

$$df_T = N - 1$$

- N represents the total number of observations

- k represents the number of groups

Mean Squares

Mean squares are the sum of squares divided by the respective degrees of freedom.

Mean Squares Between (MSB):

$$MSB = \frac{SSB}{df_B}$$

Mean Squares Within (MSW):

$$MSW = \frac{SSW}{df_W}$$

F-Statistic

The F-statistic is used to test whether the variability between the groups is significantly greater than the variability within the groups.

$$F = \frac{MSB}{MSW}$$

If the F-statistic is significantly higher than what would be expected by chance, we reject the null hypothesis that all group means are equal.
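The quantities defined in this section can be computed directly from grouped data. The following is a minimal sketch in plain Python, not a reference implementation: the function name `one_way_anova`, the dictionary keys, and the toy data are all illustrative assumptions that do not come from the article.

```python
from statistics import mean


def one_way_anova(groups):
    """Compute one-way ANOVA quantities for a list of groups (lists of numbers)."""
    values = [y for group in groups for y in group]
    n_total = len(values)            # N: total number of observations
    k = len(groups)                  # k: number of groups
    grand_mean = mean(values)
    group_means = [mean(group) for group in groups]

    # Between-group, within-group and total sums of squares.
    ssb = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ssw = sum((y - m) ** 2 for g, m in zip(groups, group_means) for y in g)
    sst = sum((y - grand_mean) ** 2 for y in values)

    # Degrees of freedom, mean squares and the F-statistic.
    df_between, df_within = k - 1, n_total - k
    msb, msw = ssb / df_between, ssw / df_within
    return {
        "ssb": ssb, "ssw": ssw, "sst": sst,
        "df_between": df_between, "df_within": df_within,
        "msb": msb, "msw": msw, "f": msb / msw,
    }


# Hypothetical toy data, not taken from the article.
toy_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
table = one_way_anova(toy_groups)
print(table)
```

For the toy data this gives SSB = 26, SSW = 6, SST = 32 and F = 13, up to floating-point rounding. Turning F into a p-value additionally requires the F-distribution, which this sketch deliberately leaves out.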

Examples of ANOVA

Example 1:

Suppose a psychologist wants to test the effect of three different types of exercise (yoga, aerobic exercise, and weight training) on stress reduction. The dependent variable is the stress level, which can be measured using a stress rating scale.

Here are hypothetical stress ratings for three separate groups of ten participants, each of which followed one of the exercise regimes for a period:

- Yoga: [3, 2, 2, 1, 2, 2, 3, 2, 1, 2]

- Aerobic Exercise: [2, 3, 3, 2, 3, 2, 3, 3, 2, 2]

- Weight Training: [4, 4, 5, 5, 4, 5, 4, 5, 4, 5]

The psychologist wants to determine if there is a statistically significant difference in stress levels between these different types of exercise.

To conduct the ANOVA:

1. State the hypotheses:

- Null Hypothesis (H0): There is no difference in mean stress levels between the three types of exercise.

- Alternative Hypothesis (H1): There is a difference in mean stress levels between at least two of the types of exercise.

2. Calculate the ANOVA statistics:

- Compute the Sum of Squares Between (SSB), Sum of Squares Within (SSW), and Sum of Squares Total (SST).

- Calculate the Degrees of Freedom (dfB, dfW, dfT).

- Calculate the Mean Squares Between (MSB) and Mean Squares Within (MSW).

- Compute the F-statistic (F = MSB / MSW).

3. Check the p-value associated with the calculated F-statistic.

- If the p-value is less than the chosen significance level (often 0.05), then we reject the null hypothesis in favor of the alternative hypothesis. This suggests there is a statistically significant difference in mean stress levels between the three exercise types.

4. Post-hoc tests

- If we reject the null hypothesis, we conduct a post-hoc test to determine which specific groups’ means (exercise types) are different from each other.
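As an illustration, step 2 can be worked through by hand for the stress ratings listed above, assuming three independent groups of ten participants (N = 30, k = 3). The arithmetic below follows the standard one-way ANOVA definitions; it is a worked sketch, not output from any statistical package.

$$
\begin{aligned}
\bar{y}_{\text{yoga}} &= 2.0, \quad \bar{y}_{\text{aerobic}} = 2.5, \quad \bar{y}_{\text{weight}} = 4.5, \quad \bar{y} = 3.0 \\
SSB &= 10(2.0 - 3.0)^2 + 10(2.5 - 3.0)^2 + 10(4.5 - 3.0)^2 = 10 + 2.5 + 22.5 = 35 \\
SSW &= 4 + 2.5 + 2.5 = 9 \\
SST &= SSB + SSW = 44 \\
df_B &= 3 - 1 = 2, \quad df_W = 30 - 3 = 27, \quad df_T = 30 - 1 = 29 \\
MSB &= \frac{35}{2} = 17.5, \quad MSW = \frac{9}{27} \approx 0.333 \\
F &= \frac{MSB}{MSW} = 52.5
\end{aligned}
$$

The tabulated critical value of F with 2 and 27 degrees of freedom at the 0.05 level is roughly 3.35, so an F of 52.5 would lead to rejecting the null hypothesis for these hypothetical data.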

Example 2:

Suppose an agricultural scientist wants to compare the yield of three varieties of wheat. The scientist randomly selects four fields for each variety and plants them. After harvest, the yield from each field is measured in bushels. Here are the hypothetical yields:

The scientist wants to know if the differences in yields are due to the different varieties or just random variation.

Here’s how to apply the one-way ANOVA to this situation:

- Null Hypothesis (H0): The means of the three populations are equal.

- Alternative Hypothesis (H1): At least one population mean is different.

- Calculate the Degrees of Freedom (dfB for between groups, dfW for within groups, dfT for total).

- If the p-value is less than the chosen significance level (often 0.05), then we reject the null hypothesis in favor of the alternative hypothesis. This would suggest there is a statistically significant difference in mean yields among the three varieties.

- If we reject the null hypothesis, we conduct a post-hoc test to determine which specific groups’ means (wheat varieties) are different from each other.

How to Conduct ANOVA

Conducting an Analysis of Variance (ANOVA) involves several steps. Here’s a general guideline on how to perform it:

- Null Hypothesis (H0): The means of all groups are equal.

- Alternative Hypothesis (H1): At least one group mean is different from the others.

- The significance level (often denoted as α) is usually set at 0.05. This means that you are willing to accept a 5% chance of rejecting the null hypothesis when it is actually true.

- Data should be collected for each group under study. Make sure that the data meet the assumptions of an ANOVA: normality, independence, and homogeneity of variances.

- Calculate the Degrees of Freedom (df) for each sum of squares (dfB, dfW, dfT).

- Compute the Mean Squares Between (MSB) and Mean Squares Within (MSW) by dividing the sum of squares by the corresponding degrees of freedom.

- Compute the F-statistic as the ratio of MSB to MSW.

- Determine the critical F-value from the F-distribution table using dfB and dfW.

- If the calculated F-statistic is greater than the critical F-value, reject the null hypothesis.

- If the p-value associated with the calculated F-statistic is smaller than the significance level (0.05 typically), you reject the null hypothesis.

- If you rejected the null hypothesis, you can conduct post-hoc tests (like Tukey’s HSD) to determine which specific groups’ means (if you have more than two groups) are different from each other.

- Regardless of the result, report your findings in a clear, understandable manner. This typically includes reporting the test statistic, p-value, and whether the null hypothesis was rejected.
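The steps above can be sketched in code. Because Python's standard library has no F-distribution, the sketch below swaps the F-table lookup for a permutation test: it shuffles the observations across groups many times and counts how often the shuffled F-statistic is at least as large as the observed one. This is a substitute technique, not the table-based procedure described in the list. The function names, the number of permutations, and the seed are illustrative assumptions; the data are the hypothetical stress ratings from Example 1.

```python
import random
from statistics import mean


def f_statistic(groups):
    """F = MSB / MSW for a list of groups (lists of numbers)."""
    values = [y for g in groups for y in g]
    grand = mean(values)
    means = [mean(g) for g in groups]
    ssb = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ssw = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    if ssw == 0:
        # No within-group spread: treat F as infinitely large.
        return float("inf")
    return (ssb / (len(groups) - 1)) / (ssw / (len(values) - len(groups)))


def permutation_p_value(groups, n_permutations=2000, seed=0):
    """Approximate the p-value by reshuffling observations across groups."""
    rng = random.Random(seed)
    observed = f_statistic(groups)
    pooled = [y for g in groups for y in g]
    sizes = [len(g) for g in groups]
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        shuffled, start = [], 0
        for size in sizes:
            shuffled.append(pooled[start:start + size])
            start += size
        if f_statistic(shuffled) >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return observed, (hits + 1) / (n_permutations + 1)


yoga = [3, 2, 2, 1, 2, 2, 3, 2, 1, 2]
aerobic = [2, 3, 3, 2, 3, 2, 3, 3, 2, 2]
weights = [4, 4, 5, 5, 4, 5, 4, 5, 4, 5]

observed_f, p_value = permutation_p_value([yoga, aerobic, weights])
alpha = 0.05
print(f"F = {observed_f:.2f}, permutation p = {p_value:.4f}")
print("reject H0" if p_value < alpha else "fail to reject H0")
```

A permutation p-value is only resolved to about 1 / (n_permutations + 1), so it is best read as "smaller than α or not" rather than as an exact probability.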

When to use ANOVA

ANOVA (Analysis of Variance) is used when you have three or more groups and you want to compare their means to see if they are significantly different from each other. It is a statistical method that is used in a variety of research scenarios. Here are some examples of when you might use ANOVA:

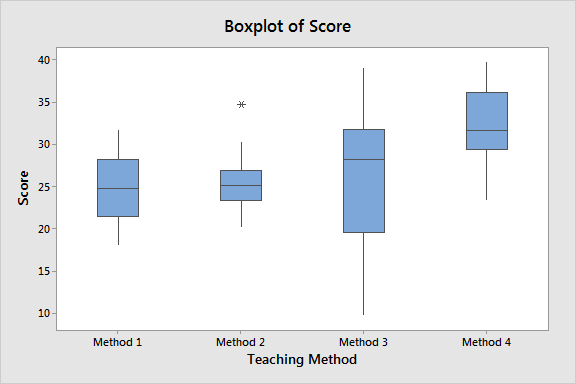

- Comparing Groups : If you want to compare the performance of more than two groups, for example, testing the effectiveness of different teaching methods on student performance.

- Evaluating Interactions : In a two-way or factorial ANOVA, you can test for an interaction effect. This means you are not only interested in the effect of each individual factor, but also whether the effect of one factor depends on the level of another factor.

- Repeated Measures : If you have measured the same subjects under different conditions or at different time points, you can use repeated measures ANOVA to compare the means of these repeated measures while accounting for the correlation between measures from the same subject.

- Experimental Designs : ANOVA is often used in experimental research designs when subjects are randomly assigned to different conditions and the goal is to compare the means of the conditions.

Here are the assumptions that must be met to use ANOVA:

- Normality : The data within each group (equivalently, the residuals) should be approximately normally distributed.

- Homogeneity of Variances : The variances of the groups you are comparing should be roughly equal. This assumption can be tested using Levene’s test or Bartlett’s test.

- Independence : The observations should be independent of each other. This assumption is met if the data is collected appropriately with no related groups (e.g., twins, matched pairs, repeated measures).
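Before running a formal test such as Levene's or Bartlett's, a quick descriptive look at the group variances can be useful. The sketch below only computes the sample variance of each group and the ratio of the largest to the smallest. The cutoff of 4 is a commonly quoted informal rule of thumb and is an assumption here, not something stated in the article. The helper name `variance_ratio` is hypothetical, and the data are the stress ratings from Example 1.

```python
from statistics import variance


def variance_ratio(groups):
    """Return per-group sample variances and the largest/smallest ratio."""
    variances = [variance(g) for g in groups]
    # Assumes every group has some spread, so the smallest variance is > 0.
    return variances, max(variances) / min(variances)


yoga = [3, 2, 2, 1, 2, 2, 3, 2, 1, 2]
aerobic = [2, 3, 3, 2, 3, 2, 3, 3, 2, 2]
weights = [4, 4, 5, 5, 4, 5, 4, 5, 4, 5]

group_variances, ratio = variance_ratio([yoga, aerobic, weights])
RULE_OF_THUMB = 4  # assumed informal cutoff, not a formal test
print(group_variances, ratio)
print("variances look similar" if ratio < RULE_OF_THUMB else "variances look unequal")
```

This check is descriptive only; it does not replace the formal tests named above.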

Applications of ANOVA

The Analysis of Variance (ANOVA) is a powerful statistical technique that is used widely across various fields and industries. Here are some of its key applications:

Agriculture

ANOVA is commonly used in agricultural research to compare the effectiveness of different types of fertilizers, crop varieties, or farming methods. For example, an agricultural researcher could use ANOVA to determine if there are significant differences in the yields of several varieties of wheat under the same conditions.

Manufacturing and Quality Control

ANOVA is used to determine if different manufacturing processes or machines produce different levels of product quality. For instance, an engineer might use it to test whether there are differences in the strength of a product based on the machine that produced it.

Marketing Research

Marketers often use ANOVA to test the effectiveness of different advertising strategies. For example, a marketer could use ANOVA to determine whether different marketing messages have a significant impact on consumer purchase intentions.

Healthcare and Medicine

In medical research, ANOVA can be used to compare the effectiveness of different treatments or drugs. For example, a medical researcher could use ANOVA to test whether there are significant differences in recovery times for patients who receive different types of therapy.

Education

ANOVA is used in educational research to compare the effectiveness of different teaching methods or educational interventions. For example, an educator could use it to test whether students perform significantly differently when taught with different teaching methods.

Psychology and Social Sciences

Psychologists and social scientists use ANOVA to compare group means on various psychological and social variables. For example, a psychologist could use it to determine if there are significant differences in stress levels among individuals in different occupations.

Biology and Environmental Sciences

Biologists and environmental scientists use ANOVA to compare different biological and environmental conditions. For example, an environmental scientist could use it to determine if there are significant differences in the levels of a pollutant in different bodies of water.

Advantages of ANOVA

Here are some advantages of using ANOVA:

Comparing Multiple Groups: One of the key advantages of ANOVA is the ability to compare the means of three or more groups in a single test. This makes it more flexible than the t-test, which is limited to comparing two groups.

Control of Type I Error: When comparing multiple groups, the chance of making a Type I error (false positive) increases with the number of comparisons. One of the strengths of ANOVA is that its single overall F-test keeps the Type I error rate at the chosen significance level. This is in contrast to performing multiple pairwise t-tests, which can inflate the Type I error rate.

Testing Interactions: In factorial ANOVA, you can test not only the main effect of each factor, but also the interaction effect between factors. This can provide valuable insights into how different factors or variables interact with each other.

Handling Continuous and Categorical Variables: ANOVA combines both types of variable: the dependent variable is continuous and the independent variables are categorical.

Robustness: ANOVA is considered robust to moderate violations of the normality assumption when group sizes are equal. This means that even if your data do not perfectly meet the normality assumption, you might still get valid results.

Provides Detailed Analysis: ANOVA provides a detailed breakdown of variances and interactions between variables which can be useful in understanding the underlying factors affecting the outcome.

Capability to Handle Complex Experimental Designs: Advanced types of ANOVA (like repeated measures ANOVA, MANOVA, etc.) can handle more complex experimental designs, including those where measurements are taken on the same subjects over time, or when you want to analyze multiple dependent variables at once.
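The Type I error point above can be made concrete with a small calculation. If each pairwise test is run at level α and the tests are treated as independent (a simplifying assumption, since pairwise t-tests that share groups are not truly independent), the chance of at least one false positive across m comparisons is 1 − (1 − α)^m. The function name below is illustrative.

```python
from math import comb


def familywise_error_rate(n_groups, alpha=0.05):
    """Approximate chance of at least one false positive across all pairwise tests."""
    n_comparisons = comb(n_groups, 2)  # number of distinct pairs of groups
    return n_comparisons, 1 - (1 - alpha) ** n_comparisons


for k in (3, 5):
    pairs, fwer = familywise_error_rate(k)
    print(f"{k} groups: {pairs} pairwise tests, familywise error rate about {fwer:.3f}")
```

Under this approximation, three groups already give roughly a 14% chance of at least one false positive, and five groups roughly 40%, compared with 5% for a single overall F-test.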

Disadvantages of ANOVA

Some limitations or disadvantages that are important to consider:

Assumptions: ANOVA relies on several assumptions including normality (the data follows a normal distribution), independence (the observations are independent of each other), and homogeneity of variances (the variances of the groups are roughly equal). If these assumptions are violated, the results of the ANOVA may not be valid.

Sensitivity to Outliers: ANOVA can be sensitive to outliers. A single extreme value in one group can affect the sum of squares and consequently influence the F-statistic and the overall result of the test.

Dichotomous Variables: ANOVA is not suitable when the dependent variable is dichotomous (a variable that can take only two values, like yes/no or male/female). It is used to compare the means of groups for a continuous dependent variable.

Lack of Specificity: Although ANOVA can tell you that there is a significant difference between groups, it doesn’t tell you which specific groups are significantly different from each other. You need to carry out further post-hoc tests (like Tukey’s HSD or Bonferroni) for these pairwise comparisons.

Complexity with Multiple Factors: When dealing with multiple factors and interactions in factorial ANOVA, interpretation can become complex. The presence of interaction effects can make main effects difficult to interpret.

Requires Larger Sample Sizes: To detect an effect of a certain size, ANOVA generally requires larger sample sizes than a t-test.

Equal Group Sizes: While not always a strict requirement, ANOVA is most powerful and its assumptions are most likely to be met when groups are of equal or similar sizes.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

One-way ANOVA | When and How to Use It (With Examples)

Published on March 6, 2020 by Rebecca Bevans. Revised on May 10, 2024.

ANOVA, which stands for Analysis of Variance, is a statistical test used to analyze the difference between the means of more than two groups.

A one-way ANOVA uses one independent variable, while a two-way ANOVA uses two independent variables.

Table of contents

- When to use a one-way ANOVA

- How does an ANOVA test work?

- Assumptions of ANOVA

- Performing a one-way ANOVA

- Interpreting the results

- Post-hoc testing

- Reporting the results of ANOVA

- Frequently asked questions about one-way ANOVA

When to use a one-way ANOVA

Use a one-way ANOVA when you have collected data about one categorical independent variable and one quantitative dependent variable. The independent variable should have at least three levels (i.e. at least three different groups or categories).

ANOVA tells you if the dependent variable changes according to the level of the independent variable. For example:

- Your independent variable is social media use, and you assign groups to low, medium, and high levels of social media use to find out if there is a difference in hours of sleep per night.

- Your independent variable is brand of soda, and you collect data on Coke, Pepsi, Sprite, and Fanta to find out if there is a difference in the price per 100ml.

- Your independent variable is type of fertilizer, and you treat crop fields with mixtures 1, 2 and 3 to find out if there is a difference in crop yield.

The null hypothesis (H0) of ANOVA is that there is no difference among group means. The alternative hypothesis (Ha) is that at least one group differs significantly from the overall mean of the dependent variable.

If you only want to compare two groups, use a t test instead.

How does an ANOVA test work?

ANOVA determines whether the groups created by the levels of the independent variable are statistically different by calculating whether the means of the treatment levels are different from the overall mean of the dependent variable.

If any of the group means is significantly different from the overall mean, then the null hypothesis is rejected.

ANOVA uses the F test for statistical significance. This allows for comparison of multiple means at once, because the error is calculated for the whole set of comparisons rather than for each individual two-way comparison (which would happen with a t test).

The F test compares the variance between the group means with the variance within the groups. If the variance within groups is smaller than the variance between groups, the F test will find a higher F value, and therefore a higher likelihood that the difference observed is real and not due to chance.

Assumptions of ANOVA

The assumptions of the ANOVA test are the same as the general assumptions for any parametric test:

- Independence of observations: the data were collected using statistically valid sampling methods, and there are no hidden relationships among observations. If your data fail to meet this assumption because you have a confounding variable that you need to control for statistically, use an ANOVA with blocking variables.

- Normally-distributed response variable: The values of the dependent variable follow a normal distribution.

- Homogeneity of variance: The variation within each group being compared is similar for every group. If the variances are different among the groups, then ANOVA probably isn’t the right fit for the data.

Performing a one-way ANOVA

While you can perform an ANOVA by hand, it is difficult to do so with more than a few observations. We will perform our analysis in the R statistical program because it is free, powerful, and widely available. For a full walkthrough of this ANOVA example, see our guide to performing ANOVA in R.

The sample dataset from our imaginary crop yield experiment contains data about:

- fertilizer type (type 1, 2, or 3)

- planting density (1 = low density, 2 = high density)

- planting location in the field (blocks 1, 2, 3, or 4)

- final crop yield (in bushels per acre).

This gives us enough information to run various different ANOVA tests and see which model is the best fit for the data.

For the one-way ANOVA, we will only analyze the effect of fertilizer type on crop yield.

Sample dataset for ANOVA

After loading the dataset into our R environment, we can use the command aov() to run an ANOVA. In this example we will model the differences in the mean of the response variable, crop yield, as a function of type of fertilizer.

Interpreting the results

To view the summary of a statistical model in R, use the summary() function.

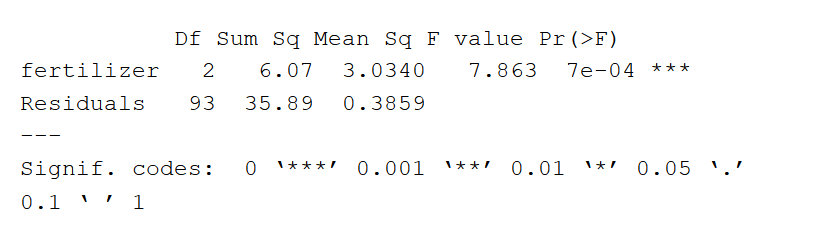

The summary of an ANOVA test (in R) looks like this:

The ANOVA output provides an estimate of how much variation in the dependent variable can be explained by the independent variable.

- The first column lists the independent variable along with the model residuals (aka the model error).

- The Df column displays the degrees of freedom for the independent variable (calculated by taking the number of levels within the variable and subtracting 1), and the degrees of freedom for the residuals (calculated by taking the total number of observations minus 1, then subtracting the degrees of freedom used by each of the independent variables).

- The Sum Sq column displays the sum of squares (a.k.a. the total variation) between the group means and the overall mean explained by that variable. The sum of squares for the fertilizer variable is 6.07, while the sum of squares of the residuals is 35.89.

- The Mean Sq column is the mean of the sum of squares, which is calculated by dividing the sum of squares by the degrees of freedom.

- The F value column is the test statistic from the F test: the mean square of each independent variable divided by the mean square of the residuals. The larger the F value, the more likely it is that the variation associated with the independent variable is real and not due to chance.

- The Pr(>F) column is the p value of the F statistic. This shows how likely it is that an F value at least as large as the one calculated from the test would have occurred if the null hypothesis of no difference among group means were true.

Because the p value of the independent variable, fertilizer, is statistically significant (p < 0.05), it is likely that fertilizer type does have a significant effect on average crop yield.

Post-hoc testing

ANOVA will tell you if there are differences among the levels of the independent variable, but not which differences are significant. To find how the treatment levels differ from one another, perform a TukeyHSD (Tukey’s Honestly-Significant Difference) post-hoc test.

The Tukey test runs pairwise comparisons among each of the groups, and uses a conservative error estimate to find the groups which are statistically different from one another.

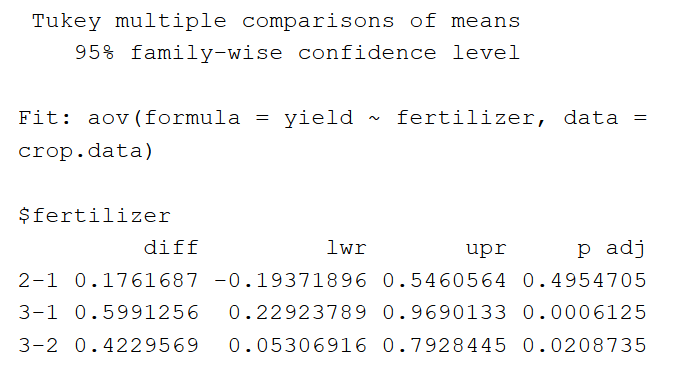

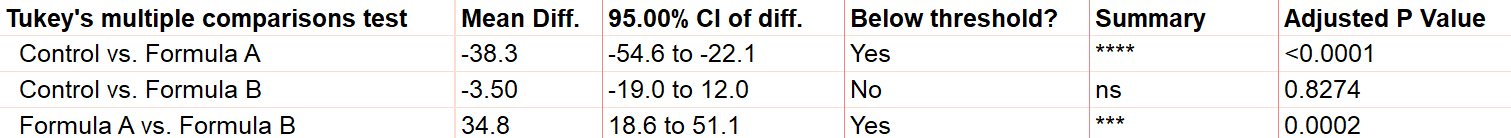

The output of the TukeyHSD looks like this:

First, the table reports the model being tested (‘Fit’). Next it lists the pairwise differences among groups for the independent variable.

Under the ‘$fertilizer’ section, we see the mean difference between each fertilizer treatment (‘diff’), the lower and upper bounds of the 95% confidence interval (‘lwr’ and ‘upr’), and the p value, adjusted for multiple pairwise comparisons.

The pairwise comparisons show that fertilizer type 3 has a significantly higher mean yield than both fertilizer 2 and fertilizer 1, but the difference between the mean yields of fertilizers 2 and 1 is not statistically significant.

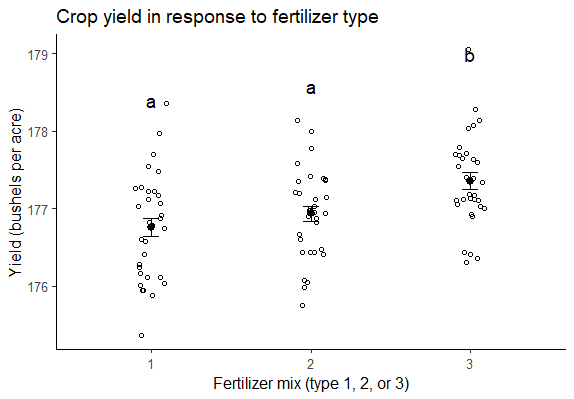

Reporting the results of ANOVA

When reporting the results of an ANOVA, include a brief description of the variables you tested, the F value, degrees of freedom, and p values for each independent variable, and explain what the results mean.

If you want to provide more detailed information about the differences found in your test, you can also include a graph of the ANOVA results, with grouping letters above each level of the independent variable to show which groups are statistically different from one another.

Frequently asked questions about one-way ANOVA

The only difference between one-way and two-way ANOVA is the number of independent variables. A one-way ANOVA has one independent variable, while a two-way ANOVA has two.

- One-way ANOVA : Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka) and race finish times in a marathon.

- Two-way ANOVA : Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka), runner age group (junior, senior, master’s), and race finishing times in a marathon.

All ANOVAs are designed to test for differences among three or more groups. If you are only testing for a difference between two groups, use a t-test instead.

A factorial ANOVA is any ANOVA that uses more than one categorical independent variable. A two-way ANOVA is a type of factorial ANOVA.

Some examples of factorial ANOVAs include:

- Testing the combined effects of vaccination (vaccinated or not vaccinated) and health status (healthy or pre-existing condition) on the rate of flu infection in a population.

- Testing the effects of marital status (married, single, divorced, widowed), job status (employed, self-employed, unemployed, retired), and family history (no family history, some family history) on the incidence of depression in a population.

- Testing the effects of feed type (type A, B, or C) and barn crowding (not crowded, somewhat crowded, very crowded) on the final weight of chickens in a commercial farming operation.

In ANOVA, the null hypothesis is that there is no difference among group means. If any group differs significantly from the overall group mean, then the ANOVA will report a statistically significant result.

Significant differences among group means are calculated using the F statistic, which is the ratio of the mean sum of squares (the variance explained by the independent variable) to the mean square error (the variance left over).

If the F statistic is higher than the critical value (the value of F that corresponds with your alpha value, usually 0.05), then the difference among groups is deemed statistically significant.

Quantitative variables are any variables where the data represent amounts (e.g. height, weight, or age).

Categorical variables are any variables where the data represent groups. This includes rankings (e.g. finishing places in a race), classifications (e.g. brands of cereal), and binary outcomes (e.g. coin flips).

You need to know what type of variables you are working with to choose the right statistical test for your data and interpret your results.

Cite this Scribbr article

Bevans, R. (2024, May 09). One-way ANOVA | When and How to Use It (With Examples). Scribbr. Retrieved September 3, 2024, from https://www.scribbr.com/statistics/one-way-anova/

ANOVA Test: Definition, Types, Examples, SPSS

Contents:

- The ANOVA Test

- How to Run a One Way ANOVA in SPSS

- Two Way ANOVA

- What is MANOVA?

- What is Factorial ANOVA?

- How to Run an ANOVA

- ANOVA vs. T Test

- Repeated Measures ANOVA in SPSS: Steps

- Related Articles

An ANOVA test is a way to find out if survey or experiment results are significant. In other words, it helps you to figure out whether to reject the null hypothesis in favor of the alternate hypothesis.

Basically, you’re testing groups to see if there’s a difference between them. Examples of when you might want to test different groups:

- A group of psychiatric patients are trying three different therapies: counseling, medication and biofeedback. You want to see if one therapy is better than the others.

- A manufacturer has two different processes to make light bulbs. They want to know if one process is better than the other.

- Students from different colleges take the same exam. You want to see if one college outperforms the other.

What Does “One-Way” or “Two-Way” Mean?

One-way or two-way refers to the number of independent variables (IVs) in your Analysis of Variance test.

- One-way has one independent variable (with two or more levels). For example: brand of cereal.

- Two-way has two independent variables (each can have multiple levels). For example: brand of cereal, calories.

What are “Groups” or “Levels”?

Groups or levels are different groups within the same independent variable. In the above example, your levels for “brand of cereal” might be Lucky Charms, Raisin Bran, and Cornflakes, a total of three levels. Your levels for “calories” might be sweetened and unsweetened, a total of two levels.

Let’s say you are studying whether medication and counseling combined is the most effective treatment for lowering alcohol consumption. You might split the study participants into three groups or levels:

- Medication only,

- Medication and counseling,

- Counseling only.

Your dependent variable would be the number of alcoholic beverages consumed per day.

If your groups or levels have a hierarchical structure (each level has unique subgroups), then use a nested ANOVA for the analysis.

What Does “Replication” Mean?

Replication refers to whether you are replicating (i.e. duplicating) your test(s) with multiple groups. With a two way ANOVA with replication, you have two groups and the individuals within those groups are doing more than one thing (e.g. two groups of students from two colleges taking two tests). If you only have one group taking two tests, you would use a two way ANOVA without replication.

Types of Tests

There are two main types: one-way and two-way. Two-way tests can be with or without replication.

- One-way ANOVA between groups: used when you want to test two or more groups to see if there’s a difference between them.

- Two way ANOVA without replication: used when you have one group and you’re double-testing that same group. For example, you’re testing one set of individuals before and after they take a medication to see if it works or not.

- Two way ANOVA with replication: two groups, and the members of those groups are doing more than one thing. For example, two groups of patients from different hospitals trying two different therapies.


One Way ANOVA

A one way ANOVA is used to compare the means of two or more independent (unrelated) groups using the F-distribution. The null hypothesis for the test is that all the group means are equal. Therefore, a significant result means that at least two of the means are unequal.

Examples of when to use a one way ANOVA

Situation 1: You have a group of individuals randomly split into smaller groups and completing different tasks. For example, you might be studying the effects of tea on weight loss and form three groups: green tea, black tea, and no tea.

Situation 2: Similar to situation 1, but in this case the individuals are split into groups based on an attribute they possess. For example, you might be studying leg strength of people according to weight. You could split participants into weight categories (obese, overweight and normal) and measure their leg strength on a weight machine.

Limitations of the One Way ANOVA

A one way ANOVA will tell you that at least two groups were different from each other. But it won’t tell you which groups were different. If your test returns a significant F-statistic, you may need to run a post hoc test (like the Least Significant Difference test) to tell you exactly which groups had a difference in means.

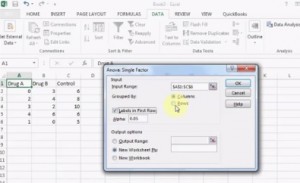

Two Way ANOVA

A Two Way ANOVA is an extension of the One Way ANOVA. With a One Way, you have one independent variable affecting a dependent variable. With a Two Way ANOVA, there are two independents. Use a two way ANOVA when you have one measurement variable (i.e. a quantitative variable) and two nominal variables. In other words, if your experiment has a quantitative outcome and you have two categorical explanatory variables, a two way ANOVA is appropriate.

For example, you might want to find out if there is an interaction between income and gender for anxiety level at job interviews. The anxiety level is the outcome, or the variable that can be measured. Gender and Income are the two categorical variables. These categorical variables are also the independent variables, which are called factors in a Two Way ANOVA.

The factors can be split into levels. In the above example, income level could be split into three levels: low, middle and high income. Gender could be split into three levels: male, female, and transgender. Treatment groups are all possible combinations of the factors. In this example there would be 3 x 3 = 9 treatment groups.
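To make the idea of treatment groups concrete, here is a minimal Python sketch that enumerates every combination of the two factors from the example. The variable names are illustrative choices, not part of any statistics package.

```python
from itertools import product

# Factor levels taken from the income/gender example in the text.
income_levels = ["low", "middle", "high"]
gender_levels = ["male", "female", "transgender"]

# Each treatment group is one (income, gender) combination of levels.
treatment_groups = list(product(income_levels, gender_levels))

for income, gender in treatment_groups:
    print(f"{income} income / {gender}")

print(len(treatment_groups))  # 3 x 3 = 9
```

Adding a level to either list changes the number of treatment groups multiplicatively, which is one reason designs with many factors or levels quickly need large samples.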

Main Effect and Interaction Effect

The results from a Two Way ANOVA will calculate a main effect and an interaction effect. The main effect is similar to a One Way ANOVA: each factor’s effect is considered separately. With the interaction effect, all factors are considered at the same time. Interaction effects between factors are easier to test if there is more than one observation in each cell. For the above example, multiple anxiety scores could be entered into cells. If you do enter multiple observations into cells, the number in each cell must be equal.

Two null hypotheses are tested if you are placing one observation in each cell. For this example, those hypotheses would be:

- H₀₁: All the income groups have equal mean anxiety.

- H₀₂: All the gender groups have equal mean anxiety.

For multiple observations in cells, you would also be testing a third hypothesis:

- H₀₃: The factors are independent, or the interaction effect does not exist.

An F-statistic is computed for each hypothesis you are testing.

Assumptions for Two Way ANOVA

- The population must be close to a normal distribution.

- Samples must be independent.

- Population variances must be equal (i.e. homoscedastic).

- Groups must have equal sample sizes.

What is MANOVA?

MANOVA is just an ANOVA with several dependent variables. It’s similar to many other tests and experiments in that its purpose is to find out if the response variables (i.e. your dependent variables) are changed by manipulating the independent variable. The test helps to answer many research questions, including:

- Do changes to the independent variables have statistically significant effects on dependent variables?

- What are the interactions among dependent variables?

- What are the interactions among independent variables?

MANOVA Example

Suppose you wanted to find out if a difference in textbooks affected students’ scores in math and science. Improvements in math and science means that there are two dependent variables, so a MANOVA is appropriate.

An ANOVA will give you a single (univariate) F value while a MANOVA will give you a multivariate F value. MANOVA tests the multiple dependent variables by creating new, artificial, dependent variables that maximize group differences. These new dependent variables are linear combinations of the measured dependent variables.

Interpreting the MANOVA results

If the multivariate F value indicates the test is statistically significant, this means that something is significant. In the above example, you would not know if math scores have improved, science scores have improved (or both). Once you have a significant result, you would then have to look at each individual component (the univariate F tests) to see which dependent variable(s) contributed to the statistically significant result.

Advantages and Disadvantages of MANOVA vs. ANOVA

Advantages

- MANOVA enables you to test multiple dependent variables.

- MANOVA can protect against Type I errors.

Disadvantages

- MANOVA is many times more complicated than ANOVA, making it a challenge to see which independent variables are affecting dependent variables.

- One degree of freedom is lost with the addition of each new variable.

- The dependent variables should be uncorrelated as much as possible. If they are correlated, the loss in degrees of freedom means that there isn’t much advantage in including more than one dependent variable in the test.

Reference : SFSU. Retrieved April 18, 2022 from: http://online.sfsu.edu/efc/classes/biol710/manova/MANOVAnewest.pdf

What is Factorial ANOVA?

A factorial ANOVA is an Analysis of Variance test with more than one independent variable, or “factor”. Each factor can have two or more levels. For example, an experiment with a treatment group and a control group has one factor (the treatment) but two levels (the treatment and the control). The terms “two-way” and “three-way” refer to the number of factors in your test. Four-way ANOVA and above are rarely used because the results of the test are complex and difficult to interpret.

- A two-way ANOVA has two factors (independent variables) and one dependent variable. For example, time spent studying and prior knowledge are factors that affect how well you do on a test.

- A three-way ANOVA has three factors (independent variables) and one dependent variable. For example, time spent studying, prior knowledge, and hours of sleep are factors that affect how well you do on a test.

Factorial ANOVA is an efficient way of conducting a test. Instead of performing a series of experiments where you test one independent variable against one dependent variable, you can test all independent variables at the same time.

Variability

In a one-way ANOVA, variability is due to the differences between groups and the differences within groups. In factorial ANOVA, each level and factor are paired up with each other (“crossed”). This helps you to see what interactions are going on between the levels and factors. If there is an interaction then the differences in one factor depend on the differences in another.

Let’s say you were running a two-way ANOVA to test male/female performance on a final exam. The subjects had either had 4, 6, or 8 hours of sleep.

- IV1: SEX (Male/Female)

- IV2: SLEEP (4/6/8)

- DV: Final Exam Score

A two-way factorial ANOVA would help you answer the following questions:

- Is sex a main effect? In other words, do men and women differ significantly on their exam performance?

- Is sleep a main effect? In other words, do people who have had 4, 6, or 8 hours of sleep differ significantly in their performance?

- Is there a significant interaction between factors? In other words, how do hours of sleep and sex interact with regard to exam performance?

- Can any differences in sex and exam performance be found in the different levels of sleep?

Assumptions of Factorial ANOVA

- Normality: the dependent variable is normally distributed.

- Independence: Observations and groups are independent from each other.

- Equality of Variance: the population variances are equal across factors/levels.

How to Run an ANOVA

These tests are very time-consuming by hand. In nearly every case you’ll want to use software. For example, several options are available in Excel:

- Two way ANOVA in Excel with replication and without replication.

- One way ANOVA in Excel 2013.

ANOVA tests in statistics packages are run on parametric data. If you have rank or ordered data, you’ll want to run a non-parametric ANOVA (usually found under a different heading in the software, like “nonparametric tests”).

It is unlikely you’ll want to do this test by hand, but if you must, these are the steps you’ll want to take:

- Find the mean for each of the groups.

- Find the overall mean (the mean of the groups combined).

- Find the Within Group Variation: the total deviation of each member’s score from the Group Mean.

- Find the Between Group Variation: the deviation of each Group Mean from the Overall Mean.

- Find the F statistic: the ratio of Between Group Variation to Within Group Variation, with each first divided by its degrees of freedom.
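The hand calculation can be sketched in plain Python for the one-way case. This is an illustration under assumptions rather than a replacement for statistical software: the function name and the scores are made up for the example, and no p-value is computed.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of numbers."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)

    # Mean of each group.
    group_means = [sum(g) / len(g) for g in groups]
    # Overall (grand) mean of all observations combined.
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Within-group variation: squared deviations of scores from their group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )
    # Between-group variation: squared deviations of group means from the
    # grand mean, weighted by group size.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )

    # F is the ratio of the two mean squares (sum of squares / degrees of freedom).
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores for three groups (made-up illustration data).
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_b, df_w = one_way_anova(groups)
print(f_stat, df_b, df_w)  # F is approximately 13 with 2 and 6 degrees of freedom
```

The resulting F value would then be compared with the critical value of the F-distribution for the same two degrees of freedom.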

ANOVA vs. T Test

A Student’s t-test will tell you if there is a significant difference between the means of two groups. An ANOVA also compares means, but it does so for two or more groups at once by comparing the variance between groups with the variance within groups. You could technically perform a series of t-tests on your data. However, as the groups grow in number, you may end up with a lot of pair comparisons that you need to run, and each extra test raises the chance of a Type I error. ANOVA will give you a single number (the F-statistic) and one p-value to help you support or reject the null hypothesis.

Repeated Measures (Within Subjects) ANOVA

A repeated measures ANOVA is almost the same as one-way ANOVA, with one main difference: you test related groups, not independent ones.

It’s called Repeated Measures because the same group of participants is being measured over and over again. For example, you could be studying the cholesterol levels of the same group of patients at 1, 3, and 6 months after changing their diet. For this example, the independent variable is “time” and the dependent variable is “cholesterol.” The independent variable is usually called the within-subjects factor.

Repeated measures ANOVA is similar to a simple multivariate design. In both tests, the same participants are measured over and over. However, with repeated measures the same characteristic is measured with a different condition. For example, blood pressure is measured over the condition “time”. For simple multivariate design it is the characteristic that changes. For example, you could measure blood pressure, heart rate and respiration rate over time.

Reasons to use Repeated Measures ANOVA

- When you collect data from the same participants over a period of time, individual differences (a source of between group differences) are reduced or eliminated.

- Testing is more powerful because the sample size isn’t divided between groups.

- The test can be economical, as you’re using the same participants.

Assumptions for Repeated Measures ANOVA

The results from your repeated measures ANOVA will be valid only if the following assumptions haven’t been violated:

- There must be one independent variable and one dependent variable.

- The dependent variable must be a continuous variable, on an interval scale or a ratio scale.

- The independent variable must be categorical, either on the nominal scale or ordinal scale.

- Ideally, levels of dependence between pairs of groups are equal (“sphericity”). Corrections are possible if this assumption is violated.

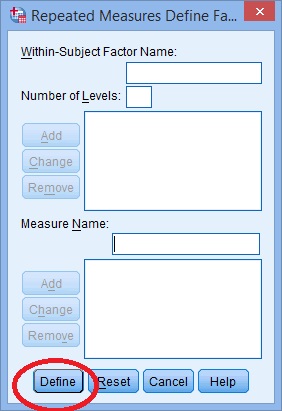

One Way Repeated Measures ANOVA in SPSS: Steps


Step 2: Replace the “factor1” name with something that represents your independent variable. For example, you could put “age” or “time.”

Step 3: Enter the “Number of Levels.” This is how many times the dependent variable has been measured. For example, if you took measurements every week for a total of 4 weeks, this number would be 4.

Step 4: Click the “Add” button and then give your dependent variable a name.

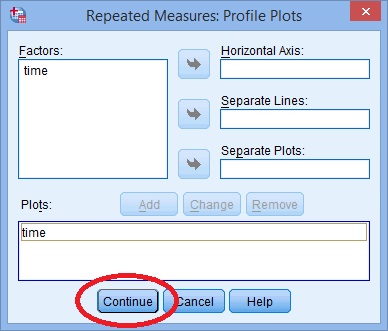

Step 7: Click “Plots” and use the arrow keys to transfer the factor from the left box onto the Horizontal Axis box.

Step 9: Click “Options”, then transfer your factors from the left box to the Display Means for box on the right.

Step 10: Click the following check boxes:

- Compare main effects.

- Descriptive Statistics.

- Estimates of Effect Size.

Step 11: Select “Bonferroni” from the drop down menu under Confidence Interval Adjustment.

Step 12: Click “Continue” and then click “OK” to run the test.

Sphericity

In statistics, sphericity is usually assessed with Mauchly’s sphericity test, which was developed in 1940 by John W. Mauchly, who co-developed the first general-purpose electronic computer. The degree of sphericity is summarized by the statistic ε (epsilon).

Sphericity is used as an assumption in repeated measures ANOVA. The assumption states that the variances of the differences between all possible group pairs are equal. If your data violates this assumption, it can result in an increase in the Type I error rate (the incorrect rejection of the null hypothesis).

It’s very common for repeated measures ANOVA to result in a violation of the assumption. If the assumption has been violated, corrections have been developed that can avoid increases in the Type I error rate. The correction is applied to the degrees of freedom in the F-distribution.

Mauchly’s Sphericity Test

Mauchly’s test for sphericity can be run in the majority of statistical software, where it tends to be the default test for sphericity. Mauchly’s test is ideal for mid-size samples. It may fail to detect violations of sphericity in small samples and it may over-detect them in large samples. If the test returns a small p-value (p ≤ .05), this is an indication that your data has violated the assumption. In the example SPSS output for this ANOVA, the significance (“Sig.”) attached to Mauchly’s test is .274. This means that the assumption has not been violated for this set of data.

You would report the above result as “Mauchly’s Test indicated that the assumption of sphericity had not been violated, χ²(2) = 2.588, p = .274.”

If your test returned a small p-value, you should apply a correction, usually either the:

- Greenhouse-Geisser correction.

- Huynh-Feldt correction.

When ε ≤ 0.75 (or you don’t know what the value for the statistic is), use the Greenhouse-Geisser correction. When ε > 0.75, use the Huynh-Feldt correction.
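The rule of thumb can be sketched in plain Python. The article does not say how ε is calculated, so the function below is an assumption: it uses the standard Greenhouse-Geisser estimate, computed from the double-centered covariance matrix of the repeated measurements. The function names and the data are hypothetical, and the separate Huynh-Feldt estimate is not computed here.

```python
def greenhouse_geisser_epsilon(data):
    """data: list of rows, one row per subject, one column per condition."""
    n = len(data)
    k = len(data[0])

    # Sample covariance matrix of the k repeated measurements.
    means = [sum(row[j] for row in data) / n for j in range(k)]
    cov = [
        [
            sum((row[i] - means[i]) * (row[j] - means[j]) for row in data) / (n - 1)
            for j in range(k)
        ]
        for i in range(k)
    ]

    # Double-center the covariance matrix (subtract row and column means,
    # add back the grand mean).
    row_means = [sum(cov[i]) / k for i in range(k)]
    grand = sum(row_means) / k
    centered = [
        [cov[i][j] - row_means[i] - row_means[j] + grand for j in range(k)]
        for i in range(k)
    ]

    trace = sum(centered[i][i] for i in range(k))
    sum_sq = sum(centered[i][j] ** 2 for i in range(k) for j in range(k))
    return trace ** 2 / ((k - 1) * sum_sq)


def choose_correction(epsilon):
    """Rule of thumb from the text: 0.75 is the cut-off between corrections."""
    return "Greenhouse-Geisser" if epsilon <= 0.75 else "Huynh-Feldt"


# Hypothetical readings: 4 subjects (rows) measured at 3 time points (columns).
scores = [[10, 12, 15], [8, 9, 14], [11, 11, 13], [9, 13, 17]]
eps = greenhouse_geisser_epsilon(scores)
n, k = len(scores), len(scores[0])
# The correction multiplies both F-test degrees of freedom by epsilon.
corrected_df = (eps * (k - 1), eps * (k - 1) * (n - 1))
print(eps, choose_correction(eps), corrected_df)
```

The estimate always lies between 1/(k − 1) and 1, where 1 means the sphericity assumption is met exactly, so smaller values shrink the degrees of freedom more and make the F-test more conservative.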



The Ultimate Guide to ANOVA

Get all of your ANOVA questions answered here

ANOVA is the go-to analysis tool for classical experimental design, which forms the backbone of scientific research.

In this article, we’ll guide you through what ANOVA is, how to determine which version to use to evaluate your particular experiment, and provide detailed examples for the most common forms of ANOVA.

This includes a (brief) discussion of crossed, nested, fixed and random factors, and covers the majority of ANOVA models that a scientist would encounter before requiring the assistance of a statistician or modeling expert.

What is ANOVA used for?

ANOVA, or (Fisher’s) analysis of variance, is a critical analytical technique for evaluating differences between three or more sample means from an experiment. As the name implies, it partitions out the variance in the response variable based on one or more explanatory factors.

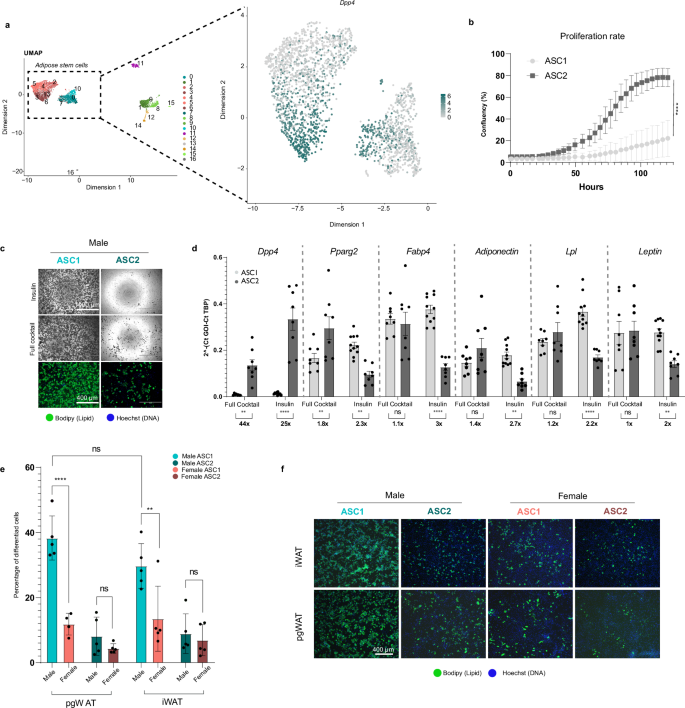

As you will see there are many types of ANOVA such as one-, two-, and three-way ANOVA as well as nested and repeated measures ANOVA. The graphic below shows a simple example of an experiment that requires ANOVA in which researchers measured the levels of neutrophil extracellular traps (NETs) in plasma across patients with different viral respiratory infections.

Many researchers may not realize that, for the majority of experiments, the characteristics of the experiment that you run dictate the ANOVA that you need to use to test the results. While it’s a massive topic (with professional training needed for some of the advanced techniques), this is a practical guide covering what most researchers need to know about ANOVA.

When should I use ANOVA?

If your response variable is numeric, and you’re looking for how that number differs across several categorical groups, then ANOVA is an ideal place to start. After running an experiment, ANOVA is used to analyze whether there are differences between the mean response of one or more of these grouping factors.

ANOVA can handle a large variety of experimental factors such as repeated measures on the same experimental unit (e.g., before/during/after).

If, instead of evaluating treatment differences, you want to develop a model using a set of numeric variables to predict that numeric response variable, see linear regression and t tests.

What is the difference between one-way, two-way and three-way ANOVA?

The number of “ways” in ANOVA (e.g., one-way, two-way, …) is simply the number of factors in your experiment.

Although the difference in names sounds trivial, the complexity of ANOVA increases greatly with each added factor. To use an example from agriculture, let’s say we have designed an experiment to research how different factors influence the yield of a crop.

An experiment with a single factor

In the most basic version, we want to evaluate three different fertilizers. Because we have more than two groups, we have to use ANOVA. Since there is only one factor (fertilizer), this is a one-way ANOVA. One-way ANOVA is the easiest to analyze and understand, but probably not that useful in practice, because having only one factor is a pretty simplistic experiment.

What happens when you add a second factor?

If we have two different fields, we might want to add a second factor to see if the field itself influences growth. Within each field, we apply all three fertilizers (which is still the main interest). This is called a crossed design. In this case we have two factors, field and fertilizer, and would need a two-way ANOVA.

As you might imagine, this makes interpretation more complicated (although still very manageable) simply because more factors are involved. There is now a fertilizer effect, as well as a field effect, and there could be an interaction effect, where the fertilizer behaves differently on each field.

How about adding a third factor?

Finally, it is possible to have more than two factors in an ANOVA. In our example, perhaps you also wanted to test out different irrigation systems. You could have a three-way ANOVA due to the presence of fertilizer, field, and irrigation factors. This greatly increases the complication.

Now in addition to the three main effects (fertilizer, field and irrigation), there are three two-way interaction effects (fertilizer by field, fertilizer by irrigation, and field by irrigation), and one three-way interaction effect.
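The count of effects follows directly from the number of factors. As a small illustration (the variable names are arbitrary), the following Python sketch lists every main effect and interaction term for the three factors in this example.

```python
from itertools import combinations

factors = ["fertilizer", "field", "irrigation"]

# Group every model term by how many factors it involves:
# order 1 = main effects, order 2 = two-way interactions, and so on.
terms_by_order = {
    order: [" x ".join(combo) for combo in combinations(factors, order)]
    for order in range(1, len(factors) + 1)
}

for order, terms in terms_by_order.items():
    print(order, terms)
```

With three factors this gives three main effects, three two-way interactions and one three-way interaction, seven terms in total. A fourth factor would raise the total to fifteen, which helps explain why higher-order designs are hard to interpret.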

If any of the interaction effects are statistically significant, then presenting the results gets quite complicated. “Fertilizer A works better on Field B with Irrigation Method C ….”

In practice, two-way ANOVA is often as complex as many researchers want to get before consulting with a statistician. That being said, three-way ANOVAs are cumbersome, but manageable when each factor only has two levels.

What are crossed and nested factors?

In addition to increasing the difficulty with interpretation, experiments (or the resulting ANOVA) with more than one factor add another level of complexity, which is determining whether the factors are crossed or nested.

With crossed factors, every combination of levels among each factor is observed. For example, each fertilizer is applied to each field (so the fields are subdivided into three sections in this case).

With nested factors, different levels of a factor appear within another factor. An example is applying different fertilizers to each field, such as fertilizers A and B to field 1 and fertilizers C and D to field 2.
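One way to check which situation you are in is to list the factor-level combinations you actually observed. The sketch below is a hypothetical helper, not part of any statistics package, and it only checks for a fully crossed design. A design that fails the check may be nested or may simply have missing combinations.

```python
from itertools import product


def is_fully_crossed(observations):
    """observations: iterable of (level_of_factor_a, level_of_factor_b) pairs."""
    observed = set(observations)
    levels_a = {a for a, _ in observed}
    levels_b = {b for _, b in observed}
    # Crossed: every possible pairing of levels actually occurs.
    return observed == set(product(levels_a, levels_b))


# Crossed example from the text: each of three fertilizers applied to each field.
crossed = [(fertilizer, field) for fertilizer in "ABC" for field in (1, 2)]

# Nested example from the text: fertilizers A and B on field 1, C and D on field 2.
nested = [("A", 1), ("B", 1), ("C", 2), ("D", 2)]

print(is_fully_crossed(crossed))  # True
print(is_fully_crossed(nested))   # False
```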

What are fixed and random factors?

Another challenging concept with two or more factors is determining whether to treat the factors as fixed or random.

Fixed factors are used when all levels of a factor (e.g., Fertilizer A, Fertilizer B, Fertilizer C) are specified and you want to determine the effect that factor has on the mean response.

Random factors are used when only some levels of a factor are observed (e.g., Field 1, Field 2, Field 3) out of a large or infinite possible number (e.g., all fields), but rather than specify the effect of the factor, which you can’t do because you didn’t observe all possible levels, you want to quantify the variability that’s within that factor (variability added within each field).

Many introductory courses on ANOVA only discuss fixed factors, and we will largely follow suit other than with two specific scenarios (nested factors and repeated measures).

What are the (practical) assumptions of ANOVA?

These are one-way ANOVA assumptions, but they also carry over to more complicated two-way or repeated measures ANOVA.

- Categorical treatment or factor variables - ANOVA evaluates mean differences between one or more categorical variables (such as treatment groups), which are referred to as factors or “ways.”

- Three or more groups - There must be at least three distinct groups (or levels of a categorical variable) across all factors in an ANOVA. The possibilities are endless: one factor of three different groups, two factors of two groups each (2x2), and so on. If you have fewer than three groups, you can probably get away with a simple t-test.

- Numeric Response - While the groups are categorical, the data measured in each group (i.e., the response variable) still needs to be numeric. ANOVA is fundamentally a quantitative method for measuring the differences in a numeric response between groups. If your response variable isn’t continuous, then you need a more specialized modelling framework such as logistic regression or chi-square contingency table analysis to name a few.

- Random assignment - The makeup of each experimental group should be determined by random selection.

- Normality - The distribution within each factor combination should be approximately normal, although ANOVA is fairly robust to this assumption as the sample size increases due to the central limit theorem.

What is the formula for ANOVA?

The formula to calculate ANOVA varies depending on the number of factors, assumptions about how the factors influence the model (blocking variables, fixed or random effects, nested factors, etc.), and any potential overlap or correlation between observed values (e.g., subsampling, repeated measures).

The good news about running ANOVA in the 21st century is that statistical software handles the majority of the tedious calculations. The main thing that a researcher needs to do is select the appropriate ANOVA.

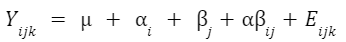

An example formula for a two-factor crossed ANOVA is:
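As a sketch, a two-factor crossed model with an interaction term is commonly written as follows. The notation is the conventional textbook form and is an assumption here, not necessarily the exact formula the article displayed.

```latex
y_{ijk} = \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij} + \varepsilon_{ijk}
```

Here y is the response for replicate k at level i of the first factor and level j of the second, μ is the overall mean, α and β are the two main effects, (αβ) is their interaction, and ε is the random error.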

How do I know which ANOVA to use?

As statisticians, we like to imagine that you’re reading this before you’ve run your experiment. You can save a lot of headache by simplifying an experiment into a standard format (when possible) to make the analysis straightforward.

Regardless, we’ll walk you through picking the right ANOVA for your experiment and provide examples for the most popular cases. The first question is:

Do you only have a single factor of interest?

If you have only measured a single factor (e.g., fertilizer A, fertilizer B, etc.), then use one-way ANOVA. If you have more than one, then you need to consider the following:

Are you measuring the same observational unit (e.g., subject) multiple times?

This is where repeated measures come into play and can be a really confusing question for researchers, but if this sounds like it might describe your experiment, see repeated measures ANOVA. Otherwise:

Are any of the factors nested, where the levels are different depending on the levels of another factor?

In this case, you have a nested ANOVA design. If you don’t have nested factors or repeated measures, then it becomes simple:

Do you have two categorical factors?

Then use two-way ANOVA.

Do you have three categorical factors?

Use three-way ANOVA.

Do you have variables that you recorded that aren’t categorical (such as age, weight, etc.)?

Although these are outside the scope of this guide, if you have a single continuous variable, you might be able to use ANCOVA, which allows for a continuous covariate. With multiple continuous covariates, you probably want to use a mixed model or possibly multiple linear regression.

Prism does offer multiple linear regression but assumes that all factors are fixed. A full “mixed model” analysis is not yet available in Prism, but is offered as options within the one- and two-way ANOVA parameters.

How do I perform ANOVA?

Once you’ve determined which ANOVA is appropriate for your experiment, use statistical software to run the calculations. Below, we provide detailed examples of one, two and three-way ANOVA models.

How do I read and interpret an ANOVA table?

Interpreting any kind of ANOVA should start with the ANOVA table in the output. These tables are what give ANOVA its name, since they partition out the variance in the response into the various factors and interaction terms. This is done by calculating the sum of squares (SS) and mean squares (MS), which can be used to determine the variance in the response that is explained by each factor.

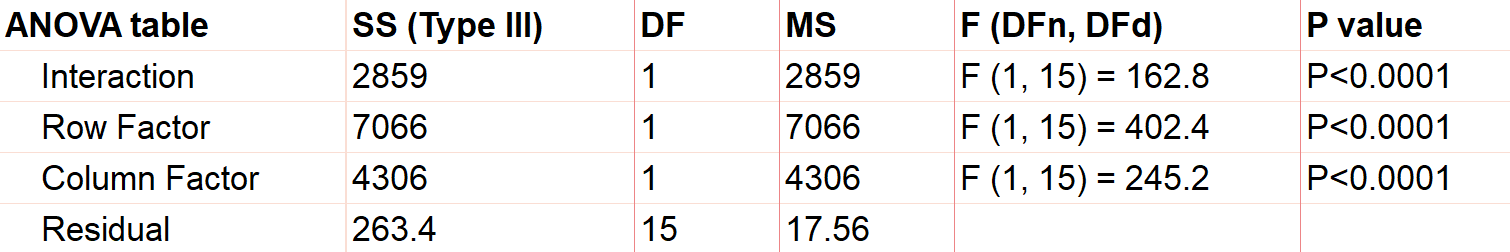

If you have predetermined your level of significance, interpretation mostly comes down to the p-values that come from the F-tests. The null hypothesis for each factor is that there is no significant difference between groups of that factor. All of the following factors are statistically significant with a very small p-value.

One-way ANOVA Example

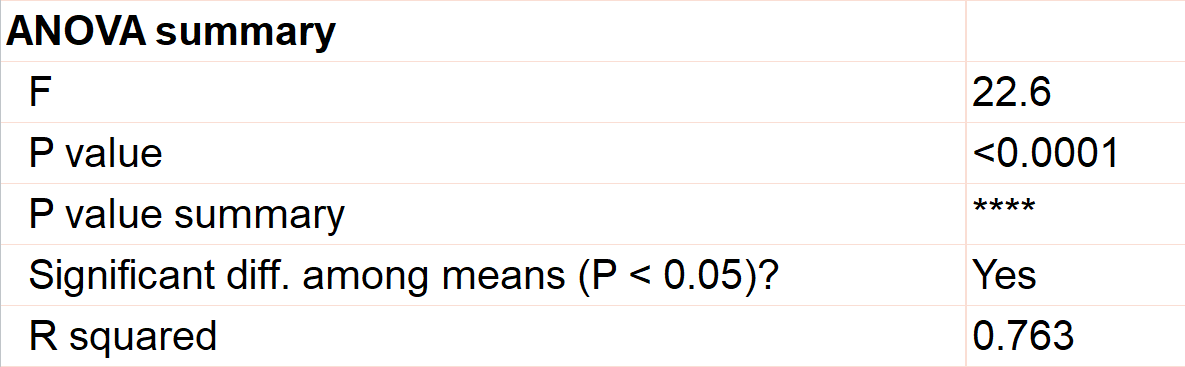

An example of one-way ANOVA is an experiment of cell growth in petri dishes. The response variable is a measure of their growth, and the variable of interest is treatment, which has three levels: formula A, formula B, and a control.

Classic one-way ANOVA assumes equal variances within each sample group. If that isn’t a valid assumption for your data, you have a number of alternatives.

Calculating a one-way ANOVA

Using Prism to do the analysis, we will run a one-way ANOVA and will choose a 95% confidence level (α = 0.05) as our significance threshold. Since we are interested in the differences between each of the three groups, we will evaluate each and correct for multiple comparisons (more on this later!).

For the following, we’ll assume equal variances within the treatment groups.

The first test to look at is the overall (or omnibus) F-test, with the null hypothesis that there is no significant difference between any of the treatment groups. In this case, there is a significant difference between the three groups (p<0.0001), which tells us that at least one of the group means differs from the others.

Now we can move to the heart of the issue, which is to determine which group means are statistically different. To learn more, we should graph the data and test the differences (using a multiple comparison correction).

Graphing one-way ANOVA

The easiest way to visualize the results from an ANOVA is to use a simple chart that shows all of the individual points. Rather than a bar chart, use a scatter or violin plot, which shows every data point (and the mean) for each group.

As an example, below you can see a graph of the cell growth levels for each data point in each treatment group, along with a line to represent their mean. This can help give credence to any significant differences found, as well as show how closely groups overlap.

Determining statistical significance between groups

In addition to the graphic, what we really want to know is which treatment means are statistically different from each other. Because we are performing multiple tests, we’ll use a multiple comparison correction. For our example, we’ll use Tukey’s correction (although if we were only interested in the difference between each formula and the control, we could use Dunnett’s correction instead).

In this case, the mean cell growth for Formula A is significantly higher than the control (p<0.0001) and Formula B (p=0.002), but there’s no significant difference between Formula B and the control.

Two-way ANOVA example

For two-way ANOVA, there are two factors involved. Our example will focus on a case of cell lines. Suppose we have a 2x2 design (four total groupings). There are two different treatments (serum-starved and normal culture) and two different fields. There are 19 total cell line “experimental units” being evaluated, up to 5 in each group (note that with 4 groups and 19 observational units, this study isn’t balanced). Although there are multiple units in each group, they are all completely different replicates and therefore not repeated measures of the same unit.

As with one-way ANOVA, it’s a good idea to graph the data as well as look at the ANOVA table for results.

Graphing two-way ANOVA

There are many options here. Like our one-way example, we recommend a similar graphing approach that shows all the data points themselves along with the means.

Determining statistical significance between groups in two-way ANOVA

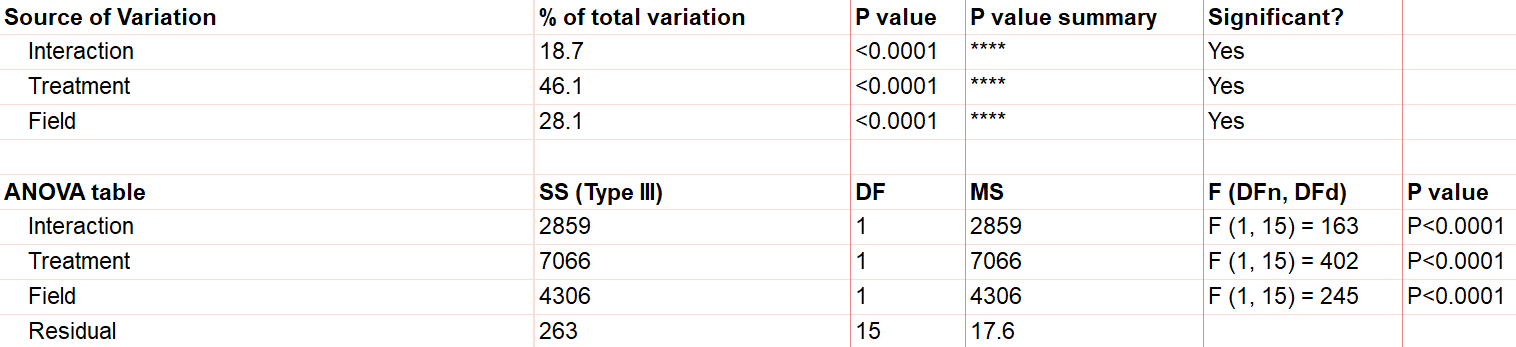

Let’s use a two-way ANOVA with a significance level of 0.05 (a 95% confidence level) to evaluate both factors’ effects on the response, a measure of growth.

Feel free to use our two-way ANOVA checklist as often as you need for your own analysis.

First, notice there are three sources of variation included in the model, which are interaction, treatment, and field.

The first effect to look at is the interaction term, because if it’s significant, it changes how you interpret the main effects (e.g., treatment and field). The interaction term tests whether the effect of one factor depends on the level of the other factor. In this case, the significant interaction term (p<0.0001) indicates that the treatment effect depends on the field type.
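In a 2x2 design, the interaction can be read off the cell means as a "difference of differences". The sketch below illustrates that idea with invented values; the numbers and cell labels are assumptions for illustration, not the data from this example.

```python
from statistics import mean

# Hypothetical growth values for each treatment x field cell (made up)
cells = {
    ("normal", "field 1"): [10.0, 11.0, 12.0],
    ("normal", "field 2"): [11.0, 12.0, 13.0],
    ("serum-starved", "field 1"): [11.0, 12.0, 13.0],
    ("serum-starved", "field 2"): [19.0, 20.0, 21.0],
}
cell_means = {key: mean(values) for key, values in cells.items()}

# Effect of treatment within each field
effect_field1 = (cell_means[("serum-starved", "field 1")]
                 - cell_means[("normal", "field 1")])
effect_field2 = (cell_means[("serum-starved", "field 2")]
                 - cell_means[("normal", "field 2")])

# With no interaction the two treatment effects would be equal,
# so their difference would be close to zero
interaction_contrast = effect_field2 - effect_field1
print(effect_field1, effect_field2, interaction_contrast)
```

This only describes the pattern in the means. The F-test for the interaction term is what judges whether a contrast of this size is larger than expected given the variability within cells.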

A significant interaction term muddies the interpretation, so that you no longer have the simple conclusion that “Treatment A outperforms Treatment B.” In this case, the graphic is particularly useful. It suggests that while there may be some difference between three of the groups, the specific combination of serum-starved cells in field 2 outperformed the rest.

To confirm whether there is a statistically significant result, we would run pairwise comparisons (comparing each factor level combination with every other one) and account for multiple comparisons.

Do I need to correct for multiple comparisons for two-way ANOVA?

If you’re comparing the means for more than one combination of treatment groups, then absolutely! Here’s more information about multiple comparisons for two-way ANOVA.

Repeated measures ANOVA

So far we have focused almost exclusively on “ordinary” ANOVA and its differences depending on how many factors are involved. In all of these cases, each observation is completely unrelated to the others. Other than the combination of factors that may be the same across replicates, each replicate on its own is independent.

There is a second common branch of ANOVA known as repeated measures. In these cases, the units are related in that they are matched up in some way. Repeated measures are used to model correlation between measurements within an individual or subject. Repeated measures ANOVA is useful (and increases statistical power) when the variability among individuals is large relative to the variability within individuals, because the differences between subjects are removed from the error term.

It’s important that all levels of your repeated measures factor (usually time) are consistent. If they aren’t, you’ll need to consider running a mixed model, which is a more advanced statistical technique.

There are two common forms of repeated measures:

- You observe the same individual or subject at different time points. If you’re familiar with paired t-tests, this is an extension to that. (You can also have the same individual receive all of the treatments, which adds another level of repeated measures.)

- You have a randomized block design, where matched elements receive each treatment. For example, you split a large sample of blood taken from one person into 3 (or more) smaller samples, and each of those smaller samples gets exactly one treatment.

Repeated measures ANOVA can have any number of factors. See analysis checklists for one-way repeated measures ANOVA and two-way repeated measures ANOVA.

What does it mean to assume sphericity with repeated measures ANOVA?

Repeated measures are almost always treated as random factors, which means that the correlation structure between levels of the repeated measures needs to be defined. The assumption of sphericity means that the variances of the differences between every pair of repeated-measures levels are equal. Loosely, you assume that each level has the same correlation with every other level.

This is almost never the case with repeated measures over time (e.g., baseline, at treatment, 1 hour after treatment), and in those cases, we recommend not assuming sphericity. However, if you used a randomized block design, then sphericity is usually appropriate.
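Sphericity is often examined through the differences between levels: if it holds, the variance of the difference between any two levels should be roughly the same. The following minimal sketch computes those variances for invented data (one row per subject, one column per time point). The values and level names are hypothetical.

```python
from itertools import combinations
from statistics import variance

# Hypothetical responses: one row per subject, one column per time point
levels = ["baseline", "at treatment", "1 hour after"]
data = [
    [5.0, 6.0, 9.0],
    [4.0, 6.0, 7.0],
    [6.0, 7.0, 12.0],
    [5.0, 5.0, 8.0],
]

diff_variances = {}
for i, j in combinations(range(len(levels)), 2):
    # Within-subject difference between level j and level i
    diffs = [row[j] - row[i] for row in data]
    diff_variances[(levels[i], levels[j])] = variance(diffs)

for pair, var in diff_variances.items():
    print(pair, round(var, 2))
```

Clearly unequal variances across the pairs suggest that sphericity is doubtful. This is only an informal look, not a formal test such as Mauchly's test.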

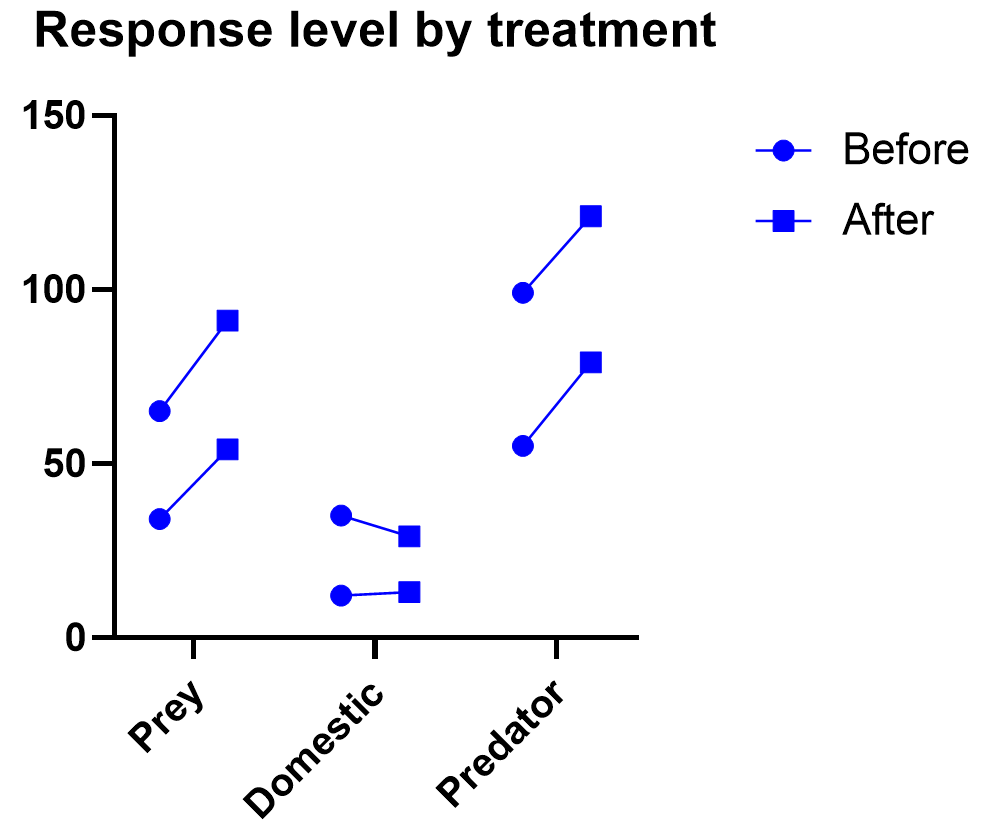

Example two-way ANOVA with repeated measures

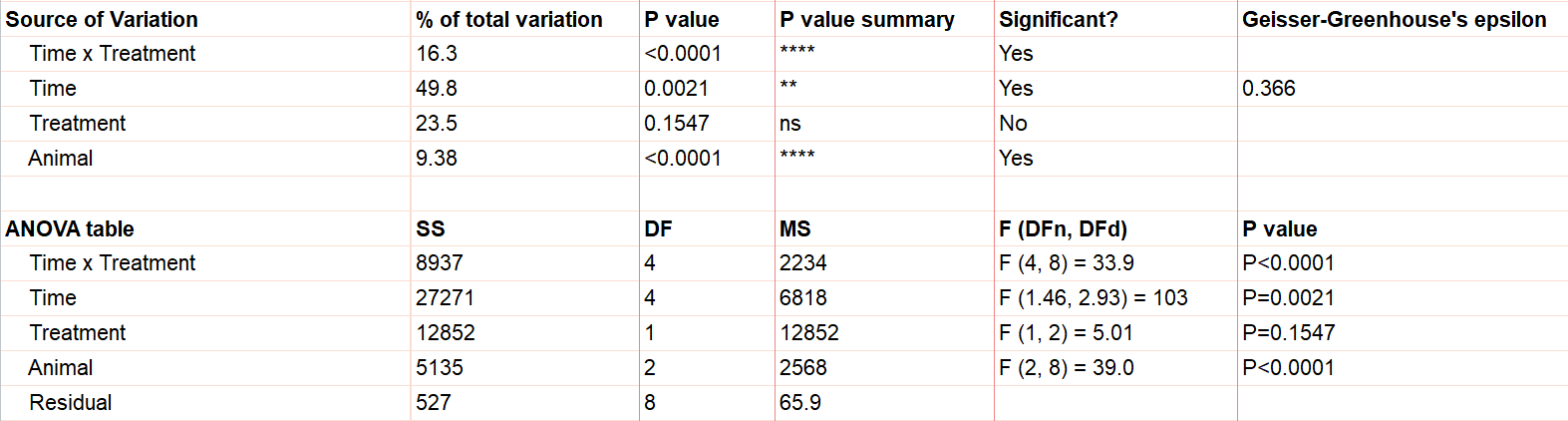

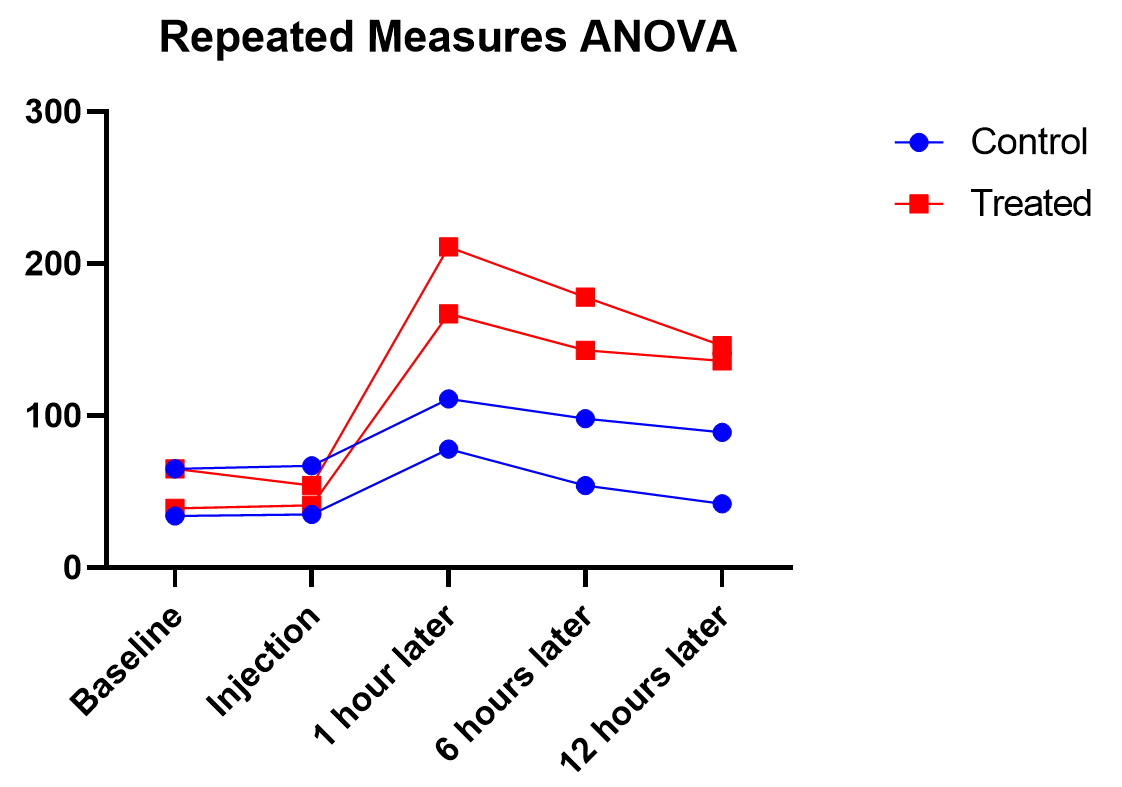

Say we have two treatments (control and treatment) to evaluate using test animals. We’ll apply both treatments to each of two animals (replicates), with sufficient time in between the treatments so there isn’t a crossover (or carry-over) effect. Also, we’ll measure five different time points for each treatment (baseline, at time of injection, one hour after, …). This is repeated measures because we will need to measure matching samples from the same animal under each treatment as we track how its stimulation level changes over time.

The output shows the test results from the main and interaction effects. Due to the interaction between time and treatment being significant (p<.0001), the fact that the treatment main effect isn’t significant (p=.154) isn’t noteworthy.

Graphing repeated measures ANOVA

As we’ve been saying, graphing the data is useful, and this is particularly true when the interaction term is significant. Here we get an explanation of why the interaction between treatment and time was significant, but treatment on its own was not. As soon as one hour after injection (and all time points after), treated units show a higher response level than the control even as it decreases over those 12 hours. Thus the effect of time depends on treatment. At the earlier time points, there is no difference between treatment and control.

Graphing repeated measures data is an art, but a good graphic helps you understand and communicate the results. For example, it’s a completely different experiment, but here’s a great plot of another repeated measures experiment with before and after values that are measured on three different animal types.

What if I have three or more factors?

Interpreting three or more factors is very challenging and usually requires advanced training and experience.

Just as two-way ANOVA is more complex than one-way, three-way ANOVA adds much more potential for confusion. Not only are you dealing with three different factors, you will now be testing seven hypotheses at the same time. Two-way interactions still exist here, and you may even run into a significant three-way interaction term.
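The count of seven follows from listing every main effect and every interaction in a full three-factor model: three main effects, three two-way interactions, and one three-way interaction. A small sketch, using placeholder factor names A, B, and C and a hypothetical helper `model_terms`:

```python
from itertools import combinations

def model_terms(factors):
    """List every main effect and interaction in a full factorial model."""
    terms = []
    for size in range(1, len(factors) + 1):
        for combo in combinations(factors, size):
            terms.append(" x ".join(combo))
    return terms

# Placeholder factor names, chosen only for illustration
terms = model_terms(["A", "B", "C"])
for term in terms:
    print(term)
print(len(terms), "hypotheses")
```

In general, a full factorial model with k factors has 2^k - 1 such terms, which is why the number of hypotheses grows so quickly.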

It takes careful planning and advanced experimental design to be able to untangle the combinations that will be involved (see more details here).

Non-parametric ANOVA alternatives

As with t-tests (or virtually any statistical method), there are alternatives to ANOVA for testing differences between three or more groups. ANOVA is means-focused and evaluated in comparison to an F-distribution.

The two main non-parametric cousins to ANOVA are the Kruskal-Wallis and Friedman’s tests. Just as is true with everything else in ANOVA, it is likely that one of the two options is more appropriate for your experiment.

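Kruskal-Wallis compares 3 or more groups using the ranks of the observations and is commonly interpreted as a test of medians (rather than means). Strictly, that interpretation holds when the group distributions have the same shape. It is only useful as an “ordinary ANOVA” alternative, without matched subjects like you have in repeated measures. Here are some tips for interpreting Kruskal-Wallis test results.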
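To show how ranks replace the raw values, here is a minimal sketch of the Kruskal-Wallis H statistic. It assumes there are no tied values (so no tie correction is applied), the data are invented, and the function name `kruskal_wallis_h` is hypothetical.

```python
def kruskal_wallis_h(groups):
    """H statistic for samples with no tied values (no tie correction)."""
    pooled = sorted(x for g in groups for x in g)
    if len(set(pooled)) != len(pooled):
        raise ValueError("this sketch does not handle ties")
    # Rank 1 goes to the smallest pooled value
    rank = {value: i + 1 for i, value in enumerate(pooled)}
    n = len(pooled)
    # Sum over groups of (rank sum squared) / (group size)
    rank_sum_term = sum(sum(rank[x] for x in g) ** 2 / len(g) for g in groups)
    return 12.0 / (n * (n + 1)) * rank_sum_term - 3 * (n + 1)

# Hypothetical measurements, made up for this sketch
group_1 = [1.1, 2.3, 3.0]
group_2 = [4.2, 5.5, 6.1]
group_3 = [7.4, 8.8, 9.6]

h_stat = kruskal_wallis_h([group_1, group_2, group_3])
print(round(h_stat, 2))
```

H is usually compared with a chi-square distribution with (number of groups - 1) degrees of freedom, an approximation that works better with larger samples. If SciPy is available, `scipy.stats.kruskal` handles ties and returns a p-value.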

Friedman’s Test is the opposite, designed as an alternative to repeated measures ANOVA with matched subjects. Here are some tips for interpreting Friedman's Test.

What are simple, main, and interaction effects in ANOVA?

Consider the two-way ANOVA model setup that contains two different kinds of effects to evaluate:
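In conventional notation, a two-way model with replicates is usually written as shown below. The indices and the symbols $\mu$ and $\varepsilon$ are standard notation assumed here rather than taken from this article.

$$
Y_{ijk} = \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij} + \varepsilon_{ijk}
$$

Here $Y_{ijk}$ is the response for replicate $k$ at level $i$ of the first factor and level $j$ of the second, $\mu$ is the grand mean, and $\varepsilon_{ijk}$ is the random error.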

The 𝛼 and 𝛽 factors are “main” effects, which are the isolated effect of a given factor. “Main effect” is used interchangeably with “simple effect” in some textbooks.

The interaction term is denoted as “𝛼𝛽”, and it allows for the effect of a factor to depend on the level of another factor. It can only be tested when you have replicates in your study. Otherwise, the error term is assumed to be the interaction term.

What are multiple comparisons?

When you’re doing multiple statistical tests on the same set of data, there’s a greater propensity to discover statistically significant differences that aren’t true differences. Multiple comparison corrections attempt to control for this, and in general control what is called the familywise error rate. There are a number of multiple comparison testing methods, which all have pros and cons depending on your particular experimental design and research questions.
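A short calculation shows how quickly the familywise error rate grows. The sketch below assumes the tests are independent and that every null hypothesis is true. It uses the Bonferroni adjustment purely as a simple illustration, not as the method recommended in this article, and the function names are hypothetical.

```python
def familywise_error_rate(alpha, n_tests):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** n_tests

def bonferroni_alpha(alpha, n_tests):
    """Per-test threshold that keeps the familywise rate at or below alpha."""
    return alpha / n_tests

for n_tests in (1, 3, 6, 10):
    fwer = familywise_error_rate(0.05, n_tests)
    print(n_tests, round(fwer, 3), round(bonferroni_alpha(0.05, n_tests), 4))
```

With three comparisons at 0.05 each, the chance of at least one false positive is already about 14%, which is why an uncorrected set of pairwise tests overstates the evidence.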

What does the word “way” mean in one-way vs two-way ANOVA?

In statistics overall, it can be hard to keep track of factors, groups, and tails. To the untrained eye “two-way ANOVA” could mean any of these things.

The best way to think about ANOVA is in terms of factors or variables in your experiment. Suppose you have one factor in your analysis (perhaps “treatment”). You will likely see that written as a one-way ANOVA. Even if that factor has several different treatment groups, there is only one factor, and that’s what drives the name.

Also, “way” has absolutely nothing to do with “tails” like a t-test. ANOVA relies on F tests, which can only test for equal vs unequal because they rely on squared terms. So ANOVA does not have the “one-or-two tails” question.

What is the difference between ANOVA and a t-test?

ANOVA is an extension of the t-test. If you only have two group means to compare, use a t-test. Anything more requires ANOVA.
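With exactly two groups and equal variances assumed, the two methods agree: the one-way ANOVA F statistic equals the square of the pooled-variance t statistic. The following sketch checks this on invented data; the function names are hypothetical.

```python
from math import sqrt
from statistics import mean

def pooled_t(a, b):
    """Student's t statistic for two independent samples, equal variances."""
    ss_a = sum((x - mean(a)) ** 2 for x in a)
    ss_b = sum((x - mean(b)) ** 2 for x in b)
    pooled_var = (ss_a + ss_b) / (len(a) + len(b) - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var * (1 / len(a) + 1 / len(b)))

def two_group_f(a, b):
    """One-way ANOVA F statistic for the same two samples."""
    grand = mean(a + b)
    ss_between = (len(a) * (mean(a) - grand) ** 2
                  + len(b) * (mean(b) - grand) ** 2)
    ss_within = (sum((x - mean(a)) ** 2 for x in a)
                 + sum((x - mean(b)) ** 2 for x in b))
    # df_between is 1 for two groups, so MS_between equals SS_between
    return ss_between / (ss_within / (len(a) + len(b) - 2))

# Hypothetical samples, made up for this sketch
group_a = [4.0, 5.0, 6.0, 7.0]
group_b = [6.0, 8.0, 9.0, 9.0]

t_stat = pooled_t(group_a, group_b)
f_stat = two_group_f(group_a, group_b)
print(round(t_stat ** 2, 4), round(f_stat, 4))
```

Because F is built from squared quantities, the sign of the difference is lost, which is also why ANOVA has no one-tailed version.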

What is the difference between ANOVA and chi-square?

Chi-square is designed for contingency tables, or counts of items within groups (e.g., type of animal). The goal is to see whether the counts in a particular sample match the counts you would expect by random chance.

ANOVA separates subjects into groups for evaluation, but there is some numeric response variable of interest (e.g., glucose level).

Can ANOVA evaluate effects on multiple response variables at the same time?

Multiple response variables make things much more complicated than multiple factors. ANOVA (as we’ve discussed it here) can handle multiple factors, but it isn’t designed for tracking more than one response at a time.

Technically, there is an extension designed for this called multivariate ANOVA, more commonly written as MANOVA. Things get complicated quickly, and it generally requires advanced training.

Can ANOVA evaluate numeric factors in addition to the usual categorical factors?

It sounds like you are looking for ANCOVA (analysis of covariance). You can treat a continuous (numeric) factor as categorical, in which case you could use ANOVA, but this is a common point of confusion.

What is the definition of ANOVA?

ANOVA stands for analysis of variance, and, true to its name, it is a statistical technique that analyzes how experimental factors influence the variance in the response variable from an experiment.

What is blocking in ANOVA?

Blocking is an incredibly powerful and useful strategy in experimental design when you have a factor that you think will heavily influence the outcome, so you want to control for it in your experiment. Blocking affects how the randomization is done with the experiment. Usually blocking variables are nuisance variables that are important to control for but are not inherently of interest.

A simple example is an experiment evaluating the efficacy of a medical drug and blocking by age of the subject. To do blocking, you must first gather the ages of all of the participants in the study, bin them into non-overlapping groups (e.g., 10-29, 30-49, etc.), and then randomly assign treatments within each group so that each treatment is given to an equal number of subjects.
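The assignment step can be sketched in a few lines of Python. The subject IDs, age bins, treatment names, and the helper `assign_within_blocks` are all hypothetical, and the sketch assumes each block size is a multiple of the number of treatments.

```python
import random
from collections import Counter

def assign_within_blocks(subjects, treatments, seed=0):
    """Randomly assign treatments in equal numbers within each block.

    `subjects` maps a subject ID to its block label (e.g. an age bin).
    Assumes each block size is a multiple of the number of treatments.
    """
    rng = random.Random(seed)
    blocks = {}
    for subject, block in subjects.items():
        blocks.setdefault(block, []).append(subject)

    assignment = {}
    for block, members in blocks.items():
        if len(members) % len(treatments) != 0:
            raise ValueError(f"block {block!r} cannot be split evenly")
        # Equal copies of each treatment label, shuffled within the block
        labels = list(treatments) * (len(members) // len(treatments))
        rng.shuffle(labels)
        for subject, label in zip(members, labels):
            assignment[subject] = label
    return assignment

# Hypothetical participants and age bins, made up for this sketch
subjects = {
    "s1": "10-29", "s2": "10-29", "s3": "10-29", "s4": "10-29",
    "s5": "30-49", "s6": "30-49", "s7": "30-49", "s8": "30-49",
}
assignment = assign_within_blocks(subjects, ["drug", "placebo"])
counts = Counter((subjects[s], t) for s, t in assignment.items())
print(counts)
```

Randomizing separately inside each age bin means that age cannot end up unevenly spread across the treatments, so differences between treatments are not confounded with age.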

There’s an entire field of study around blocking. Some examples include having multiple blocking variables, incomplete block designs where not all treatments appear in all blocks, and balanced (or unbalanced) blocking designs where equal (or unequal) numbers of replicates appear in each block and treatment combination.

What is ANOVA in statistics?