13 Interesting Neural Network Project Ideas & Topics for Beginners [2024]

![artificial neural networks thesis topics 13 Interesting Neural Network Project Ideas & Topics for Beginners [2024]](https://www.upgrad.com/__khugblog-next/image/?url=https%3A%2F%2Fd14b9ctw0m6fid.cloudfront.net%2Fugblog%2Fwp-content%2Fuploads%2F2020%2F05%2F515.png&w=1920&q=75)

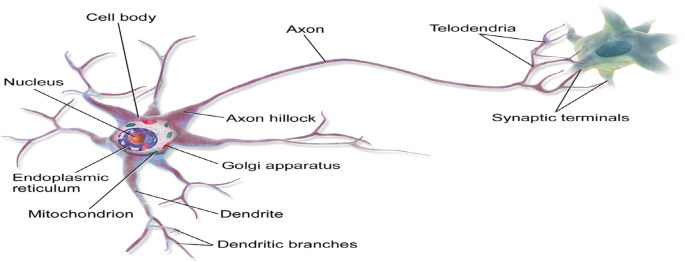

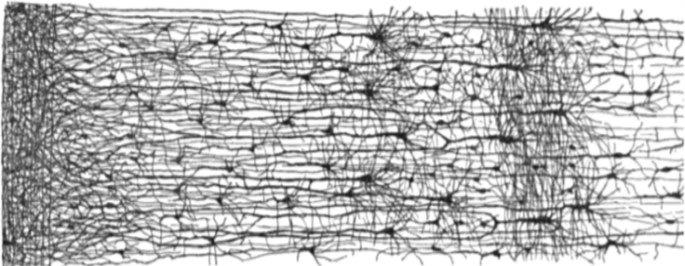

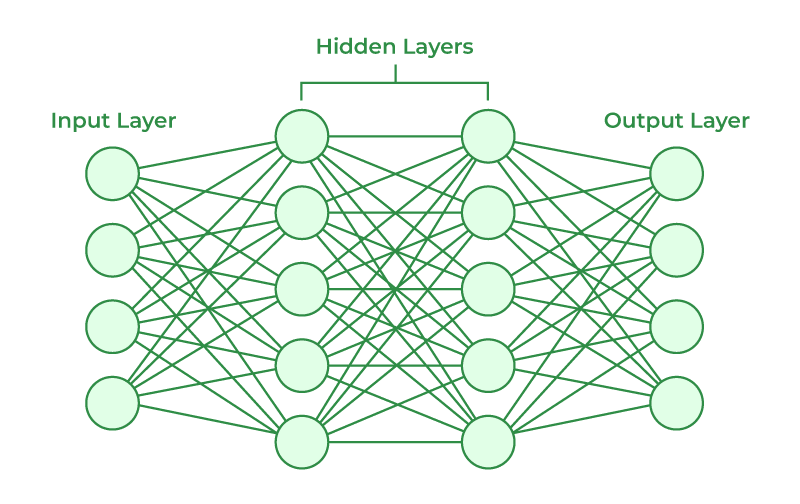

Neural networks have captivated the world of artificial intelligence and machine learning with their ability to learn in a way loosely modeled on the human brain. They aim to recognize underlying relationships in datasets by passing data through layers of interconnected units.

Modern developments in image recognition, autonomous vehicles, and many other fields are based on the application of neural networks. These adaptable frameworks have changed the way we use technology, because such systems can learn to perform tasks without being programmed with precise rules. You can implement different neural network projects to understand network architectures and how they work.

In this article, we will review the fundamental principles of neural networks and then explore their capabilities through real-world project ideas. Read on to familiarize yourself with some exciting applications!

Fundamentals of neural networks

The fundamentals of neural network algorithms lie in their inspiration from the brain’s biological neural networks and their ability to learn from data. Because they are built to analyze data and identify patterns, these networks are useful tools for a variety of machine learning applications.

Before we begin with our list of neural network project ideas, let us first revise the basics.

- A neural network is a series of algorithms that process complex data.

- It can adapt to changing input.

- It can generate the best possible results without requiring you to redesign the output criteria.

- Computer scientists use neural networks to recognize patterns and solve diverse problems.

- It is an example of machine learning.

- The phrase “deep learning” refers to neural networks with many layers.

Understanding these fundamentals is essential for building, training, and deploying effective neural network models.

Today, neural networks are applied to a wide range of business functions, such as customer research, sales forecasting, data validation, and risk management. Adopting a hands-on training approach brings many advantages if you want to pursue a career in deep learning. So, let us dive into the topics one by one.

Neural Network Projects

Here are a few examples of neural network projects:

1. Autoencoders based on neural networks

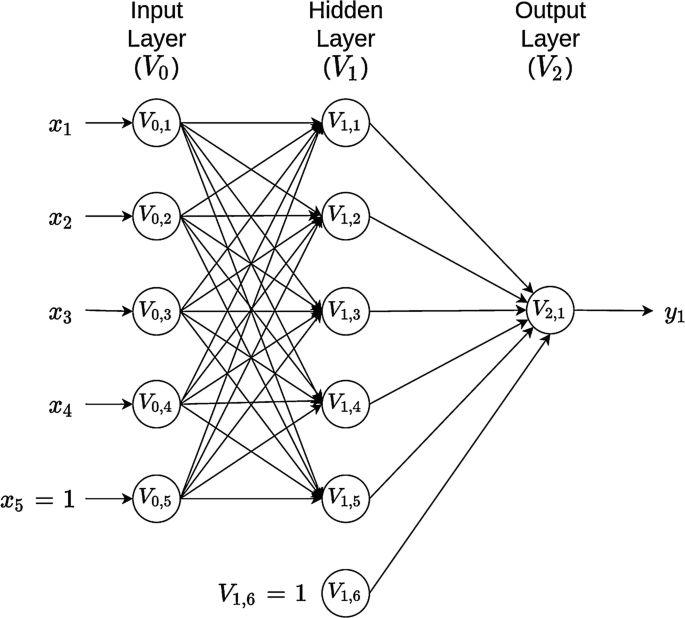

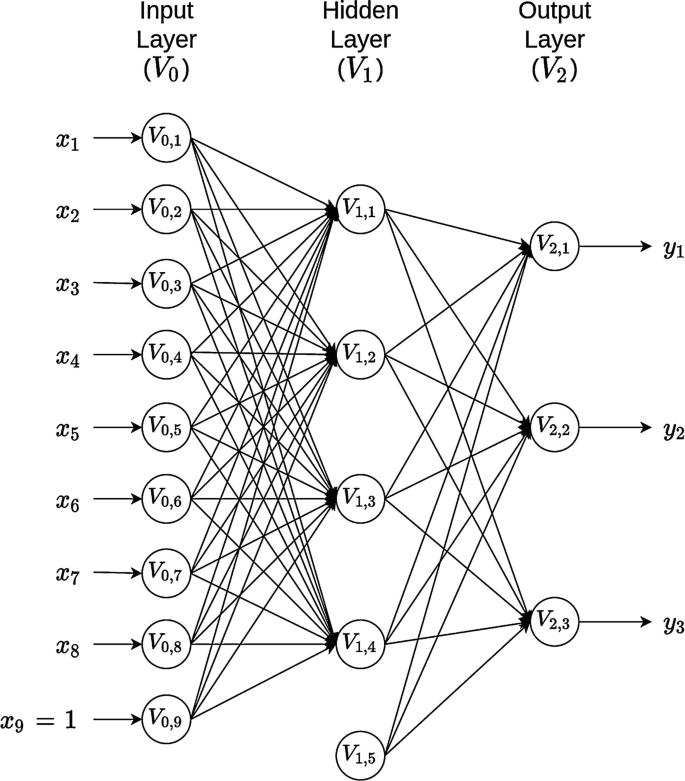

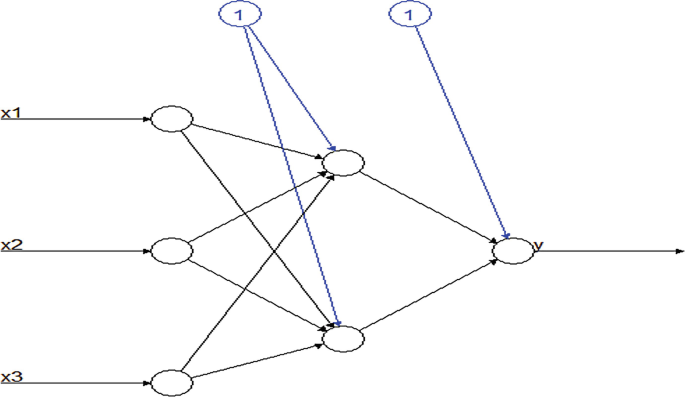

Autoencoders are among the simplest deep learning architectures. They are a specific type of feedforward neural network in which the input is first compressed into a lower-dimensional code, and the output is then reconstructed from that compact representation. An autoencoder therefore has three components: encoder, code, and decoder. The steps below summarize how the architecture works.

- The input passes through the encoder to produce the code.

- The decoder (often a mirror image of the encoder’s structure) reconstructs the output from the code.

- The output is an approximate reconstruction of the input; it is rarely identical, because the compression is lossy.

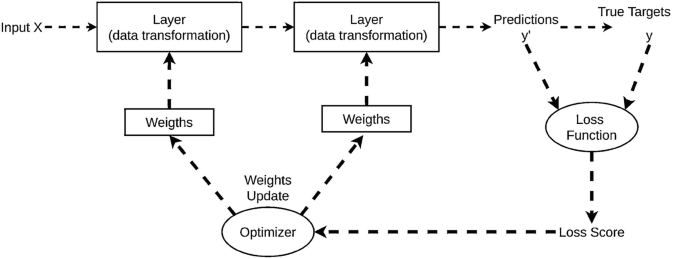

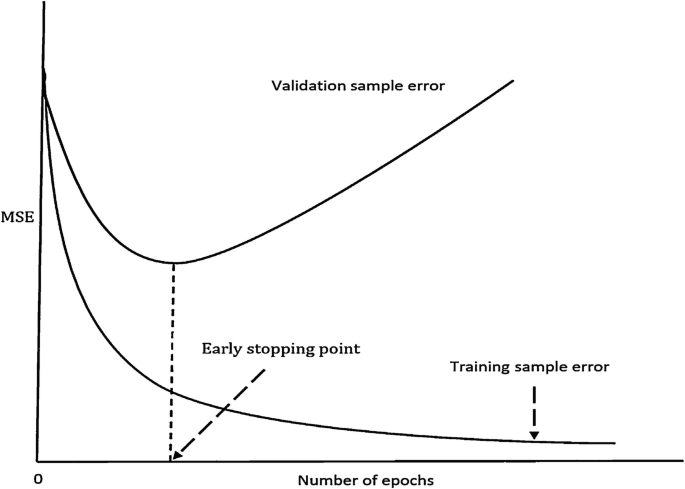

From the above steps, you will observe that an autoencoder is a dimensionality reduction or compression algorithm. To begin the development process, you will need an encoding method, a decoding method, and a loss function. Binary cross-entropy and mean squared error are the two most common choices for the loss function. You can train autoencoders the same way as other artificial neural networks, via back-propagation. Now, let us discuss the applications of these networks.
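
The encoder, code, decoder, and loss function described above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions: the data is synthetic, the autoencoder is purely linear, and the names `encode`, `decode`, and `mse` as well as the learning rate and step count are hypothetical choices rather than part of any particular library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumption): 200 samples with 8 features that really
# live on a 2-D subspace, so a 2-unit code can describe them well.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 8))
X = latent @ mixing

n_features, code_size = X.shape[1], 2
W_enc = rng.normal(scale=0.1, size=(n_features, code_size))  # encoder weights
W_dec = rng.normal(scale=0.1, size=(code_size, n_features))  # decoder weights

def encode(x):
    """Compress the input into the low-dimensional code."""
    return x @ W_enc

def decode(code):
    """Reconstruct the input from the code."""
    return code @ W_dec

def mse(x, x_hat):
    """Mean squared reconstruction error."""
    return float(np.mean((x - x_hat) ** 2))

initial_loss = mse(X, decode(encode(X)))

learning_rate = 0.02
for _ in range(3000):
    code = encode(X)
    X_hat = decode(code)
    # Gradient of the mean squared error with respect to the reconstruction.
    grad_out = 2.0 * (X_hat - X) / X.size
    # Back-propagate through the decoder, then through the encoder.
    grad_W_dec = code.T @ grad_out
    grad_W_enc = X.T @ (grad_out @ W_dec.T)
    W_dec -= learning_rate * grad_W_dec
    W_enc -= learning_rate * grad_W_enc

final_loss = mse(X, decode(encode(X)))
print(f"reconstruction MSE: {initial_loss:.4f} -> {final_loss:.4f}")
```

A practical autoencoder would add non-linear activations and more layers, but the training loop keeps the same shape: encode, decode, measure the reconstruction error, and back-propagate.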

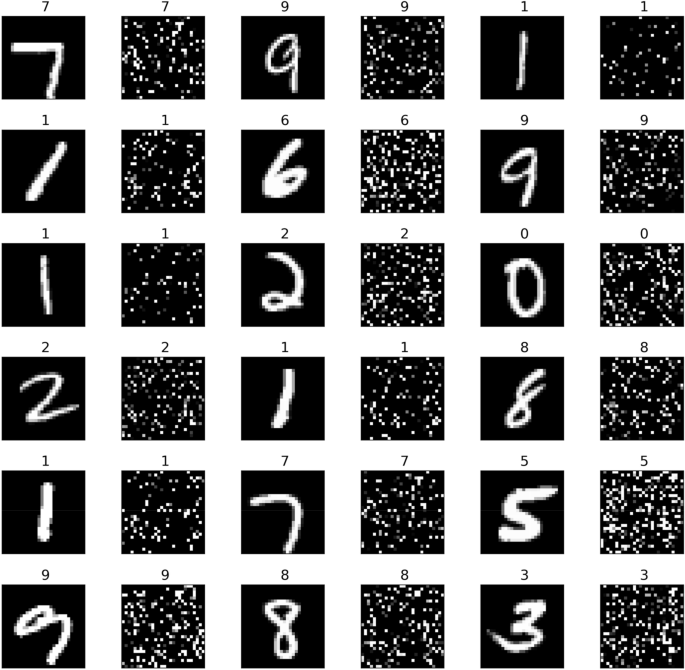

You can create a handwriting recognition tool using the MNIST dataset as input. MNIST is a manageable, beginner-friendly collection of images of handwritten digits. When such images are noisy, they need a noise removal step before the digits can be classified and read properly, and autoencoders can learn this noise removal for a particular dataset. You can try this project yourself by downloading freely available code from online repositories.

2. Convolutional neural network model

Convolutional neural networks or CNNs are typically applied to analyze visual imagery. This architecture can be used for different purposes, such as for image processing in self-driving cars.

Autonomous driving applications use this model to interface with the vehicle: CNNs receive image feedback and pass it along to a series of output decisions (turn right/left, stop/drive, etc.). Reinforcement learning algorithms then process these decisions for driving. Here is how you can start building a full-fledged application on your own:

- Take a tutorial on MNIST or CIFAR-10.

- Get acquainted with binary image classification models.

- Plug and play with the open code in your Jupyter notebook.

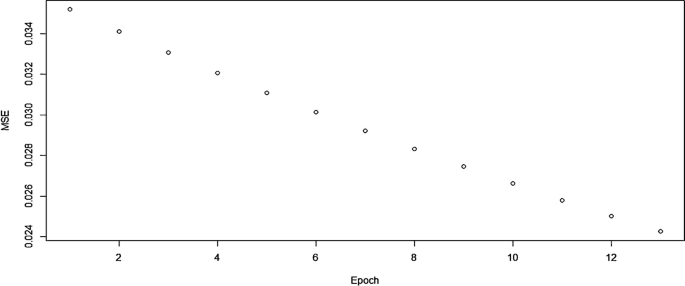

With this approach, you can learn how to import custom datasets and experiment with the implementation to achieve the desired performance. You can try increasing the number of epochs, toying with images, adding more layers, etc. Additionally, you can dive into some object detection algorithms like SSD, YOLO, Fast R-CNN, etc. Facial recognition in the iPhone’s FaceID feature is one of the most common examples of this model.
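
Before adding layers in a framework, it can help to see what a single convolutional layer computes. The sketch below is a hand-rolled NumPy illustration, not a framework API: the helper names `conv2d`, `relu`, and `max_pool2x2`, the toy 6x6 image, and the hand-written edge kernel are all assumptions made for the example. In a trained CNN the kernel values are learned from data.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and sum.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """Downsample by taking the maximum of each non-overlapping 2x2 block."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Toy 6x6 image: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical edge detector.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

feature_map = max_pool2x2(relu(conv2d(image, kernel)))
print(feature_map)
```

The feature map responds only where the window straddles the dark-to-bright edge, which is the kind of local pattern detection that makes CNNs effective on images.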

Once you have brushed up your concepts, try your hand at constructing a traffic sign classification system for a self-driving car using a CNN and the Keras library. You can explore the GTSRB dataset for this project.

3. Recurrent neural network model

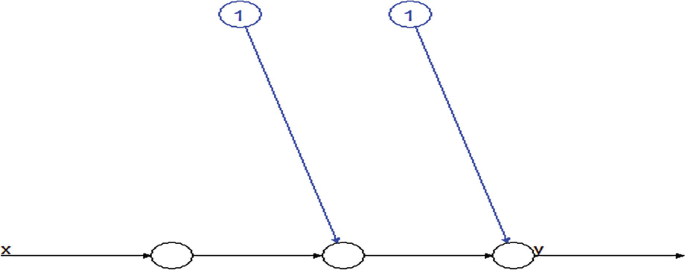

Unlike feedforward nets, recurrent neural networks or RNNs can deal with sequences of variable lengths. Sequence models like RNNs have several applications, ranging from chatbots, text mining, and video processing to price prediction.

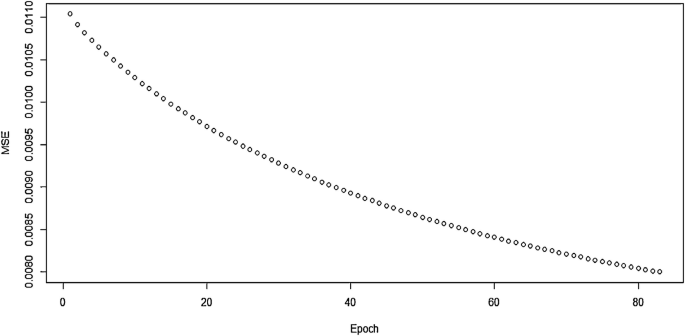

If you are just getting started, you should first acquire a foundational understanding of LSTM gates with a char-level RNN. For example, you can attempt loading stock price datasets. You can train RNNs to predict what comes next by processing real data sequences one step at a time. We have explained this process below:

- Assume that the predictions are probabilistic.

- At each step, a sample is drawn from the network’s output distribution.

- The sample is fed as input in the next step.

- The trained network generates novel sequences.
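
The sampling loop in the steps above can be sketched as follows. This is an illustration only: the weights are random rather than trained, so the output is gibberish, and the vocabulary, hidden size, and function names (`softmax`, `generate`) are hypothetical. A plain tanh recurrent cell stands in for an LSTM to keep the example short.

```python
import numpy as np

rng = np.random.default_rng(42)
vocab = list("abcdefgh ")      # hypothetical character vocabulary
V, H = len(vocab), 16          # vocabulary size, hidden state size

# Randomly initialised weights stand in for a trained network (assumption).
W_xh = rng.normal(scale=0.5, size=(V, H))
W_hh = rng.normal(scale=0.5, size=(H, H))
W_hy = rng.normal(scale=0.5, size=(H, V))

def softmax(z):
    """Turn raw scores into a probability distribution."""
    e = np.exp(z - z.max())
    return e / e.sum()

def generate(seed_char, length):
    """Generate text by repeatedly sampling the next character."""
    h = np.zeros(H)
    idx = vocab.index(seed_char)
    out = [seed_char]
    for _ in range(length):
        x = np.zeros(V)
        x[idx] = 1.0                          # one-hot encode the current character
        h = np.tanh(x @ W_xh + h @ W_hh)      # update the recurrent state
        probs = softmax(h @ W_hy)             # probabilistic prediction
        idx = rng.choice(V, p=probs)          # sample from the output distribution
        out.append(vocab[idx])                # the sample becomes the next input
    return "".join(out)

sample = generate("a", 20)
print(repr(sample))
```

With trained weights, the same loop produces novel sequences that follow the statistics of the training data.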

With this, we have covered the main types of neural networks and their applications. Let us now look at some more specific neural network project ideas.

4. Cryptographic applications using artificial neural networks

Cryptography is concerned with maintaining computational security and avoiding data leakages in electronic communications.

Artificial neural network-based cryptographic applications represent an intriguing merging of cutting-edge technologies to address pressing problems with security, privacy, and data protection. Cryptographic applications utilising neural networks have the potential to change the face of modern cybersecurity and data protection as research in this field advances.

You can implement a project in this field by using different neural network architectures and training algorithms.

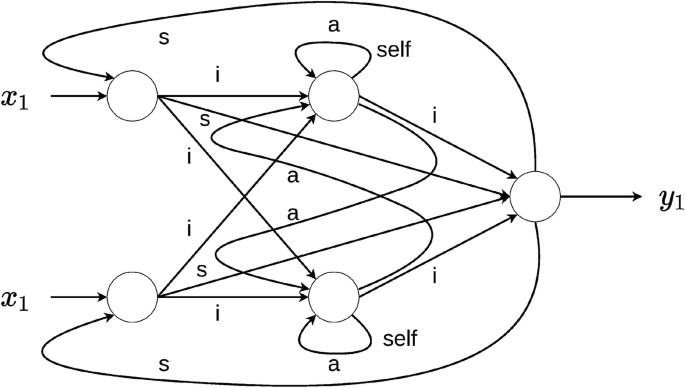

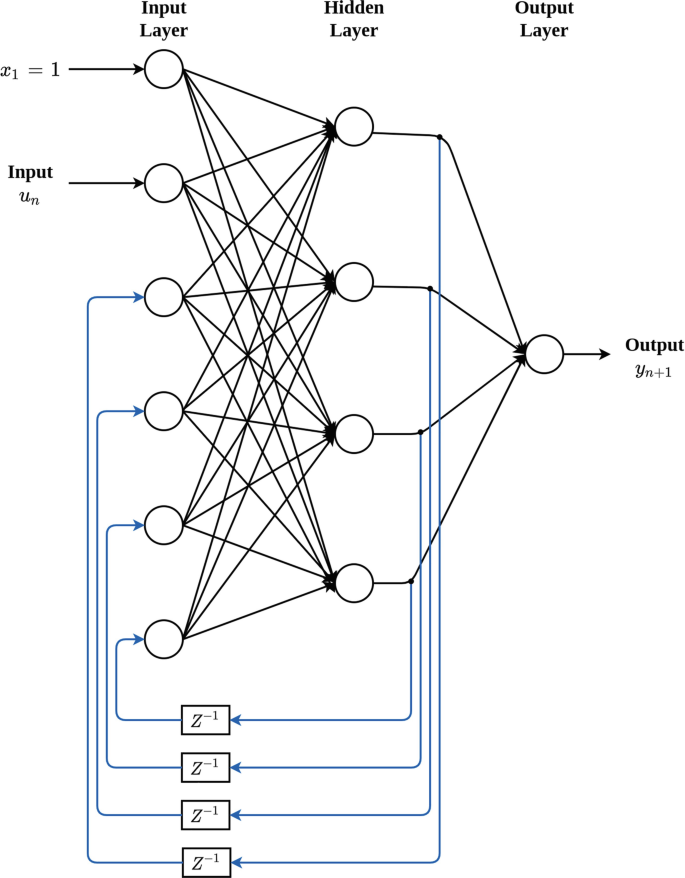

Suppose the objective of your study is to investigate the use of artificial neural networks in cryptography. For the implementation, you can use a simple recurrent structure like the Jordan network, trained by the back-propagation algorithm. You will get a finite state sequential machine, which will be used for the encryption and decryption processes. Additionally, chaotic neural nets can form an integral part of the cryptographic algorithm in such systems.

5. Credit scoring system

Loan defaulters can cause enormous losses for banks and financial institutions. Therefore, these institutions have to dedicate significant resources to assessing credit risks and classifying applications. In such a scenario, neural networks can provide an alternative to traditional statistical models.

They can offer better predictive ability and more accurate classification outcomes than techniques like logistic regression and discriminant analysis, although this depends on the data. So, consider taking up a project to test the claim. You can design a credit scoring system based on artificial neural networks and draw a conclusion for your study from the following steps:

- Extract a real-world credit card data set for analysis.

- Determine the structure of neural networks for use, such as mixture-of-experts or the radial basis function.

- Train the weights to minimize the total error.

- Explain your optimization technique or theory.

- Compare your proposed decision-support system with other credit scoring applications.
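
As a starting point for the steps above, here is a minimal sketch of a one-hidden-layer classifier trained by gradient descent. Everything in it is an assumption made for illustration: the data is synthetic (two made-up features per applicant), the network is a plain multilayer perceptron rather than a mixture-of-experts or radial basis function network, and the hyperparameters are arbitrary. It shows the mechanics of minimizing the error, not a result about real credit data.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic stand-in for a credit dataset (assumption): two scaled features
# per applicant and a binary default label with some label noise.
n = 400
X = rng.normal(size=(n, 2))
y = ((X[:, 1] - X[:, 0] + 0.3 * rng.normal(size=n)) > 0).astype(float)

hidden = 8
W1 = rng.normal(scale=0.5, size=(2, hidden))
b1 = np.zeros(hidden)
W2 = rng.normal(scale=0.5, size=hidden)
b2 = np.zeros(1)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(inputs):
    """Return hidden activations and predicted default probabilities."""
    h = np.tanh(inputs @ W1 + b1)
    return h, sigmoid(h @ W2 + b2)

def log_loss(targets, p):
    """Binary cross-entropy, clipped for numerical safety."""
    p = np.clip(p, 1e-9, 1 - 1e-9)
    return float(-np.mean(targets * np.log(p) + (1 - targets) * np.log(1 - p)))

_, p_before = forward(X)
loss_before = log_loss(y, p_before)

lr = 0.5
for _ in range(500):
    h, p = forward(X)
    d_out = (p - y) / n                         # gradient w.r.t. the output logit
    d_h = np.outer(d_out, W2) * (1 - h ** 2)    # back-propagate through tanh
    W2 -= lr * (h.T @ d_out)
    b2 -= lr * d_out.sum()
    W1 -= lr * (X.T @ d_h)
    b1 -= lr * d_h.sum(axis=0)

_, p_after = forward(X)
loss_after = log_loss(y, p_after)
accuracy = float(np.mean((p_after > 0.5) == (y == 1)))
print(f"log loss {loss_before:.3f} -> {loss_after:.3f}, training accuracy {accuracy:.2f}")
```

For an actual study you would hold out a test set and fit a logistic regression baseline on the same split, so that the comparison in the final step is fair.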

6. Web-based training environment

If you want to learn how to create an advanced web education system using modern internet and development technologies, refer to the project called Socratenon. It will give you a peek into how web-based training can go beyond traditional solutions like virtual textbooks. The project’s package has been finalized, and its techniques have been tested for their superiority over other solutions available in the open literature.

Socratenon demonstrates how existing learning environments can be improved using sophisticated tools, such as:

- User modeling to personalize content for users

- Intelligent agents to provide better assistance and search

- An intelligent back-end using neural networks and case-based reasoning

7. Vehicle security system using facial recognition

For this project, you can refer to SmartEye, a solution developed by Alfred Ritikos at Universiti Teknologi Malaysia. It covers several techniques, from facial recognition to optics and intelligent software development.

Over the years, security systems have come to benefit from many innovative products that facilitate identification, verification, and authentication of individuals. And SmartEye tries to conceptualize these processes by simulation. Also, it experiments with the existing facial recognition technologies by combining multilevel wavelet decomposition and neural networks.

8. Automatic music generation

Automatic music generation using neural networks is an interesting, cutting-edge use of artificial intelligence that has the potential to transform the music industry and creative processes. With deep learning, it is possible to make real music without knowing how to play any instruments. You can train machines to write music, harmonise tunes, and create new musical compositions. For example, you can build an automatic music generator by extracting note sequences from MIDI files and training an LSTM model to generate new compositions.
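
A sequence model such as an LSTM is usually trained on (input window, next note) pairs. The sketch below shows only that data preparation step in plain Python. The melody is a hypothetical list of MIDI pitch numbers, and the helper name `make_training_pairs` and the window length are assumptions; reading real MIDI files and building the LSTM itself are left out.

```python
def make_training_pairs(notes, window):
    """Slide a fixed-size window over a note sequence; each window predicts the next note."""
    pairs = []
    for start in range(len(notes) - window):
        pairs.append((notes[start:start + window], notes[start + window]))
    return pairs

# Hypothetical melody as MIDI pitch numbers (60 is middle C). Real data
# would come from parsing MIDI files with a library of your choice.
melody = [60, 62, 64, 65, 67, 65, 64, 62, 60]
pairs = make_training_pairs(melody, window=4)

# Map each distinct pitch to an integer class index, which is the form a
# softmax output layer expects.
pitch_to_index = {pitch: i for i, pitch in enumerate(sorted(set(melody)))}
encoded = [([pitch_to_index[p] for p in seq], pitch_to_index[nxt])
           for seq, nxt in pairs]

for seq, nxt in encoded:
    print(seq, "->", nxt)
```

At generation time the trained model predicts a distribution over the next pitch, a note is sampled from it, and the window slides forward by one position.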

OpenAI’s MuseNet is a good example of this type of project. MuseNet is a deep neural network trained to learn patterns of harmony, style, and rhythm and to predict the next tokens to generate musical compositions. It can produce four-minute-long pieces with ten different instruments and combine styles like country music and rock music.

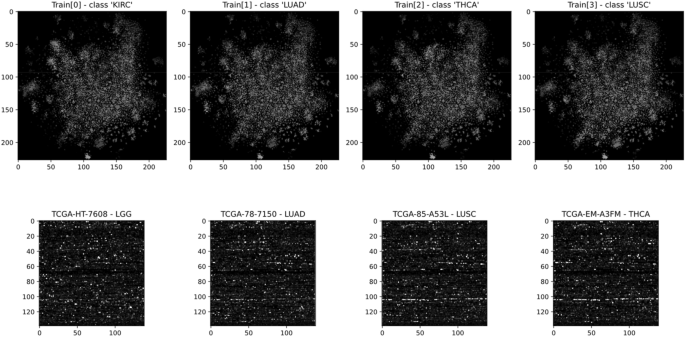

9. Application for cancer detection

Another groundbreaking idea for deep learning projects lies in medicine: the diagnosis of cancer, where neural networks hold great promise for early identification and better patient outcomes. Neural network implementations have the potential to make medical diagnosis more efficient, particularly in the field of cancer detection. There are numerous benefits to employing neural networks to detect cancer:

- Early Detection

- Speed and Efficiency

- Scalability

Since cancer cells look different from healthy cells, it is possible to detect the ailment using histology images. To learn the intricate patterns and traits associated with cancer, neural networks can be trained on large databases of medical images. This allows them to detect malignant spots more effectively and reliably. For example, a multi-layer neural network architecture allows you to classify breast tissue as malignant or benign. You can practice building this breast cancer classifier using an IDC (invasive ductal carcinoma) dataset from Kaggle, which is publicly available.

10. Text summarizer

Automatic text summarization involves condensing a piece of text into a shorter version. By leveraging neural networks, a summarizer can extract key information and essential details from documents, articles, or other textual sources, compressing the content while preserving the main ideas and context.

For this project, you will apply deep neural networks using natural language processing. The manual process of writing summaries is both laborious and time-consuming, so automatic text summarizers have gained immense importance in the area of academic research.

11. Intelligent chatbot

A fascinating use of artificial intelligence and natural language processing (NLP) is developing intelligent chatbots using neural networks. Chatbots can be created to comprehend natural language questions and answer in a human-like manner by utilising the power of neural networks, providing individualised and contextually appropriate interactions.

Modern businesses are using chatbots to take care of routine requests and enhance customer service. Some of these bots can also identify the context of the queries and then respond with relevant answers. There are several ways to implement a chatbot system.

You can implement a project on retrieval-based chatbots using NLTK and Keras. Or you can go for generative models that are based on deep neural networks and do not require predefined responses.
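
To make the retrieval-based idea concrete, here is a deliberately simple, non-neural baseline in plain Python: it matches the user's message against stored questions using bag-of-words cosine similarity and returns the predefined answer. The FAQ entries, the function names, and the similarity threshold are all hypothetical. A project built with NLTK and Keras would replace the word-count vectors with learned representations, but the retrieve-then-respond structure stays the same.

```python
import math
from collections import Counter

# Hypothetical question -> answer pairs; a real bot would load these from a file.
FAQ = {
    "what are your opening hours": "We are open from 9am to 6pm, Monday to Friday.",
    "how do i reset my password": "Use the 'Forgot password' link on the login page.",
    "where is my order": "You can track your order from the 'My orders' page.",
}

def vectorize(text):
    """Bag-of-words vector: word -> count."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def reply(message, threshold=0.3):
    """Return the answer of the most similar stored question, or a fallback."""
    query = vectorize(message)
    scored = [(cosine(query, vectorize(question)), question) for question in FAQ]
    best_score, best_question = max(scored)
    if best_score < threshold:
        return "Sorry, I did not understand that."
    return FAQ[best_question]

print(reply("how can i reset my password"))
print(reply("tell me a joke"))
```

Generative chatbots drop the fixed answer table entirely and produce the reply token by token, which is more flexible but harder to control.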

12. Human pose estimation project

This project will encompass detecting the human body in an image and then estimating its key points such as eyes, head, neck, knees, elbows, etc. It is the same kind of technology Snapchat and Instagram use to anchor face filters on a person. You can use the MPII Human Pose dataset to create your version.

13. Human activity recognition project

You can implement a neural network-based model for detecting human activities, such as sitting on a chair, falling, picking something up, or opening or closing a door. This is a video classification project, which involves combining a series of images and classifying the action. You can use a database of labeled video clips, such as 20BN-something-something.

Neural networks and deep learning have brought significant transformations to the world of artificial intelligence. Today, these methods have penetrated a wide range of industries, from medicine and biomedical systems to banking and finance to marketing and retail.

The journey of exploring neural networks has been one of the most exhilarating phases of my career. Diving into this complex yet fascinating world of artificial intelligence, I’ve had the opportunity to work on various projects, each teaching me something unique about how machines can mimic human brain functions. From these experiences, I’ve compiled a list of Neural Network Project Ideas for Beginners, aimed at helping those just starting out in this field. When I began, the challenge wasn’t just understanding the intricate details of neural networks but also finding practical projects to apply this knowledge. This article is crafted from my firsthand experiences, offering a blend of practical project ideas and topics that are perfectly suited for beginners. Whether you’re a student, a budding professional, or simply an AI enthusiast, these neural network project ideas are tailored to kickstart your journey into the world of artificial intelligence.

Pavan Vadapalli

Frequently Asked Questions (FAQs)

Artificial Intelligence (AI) projects enable machines to perform tasks that would otherwise require human intelligence. Learning, reasoning, problem-solving, and perception are all goals of these intelligent systems. Many theories, methodologies, and technologies are used in AI. Machine learning, neural networks, expert systems, cognitive technologies, human-computer interaction, and natural language processing are just a few of the subfields. Graphics processing units (GPUs), the Internet of Things (IoT), complex algorithms, and APIs are some of the other AI-supporting technologies.

AI can be divided into four categories. Reactive machines are AI systems that do not rely on prior experience to complete a task. They have no memory and respond based on what they see. IBM's chess-playing supercomputer, Deep Blue, is an example. Limited memory systems rely on past experiences in order to act in current situations. Autonomous vehicles are an example. Theory of mind refers to AI systems that would take the thoughts and emotions of others into account when making decisions. No current system is as capable of this as humans are, although the field is making substantial progress. A self-aware AI system is one that is aware of its own existence. Such systems would be aware of their own condition and able to predict the feelings of others.

Unlocking a phone with face biometrics is an example of an artificial intelligence project. The AI application can extract image attributes using deep learning. Convolutional neural networks and deep autoencoder networks are the two primary types of neural networks used. It is a four-step procedure: face detection, face alignment, feature extraction, and face recognition.

Explore Free Courses

Learn more about the education system, top universities, entrance tests, course information, and employment opportunities in Canada through this course.

Advance your career in the field of marketing with Industry relevant free courses

Build your foundation in one of the hottest industry of the 21st century

Master industry-relevant skills that are required to become a leader and drive organizational success

Build essential technical skills to move forward in your career in these evolving times

Get insights from industry leaders and career counselors and learn how to stay ahead in your career

Kickstart your career in law by building a solid foundation with these relevant free courses.

Stay ahead of the curve and upskill yourself on Generative AI and ChatGPT

Build your confidence by learning essential soft skills to help you become an Industry ready professional.

Learn more about the education system, top universities, entrance tests, course information, and employment opportunities in USA through this course.

Suggested Blogs

by venkatesh Rajanala

29 Feb 2024

by Pavan Vadapalli

27 Feb 2024

19 Feb 2024

by Kechit Goyal

18 Feb 2024

![artificial neural networks thesis topics Artificial Intelligence Salary in India [For Beginners & Experienced] in 2024](https://www.upgrad.com/__khugblog-next/image/?url=https%3A%2F%2Fd14b9ctw0m6fid.cloudfront.net%2Fugblog%2Fwp-content%2Fuploads%2F2019%2F11%2F06-banner.png&w=3840&q=75)

17 Feb 2024

![artificial neural networks thesis topics 45+ Interesting Machine Learning Project Ideas For Beginners [2024]](https://www.upgrad.com/__khugblog-next/image/?url=https%3A%2F%2Fd14b9ctw0m6fid.cloudfront.net%2Fugblog%2Fwp-content%2Fuploads%2F2019%2F07%2FBlog_FI_Machine_Learning_Project_Ideas.png&w=3840&q=75)

by Jaideep Khare

16 Feb 2024

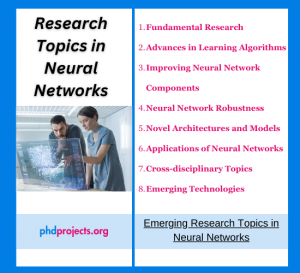

Research Topics in Neural Networks

In artificial intelligence and machine learning, neural network research is a wide and continually evolving field. Its concepts include theoretical foundations, methodological enhancements, novel frameworks, and a broad range of applications. The members of phdprojects.org work to find original and novel topics in your area and offer dedicated help in shaping a meaningful project. Below, we discuss various recent and evolving research concepts in neural networks:

Fundamental Research:

- Neural Network Theory: Our research examines the theoretical foundations of neural networks, such as their capacity, their generalization capabilities, and the reasons for their robustness across tasks.

- Optimization Methods: To train neural networks efficiently and reliably, we create novel optimization techniques.

- Neural Architecture Search (NAS): Machine learning helps us discover the best network architectures and automate the neural network design process.

- Quantum Neural Networks: We examine how quantum techniques can improve the efficiency of neural networks and analyze the intersection of neural networks and quantum computing.

Advances in Learning Techniques:

- Meta-Learning: In meta-learning, our model learns how to learn, improving its efficiency on each new task by drawing on previously gained skills.

- Federated Learning: We explore training neural networks in a distributed way across many devices while keeping the data confidential and secure.

- Reinforcement Learning: Our approach improves the methods that enable models to make sequential decisions by interacting with their environments in order to achieve a goal.

- Few-shot or Semi-supervised Learning: These techniques allow our neural network models to learn from a small labeled dataset, in the semi-supervised case supplemented by a large unlabeled dataset.
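
To illustrate the federated learning item in the list above, here is a sketch of federated averaging (FedAvg), one common aggregation rule: each device trains locally and sends only its model parameters, and the server averages them weighted by how many samples each device holds. The client parameter vectors and sample counts below are made-up numbers, and `federated_average` is a hypothetical helper name.

```python
import numpy as np

def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local sample counts."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)            # shape: (clients, parameters)
    shares = sizes / sizes.sum()                  # each client's share of the data
    return (stacked * shares[:, None]).sum(axis=0)

# Made-up parameters from three devices after a round of local training.
clients = [np.array([1.0, 2.0]), np.array([3.0, 4.0]), np.array([5.0, 6.0])]
sizes = [10, 30, 60]                              # local dataset sizes

global_weights = federated_average(clients, sizes)
print(global_weights)
```

The raw training data never leaves the devices; only the parameter vectors are shared, which is what gives the approach its privacy appeal.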

Enhancing Neural Network Components:

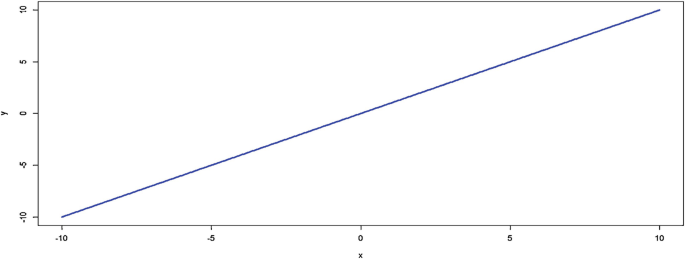

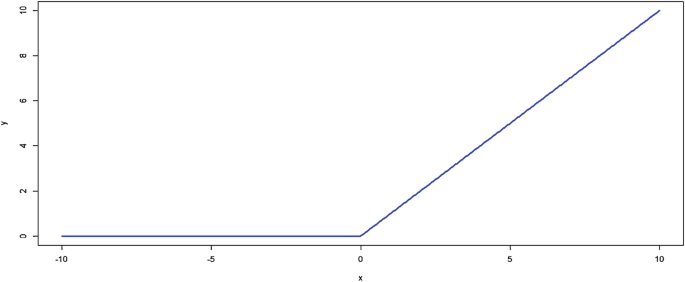

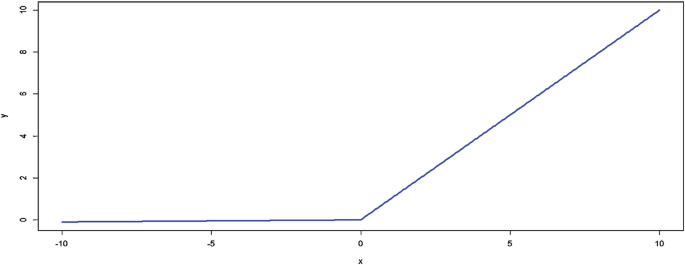

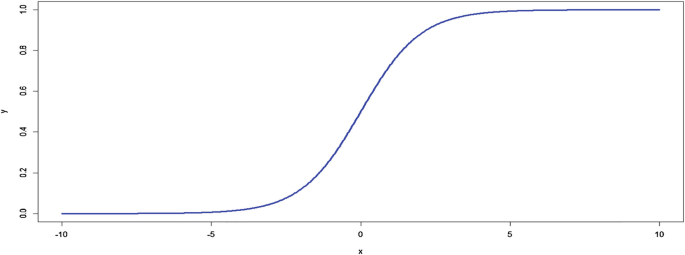

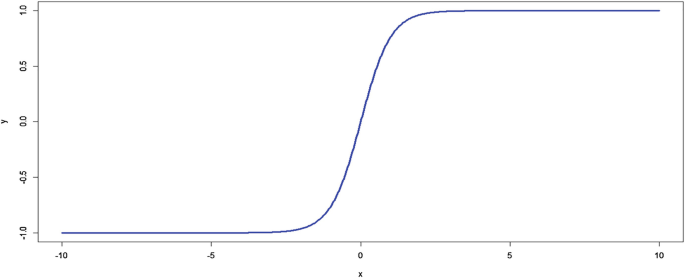

- Activation Functions: To improve the efficiency and training behavior of neural networks, we investigate various activation functions.

- Dynamic & Adaptive Networks: This concerns the development of neural networks that alter their structure and size during training based on the difficulty of the task.

- Regularization Methods: To avoid overfitting and improve the generalization of neural networks, we build novel regularization techniques.
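
For readers new to the activation functions mentioned in the list above, a few widely used ones can be written directly in NumPy. The selection and the leaky ReLU slope of 0.01 are illustrative choices, not a recommendation from the text.

```python
import numpy as np

def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Passes positive values through and zeroes out negatives."""
    return np.maximum(z, 0.0)

def leaky_relu(z, slope=0.01):
    """Like ReLU, but lets a small fraction of negative values through."""
    return np.where(z > 0, z, slope * z)

z = np.array([-2.0, 0.0, 2.0])
print("sigmoid:   ", sigmoid(z))
print("tanh:      ", np.tanh(z))
print("relu:      ", relu(z))
print("leaky relu:", leaky_relu(z))
```

The choice matters in practice because the slope of the activation determines how much gradient flows back through each unit during training.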

Neural Network Efficiency:

- Explainable AI (XAI): To make our models more transparent and trustworthy, we improve the interpretability of neural network decisions.

- Adversarial Machine Learning: Our research explores the security of neural networks, specifically their robustness against adversarial attacks, and develops defenses.

- Fault Tolerance in Neural Networks: We ensure that our neural networks remain robust even when some of their components fail or the data is corrupted.

New Architectures & Frameworks:

- Capsule Networks: We work with the capsule network framework, which aims to address the limitations of CNNs, including their inefficiency in handling spatial hierarchies.

- Spiking Neural Networks (SNN): We create neural models that closely mimic the way biological neurons process information, which may lead to more robust AI systems.

- Integrated frameworks: Our project integrates neural networks with statistical models or other machine learning methods to leverage the strengths of both.

Neural Networks Applications:

- Clinical Diagnosis: We extend the use of neural networks in clinical imaging and diagnosis, in areas such as radiology, pathology, and genomics.

- Climate Modeling: Neural networks help us interpret complicated climate systems and improve the accuracy of climate forecasting.

- Autonomous Systems: Our project aims to create neural networks for use in autonomous drones, robots, and self-driving cars.

- Neural Networks in Natural Language Processing (NLP): For tasks such as summarization, translation, and question answering, we employ the latest language models.

- Financial Modeling: Neural networks help us forecast market trends, evaluate risk, and automate business processes.

Cross-disciplinary Concepts:

- Bio-inspired Neural Networks: To develop more robust and effective neural network methods, we draw inspiration from neuroscience.

- Neural Networks for Social Good: Our research applies neural networks to social challenges such as disaster response, poverty assessment, and monitoring the spread of disease.

Evolving Approaches:

- AI for Creativity: For creative tasks such as producing art, music, design, and writing, we make use of neural networks.

- Edge AI: Optimizing neural networks helps us run our models efficiently on edge devices, such as IoT devices or smartphones, with limited computational power.

When selecting research concepts, it is important to consider the available resources, our own knowledge, and the likely impact of the project. Novel research directions often emerge through collaboration with industry, interdisciplinary communities, and institutions, which also opens up potential applications for our project.

What specific neural network architectures are being explored in the research thesis?

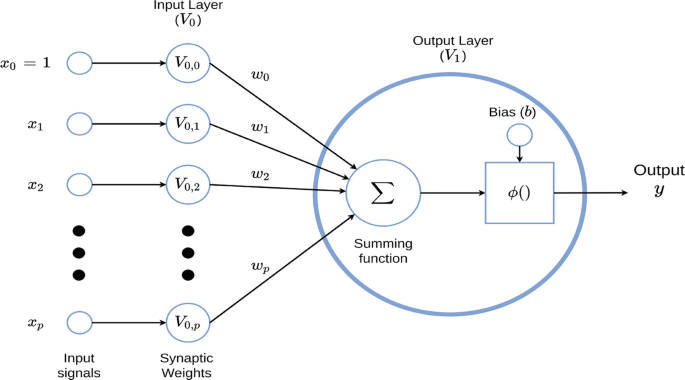

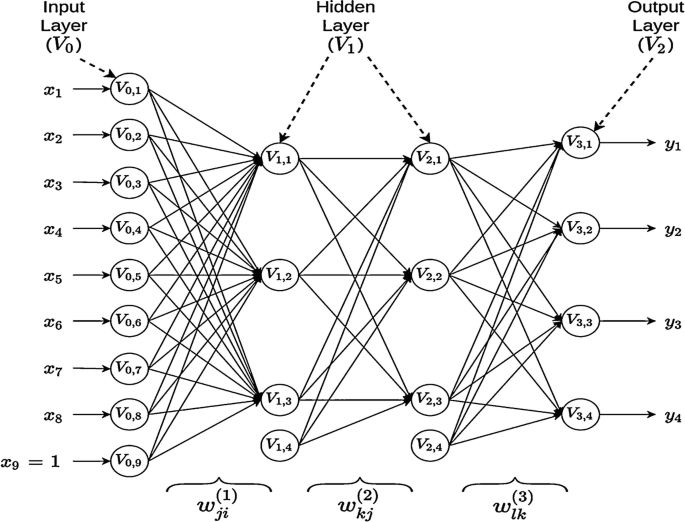

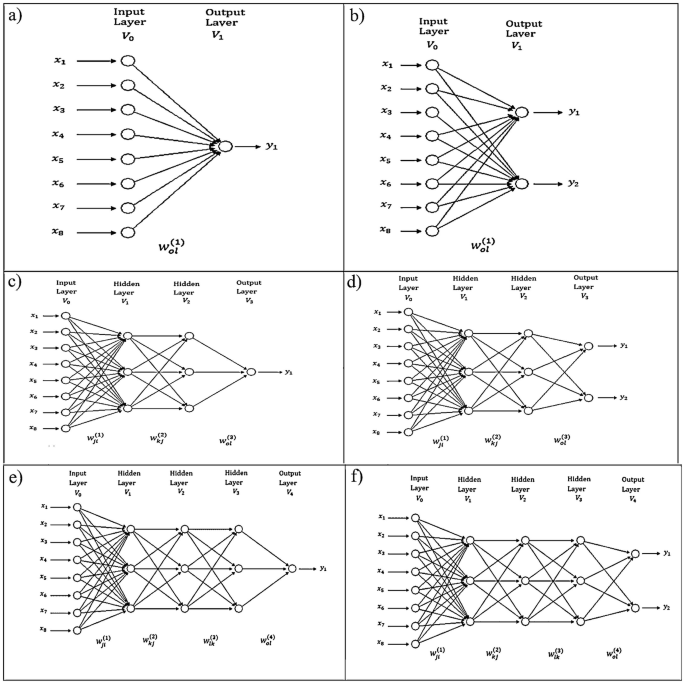

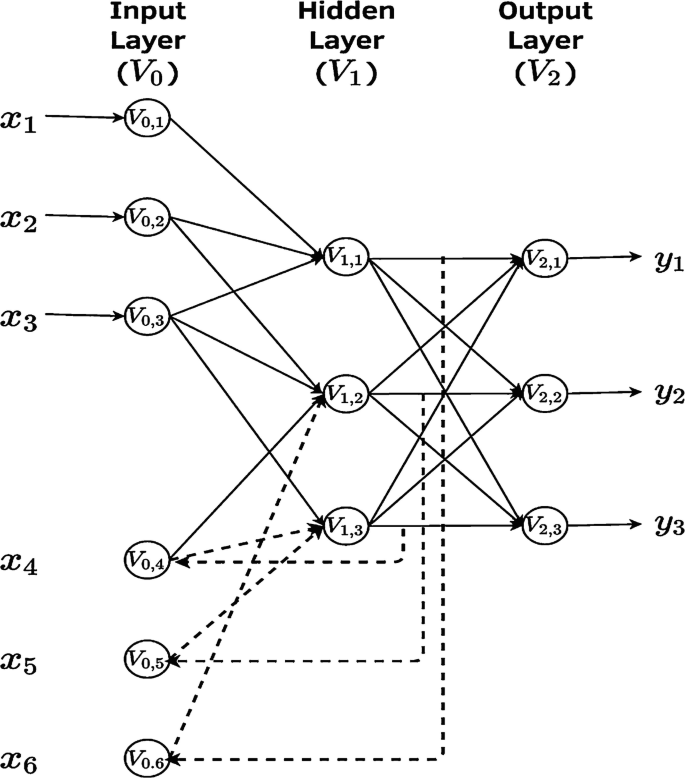

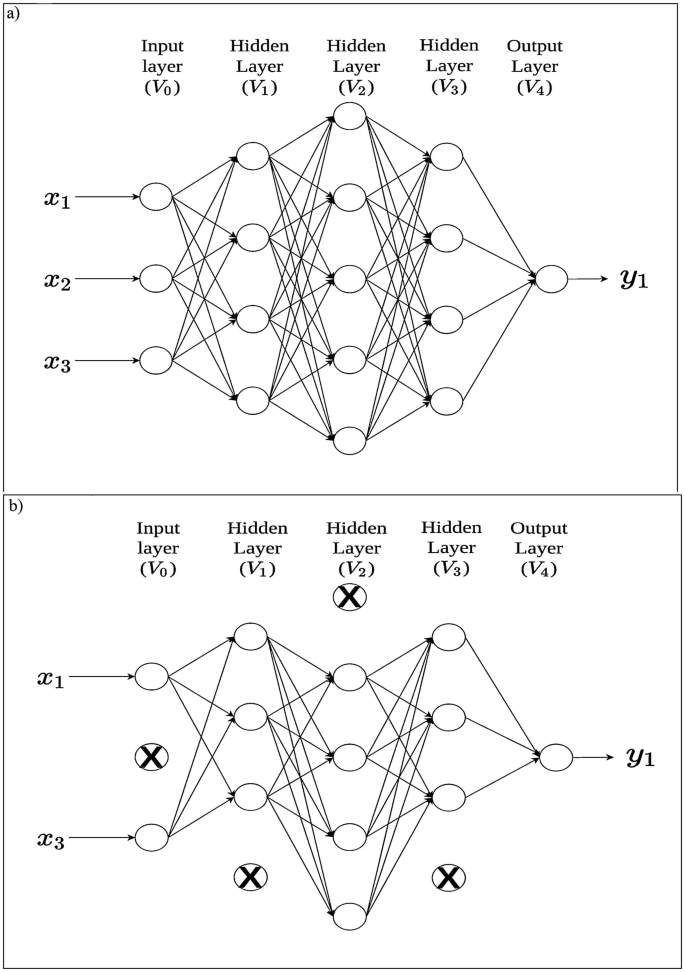

A neural network architecture uses organized layers to transform input data into meaningful representations. The input layer receives the raw data, which then undergoes mathematical operations within one or more hidden layers.

Convolutional Neural Networks (CNN) excel at image recognition tasks, while Recurrent Neural Networks (RNN) perform well on sequential data.

- Global Asymptotical Stability of Recurrent Neural Networks With Multiple Discrete Delays and Distributed Delays

- An Improved Algebraic Criterion for Global Exponential Stability of Recurrent Neural Networks With Time-Varying Delays

- Finding Features for Real-Time Premature Ventricular Contraction Detection Using a Fuzzy Neural Network System

- Improved Delay-Dependent Stability Condition of Discrete Recurrent Neural Networks With Time-Varying Delays

- Experiments in the application of neural networks to rotating machine fault diagnosis

- Flash-based programmable nonlinear capacitor for switched-capacitor implementations of neural networks

- Polynomial functions can be realized by finite size multilayer feedforward neural networks

- Convergence of Nonautonomous Cohen–Grossberg-Type Neural Networks With Variable Delays

- Analysis and Optimization of Network Properties for Bionic Topology Hopfield Neural Network Using Gaussian-Distributed Small-World Rewiring Method

- Comparing Support Vector Machines and Feedforward Neural Networks With Similar Hidden-Layer Weights

- An artificial neural network study of the relationship between arousal, task difficulty and learning

- Flow-Based Encrypted Network Traffic Classification With Graph Neural Networks

- Deriving sufficient conditions for global asymptotic stability of delayed neural networks via nonsmooth analysis-II

- Bifurcating pulsed neural networks, chaotic neural networks and parametric recursions: conciliating different frameworks in neuro-like computing

- Prediction of internal surface roughness in drilling using three feedforward neural networks – a comparison

- Comparison of two neural networks approaches to Boolean matrix factorization

- A new class of convolutional neural networks (SICoNNets) and their application of face detection

- The Guelph Darwin Project: the evolution of neural networks by genetic algorithms

- Training neural networks with threshold activation functions and constrained integer weights

- A commodity trading model based on a neural network-expert system hybrid

Artificial Neural Network Thesis Topics

Artificial Neural Network Thesis Topics have recently been explored in response to students’ interest in Artificial Neural Networks. This is one of our preeminent services, which has attracted many students and research scholars due to its ever-growing research scope. An Artificial Neural Network (ANN) is a mathematical model used to predict system performance, inspired by the function and structure of biological neural networks (its function is similar to that of the human brain and nervous system).

We have world-class engineers with us who work on every part of this domain to resolve the issues of ANN. We are well known for following university guidelines worldwide because we have tie-ups with top international colleges and universities, and our thesis writing service is delivered in all kinds of research fields. We have been developing Artificial Neural Network based projects for the past ten years; so far, we have completed 1000+ Artificial Neural Network Thesis Topics for students and research scholars.

Neural Network Topics

Artificial Neural Network topics are offered by us for budding students and research scholars. We always provide thesis topics on current trends because we are members of high-level journals like IEEE, Springer, Elsevier, and other SCI-indexed journals. Our company is an ISO 9001:2000 certified company that has written theses for students and research scholars in various countries around the world. To select Artificial Neural Network Thesis Topics, you must know about Artificial Neural Networks and their important aspects. Here we give a brief overview of ANN for your reference.

Key Features of Artificial Neural Networks

- Adaptive Learning

- Pattern extraction and detection

- Semantic meaning extraction from imprecise data

- Real-time operation

Artificial Neural Networks based Algorithms

- Feedforward Neural Networks

- Radial Basis Function Networks

- Time delay neural network

- Regulatory feedback network

- Probabilistic neural network

- Associative Neural Network

- Fully Recurrent Network

- Echo State Network

- Bi-Directional RNN

- Simple Recurrent Networks

- Stochastic Neural Networks

- Long Short Term Memory Networks

- Genetic Scale RNN

- Holographic Associative Memory

- Spiking Neural Networks

- Cascading Neural Networks

- Dynamic Neural Networks

- Neuro-Fuzzy Networks

- One Shot Associative Memory

- Instantaneously Trained Networks

- Hierarchical Temporal Memory

- Oscillating Neural Network

- Growing Neural Gas

- Counter Propagation Neural Network

- Hybridization Neural Network

- Convolutional Neural Network

List of Tasks That Use ANNs

- Classification (pattern and sequence recognition, sequential decision making, and novelty detection)

- Control (including computer numerical control)

- Data processing (clustering, filtering, compression, and blind source separation)

- Function approximation/regression analysis (modeling, fitness approximation, and time series prediction)

- Robotics (prostheses and directing manipulators)

Major Research Issues on Artificial Neural Network

- Long training periods

- Complex, time-consuming computation

- Development of robust methods

- Improve extrapolation ability

- Data with uncertainty

- Increase model transparency

Support for Matlab Toolbox

To solve ANN issues, we also use Neural Network Toolbox version 3.0. It offers the following features:

- New reduced-memory Levenberg-Marquardt algorithm [to handle large-scale problems]

- New pre-processing and post-processing functions

- New supervised networks [probabilistic and generalized regression]

- New network training algorithms [conjugate gradient, two quasi-Newton methods, and resilient backpropagation]

- Automatic creation of network simulation blocks using Simulink

Real Time Applications

- Image compression

- Security related applications

- Medical image processing applications

- Character recognition

- System identification and control

- Trajectory prediction and vehicle control

- Process control and natural resources

- Pattern classification (radar systems, object recognition, and face recognition)

- Data mining (e-mail spam filtering and knowledge discovery in databases)

- Sequence recognition (speech, handwritten text, and gesture recognition)

Future Applications of ANNs include

- Integration of Fuzzy logic with ANN

- Pulsed Artificial Neural Networks

- Hardware specialized Artificial Neural Networks

- Robots that can see, feel, and predict abnormal behavior in the world

- Music composition

- Common usage of self-driving cars

- Improved stock prediction

- Self-diagnosis of medical problems

- Automatic transformation of handwritten documents

Current Artificial Neural Network Thesis Topics

- Design and analysis of an intelligent flow transmitter based on artificial neural networks

- Inter- and intra-channel nonlinearity compensation in WDM OFDM coherent optical systems using artificial neural network based nonlinear equalization

- Empirical mode decomposition and artificial neural network based wind turbine using FAST, TurbSim and Simulink

- Artificial neural network based structural damage fault detection for profile monitoring

- Reducing common-mode voltage in a cascaded multilevel inverter using an artificial neural network

- Diffusion-based overlay measurement using artificial neural networks

- Optimization and modeling of tensile strength and yield point of a steel bar using ANN

We have covered a few major aspects of Artificial Neural Networks here, but we explore well beyond the student's level, which helps our students stand out in the field of research. We work from a research perspective, and our guidance and assistance make our students experts. Create your own style in research; let it be unique to yourself and yet identifiable to others.

Available Master's thesis topics in machine learning

Here we list topics that are available. You may also be interested in our list of completed Master's theses.

Learning and inference with large Bayesian networks

Most learning and inference tasks with Bayesian networks are NP-hard. Therefore, one often resorts to using different heuristics that do not give any quality guarantees.

Task: Evaluate quality of large-scale learning or inference algorithms empirically.

Advisor: Pekka Parviainen

Sum-product networks

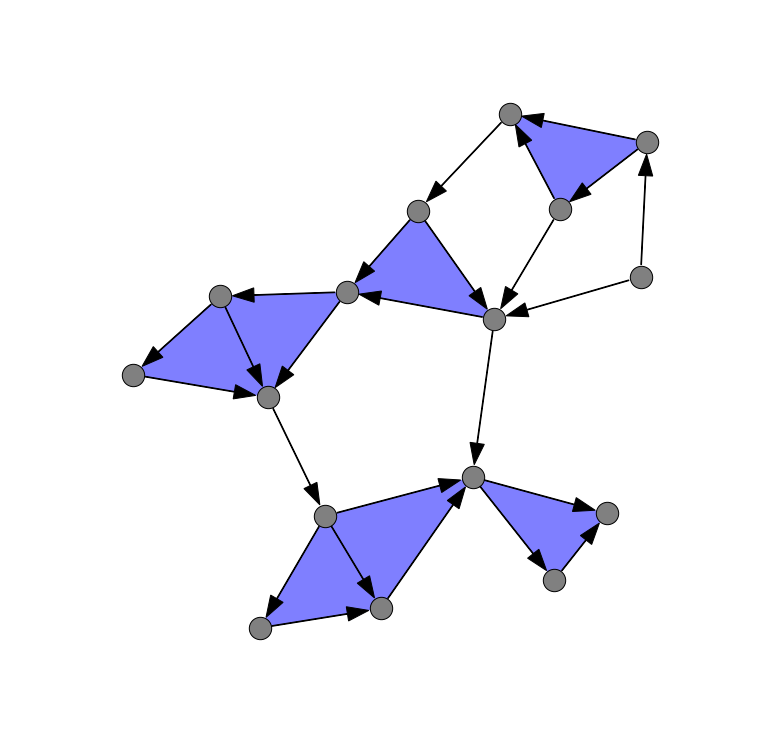

Traditionally, probabilistic graphical models use a graph structure to represent dependencies and independencies between random variables. Sum-product networks are a relatively new type of graphical model where the graph structure models computations rather than the relationships between variables. The benefit of this representation is that inference (computing conditional probabilities) can be done in linear time with respect to the size of the network.

Potential thesis topics in this area: a) Compare inference speed with sum-product networks and Bayesian networks. Characterize situations when one model is better than the other. b) Learning the sum-product networks is done using heuristic algorithms. What is the effect of approximation in practice?
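To make the linear-time claim concrete, here is a minimal sketch of bottom-up evaluation in a tiny sum-product network over two binary variables. The class names (`Leaf`, `Product`, `Sum`), the helper `bernoulli`, and all weights are illustrative assumptions, not part of any specific library or of the thesis topic itself. Each node is evaluated once (results are cached), so a marginal or joint query costs one pass over the network, and a conditional probability is the ratio of two such passes.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple, Union


@dataclass
class Leaf:
    var: str    # variable this indicator refers to
    value: int  # the indicator is 1 when var == value


@dataclass
class Product:
    children: List["Node"]


@dataclass
class Sum:
    weighted_children: List[Tuple[float, "Node"]]


Node = Union[Leaf, Product, Sum]


def evaluate(node: Node, evidence: Dict[str, int],
             cache: Optional[Dict[int, float]] = None) -> float:
    """Evaluate the network bottom-up; variables missing from `evidence`
    are marginalized out by setting their indicators to 1."""
    if cache is None:
        cache = {}
    key = id(node)
    if key in cache:  # shared sub-networks are computed only once
        return cache[key]
    if isinstance(node, Leaf):
        if node.var not in evidence:
            value = 1.0
        else:
            value = 1.0 if evidence[node.var] == node.value else 0.0
    elif isinstance(node, Product):
        value = 1.0
        for child in node.children:
            value *= evaluate(child, evidence, cache)
    else:  # Sum node
        value = sum(w * evaluate(child, evidence, cache)
                    for w, child in node.weighted_children)
    cache[key] = value
    return value


def bernoulli(var: str, p_one: float) -> Sum:
    """Hypothetical helper: a univariate distribution as a small sum node."""
    return Sum([(p_one, Leaf(var, 1)), (1.0 - p_one, Leaf(var, 0))])


# A made-up mixture of two fully factorized components over X and Y.
root = Sum([
    (0.6, Product([bernoulli("X", 0.9), bernoulli("Y", 0.2)])),
    (0.4, Product([bernoulli("X", 0.1), bernoulli("Y", 0.7)])),
])

p_x1 = evaluate(root, {"X": 1})             # marginal P(X=1)
p_x1_y1 = evaluate(root, {"X": 1, "Y": 1})  # joint P(X=1, Y=1)
p_y1_given_x1 = p_x1_y1 / p_x1              # conditional from two passes
```

The same query in a Bayesian network generally requires variable elimination or a similar algorithm whose cost depends on the graph's treewidth, which is one way to frame the comparison in topic a).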

Bayesian Bayesian networks

The naming of Bayesian networks is somewhat misleading because there is nothing Bayesian in them per se; a Bayesian network is just a representation of a joint probability distribution. One can, of course, use a Bayesian network while doing Bayesian inference. One can also learn Bayesian networks in a Bayesian way. That is, instead of finding an optimal network one computes the posterior distribution over networks.

Task: Develop algorithms for Bayesian learning of Bayesian networks (e.g., MCMC, variational inference, EM)
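The statement that a Bayesian network is just a representation of a joint distribution can be illustrated with a small sketch. The three-variable network below (rain, sprinkler, wet grass) and all of its probabilities are made-up assumptions for illustration. The joint is the product of each node's conditional probability given its parents, and a query is answered here by brute-force enumeration, which is exponential in the number of variables and only feasible for toy networks.

```python
from itertools import product

# Illustrative conditional probability tables (all numbers are assumptions).
P_RAIN = {True: 0.2, False: 0.8}
# P(sprinkler | rain): outer key is rain, inner key is sprinkler
P_SPRINKLER = {True: {True: 0.01, False: 0.99},
               False: {True: 0.4, False: 0.6}}
# P(wet = True | rain, sprinkler)
P_WET = {(True, True): 0.99, (True, False): 0.8,
         (False, True): 0.9, (False, False): 0.0}


def joint(rain: bool, sprinkler: bool, wet: bool) -> float:
    """P(rain, sprinkler, wet) = P(rain) P(sprinkler | rain) P(wet | rain, sprinkler)."""
    p_wet = P_WET[(rain, sprinkler)]
    return (P_RAIN[rain]
            * P_SPRINKLER[rain][sprinkler]
            * (p_wet if wet else 1.0 - p_wet))


def posterior_rain_given_wet() -> float:
    """P(rain = True | wet = True) by summing the joint over hidden variables."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    denominator = sum(joint(r, s, True)
                      for r, s in product((True, False), repeat=2))
    return numerator / denominator


total_mass = sum(joint(r, s, w)
                 for r, s, w in product((True, False), repeat=3))
```

Nothing in this computation is Bayesian in the statistical sense: the tables are fixed numbers. A Bayesian treatment would instead place a prior over the tables, or over the graph structure itself, and compute a posterior.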

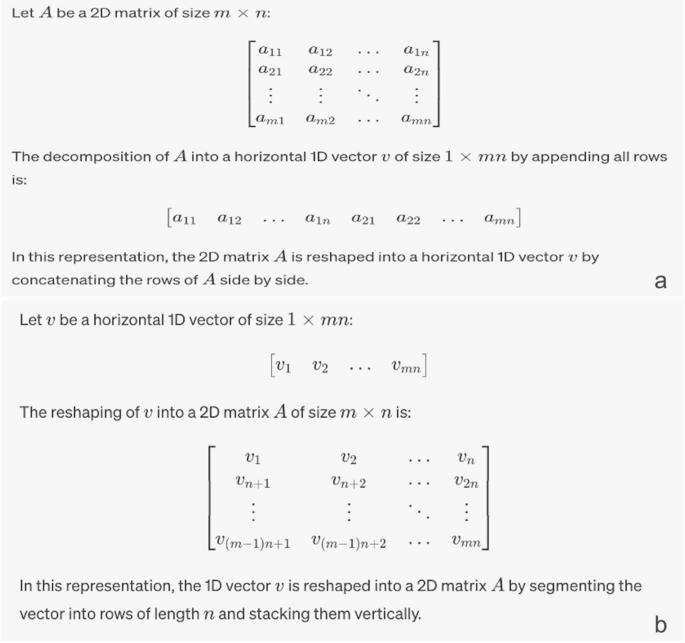

Large-scale (probabilistic) matrix factorization

The idea behind matrix factorization is to represent a large data matrix as a product of two or more smaller matrices. Such factorizations are often used in, for example, dimensionality reduction and recommendation systems. Probabilistic matrix factorization methods can be used to quantify uncertainty in recommendations. However, large-scale (probabilistic) matrix factorization is computationally challenging.

Potential thesis topics in this area: a) Develop scalable methods for large-scale matrix factorization (non-probabilistic or probabilistic), b) Develop probabilistic methods for implicit feedback (e.g., a recommendation engine when there are no rankings but only knowledge of whether a customer has bought an item)
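As a point of reference, a non-probabilistic factorization of a sparse rating matrix can be sketched with plain stochastic gradient descent. The function names, hyperparameters, and the tiny rating list are all illustrative assumptions; a scalable method would look quite different (for example, parallel or distributed updates), so this is only a baseline sketch.

```python
import math
import random


def factorize(ratings, n_rows, n_cols, rank=2, lr=0.02, reg=0.01,
              epochs=1000, seed=0):
    """Approximate the observed entries of R by U @ V.T using SGD.

    ratings: list of (row, col, value) triples for observed entries only.
    """
    rng = random.Random(seed)
    U = [[rng.uniform(-0.1, 0.1) for _ in range(rank)] for _ in range(n_rows)]
    V = [[rng.uniform(-0.1, 0.1) for _ in range(rank)] for _ in range(n_cols)]
    for _ in range(epochs):
        for i, j, r in ratings:
            pred = sum(U[i][k] * V[j][k] for k in range(rank))
            err = r - pred
            for k in range(rank):
                u, v = U[i][k], V[j][k]
                # gradient step on squared error with L2 regularization
                U[i][k] += lr * (err * v - reg * u)
                V[j][k] += lr * (err * u - reg * v)
    return U, V


def rmse(ratings, U, V):
    """Root-mean-square error on the given observed entries."""
    sq = 0.0
    for i, j, r in ratings:
        pred = sum(a * b for a, b in zip(U[i], V[j]))
        sq += (r - pred) ** 2
    return math.sqrt(sq / len(ratings))


# Made-up observations: (user, item, rating)
observed = [(0, 0, 5), (0, 1, 4), (1, 0, 4), (1, 2, 1),
            (2, 1, 5), (2, 3, 2), (3, 2, 4), (3, 3, 5)]

U0, V0 = factorize(observed, 4, 4, epochs=0)  # untrained factors
U, V = factorize(observed, 4, 4)
error_before = rmse(observed, U0, V0)
error_after = rmse(observed, U, V)
```

The L2 penalty `reg` corresponds to a Gaussian prior on the factors in the probabilistic view, but this sketch returns only point estimates and therefore says nothing about uncertainty in the recommendations.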

Bayesian deep learning

Standard deep neural networks do not quantify uncertainty in predictions. On the other hand, Bayesian methods provide a principled way to handle uncertainty. Combining these approaches leads to Bayesian neural networks. The challenge is that Bayesian neural networks can be cumbersome to use and difficult to learn.

The task is to analyze Bayesian neural networks and different inference algorithms in some simple setting.

Deep learning for combinatorial problems

Deep learning is usually applied in regression or classification problems. However, there has been some recent work on using deep learning to develop heuristics for combinatorial optimization problems; see, e.g., [1] and [2].

Task: Choose a combinatorial problem (or several related problems) and develop deep learning methods to solve them.

References: [1] Vinyals, Fortunato and Jaitly: Pointer networks. NIPS 2015. [2] Dai, Khalil, Zhang, Dilkina and Song: Learning Combinatorial Optimization Algorithms over Graphs. NIPS 2017.

Advisors: Pekka Parviainen, Ahmad Hemmati

Estimating the number of modes of an unknown function

Mode seeking considers estimating the number of local maxima of a function f. Sometimes one can find modes by, e.g., looking for points where the derivative of the function is zero. However, often the function is unknown and we have only access to some (possibly noisy) values of the function.

In topological data analysis, we can analyze topological structures using persistent homologies. For 1-dimensional signals, this can translate into looking at the birth/death persistence diagram, i.e. the birth and death of connected topological components as we expand the space around each point where we have observed our function. These observations turn out to be closely related to the modes (local maxima) of the function. A recent paper [1] proposed an efficient method for mode seeking.

In this project, the task is to extend the ideas from [1] to get a probabilistic estimate on the number of modes. To this end, one has to use probabilistic methods such as Gaussian processes.

[1] U. Bauer, A. Munk, H. Sieling, and M. Wardetzky. Persistence barcodes versus Kolmogorov signatures: Detecting modes of one-dimensional signals. Foundations of Computational Mathematics 17:1-33, 2017.

Advisors: Pekka Parviainen, Nello Blaser
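The link between birth/death persistence and modes can be sketched for a sampled 1-dimensional signal. The code below is a plain superlevel-set persistence computation with a union-find structure; it is not the method of [1] and gives no probabilistic estimate, and the function names and the example signal are assumptions for illustration. Samples are added from highest to lowest value: a sample with no previously added neighbor gives birth to a component (a local maximum), and when two components meet, the one with the lower peak dies. A peak's persistence is its height minus the level at which it dies.

```python
def peak_persistence(values):
    """Return a list of (peak_index, persistence) for a 1-D sequence.

    The global maximum never dies and gets persistence float('inf').
    """
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i], reverse=True)
    parent = [None] * n  # union-find forest; None means "not added yet"
    peak = {}            # component root -> index of its highest sample

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    pairs = []
    for i in order:
        parent[i] = i
        peak[i] = i
        for j in (i - 1, i + 1):
            if 0 <= j < n and parent[j] is not None:
                ri, rj = find(i), find(j)
                if ri == rj:
                    continue
                # the component with the lower peak dies at the current level
                if values[peak[ri]] >= values[peak[rj]]:
                    survivor, dying = ri, rj
                else:
                    survivor, dying = rj, ri
                persistence = values[peak[dying]] - values[i]
                if persistence > 0:  # skip the trivial component {i}
                    pairs.append((peak[dying], persistence))
                parent[dying] = survivor
    if n > 0:
        pairs.append((order[0], float("inf")))
    return pairs


def count_modes(values, min_persistence):
    """Count local maxima whose persistence is at least min_persistence."""
    return sum(1 for _, p in peak_persistence(values) if p >= min_persistence)


# Made-up signal: two clear peaks (indices 1 and 3) and one small bump (index 5).
signal = [0.0, 3.0, 1.0, 4.0, 0.5, 0.6, 0.0]
pairs = peak_persistence(signal)
clear_modes = count_modes(signal, min_persistence=0.5)
all_modes = count_modes(signal, min_persistence=0.05)
```

With noisy observations, the choice of `min_persistence` decides which bumps count as modes, which is where a probabilistic model such as a Gaussian process could replace the fixed threshold.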

Causal Abstraction Learning

We naturally make sense of the world around us by working out causal relationships between objects and by representing in our minds these objects with different degrees of approximation and detail. Both processes are essential to our understanding of reality, and likely to be fundamental for developing artificial intelligence. The first process may be expressed using the formalism of structural causal models, while the second can be grounded in the theory of causal abstraction.

This project will consider the problem of learning an abstraction between two given structural causal models. The primary goal will be the development of efficient algorithms able to learn a meaningful abstraction between the given causal models.

Advisor: Fabio Massimo Zennaro

Causal Bandits

"Multi-armed bandit" is an informal name for slot machines, and the formal name of a large class of problems where an agent has to choose an action among a range of possibilities without knowing the ensuing rewards. Multi-armed bandit problems are one of the most essential reinforcement learning problems where an agent is directly faced with an exploitation-exploration trade-off.

This project will consider a class of multi-armed bandits where an agent, upon taking an action, interacts with a causal system. The primary goal will be the development of learning strategies that take advantage of the underlying causal system in order to learn optimal policies in the shortest amount of time.
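The exploitation-exploration trade-off can be made concrete with a minimal epsilon-greedy agent on an ordinary (non-causal) Bernoulli bandit. The function name, the reward probabilities, and the parameters are illustrative assumptions; a causal bandit algorithm would additionally exploit knowledge of the causal system, which this baseline ignores.

```python
import random


def epsilon_greedy(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Play a Bernoulli bandit with the epsilon-greedy strategy.

    reward_probs: true success probability of each arm (unknown to the agent).
    Returns (estimates, counts, total_reward).
    """
    rng = random.Random(seed)
    n_arms = len(reward_probs)
    counts = [0] * n_arms        # number of pulls per arm
    estimates = [0.0] * n_arms   # running mean reward per arm
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick a random arm
        else:
            # exploit: pick the arm with the best current estimate
            arm = max(range(n_arms), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        # incremental update of the sample mean
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return estimates, counts, total_reward


estimates, counts, total_reward = epsilon_greedy([0.2, 0.5, 0.8])
best_arm = counts.index(max(counts))
```

A larger `epsilon` finds the best arm sooner but keeps wasting pulls on poor arms afterwards; a smaller one does the opposite. Shortening the time needed to identify good actions is the aspect that structural knowledge about the causal system is meant to improve.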

Causal Modelling for Battery Manufacturing

Lithium-ion batteries are poised to be one of the most important sources of energy in the near future. Yet, the process of manufacturing these batteries is very hard to model and control. Optimizing the different phases of production to maximize the lifetime of the batteries is a non-trivial challenge since physical models are limited in scope and collecting experimental data is extremely expensive and time-consuming.

This project will consider the problem of aggregating and analyzing data regarding a few stages in the process of battery manufacturing. The primary goal will be the development of algorithms for transporting and integrating data collected in different contexts, as well as the use of explainable algorithms to interpret them.

Reinforcement Learning for Computer Security

The field of computer security presents a wide variety of challenging problems for artificial intelligence and autonomous agents. Guaranteeing the security of a system against attacks and penetrations by malicious hackers has always been a central concern of this field, and machine learning could now offer a substantial contribution. Security capture-the-flag simulations are particularly well-suited as a testbed for the application and development of reinforcement learning algorithms.

This project will consider the use of reinforcement learning for the preventive purpose of testing systems and discovering vulnerabilities before they can be exploited. The primary goal will be the modelling of capture-the-flag challenges of interest and the development of reinforcement learning algorithms that can solve them.

Approaches to AI Safety

The world and the Internet are more and more populated by artificial autonomous agents carrying out tasks on our behalf. Many of these agents are provided with an objective and they learn their behaviour trying to achieve their objective as best as they can. However, this approach cannot guarantee that an agent, while learning its behaviour, will not undertake actions that may have unforeseen and undesirable effects. Research in AI safety tries to design autonomous agents that will behave in a predictable and safe way.

This project will consider specific problems and novel solutions in the domain of AI safety and reinforcement learning. The primary goal will be the development of innovative algorithms and their implementation within established frameworks.

Reinforcement Learning for Super-modelling

Super-modelling [1] is a technique designed for combining complex dynamical models: pre-trained models are aggregated, with messages and information being exchanged in order to synchronize the behavior of the different models and produce more accurate and reliable predictions. Super-models are used, for instance, in weather or climate science, where pre-existing models are ensembled together and their states dynamically aggregated to generate more realistic simulations.

This project will consider how reinforcement learning algorithms may be used to solve the coordination problem among the individual models forming a super-model. The primary goal will be the formulation of the super-modelling problem within the reinforcement learning framework and the study of custom RL algorithms to improve the overall performance of super-models.

[1] Schevenhoven, Francine, et al. "Supermodeling: improving predictions with an ensemble of interacting models." Bulletin of the American Meteorological Society 104.9 (2023): E1670-E1686.

Advisors: Fabio Massimo Zennaro, Francine Janneke Schevenhoven

The Topology of Flight Paths

Air traffic data tells us the position, direction, and speed of an aircraft at a given time. In other words, if we restrict our focus to a single aircraft, we are looking at a multivariate time-series. We can visualize the flight path as a curve above earth's surface quite geometrically. Topological data analysis (TDA) provides different methods for analysing the shape of data. Consequently, TDA may help us to extract meaningful features from the air traffic data. Although the typical flight path shapes may not be particularly intriguing, we can attempt to identify more intriguing patterns or “abnormal” manoeuvres, such as aborted landings, go-arounds, or diverts.

Advisors: Odin Hoff Gardå, Nello Blaser

Automatic hyperparameter selection for isomap

Isomap is a non-linear dimensionality reduction method with two free hyperparameters (number of nearest neighbors and neighborhood radius). Different hyperparameters result in dramatically different embeddings. Previous methods for selecting hyperparameters focused on choosing one optimal hyperparameter. In this project, you will explore the use of persistent homology to find parameter ranges that result in stable embeddings. The project has theoretic and computational aspects.

Advisor: Nello Blaser

Validate persistent homology

Persistent homology is a generalization of hierarchical clustering to find more structure than just the clusters. Traditionally, hierarchical clustering has been evaluated using resampling methods and assessing stability properties. In this project you will generalize these resampling methods to develop novel stability properties that can be used to assess persistent homology. This project has theoretic and computational aspects.

Topological Anscombe's quartet

This topic is based on the classical Anscombe's quartet and families of point sets with identical 1D persistence ( https://arxiv.org/abs/2202.00577 ). The goal is to generate more interesting datasets using the simulated annealing methods presented in ( http://library.usc.edu.ph/ACM/CHI%202017/1proc/p1290.pdf ). This project is mostly computational.

Persistent homology vectorization with cycle location

There are many methods of vectorizing persistence diagrams, such as persistence landscapes, persistence images, PersLay and statistical summaries. Recently we have designed algorithms that, in some cases, efficiently detect the location of persistence cycles. In this project, you will vectorize not just the persistence diagram, but additional information such as the location of these cycles. This project is mostly computational with some theoretic aspects.

Divisive covers

Divisive covers are a divisive technique for generating filtered simplicial complexes. They originally used a naive way of dividing data into a cover. In this project, you will explore different methods of dividing space, based on principal component analysis, support vector machines and k-means clustering. In addition, you will explore methods of using divisive covers for classification. This project will be mostly computational.

Learning Acquisition Functions for Cost-aware Bayesian Optimization

This is a follow-up project to an earlier Master's thesis that developed a novel method for learning acquisition functions in Bayesian optimization through the use of reinforcement learning. The goal of this project is to further generalize this method (more general input, learned cost functions) and apply it to hyperparameter optimization for neural networks.

Advisors: Nello Blaser, Audun Ljone Henriksen

Stable updates

This is a follow-up project to an earlier Master's thesis that introduced and studied empirical stability in the context of tree-based models. The goal of this project is to develop stable update methods for deep learning models. You will design several stable methods and empirically compare them (in terms of loss and stability) with a baseline and with one another.

Advisors: Morten Blørstad, Nello Blaser

Multimodality in Bayesian neural network ensembles

One method to assess uncertainty in neural network predictions is to use dropout or noise generators at prediction time and run every prediction many times. This leads to a distribution of predictions. Informatively summarizing such probability distributions is a non-trivial task and the commonly used means and standard deviations result in the loss of crucial information, especially in the case of multimodal distributions with distinct likely outcomes. In this project, you will analyze such multimodal distributions with mixture models and develop ways to exploit such multimodality to improve training. This project can have theoretical, computational and applied aspects.
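The loss of information from mean and standard deviation can be shown on synthetic data. In the sketch below, the "predictions" are simulated draws standing in for repeated stochastic forward passes of a network; the data, the function name `fit_gmm_1d`, and the initialization scheme are all assumptions made for illustration. A two-component 1-D Gaussian mixture fitted with a basic EM loop recovers the two likely outcomes, while the overall mean lands between them where almost no prediction falls.

```python
import math
import random
import statistics


def fit_gmm_1d(xs, k=2, iters=100):
    """Fit a k-component 1-D Gaussian mixture with a plain EM loop."""
    n = len(xs)
    xs_sorted = sorted(xs)
    # initialize the means at evenly spaced quantiles of the data
    means = [xs_sorted[int((c + 0.5) * n / k)] for c in range(k)]
    variances = [statistics.pvariance(xs)] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: responsibility of each component for each sample
        resp = []
        for x in xs:
            dens = [w * math.exp(-(x - m) ** 2 / (2 * v))
                    / math.sqrt(2 * math.pi * v)
                    for w, m, v in zip(weights, means, variances)]
            total = sum(dens)
            resp.append([d / total for d in dens])
        # M-step: re-estimate weights, means, and variances
        for c in range(k):
            nc = sum(r[c] for r in resp)
            weights[c] = nc / n
            means[c] = sum(r[c] * x for r, x in zip(resp, xs)) / nc
            var = sum(r[c] * (x - means[c]) ** 2
                      for r, x in zip(resp, xs)) / nc
            variances[c] = max(var, 1e-6)  # guard against collapse
    return weights, means, variances


# Simulated stand-in for many stochastic predictions of one input:
# 60% of the passes say about 0.2, 40% say about 0.8.
rng = random.Random(0)
samples = ([rng.gauss(0.2, 0.03) for _ in range(300)]
           + [rng.gauss(0.8, 0.03) for _ in range(200)])

overall_mean = statistics.mean(samples)   # close to 0.44, an unlikely outcome
overall_std = statistics.pstdev(samples)
weights, means, variances = fit_gmm_1d(samples)
```

Reporting the mixture weights and component means keeps both likely outcomes visible, which a single mean and standard deviation cannot do.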

Learning a hierarchical metric

Often, labels have defined relationships to each other, for instance in a hierarchical taxonomy. E.g. ImageNet labels are derived from the WordNet graph, and biological species are taxonomically related, and can have similarities depending on life stage, sex, or other properties.

ArcFace is an alternative loss function that aims for an embedding that is more generally useful than softmax. It is commonly used in metric learning/few shot learning cases.

Here, we will develop a metric learning method that learns from data with hierarchical labels. Using multiple ArcFace heads, we will simultaneously learn to place representations to optimize the leaf label as well as intermediate labels on the path from leaf to root of the label tree. Using taxonomically classified plankton image data, we will measure performance as a function of ArcFace parameters (sharpness/temperature and margins -- class-wise or level-wise), and compare the results to existing methods.

Advisor: Ketil Malde
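For orientation, the additive angular margin used by ArcFace can be sketched in a few lines: the embedding and the class weight vectors are L2-normalized, the margin is added to the angle of the target class only, and the scaled cosines go into an ordinary softmax cross-entropy. The function names, the default `scale` and `margin`, and the way per-level losses are summed in `hierarchical_arcface_loss` are assumptions for illustration, not the project's prescribed design. The sketch also omits the special handling some implementations apply when the angle plus margin exceeds pi.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def arcface_logits(embedding, class_weights, target, scale=30.0, margin=0.5):
    """Scaled cosine logits with an additive angular margin on the target class."""
    e = normalize(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        cos_theta = sum(a * b for a, b in zip(e, normalize(w)))
        cos_theta = max(-1.0, min(1.0, cos_theta))  # numerical safety for acos
        if j == target:
            cos_theta = math.cos(math.acos(cos_theta) + margin)
        logits.append(scale * cos_theta)
    return logits


def cross_entropy(logits, target):
    """Softmax cross-entropy computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def hierarchical_arcface_loss(embedding, heads, targets, margins):
    """Hypothetical combination: one ArcFace head per level of the label tree,
    with the per-level losses simply summed."""
    return sum(
        cross_entropy(arcface_logits(embedding, w, t, margin=m), t)
        for w, t, m in zip(heads, targets, margins)
    )


# Made-up 2-D example with two classes per level.
embedding = [1.0, 0.2]
class_weights = [[1.0, 0.0], [0.0, 1.0]]
with_margin = arcface_logits(embedding, class_weights, target=0, margin=0.5)
without_margin = arcface_logits(embedding, class_weights, target=0, margin=0.0)
loss = hierarchical_arcface_loss(
    embedding,
    heads=[class_weights, class_weights],  # e.g. leaf level and parent level
    targets=[0, 0],
    margins=[0.5, 0.3],                    # level-wise margins
)
```

The margin lowers the target logit, so the network has to pull the embedding closer to its class direction to reach the same loss; level-wise margins let coarse and fine labels be separated with different strictness.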

Self-supervised object detection in video

One challenge with learning object detection is that in many scenes that stretch off into the distance, annotating small, far-off, or blurred objects is difficult. It is therefore desirable to learn from incompletely annotated scenes, and one-shot object detectors may suffer from incompletely annotated training data.

To address this, we will use a region-proposal algorithm (e.g. SelectiveSearch) to extract potential crops from each frame. Classification will be based on two approaches: a) training based on annotated fish vs random similarly-sized crops without annotations, and b) using a self-supervised method to build a representation for crops, and building a classifier for the extracted regions. The method will be evaluated against one-shot detectors and other training regimes.

If successful, the method will be applied to fish detection and tracking in videos from baited and unbaited underwater traps, and used to estimate abundance of various fish species.

See also: Benettino (2016): https://link.springer.com/chapter/10.1007/978-3-319-48881-3_56

Representation learning for object detection

While traditional classifiers work well with data that is labeled with disjoint classes and reasonably balanced class abundances, reality is often less clean. An alternative is to learn a vector space embedding that reflects semantic relationships between objects, and to derive classes from this representation. This is especially useful for few-shot classification (i.e. very few examples in the training data).

The task here is to extend a modern object detector (e.g. YOLOv8) to output an embedding of the identified object. Instead of using a softmax classifier, we can learn the embedding in a supervised manner (using annotations on frames) by attaching an ArcFace or other supervised metric learning head. Alternatively, the representation can be learned from tracked detections over time using e.g. a contrastive loss function to keep the representation for an object (approximately) constant over time. The performance of the resulting object detector will be measured on underwater videos, targeting species detection and/or individual recognition (re-ID).

Time-domain object detection

Object detectors for video are normally trained on still frames, but it is evident (from human experience) that using time domain information is more effective. I.e., it can be hard to identify far-off or occluded objects in still images, but movement in time often reveals them.

Here we will extend a state-of-the-art object detector (e.g. YOLOv8) with time domain data. Instead of using a single frame as input, the model will be modified to take a set of frames surrounding the annotated frame as input. Performance will be compared to using single-frame detection.

Large-scale visualization of acoustic data

The Institute of Marine Research has decades of acoustic data collected in various surveys. These data are in the process of being converted to data formats that can be processed and analyzed more easily using packages like Xarray and Dask.

The objective is to make these data more accessible to regular users by providing a visual front end. The user should be able to quickly zoom in and out, perform selection, export subsets, apply various filters and classifiers, and overlay annotations and other relevant auxiliary data.

Learning acoustic target classification from simulation

Broadband echosounders emit a complex signal that spans a large frequency band. Different targets will reflect, absorb, and generate resonance at different amplitudes and frequencies, and it is therefore possible to classify targets at much higher resolution and accuracy than before. Due to the complexity of the received signals, deriving effective profiles that can be used to identify targets is difficult.

Here we will use simulated frequency spectra from geometric objects with various shapes, orientations, and other properties. We will train ML models to estimate (recover) the geometric and material properties of objects based on these spectra. The resulting model will be applied to real broadband data, and compared to traditional classification methods.

Online learning in real-time systems

Build a model for the drilling process by using the Virtual simulator OpenLab ( https://openlab.app/ ) for real-time data generation and online learning techniques. The student will also do a short survey of existing online learning techniques and learn how to cope with errors and delays in the data.

Advisor: Rodica Mihai

Building a finite state automaton for the drilling process by using queries and counterexamples

Datasets will be generated by using the Virtual simulator OpenLab ( https://openlab.app/ ). The student will study the datasets and decide upon a good setting to extract a finite state automaton for the drilling process. The student will also do a short survey of existing techniques for extracting finite state automata from process data.

Related work (abstract, from arxiv.org): "We present a novel algorithm that uses exact learning and abstraction to extract a deterministic finite automaton describing the state dynamics of a given trained RNN. We do this using Angluin's L* algorithm as a learner and the trained RNN as an oracle. Our technique efficiently extracts accurate automata from trained RNNs, even when the state vectors are large and require fine differentiation."

Scaling Laws for Language Models in Generative AI

Large Language Models (LLM) power today's most prominent language technologies in Generative AI like ChatGPT, which, in turn, are changing the way that people access information and solve tasks of many kinds.

Recent interest in scaling laws for LLMs has revealed trends in how well they perform as a function of factors such as how much training data is used, how powerful the models are, or how much computational cost is allocated. (See, for example, Kaplan et al., "Scaling Laws for Neural Language Models", 2020.)

In this project, the task is to study scaling laws for different language models with respect to one or multiple modeling factors.

Advisor: Dario Garigliotti
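A common first step when studying a scaling law is to fit a power law of the form loss = a * size^(-alpha), which is a straight line in log-log space. The sketch below does this with ordinary least squares on synthetic data; the function name, the model sizes, and the constants 5.0 and 0.08 are made-up assumptions and are not results from any paper.

```python
import math


def fit_power_law(sizes, losses):
    """Fit loss = a * size**(-alpha) by least squares on log-transformed data.

    Returns (a, alpha).
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(l) for l in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Synthetic, noise-free data generated from an assumed power law.
model_sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * n ** -0.08 for n in model_sizes]

a, alpha = fit_power_law(model_sizes, losses)
predicted_loss_at_1e10 = a * 1e10 ** -alpha  # extrapolation to a larger model
```

With real measurements the points scatter around the line, and the interesting questions are how stable the fitted exponent is across model families and over which range the extrapolation still holds.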

Applications of causal inference methods to omics data

Many hard problems in machine learning are directly linked to causality [1]. The graphical causal inference framework developed by Judea Pearl can be traced back to pioneering work by Sewall Wright on path analysis in genetics and has inspired research in artificial intelligence (AI) [1].

The Michoel group has developed the open-source tool Findr [2] which provides efficient implementations of mediation and instrumental variable methods for applications to large sets of omics data (genomics, transcriptomics, etc.). Findr works well on a recent data set for yeast [3].

We encourage students to explore promising connections between the fields of causal inference and machine learning. Feel free to contact us to discuss projects related to causal inference. Possible topics include: a) improving methods based on structural causal models, b) evaluating causal inference methods on data for model organisms, c) comparing methods based on causal models and neural network approaches.

References:

1. Schölkopf B, Causality for Machine Learning, arXiv (2019): https://arxiv.org/abs/1911.10500

2. Wang L and Michoel T. Efficient and accurate causal inference with hidden confounders from genome-transcriptome variation data. PLoS Computational Biology 13:e1005703 (2017). https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1005703

3. Ludl A and Michoel T. Comparison between instrumental variable and mediation-based methods for reconstructing causal gene networks in yeast. arXiv:2010.07417 https://arxiv.org/abs/2010.07417

Advisors: Adriaan Ludl, Tom Michoel

Space-Time Linkage of Fish Distribution to Environmental Conditions

Conditions in the marine environment, such as temperature and currents, influence the spatial distribution and migration patterns of marine species. Hence, understanding the link between environmental factors and fish behavior is crucial in predicting, e.g., how fish populations may respond to climate change. Deriving this link is challenging because it requires analysis of two types of datasets: (i) large environmental (currents, temperature) datasets that vary in space and time, and (ii) sparse and sporadic spatial observations of fish populations.

Project goal

The primary goal of the project is to develop a methodology that helps predict how the spatial distribution of two fish stocks (capelin and mackerel) changes in response to variability in the physical marine environment (ocean currents and temperature). The information can also be used to optimize data collection by minimizing time spent in spatial sampling of the populations.

The project will focus on the use of machine learning and/or causal inference algorithms. As a first step, we use synthetic (fish and environmental) data from analytic models that couple the two data sources. Because the ‘truth’ is known, we can judge the efficiency and error margins of the methodologies. We then apply the methodologies to real world (empirical) observations.

Advisors: Tom Michoel, Sam Subbey

Towards precision medicine for cancer patient stratification

On average, a drug or a treatment is effective in only about half of patients who take it. This means patients need to try several until they find one that is effective at the cost of side effects associated with every treatment. The ultimate goal of precision medicine is to provide a treatment best suited for every individual. Sequencing technologies have now made genomics data available in abundance to be used towards this goal.

In this project we will specifically focus on cancer. Most cancer patients get a particular treatment based on the cancer type and the stage, though different individuals will react differently to a treatment. It is now well established that genetic mutations cause cancer growth and spreading and, importantly, that these mutations are different in individual patients. The aim of this project is to use genomic data to allow better stratification of cancer patients and to predict the treatment most likely to work. Specifically, the project will use a machine learning approach to integrate genomic data and build a classifier for stratification of cancer patients.

Advisor: Anagha Joshi

Unraveling gene regulation from single cell data

Multi-cellularity is achieved by precise control of gene expression during development and differentiation, and aberrations of this process lead to disease. A key regulatory process in gene regulation is at the transcriptional level, where epigenetic and transcriptional regulators control the spatial and temporal expression of the target genes in response to environmental, developmental, and physiological cues obtained from a signalling cascade. The rapid advances in sequencing technology have now made it feasible to study this process by understanding the genome-wide patterns of diverse epigenetic and transcription factors, including at the single cell level.

Single cell RNA sequencing is highly important, particularly in cancer, as it allows exploration of heterogeneous tumor samples; this heterogeneity obstructs therapeutic targeting, which leads to poor survival. Despite huge clinical relevance and potential, analysis of single cell RNA-seq data is challenging. In this project, we will develop strategies to infer gene regulatory networks using network inference approaches (both supervised and unsupervised). These will primarily be tested on single cell datasets in the context of cancer.

Developing a Stress Granule Classifier

To carry out the multitude of functions 'expected' from a human cell, the cell employs a strategy of division of labour, whereby sub-cellular organelles carry out distinct functions. Thus we traditionally understand organelles as distinct units defined both functionally and physically, with a distinct shape and size range. More recently, a new class of organelles has been discovered that are assembled and dissolved on demand and are composed of liquid droplets or 'granules'. Granules show many properties characteristic of liquids, such as flow and wetting, but they can also assume many shapes and indeed also fluctuate in shape. One such liquid organelle is a stress granule (SG).

Stress granules are pro-survival organelles that assemble in response to cellular stress and are important in cancer and neurodegenerative diseases like Alzheimer's. They are liquid or gel-like and can assume varying sizes and shapes depending on their cellular composition.

In a given experiment we are able to image the entire cell over a time series of 1000 frames, from which we extract a rough estimation of the size and shape of each granule. Our current method is susceptible to noise and a granule may be falsely rejected if the boundary is drawn poorly in a small majority of frames. Ideally, we would also like to identify potentially interesting features, such as voids, in the accepted granules.

We are interested in applying a machine learning approach to develop a descriptor for a 'classic' granule and furthermore classify them into different functional groups based on disease status of the cell. This method would be applied across thousands of granules imaged from control and disease cells. We are a multi-disciplinary group consisting of biologists, computational scientists and physicists.

Advisors: Sushma Grellscheid, Carl Jones

Machine Learning based Hyperheuristic algorithm

Develop a Machine Learning based Hyper-heuristic algorithm to solve a pickup and delivery problem. A hyper-heuristic is a heuristic that chooses heuristics automatically. A hyper-heuristic seeks to automate the process of selecting, combining, generating or adapting several simpler heuristics to efficiently solve computational search problems [Handbook of Metaheuristics]. There might be multiple heuristics for solving a problem, and each heuristic has its own strengths and weaknesses. In this project, we want to use machine-learning techniques to learn the strengths and weaknesses of each heuristic while using them in an iterative search for high quality solutions, and then use them intelligently for the rest of the search. Whenever new information is gathered during the search, the hyper-heuristic algorithm automatically adjusts the heuristics.
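A minimal sketch of the selection idea, under stated assumptions: the toy problem is a closed tour over random points rather than a real pickup and delivery problem, the two low-level heuristics (`swap`, `reverse_segment`) are illustrative choices, and the weight update is a simple exponential-smoothing rule, not a method taken from the cited handbook.

```python
import math
import random

def cost(order, pts):
    """Length of the closed tour visiting pts in the given order."""
    return sum(math.dist(pts[order[i - 1]], pts[order[i]]) for i in range(len(order)))

def swap(order, rng):
    """Low-level heuristic 1: exchange two random positions."""
    i, j = rng.sample(range(len(order)), 2)
    new = order[:]
    new[i], new[j] = new[j], new[i]
    return new

def reverse_segment(order, rng):
    """Low-level heuristic 2: reverse a random segment (a 2-opt style move)."""
    i, j = sorted(rng.sample(range(len(order)), 2))
    return order[:i] + order[i:j + 1][::-1] + order[j + 1:]

def hyper_heuristic(pts, iterations=2000, seed=0):
    """Pick a heuristic per step with probability proportional to its learned weight."""
    rng = random.Random(seed)
    heuristics = [swap, reverse_segment]
    weights = [1.0] * len(heuristics)  # learned preference per heuristic
    current = list(range(len(pts)))
    current_cost = cost(current, pts)
    for _ in range(iterations):
        k = rng.choices(range(len(heuristics)), weights=weights)[0]
        candidate = heuristics[k](current, rng)
        candidate_cost = cost(candidate, pts)
        reward = 1.0 if candidate_cost < current_cost else 0.0
        # Exponential smoothing of recent success; the floor keeps exploration alive.
        weights[k] = max(0.05, 0.9 * weights[k] + 0.1 * reward)
        if candidate_cost < current_cost:
            current, current_cost = candidate, candidate_cost
    return current, current_cost, weights

demo_rng = random.Random(1)
demo_pts = [(demo_rng.random(), demo_rng.random()) for _ in range(15)]
order, final_cost, learned = hyper_heuristic(demo_pts)
print(f"final cost={final_cost:.3f}, weights={learned}")
```

The learned weights act as a running estimate of how often each heuristic has recently produced an improvement; a thesis would likely replace this rule with a richer learning model.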

Advisor: Ahmad Hemmati

Machine learning for solving satisfiability problems and applications in cryptanalysis

Advisor: Igor Semaev

Hybrid modeling approaches for well drilling with Sintef

Several topics are available.

"Flow models" are first-principles models simulating the flow, temperature and pressure in a well being drilled. Our project is exploring "hybrid approaches" where these models are combined with machine learning models that learn either from time series data from flow model runs or from real-world measurements during drilling. The goal is to better detect drilling problems such as hole cleaning, make more accurate predictions and correctly learn from and interpret real-world data.

The "surrogate model" refers to a ML model which learns to mimic the flow model by learning from the model inputs and outputs. Use cases for surrogate models include model predictions where speed is favoured over accuracy and exploration of parameter space.

Surrogate models with active Learning

While it is possible to produce a nearly unlimited amount of training data by running the flow model, the surrogate model may still perform poorly if it lacks training data in the part of the parameter space it operates in or if it "forgets" areas of the parameter space by being fed too much data from a narrow range of parameters.

The goal of this thesis is to build a surrogate model (with any architecture) for some restricted parameter range and implement an active learning approach where the ML model requests more runs from the flow model in the parts of the parameter space where they are needed the most. The end result should be a surrogate model that is quick and performs acceptably well over the whole defined parameter range.
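One way to picture such a loop is the following sketch. It rests on several assumptions: `flow_model` is a hypothetical one-parameter toy function standing in for an expensive flow-model run, the "surrogate" is a pair of trivial interpolators, and the query rule is a query-by-committee heuristic (sample where the two interpolators disagree most). A real thesis would likely use a learned model and a more principled acquisition criterion.

```python
import bisect
import math

def flow_model(p):
    """Stand-in for an expensive flow-model run (hypothetical toy function)."""
    return math.tanh(10.0 * (p - 0.7))

def linear_interp(xs, ys, p):
    """Committee member 1: piecewise-linear interpolation over sorted samples."""
    i = bisect.bisect_left(xs, p)
    if i == 0:
        return ys[0]
    if i == len(xs):
        return ys[-1]
    t = (p - xs[i - 1]) / (xs[i] - xs[i - 1])
    return ys[i - 1] + t * (ys[i] - ys[i - 1])

def nearest(xs, ys, p):
    """Committee member 2: value of the nearest sampled parameter."""
    j = min(range(len(xs)), key=lambda k: abs(xs[k] - p))
    return ys[j]

def active_learning(lo=0.0, hi=1.0, budget=12, n_candidates=201):
    """Request new flow-model runs where the two surrogates disagree most."""
    xs = [lo, hi]
    ys = [flow_model(lo), flow_model(hi)]
    candidates = [lo + (hi - lo) * k / (n_candidates - 1) for k in range(n_candidates)]
    for _ in range(budget):
        p = max(candidates,
                key=lambda c: abs(linear_interp(xs, ys, c) - nearest(xs, ys, c)))
        i = bisect.bisect_left(xs, p)
        if i < len(xs) and xs[i] == p:
            break  # best candidate is already sampled; nothing new to learn on this grid
        xs.insert(i, p)
        ys.insert(i, flow_model(p))
    return xs, ys

sampled_x, sampled_y = active_learning()
print([round(x, 3) for x in sampled_x])
```

In this toy setting the requested runs cluster around the steep region near 0.7, where a uniform sampling plan would spend most of its budget on flat regions instead.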

Surrogate models trained via adversarial learning

How best to train surrogate models from runs of the flow model is an open question. This master thesis would use the adversarial learning approach to build a surrogate model which to its "adversary" becomes indistinguishable from the output of an actual flow model run.

GPU-based Surrogate models for parameter search

While CPU speed largely stalled 20 years ago in terms of working frequency on single cores, multi-core CPUs and especially GPUs took off and delivered increases in computational power by parallelizing computations.

Modern machine learning such as deep learning takes advantage of this boom in computing power by running on GPUs.

The SINTEF flow models, in contrast, are software programs that run on a CPU and do not happen to utilize multi-core CPU functionality. The model runs advance time step by time step, and each time step relies on the results from the previous time step. The flow models are therefore fundamentally sequential and not well suited to massive parallelization.

It is, however, of interest to execute different model runs in parallel to explore parameter spaces. The use cases for this include model calibration, problem detection and hypothesis generation and testing.

The task of this thesis is to implement an ML-based surrogate model in such a way that many surrogate model outputs can be produced at the same time using a single GPU. This will likely entail some trade-off with model size and maybe some coding tricks.

Uncertainty estimates of hybrid predictions (Lots of room for creativity, might need to steer it more, needs good background literature)

When using predictions from an ML model trained on time series data, it is useful to know whether they are accurate and should be trusted. The student is challenged to develop hybrid approaches that incorporate estimates of uncertainty. Components could include reporting variance from ML ensembles trained on a diversity of time series data, implementation of conformal predictions, analysis of training data parameter ranges vs current input, etc. The output should be a "traffic light signal" roughly indicating the accuracy of the predictions.
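As a rough illustration of how two of these components could be combined into a traffic light, consider the sketch below. The function name, the thresholds `green_sd` and `yellow_sd`, and the single scalar input `x` are all hypothetical; real thresholds would need calibration against held-out data, and conformal prediction is not covered here.

```python
import statistics

def traffic_light(ensemble_predictions, x, train_min, train_max,
                  green_sd=0.05, yellow_sd=0.15):
    """Map ensemble spread and input coverage to 'green', 'yellow' or 'red'.

    Thresholds are hypothetical placeholders, not calibrated values.
    """
    if not (train_min <= x <= train_max):
        return "red"  # input lies outside the range seen during training
    spread = statistics.stdev(ensemble_predictions)  # disagreement between ensemble members
    if spread <= green_sd:
        return "green"
    if spread <= yellow_sd:
        return "yellow"
    return "red"

print(traffic_light([1.00, 1.01, 0.99], x=5.0, train_min=0.0, train_max=10.0))
print(traffic_light([1.0, 1.1, 0.9], x=5.0, train_min=0.0, train_max=10.0))
print(traffic_light([1.0, 1.01, 0.99], x=12.0, train_min=0.0, train_max=10.0))
```

Checking the input range first means that a confident but extrapolating ensemble is still flagged, which ensemble variance alone would miss.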

Transfer learning approaches

We assume that an ML model is to be used for time series prediction.

It is possible to train an ML model on a wide range of scenarios in the flow models, but we expect that, to perform well, the model also needs to see model runs representative of the type of well and drilling operation it will be used in. In this thesis the student implements a transfer learning approach, where the model is trained on general model runs and fine-tuned on the most representative data set.

(Bonus1: implementing one-shot learning, Bonus2: Using real-world data in the fine-tuning stage)

ML capable of reframing situations

When a human oversees an operation like well drilling, she has a mental model of the situation and new data such as pressure readings from the well is interpreted in light of this model. This is referred to as "framing" and is the normal mode of work. However, when a problem occurs, it becomes harder to reconcile the data with the mental model. The human then goes into "reframing", building a new mental model that includes the ongoing problem. This can be seen as a process of hypothesis generation and testing.

A computer model, however, lacks re-framing. A flow model will keep making predictions under the assumption of no problems, and a separate alarm system will use the deviation between the model predictions and reality to raise an alarm. This is in a sense how all alarm systems work, but it means that the human must discard the computer model as a tool at the same time as she's handling a crisis.

The student is given access to a flow model and a surrogate model which can learn from model runs both with and without hole cleaning and is challenged to develop a hybrid approach where the ML+flow model continuously performs hypothesis generation and testing and is able to "switch" into predictions of a hole cleaning problem and different remediations of this.
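The "switch" between hypotheses can be pictured as a model-selection step over a sliding window of recent measurements. The sketch below is only an illustration under assumed names: `select_hypothesis`, the hypothesis labels and the pressure values are invented, and mean squared error over the last few samples is one simple scoring choice among many.

```python
def select_hypothesis(observations, predictions_by_hypothesis, window=5):
    """Return the hypothesis whose predictions best match the most recent observations."""
    recent = observations[-window:]

    def mse(name):
        preds = predictions_by_hypothesis[name][-window:]
        return sum((o - p) ** 2 for o, p in zip(recent, preds)) / len(recent)

    return min(predictions_by_hypothesis, key=mse)

# Hypothetical pressure readings that start to drift upwards after the third sample.
observed = [10.0, 10.0, 10.0, 11.0, 12.0, 13.0, 14.0, 15.0]
predicted = {
    "normal": [10.0] * 8,                                  # model run assuming no problem
    "hole_cleaning_problem": [10.0, 10.0, 10.0, 11.2, 12.1, 12.9, 14.2, 15.1],
}
print(select_hypothesis(observed, predicted))
```

Re-running the selection as each new reading arrives lets the active hypothesis change once the "normal" predictions stop explaining the data, instead of only raising an alarm.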

Advisor: Philippe Nivlet at Sintef together with advisor from UiB

Explainable AI at Equinor

A master thesis within the project Machine Teaching for XAI (see https://xai.w.uib.no), carried out in collaboration between UiB and Equinor.

Advisor: One of Pekka Parviainen/Jan Arne Telle/Emmanuel Arrighi + Bjarte Johansen from Equinor.

Explainable AI at Eviny

A master thesis within the project Machine Teaching for XAI (see https://xai.w.uib.no), carried out in collaboration between UiB and Eviny.

Advisor: One of Pekka Parviainen/Jan Arne Telle/Emmanuel Arrighi + Kristian Flikka from Eviny.

If you want to suggest your own topic, please contact Pekka Parviainen, Fabio Massimo Zennaro or Nello Blaser.

Currently Open Theses Topics

We offer these current topics directly to Bachelor and Master students at TU Darmstadt, who can feel free to DIRECTLY contact the thesis advisor if they are interested in one of these topics. Excellent external students from another university may be accepted but are required to first email Jan Peters before contacting any other lab member for a thesis topic. Note that we cannot provide funding for any of these thesis projects.

We highly recommend that you take either our robotics and machine learning lectures (Robot Learning, Statistical Machine Learning) or those of our colleagues (Grundlagen der Robotik, Probabilistic Graphical Models and/or Deep Learning). Even more important to us is that you take both Robot Learning: Integrated Project, Part 1 (Literature Review and Simulation Studies) and Part 2 (Evaluation and Submission to a Conference) before doing a thesis with us.

In addition, we are usually happy to devise new topics on request to suit the abilities of excellent students. Please DIRECTLY contact the thesis advisor if you are interested in one of these topics. When you contact the advisor, it would be nice if you could mention (1) WHY you are interested in the topic (dreams, parts of the problem, etc), and (2) WHAT makes you special for the projects (e.g., class work, project experience, special programming or math skills, prior work, etc.). Supplementary materials (CV, grades, etc) are highly appreciated. Of course, such materials are not mandatory but they help the advisor to see whether the topic is too easy, just about right or too hard for you.

Only contact *ONE* potential advisor at a time! If you contact a second one without first concluding discussions with the first advisor (i.e., deciding for or against the thesis with her or him), we may not consider you at all. Only if you are super excited about two topics (and no more than two) should you send an email to both supervisors, so that the supervisors are aware of the additional interest.

FOR FB16+FB18 STUDENTS: If you are a student from another department at TU Darmstadt (e.g., ME, EE, IST), you need an additional formal supervisor who officially issues the topic. Please do not try to arrange your home department advisor by yourself but let the supervising IAS member get in touch with that person instead. Multiple professors from other departments have complained that they were asked to co-supervise before being contacted by our advising lab member.

NEW THESES START HERE

Imitation Learning for High-Speed Robot Air Hockey