Sample Size Policy for Qualitative Studies Using In-Depth Interviews

- Published: 12 September 2012

- Volume 41, pages 1319–1320 (2012)

- Shari L. Dworkin 1

In recent years, there has been an increase in submissions to the Journal that draw on qualitative research methods. This increase is welcome and indicates not only the interdisciplinarity embraced by the Journal (Zucker, 2002) but also its commitment to a wide array of methodologies.

For authors who select qualitative methods, and grounded theory and in-depth interviews in particular, many recent questions have concerned how to write a rigorous Method section. This topic will be addressed in a subsequent Editorial. At this time, however, the most common question we receive is: “How large does my sample size have to be?” I would therefore like to take this opportunity to answer this question by discussing relevant debates and then the policy of the Archives of Sexual Behavior.

The sample size used in qualitative research methods is often smaller than that used in quantitative research methods. This is because qualitative research methods are often concerned with garnering an in-depth understanding of a phenomenon or are focused on meaning (and heterogeneities in meaning), which are often centered on the how and why of a particular issue, process, situation, subculture, scene or set of social interactions. In-depth interview work is not as concerned with making generalizations to a larger population of interest and does not tend to rely on hypothesis testing but rather is more inductive and emergent in its process. As such, the aim of grounded theory and in-depth interviews is to create “categories from the data and then to analyze relationships between categories” while attending to how the “lived experience” of research participants can be understood (Charmaz, 1990, p. 1162).

There are several debates concerning what sample size is the right size for such endeavors. Most scholars argue that the concept of saturation is the most important factor to think about when mulling over sample size decisions in qualitative research (Mason, 2010). Saturation is defined by many as the point at which the data collection process no longer offers any new or relevant data. Another way to state this is that conceptual categories in a research project can be considered saturated “when gathering fresh data no longer sparks new theoretical insights, nor reveals new properties of your core theoretical categories” (Charmaz, 2006, p. 113). Saturation depends on many factors and not all of them are under the researcher’s control. Some of these include: How homogeneous or heterogeneous is the population being studied? What are the selection criteria? How much money is in the budget to carry out the study? Are there key stratifiers (e.g., conceptual, demographic) that are critical for an in-depth understanding of the topic being examined? What is the timeline that the researcher faces? How experienced is the researcher in being able to even determine when she or he has actually reached saturation (Charmaz, 2006)? Is the author carrying out theoretical sampling and is, therefore, concerned with ensuring depth on relevant concepts and examining a range of concepts and characteristics that are deemed critical for emergent findings (Glaser & Strauss, 1967; Strauss & Corbin, 1994, 2007)?

While some experts in qualitative research avoid the topic of “how many” interviews “are enough,” there is indeed variability in what is suggested as a minimum. An extremely large number of articles, book chapters, and books offer guidance and suggest anywhere from 5 to 50 participants as adequate. All of these pieces of work engage in nuanced debates when responding to the question of “how many” and frequently respond with a vague (and, actually, reasonable) “it depends.” Numerous factors are said to be important, including “the quality of data, the scope of the study, the nature of the topic, the amount of useful information obtained from each participant, the use of shadowed data, and the qualitative method and study design used” (Morse, 2000, p. 1). Others argue that the “how many” question can be the wrong question and that the rigor of the method “depends upon developing the range of relevant conceptual categories, saturating (filling, supporting, and providing repeated evidence for) those categories,” and fully explaining the data (Charmaz, 1990). Indeed, there have been countless conferences and conference sessions on these debates, reports have been written, and myriad publications are available as well (for a compilation of debates, see Baker & Edwards, 2012).

Taking all of these perspectives into account, the Archives of Sexual Behavior is putting forward a policy for authors in order to have more clarity on what is expected in terms of sample size for studies drawing on grounded theory and in-depth interviews. The policy of the Archives of Sexual Behavior will be that it adheres to the recommendation that 25–30 participants is the minimum sample size required to reach saturation and redundancy in grounded theory studies that use in-depth interviews. This number is considered adequate for publications in journals because it (1) may allow for thorough examination of the characteristics that address the research questions and for distinguishing conceptual categories of interest, (2) maximizes the possibility that enough data have been collected to clarify relationships between conceptual categories and identify variation in processes, and (3) maximizes the chances that negative cases and hypothetical negative cases have been explored in the data (Charmaz, 2006; Morse, 1994, 1995).

The Journal does not want to paradoxically and rigidly quantify sample size when the endeavor at hand is qualitative in nature and the debates on this matter are complex. However, we are providing this practical guidance. We want to ensure that more of our submissions have an adequate sample size so as to get closer to reaching the goal of saturation and redundancy across relevant characteristics and concepts. The current recommendation that is being put forward does not include any comment on other qualitative methodologies, such as content and textual analysis, participant observation, focus groups, case studies, clinical cases or mixed quantitative–qualitative methods. The current recommendation also does not apply to phenomenological studies or life history approaches. The current guidance is intended to offer one clear and consistent standard for research projects that use grounded theory and draw on in-depth interviews.

Editor’s note: Dr. Dworkin is an Associate Editor of the Journal and is responsible for qualitative submissions.

Baker, S. E., & Edwards, R. (2012). How many qualitative interviews is enough? National Center for Research Methods. Available at: http://eprints.ncrm.ac.uk/2273/.

Charmaz, K. (1990). ‘Discovering’ chronic illness: Using grounded theory. Social Science and Medicine, 30, 1161–1172.

Charmaz, K. (2006). Constructing grounded theory: A practical guide through qualitative analysis. London: Sage Publications.

Glaser, B. G., & Strauss, A. L. (1967). The discovery of grounded theory: Strategies for qualitative research. Chicago: Aldine Publishing Co.

Mason, M. (2010). Sample size and saturation in PhD studies using qualitative interviews. Forum: Qualitative Social Research, 11(3) [Article No. 8].

Morse, J. M. (1994). Designing funded qualitative research. In N. Denzin & Y. Lincoln (Eds.), Handbook of qualitative research (pp. 220–235). Thousand Oaks, CA: Sage Publications.

Morse, J. M. (1995). The significance of saturation. Qualitative Health Research, 5, 147–149.

Morse, J. M. (2000). Determining sample size. Qualitative Health Research, 10, 3–5.

Strauss, A. L., & Corbin, J. M. (1994). Grounded theory methodology. In N. K. Denzin & Y. S. Lincoln (Eds.), Handbook of qualitative research (pp. 273–285). Thousand Oaks, CA: Sage Publications.

Strauss, A. L., & Corbin, J. M. (2007). Basics of qualitative research: Techniques and procedures for developing grounded theory. Thousand Oaks, CA: Sage Publications.

Zucker, K. J. (2002). From the Editor’s desk: Receiving the torch in the era of sexology’s renaissance. Archives of Sexual Behavior, 31, 1–6.

Author information

Authors and affiliations.

Department of Social and Behavioral Sciences, University of California at San Francisco, 3333 California St., LHTS #455, San Francisco, CA, 94118, USA

Shari L. Dworkin

Dworkin, S.L. Sample Size Policy for Qualitative Studies Using In-Depth Interviews. Arch Sex Behav 41, 1319–1320 (2012). https://doi.org/10.1007/s10508-012-0016-6

Issue Date: December 2012

How many participants do I need for qualitative research?

David Renwick

For those new to the qualitative research space, there’s one question that’s usually pretty tough to figure out, and that’s the question of how many participants to include in a study. Regardless of whether it’s research as part of the discovery phase for a new product, or perhaps an in-depth canvas of the users of an existing service, researchers can often find it difficult to agree on the numbers. So is there an easy answer? Let’s find out.

Here, we’ll look into the right number of participants for qualitative research studies. If you want to know about participants for quantitative research, read Nielsen Norman Group’s article.

Getting the numbers right

So you need to run a series of user interviews or usability tests and aren’t sure exactly how many people you should reach out to. It can be a tricky situation – especially for those without much experience. Do you test a small selection of 1 or 2 people to make the recruitment process easier? Or, do you go big and test with a series of 10 people over the course of a month? The answer lies somewhere in between.

It’s often a good idea (for qualitative research methods like interviews and usability tests) to start with 5 participants and then scale up by a further 5 based on how complicated the subject matter is. You may also find it helpful to add additional participants if you’re new to user research or you’re working in a new area.

What you’re actually looking for here is what’s known as saturation.

Understanding saturation

Whether it’s qualitative research as part of a master’s thesis or as research for a new online dating app, saturation is the best metric you can use to identify when you’ve hit the right number of participants.

In a nutshell, saturation is when you’ve reached the point where adding further participants doesn’t give you any further insights. It’s true that you may still pick up on the occasional interesting detail, but all of your big revelations and learnings have come and gone. A good measure is to sit down after each session with a participant and analyze the number of new insights you’ve noted down.
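
The after-session review described above can be kept as a simple running tally. The following is a minimal sketch, assuming each session's notes have already been reduced to a set of short insight labels. The function names, the sample labels and the rule of stopping after two consecutive sessions with nothing new are illustrative assumptions, not an established standard.

```python
def new_insights_per_session(sessions):
    """Return how many previously unseen insight labels each session added."""
    seen = set()
    counts = []
    for labels in sessions:
        fresh = set(labels) - seen
        counts.append(len(fresh))
        seen |= fresh
    return counts


def saturation_session(sessions, quiet_run=2):
    """Return the 1-based session number at which saturation is declared.

    Assumed rule (hypothetical): saturation is declared once `quiet_run`
    consecutive sessions add no new labels. Returns None if not reached.
    """
    run = 0
    for number, count in enumerate(new_insights_per_session(sessions), start=1):
        run = run + 1 if count == 0 else 0
        if run >= quiet_run:
            return number
    return None


# Made-up example data: one set of insight labels per participant session.
sessions = [
    {"pricing confusion", "slow checkout"},
    {"slow checkout", "missing search"},
    {"pricing confusion", "trust in reviews"},
    {"missing search"},
    {"slow checkout", "trust in reviews"},
]

print(new_insights_per_session(sessions))  # [2, 1, 1, 0, 0]
print(saturation_session(sessions))        # 5
```

A tally like this only counts labels. Deciding whether two notes describe the same insight is still a judgment call for the researcher.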

Interestingly, in a paper titled How Many Interviews Are Enough?, authors Greg Guest, Arwen Bunce and Laura Johnson noted that saturation usually occurs with around 12 participants in homogeneous groups (meaning people in the same role at an organization, for example). However, carrying out ethnographic research on a larger domain with a diverse set of participants will almost certainly require a larger sample.

Ensuring you’ve hit the right number of participants

How do you know when you’ve reached saturation point? You have to keep conducting interviews or usability tests until you’re no longer uncovering new insights or concepts.

While this may seem to run counter to the idea of just gathering as much data from as many people as possible, there’s a strong case for focusing on a smaller group of participants. In The logic of small samples in interview-based, authors Mira Crouch and Heather McKenzie note that using fewer than 20 participants during a qualitative research study will result in better data. Why? With a smaller group, it’s easier for you (the researcher) to build strong, close relationships with your participants, which in turn leads to more natural conversations and better data.

There’s also a school of thought that you should interview 5 or so people per persona. For example, if you’re working in a company that has well-defined personas, you might want to use those as a basis for your study, and then interview 5 people for each persona. This is worth considering, and particularly important, when you have a product with very distinct user groups (e.g. students and staff, or teachers and parents).
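
The rules of thumb in this section (start with 5, add a further 5 for complicated subject matter, and recruit per persona) amount to simple arithmetic. Here is a rough sketch of that arithmetic. The function name, its parameters and the yes/no treatment of complexity are assumptions made for illustration, and the result is a starting point for recruitment, not a substitute for checking saturation.

```python
def plan_recruitment(personas, base_per_persona=5, complex_domain=False, step=5):
    """Return a starting recruitment target for each persona.

    Assumptions (illustrative only): every persona starts at
    `base_per_persona`, and a complicated domain adds `step` more.
    """
    per_persona = base_per_persona + (step if complex_domain else 0)
    return {persona: per_persona for persona in personas}


# Example personas taken from the text above.
simple_plan = plan_recruitment(["students", "staff"])
complex_plan = plan_recruitment(["students", "staff"], complex_domain=True)

print(simple_plan, sum(simple_plan.values()))    # 5 each, 10 in total
print(complex_plan, sum(complex_plan.values()))  # 10 each, 20 in total
```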

How your domain affects sample size

The scope of the topic you’re researching will change the amount of information you’ll need to gather before you’ve hit the saturation point. Your topic is also commonly referred to as the domain.

If you’re working in quite a confined domain, for example, a single screen of a mobile app or a very specific scenario, you’ll likely find interviews with 5 participants to be perfectly fine. Moving into more complicated domains, like the entire checkout process for an online shopping app, will push up your sample size.

As Mitchel Seaman notes: “Exploring a big issue like young peoples’ opinions about healthcare coverage, a broad emotional issue like postmarital sexuality, or a poorly-understood domain for your team like mobile device use in another country can drastically increase the number of interviews you’ll want to conduct.”

In-person or remote

Does the location of your participants change the number you need for qualitative user research? Well, not really – but there are other factors to consider.

- Budget: If you choose to conduct remote interviews/usability tests, you’ll likely find you’ve got lower costs as you won’t need to travel to your participants or have them travel to you. This also affects…

- Participant access: Remote qualitative research can be a lifesaver when it comes to participant access. No longer are you confined to the people you have physical access to — instead you can reach out to anyone you’d like.

- Quality: On the other hand, remote research does have its downsides. For one, you’ll likely find you’re not able to build the same kinds of relationships over the internet or phone as those in person, which in turn means you never quite get the same level of insights.

Is there value in outsourcing recruitment?

Recruitment is understandably an intensive logistical exercise with many moving parts. If you’ve ever had to recruit people for a study before, you’ll understand the need for long lead times (to ensure you have enough participants for the project) and the countless long email chains as you discuss suitable times.

Outsourcing your participant recruitment is just one way to lighten the logistical load during your research. Instead of having to go out and look for participants, you have them essentially delivered to you in the right number and with the right attributes.

We’ve got one such service at Optimal Workshop, which makes it a natural accompaniment if you’re also using our platform of UX tools.

So that’s really most of what there is to know about participant recruitment in a qualitative research context. As we said at the start, while it can appear quite tricky to figure out exactly how many people you need to recruit, it’s actually not all that difficult in reality.

Overall, the number of participants you need for your qualitative research depends on your project: how broad the domain is, how diverse your participants are, and how experienced you are with the subject. It’s important to keep saturation in mind, as well as the locale of participants. You also need to get the most you can out of what’s available to you. Remember: Some research is better than none!

Published on August 8, 2019

David Renwick

David is Optimal Workshop's Content Strategist and Editor of CRUX. You can usually find him alongside one of the office dogs 🐕 (Bella, Bowie, Frida, Tana or Steezy). Connect with him on LinkedIn.

- Published: 05 October 2018

Interviews and focus groups in qualitative research: an update for the digital age

- P. Gill 1 &

- J. Baillie 2

British Dental Journal volume 225, pages 668–672 (2018)

Highlights that qualitative research is used increasingly in dentistry. Interviews and focus groups remain the most common qualitative methods of data collection.

Suggests the advent of digital technologies has transformed how qualitative research can now be undertaken.

Suggests interviews and focus groups can offer significant, meaningful insight into participants' experiences, beliefs and perspectives, which can help to inform developments in dental practice.

Qualitative research is used increasingly in dentistry, due to its potential to provide meaningful, in-depth insights into participants' experiences, perspectives, beliefs and behaviours. These insights can subsequently help to inform developments in dental practice and further related research. The most common methods of data collection used in qualitative research are interviews and focus groups. While these are primarily conducted face-to-face, the ongoing evolution of digital technologies, such as video chat and online forums, has further transformed these methods of data collection. This paper therefore discusses interviews and focus groups in detail, outlines how they can be used in practice, how digital technologies can further inform the data collection process, and what these methods can offer dentistry.

Introduction

Traditionally, research in dentistry has primarily been quantitative in nature. 1 However, in recent years, there has been a growing interest in qualitative research within the profession, due to its potential to further inform developments in practice, policy, education and training. Consequently, in 2008, the British Dental Journal (BDJ) published a four paper qualitative research series, 2 , 3 , 4 , 5 to help increase awareness and understanding of this particular methodological approach.

Since the papers were originally published, two scoping reviews have demonstrated the ongoing proliferation in the use of qualitative research within the field of oral healthcare. 1 , 6 To date, the original four paper series continue to be well cited and two of the main papers remain widely accessed among the BDJ readership. 2 , 3 The potential value of well-conducted qualitative research to evidence-based practice is now also widely recognised by service providers, policy makers, funding bodies and those who commission, support and use healthcare research.

Besides increasing standalone use, qualitative methods are now also routinely incorporated into larger mixed method study designs, such as clinical trials, as they can offer additional, meaningful insights into complex problems that simply could not be provided by quantitative methods alone. Qualitative methods can also be used to further facilitate in-depth understanding of important aspects of clinical trial processes, such as recruitment. For example, Ellis et al. investigated why edentulous older patients, dissatisfied with conventional dentures, decline implant treatment, despite its established efficacy, and frequently refuse to participate in related randomised clinical trials, even when financial constraints are removed. 7 Through the use of focus groups in Canada and the UK, the authors found that fears of pain and potential complications, along with perceived embarrassment, exacerbated by age, are common reasons why older patients typically refuse dental implants. 7

The last decade has also seen further developments in qualitative research, due to the ongoing evolution of digital technologies. These developments have transformed how researchers can access and share information, communicate and collaborate, recruit and engage participants, collect and analyse data and disseminate and translate research findings. 8 Where appropriate, such technologies are therefore capable of extending and enhancing how qualitative research is undertaken. 9 For example, it is now possible to collect qualitative data via instant messaging, email or online/video chat, using appropriate online platforms.

These innovative approaches to research are therefore cost-effective, convenient, reduce geographical constraints and are often useful for accessing 'hard to reach' participants (for example, those who are immobile or socially isolated). 8 , 9 However, digital technologies are still relatively new and constantly evolving and therefore present a variety of pragmatic and methodological challenges. Furthermore, given their very nature, their use in many qualitative studies and/or with certain participant groups may be inappropriate and should therefore always be carefully considered. While it is beyond the scope of this paper to provide a detailed explication regarding the use of digital technologies in qualitative research, insight is provided into how such technologies can be used to facilitate the data collection process in interviews and focus groups.

In light of such developments, it is perhaps therefore timely to update the main paper 3 of the original BDJ series. As with the previous publications, this paper has been purposely written in an accessible style, to enhance readability, particularly for those who are new to qualitative research. While the focus remains on the most common qualitative methods of data collection – interviews and focus groups – appropriate revisions have been made to provide a novel perspective, and should therefore be helpful to those who would like to know more about qualitative research. This paper specifically focuses on undertaking qualitative research with adult participants only.

Overview of qualitative research

Qualitative research is an approach that focuses on people and their experiences, behaviours and opinions. 10 , 11 The qualitative researcher seeks to answer questions of 'how' and 'why', providing detailed insight and understanding, 11 which quantitative methods cannot reach. 12 Within qualitative research, there are distinct methodologies influencing how the researcher approaches the research question, data collection and data analysis. 13 For example, phenomenological studies focus on the lived experience of individuals, explored through their description of the phenomenon. Ethnographic studies explore the culture of a group and typically involve the use of multiple methods to uncover the issues. 14

While methodology is the 'thinking tool', the methods are the 'doing tools'; 13 the ways in which data are collected and analysed. There are multiple qualitative data collection methods, including interviews, focus groups, observations, documentary analysis, participant diaries, photography and videography. Two of the most commonly used qualitative methods are interviews and focus groups, which are explored in this article. The data generated through these methods can be analysed in one of many ways, according to the methodological approach chosen. A common approach is thematic data analysis, involving the identification of themes and subthemes across the data set. Further information on approaches to qualitative data analysis has been discussed elsewhere. 1

Qualitative research is an evolving and adaptable approach, used by different disciplines for different purposes. Traditionally, qualitative data, specifically interviews, focus groups and observations, have been collected face-to-face with participants. In more recent years, digital technologies have contributed to the ongoing evolution of qualitative research. Digital technologies offer researchers different ways of recruiting participants and collecting data, and offer participants opportunities to be involved in research that is not necessarily face-to-face.

Interviews

Research interviews are a fundamental qualitative research method 15 and are utilised across methodological approaches. Interviews enable the researcher to learn in depth about the perspectives, experiences, beliefs and motivations of the participant. 3 , 16 Examples include, exploring patients' perspectives of fear/anxiety triggers in dental treatment, 17 patients' experiences of oral health and diabetes, 18 and dental students' motivations for their choice of career. 19

Interviews may be structured, semi-structured or unstructured, 3 according to the purpose of the study, with less structured interviews facilitating a more in-depth and flexible interviewing approach. 20 Structured interviews are similar to verbal questionnaires and are used if the researcher requires clarification on a topic; however, they produce less in-depth data about a participant's experience. 3 Unstructured interviews may be used when little is known about a topic and involve the researcher asking an opening question; 3 the participant then leads the discussion. 20 Semi-structured interviews are commonly used in healthcare research, enabling the researcher to ask predetermined questions, 20 while ensuring the participant discusses issues they feel are important.

Interviews can be undertaken face-to-face or using digital methods when the researcher and participant are in different locations. Audio-recording the interview, with the consent of the participant, is essential for all interviews regardless of the medium as it enables accurate transcription; the process of turning the audio file into a word-for-word transcript. This transcript is the data, which the researcher then analyses according to the chosen approach.

Types of interview

Qualitative studies often utilise one-to-one, face-to-face interviews with research participants. This involves arranging a mutually convenient time and place to meet the participant, signing a consent form and audio-recording the interview. However, digital technologies have expanded the potential for interviews in research, enabling individuals to participate in qualitative research regardless of location.

Telephone interviews can be a useful alternative to face-to-face interviews and are commonly used in qualitative research. They enable participants from different geographical areas to participate and may be less onerous for participants than meeting a researcher in person. 15 A qualitative study explored patients' perspectives of dental implants and utilised telephone interviews due to the quality of the data that could be yielded. 21 The researcher needs to consider how they will audio record the interview, which can be facilitated by purchasing a recorder that connects directly to the telephone. One potential disadvantage of telephone interviews is the inability of the researcher and participant to see each other.

This can be resolved by using software for online audio and video calls, such as Skype, to conduct interviews with participants in qualitative studies. Advantages of this approach include being able to see the participant if video calls are used, which enables observation of non-verbal communication, and the fact that the software can be free to use. However, participants are required to have a device and internet connection, as well as being computer literate, potentially limiting who can participate in the study. One qualitative study explored the role of dental hygienists in reducing oral health disparities in Canada. 22 The researcher conducted interviews using Skype, which enabled dental hygienists from across Canada to be interviewed within the research budget, accommodating the participants' schedules. 22

A less commonly used approach to qualitative interviews is the use of social virtual worlds. A qualitative study accessed a social virtual world – Second Life – to explore the health literacy skills of individuals who use social virtual worlds to access health information. 23 The researcher created an avatar and interview room, and undertook interviews with participants using voice and text methods. 23 This approach to recruitment and data collection enables individuals from diverse geographical locations to participate, while remaining anonymous if they wish. Furthermore, for interviews conducted using text methods, transcription of the interview is not required as the researcher can save the written conversation with the participant, with the participant's consent. However, the researcher and participant need to be familiar with how the social virtual world works to engage in an interview this way.

Conducting an interview

Ensuring informed consent before any interview is a fundamental aspect of the research process. Participants in research must be afforded autonomy and respect; consent should be informed and voluntary. 24 Individuals should have the opportunity to read an information sheet about the study, ask questions, understand how their data will be stored and used, and know that they are free to withdraw at any point without reprisal. The qualitative researcher should take written consent before undertaking the interview. In a face-to-face interview, this is straightforward: the researcher and participant both sign copies of the consent form, keeping one each. However, this approach is less straightforward when the researcher and participant do not meet in person. A recent protocol paper outlined an approach for taking consent for telephone interviews, which involved: audio recording the participant agreeing to each point on the consent form; the researcher signing the consent form and keeping a copy; and posting a copy to the participant. 25 This process could be replicated in other interview studies using digital methods.

There are advantages and disadvantages of using face-to-face and digital methods for research interviews. Ultimately, for both approaches, the quality of the interview is determined by the researcher. 16 Appropriate training and preparation are thus required. Healthcare professionals can use their interpersonal communication skills when undertaking a research interview, particularly questioning, listening and conversing. 3 However, the purpose of an interview is to gain information about the study topic, 26 rather than offering help and advice. 3 The researcher therefore needs to listen attentively to participants, enabling them to describe their experience without interruption. 3 The use of active listening skills also helps to facilitate the interview. 14 Spradley outlined elements and strategies for research interviews, 27 which are a useful guide for qualitative researchers:

Greeting and explaining the project/interview

Asking descriptive (broad), structural (explore response to descriptive) and contrast (difference between) questions

Asymmetry between the researcher and participant talking

Expressing interest and cultural ignorance

Repeating, restating and incorporating the participant's words when asking questions

Creating hypothetical situations

Asking friendly questions

Knowing when to leave.

For semi-structured interviews, a topic guide (also called an interview schedule) is used to guide the content of the interview; an example of a topic guide is outlined in Box 1. The topic guide, usually based on the research questions, existing literature and, for healthcare professionals, their clinical experience, is developed by the research team. The topic guide should include open-ended questions that elicit in-depth information, and offer participants the opportunity to talk about issues important to them. This is vital in qualitative research where the researcher is interested in exploring the experiences and perspectives of participants. It can be useful for qualitative researchers to pilot the topic guide with the first participants, 10 to ensure the questions are relevant and understandable, and to amend the questions if required.

Regardless of the medium of interview, the researcher must consider the setting of the interview. For face-to-face interviews, this could be in the participant's home, in an office or another mutually convenient location. A quiet location is preferable to promote confidentiality, enable the researcher and participant to concentrate on the conversation, and to facilitate accurate audio-recording of the interview. For interviews using digital methods the same principles apply: a quiet, private space where the researcher and participant feel comfortable and confident to participate in an interview.

Box 1: Example of a topic guide

Study focus: Parents' experiences of brushing their child's (aged 0–5) teeth

1. Can you tell me about your experience of cleaning your child's teeth?

How old was your child when you started cleaning their teeth?

Why did you start cleaning their teeth at that point?

How often do you brush their teeth?

What do you use to brush their teeth and why?

2. Could you explain how you find cleaning your child's teeth?

Do you find anything difficult?

What makes cleaning their teeth easier for you?

3. How has your experience of cleaning your child's teeth changed over time?

Has it become easier or harder?

Have you changed how often and how you clean their teeth? If so, why?

4. Could you describe how your child finds having their teeth cleaned?

What do they enjoy about having their teeth cleaned?

Is there anything they find upsetting about having their teeth cleaned?

5. Where do you look for information/advice about cleaning your child's teeth?

What did your health visitor tell you about cleaning your child's teeth? (If anything)

What has the dentist told you about caring for your child's teeth? (If visited)

Have any family members given you advice about how to clean your child's teeth? If so, what did they tell you? Did you follow their advice?

6. Is there anything else you would like to discuss about this?

Focus groups

A focus group is a moderated group discussion on a pre-defined topic, for research purposes. 28 , 29 While not aligned to a particular qualitative methodology (for example, grounded theory or phenomenology) as such, focus groups are used increasingly in healthcare research, as they are useful for exploring collective perspectives, attitudes, behaviours and experiences. Consequently, they can yield rich, in-depth data and illuminate agreement and inconsistencies 28 within and, where appropriate, between groups. Examples include public perceptions of dental implants and subsequent impact on help-seeking and decision making, 30 and general dental practitioners' views on patient safety in dentistry. 31

Focus groups can be used alone or in conjunction with other methods, such as interviews or observations, and can therefore help to confirm, extend or enrich understanding and provide alternative insights. 28 The social interaction between participants often results in lively discussion and can therefore facilitate the collection of rich, meaningful data. However, they are complex to organise and manage, due to the number of participants, and may also be inappropriate for exploring particularly sensitive issues that many participants may feel uncomfortable about discussing in a group environment.

Focus groups are primarily undertaken face-to-face but can now also be undertaken online, using appropriate technologies such as email, bulletin boards, online research communities, chat rooms, discussion forums, social media and video conferencing. 32 Using such technologies, data collection can also be synchronous (for example, online discussions in 'real time') or, unlike traditional face-to-face focus groups, asynchronous (for example, online/email discussions in 'non-real time'). While many of the fundamental principles of focus group research are the same, regardless of how they are conducted, a number of subtle nuances are associated with the online medium, 32 some of which are discussed further in the following sections.

Focus group considerations

Some key considerations associated with face-to-face focus groups are: how many participants are required; should participants within each group know each other (or not) and how many focus groups are needed within a single study? These issues are much debated and there is no definitive answer. However, the number of focus groups required will largely depend on the topic area, the depth and breadth of data needed, the desired level of participation required 29 and the necessity (or not) for data saturation.

The optimum group size is around six to eight participants (excluding researchers), but focus groups can work effectively with between three and 14 participants. 3 If the group is too small, it may limit discussion, but if it is too large, it may become disorganised and difficult to manage. It is, however, prudent to over-recruit for a focus group by approximately two to three participants, to allow for potential non-attenders. For many researchers, particularly novice researchers, group size may also be informed by pragmatic considerations, such as the type of study, resources available and moderator experience. 28 Similar size and mix considerations exist for online focus groups. Typically, synchronous online focus groups will have around three to eight participants but, as the discussion does not happen simultaneously, asynchronous groups may have as many as 10–30 participants. 33

The topic area and potential group interaction should guide group composition considerations. Pre-existing groups, where participants know each other (for example, work colleagues) may be easier to recruit, have shared experiences and may enjoy a familiarity, which facilitates discussion and/or the ability to challenge each other courteously. 3 However, if there is a potential power imbalance within the group or if existing group norms and hierarchies may adversely affect the ability of participants to speak freely, then 'stranger groups' (that is, where participants do not already know each other) may be more appropriate. 34 , 35

Focus group management

Face-to-face focus groups should normally be conducted by two researchers: a moderator and an observer. 28 The moderator facilitates group discussion, while the observer typically monitors group dynamics, behaviours, non-verbal cues, seating arrangements and speaking order, which is essential for transcription and analysis. The same principles of informed consent, as discussed in the interview section, also apply to focus groups, regardless of medium. However, the consent process for online discussions will probably be managed somewhat differently. For example, while an appropriate participant information leaflet (and consent form) would still be required, the process is likely to be managed electronically (for example, via email) and would need to specifically address issues relating to technology (for example, anonymity and use, storage and access to online data). 32

The venue in which a face-to-face focus group is conducted should be of a suitable size, private, quiet, free from distractions and in a collectively convenient location. It should also be conducted at a time appropriate for participants, 28 as this is likely to promote attendance. As with interviews, the same ethical considerations apply (as discussed earlier). However, online focus groups may present additional ethical challenges associated with issues such as informed consent, appropriate access and secure data storage. Further guidance can be found elsewhere. 8 , 32

Before the focus group commences, the researchers should establish rapport with participants, as this will help to put them at ease and result in a more meaningful discussion. Consequently, researchers should introduce themselves, provide further clarity about the study and how the process will work in practice and outline the 'ground rules'. Ground rules are designed to assist, not hinder, group discussion and typically include: 3 , 28 , 29

Discussions within the group are confidential to the group

Only one person can speak at a time

All participants should have sufficient opportunity to contribute

There should be no unnecessary interruptions while someone is speaking

Everyone can expect to be listened to and to have their views respected

Challenging contrary opinions is appropriate, but ridiculing is not.

Moderating a focus group requires considered management and good interpersonal skills to help guide the discussion and, where appropriate, keep it sufficiently focused. Avoid, therefore, participating, leading, expressing personal opinions or correcting participants' knowledge 3 , 28 as this may bias the process. A relaxed, interested demeanour will also help participants to feel comfortable and promote candid discourse. Moderators should also prevent the discussion being dominated by any one person, ensure differences of opinions are discussed fairly and, if required, encourage reticent participants to contribute. 3 Asking open questions, reflecting on significant issues, inviting further debate, probing responses accordingly, and seeking further clarification, as and where appropriate, will help to obtain sufficient depth and insight into the topic area.

Moderating online focus groups requires comparable skills, particularly if the discussion is synchronous, as the discussion may be dominated by those who can type proficiently. 36 It is therefore important that sufficient time and respect are accorded to those who may not be able to type as quickly. Asynchronous discussions are usually less problematic in this respect, as interactions are less instant. However, moderating an asynchronous discussion presents additional challenges, particularly if participants are geographically dispersed, as they may be online at different times. Consequently, the moderator will not always be present and the discussion may therefore need to occur over several days, which can be difficult to manage and facilitate and invariably requires considerable flexibility. 32 It is also worth recognising that establishing rapport with participants via an online medium is often more challenging than face-to-face and may therefore require additional time, skills, effort and consideration.

As with research interviews, focus groups should be guided by an appropriate interview schedule, as discussed earlier in the paper. For example, the schedule will usually be informed by the review of the literature and study aims, and will merely provide a topic guide to help inform subsequent discussions. To provide a verbatim account of the discussion, focus groups must be recorded, using an audio-recorder with a good quality multi-directional microphone. While videotaping is possible, some participants may find it obtrusive, 3 which may adversely affect group dynamics. The use (or not) of a video recorder should therefore be carefully considered.

At the end of the focus group, a few minutes should be spent rounding up and reflecting on the discussion. 28 Depending on the topic area, it is possible that some participants may have revealed deeply personal issues and may therefore require further help and support, such as a constructive debrief or possibly even referral on to a relevant third party. It is also possible that some participants may feel that the discussion did not adequately reflect their views and, consequently, may no longer wish to be associated with the study. 28 Such occurrences are likely to be uncommon, but should they arise, it is important to further discuss any concerns and, if appropriate, offer them the opportunity to withdraw (including any data relating to them) from the study. Immediately after the discussion, researchers should compile notes regarding thoughts and ideas about the focus group, which can assist with data analysis and, if appropriate, any further data collection.

Qualitative research is increasingly being utilised within dental research to explore the experiences, perspectives, motivations and beliefs of participants. The contributions of qualitative research to evidence-based practice are increasingly being recognised, both as standalone research and as part of larger mixed-method studies, including clinical trials. Interviews and focus groups remain commonly used data collection methods in qualitative research, and with the advent of digital technologies, their utilisation continues to evolve. Digital methods of qualitative data collection present additional methodological, ethical and practical considerations, but also potentially offer considerable flexibility to participants and researchers. Regardless of format, qualitative methods have significant potential to inform important areas of dental practice, policy and further related research.

1. Gussy M, Dickson-Swift V, Adams J. A scoping review of qualitative research in peer-reviewed dental publications. Int J Dent Hygiene 2013; 11: 174–179.

2. Burnard P, Gill P, Stewart K, Treasure E, Chadwick B. Analysing and presenting qualitative data. Br Dent J 2008; 204: 429–432.

3. Gill P, Stewart K, Treasure E, Chadwick B. Methods of data collection in qualitative research: interviews and focus groups. Br Dent J 2008; 204: 291–295.

4. Gill P, Stewart K, Treasure E, Chadwick B. Conducting qualitative interviews with school children in dental research. Br Dent J 2008; 204: 371–374.

5. Stewart K, Gill P, Chadwick B, Treasure E. Qualitative research in dentistry. Br Dent J 2008; 204: 235–239.

6. Masood M, Thaliath E, Bower E, Newton J. An appraisal of the quality of published qualitative dental research. Community Dent Oral Epidemiol 2011; 39: 193–203.

7. Ellis J, Levine A, Bedos C et al. Refusal of implant supported mandibular overdentures by elderly patients. Gerodontology 2011; 28: 62–68.

8. Macfarlane S, Bucknall T. Digital Technologies in Research. In Gerrish K, Lathlean J (editors) The Research Process in Nursing. 7th edition. pp. 71–86. Oxford: Wiley Blackwell, 2015.

9. Lee R, Fielding N, Blank G. Online Research Methods in the Social Sciences: An Editorial Introduction. In Fielding N, Lee R, Blank G (editors) The Sage Handbook of Online Research Methods. pp. 3–16. London: Sage Publications, 2016.

10. Creswell J. Qualitative inquiry and research design: Choosing among five designs. Thousand Oaks, CA: Sage, 1998.

11. Guest G, Namey E, Mitchell M. Qualitative research: Defining and designing. In Guest G, Namey E, Mitchell M (editors) Collecting Qualitative Data: A Field Manual For Applied Research. pp. 1–40. London: Sage Publications, 2013.

12. Pope C, Mays N. Qualitative research: Reaching the parts other methods cannot reach: an introduction to qualitative methods in health and health services research. BMJ 1995; 311: 42–45.

13. Giddings L, Grant B. A Trojan Horse for positivism? A critique of mixed methods research. Adv Nurs Sci 2007; 30: 52–60.

14. Hammersley M, Atkinson P. Ethnography: Principles in Practice. London: Routledge, 1995.

15. Oltmann S. Qualitative interviews: A methodological discussion of the interviewer and respondent contexts. Forum Qualitative Sozialforschung/Forum: Qualitative Social Research 2016; 17: Art. 15.

16. Patton M. Qualitative Research and Evaluation Methods. Thousand Oaks, CA: Sage, 2002.

17. Wang M, Vinall-Collier K, Csikar J, Douglas G. A qualitative study of patients' views of techniques to reduce dental anxiety. J Dent 2017; 66: 45–51.

18. Lindenmeyer A, Bowyer V, Roscoe J, Dale J, Sutcliffe P. Oral health awareness and care preferences in patients with diabetes: a qualitative study. Fam Pract 2013; 30: 113–118.

19. Gallagher J, Clarke W, Wilson N. Understanding the motivation: a qualitative study of dental students' choice of professional career. Eur J Dent Educ 2008; 12: 89–98.

20. Tod A. Interviewing. In Gerrish K, Lacey A (editors) The Research Process in Nursing. Oxford: Blackwell Publishing, 2006.

21. Grey E, Harcourt D, O'Sullivan D, Buchanan H, Kilpatrick N. A qualitative study of patients' motivations and expectations for dental implants. Br Dent J 2013; 214: DOI: 10.1038/sj.bdj.2012.1178.

22. Farmer J, Peressini S, Lawrence H. Exploring the role of the dental hygienist in reducing oral health disparities in Canada: A qualitative study. Int J Dent Hygiene 2017; DOI: 10.1111/idh.12276.

23. McElhinney E, Cheater F, Kidd L. Undertaking qualitative health research in social virtual worlds. J Adv Nurs 2013; 70: 1267–1275.

24. Health Research Authority. UK Policy Framework for Health and Social Care Research. Available at https://www.hra.nhs.uk/planning-and-improving-research/policies-standards-legislation/uk-policy-framework-health-social-care-research/ (accessed September 2017).

25. Baillie J, Gill P, Courtenay P. Knowledge, understanding and experiences of peritonitis among patients, and their families, undertaking peritoneal dialysis: A mixed methods study protocol. J Adv Nurs 2017; DOI: 10.1111/jan.13400.

26. Kvale S. Interviews. Thousand Oaks, CA: Sage, 1996.

27. Spradley J. The Ethnographic Interview. New York: Holt, Rinehart and Winston, 1979.

28. Goodman C, Evans C. Focus Groups. In Gerrish K, Lathlean J (editors) The Research Process in Nursing. pp. 401–412. Oxford: Wiley Blackwell, 2015.

29. Shaha M, Wenzell J, Hill E. Planning and conducting focus group research with nurses. Nurse Res 2011; 18: 77–87.

30. Wang G, Gao X, Edward C. Public perception of dental implants: a qualitative study. J Dent 2015; 43: 798–805.

31. Bailey E. Contemporary views of dental practitioners' on patient safety. Br Dent J 2015; 219: 535–540.

32. Abrams K, Gaiser T. Online Focus Groups. In Fielding N, Lee R, Blank G (editors) The Sage Handbook of Online Research Methods. pp. 435–450. London: Sage Publications, 2016.

33. Poynter R. The Handbook of Online and Social Media Research. West Sussex: John Wiley & Sons, 2010.

34. Kevern J, Webb C. Focus groups as a tool for critical social research in nurse education. Nurse Educ Today 2001; 21: 323–333.

35. Kitzinger J, Barbour R. Introduction: The Challenge and Promise of Focus Groups. In Barbour R S, Kitzinger J (editors) Developing Focus Group Research. pp. 1–20. London: Sage Publications, 1999.

36. Krueger R, Casey M. Focus Groups: A Practical Guide for Applied Research. 4th ed. Thousand Oaks, CA: Sage, 2009.

Author information

Authors and affiliations.

Senior Lecturer (Adult Nursing), School of Healthcare Sciences, Cardiff University,

Lecturer (Adult Nursing) and RCBC Wales Postdoctoral Research Fellow, School of Healthcare Sciences, Cardiff University,

Corresponding author

Correspondence to P. Gill .

About this article

Cite this article.

Gill, P., Baillie, J. Interviews and focus groups in qualitative research: an update for the digital age. Br Dent J 225 , 668–672 (2018). https://doi.org/10.1038/sj.bdj.2018.815

Accepted : 02 July 2018

Published : 05 October 2018

Issue Date : 12 October 2018

DOI : https://doi.org/10.1038/sj.bdj.2018.815

Why 5 participants are okay in a qualitative study, but not in a quantitative one.

July 11, 2021

In our quantitative-usability classes (Measuring UX and ROI and Statistics for UX) we often recommend a sizeable number of participants for quantitative studies, usually more than 30. We’ve said again and again that metrics collected in qualitative usability testing are often misleading and do not generalize to the general population. (There could be exceptions, but you always need to check by calculating confidence intervals and statistical significance.) And, almost inevitably, the retort comes back: Didn’t Jakob Nielsen recommend 5 users for usability studies? If you need more users for statistical reasons, then it certainly means that the results obtained with 5 users aren’t valid, doesn’t it?

This question is so frequent that we need to address the misunderstanding.

In This Article:

- Quantitative usability studies: more than 5 participants

- Qualitative usability studies: assumptions behind the 5-user guideline

- Questioning the assumptions behind the 5-user guideline

Quantitative usability studies are usually summative in nature: their goal is to measure the usability of a system (site, application, or some other product), arriving at one or more numbers. These studies attempt to get a sense of how good an interface is for its users by looking at a variety of metrics: how many users from the general population can complete one or more top tasks, how long it takes them, how many errors they make, and how satisfied they are with their experience. They usually involve collecting values for each participant, aggregating those values in summary statistics such as averages or success rates, calculating confidence intervals for those aggregates, and reporting likely ranges for the true score for the whole population. The results of such a study may indicate that the success rate for a top task for the whole population is somewhere between 75% and 90%, with a 95% confidence level and that the task time is between 2.3 and 2.6 minutes. These ranges (in effect, confidence intervals) should be fairly narrow to convey any interesting information (knowing that a success rate is between 5% and 95% is not very helpful, is it?), and they usually are narrow only if you include a large number of participants (40 or more). Hence, the recommendation to calculate confidence intervals for all metrics collected and not to rely on summary statistics when studies contain just a few users.
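To make the effect of sample size on those ranges concrete, here is a minimal sketch that computes a 95% confidence interval for a task success rate. It assumes the Wilson score interval, which is one common choice for binomial proportions; the article does not say which interval method to use, and the function name and the sample counts below are illustrative only.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a success rate (z = 1.96 is roughly 95% confidence)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Same observed success rate (80%), hypothetical studies of different sizes.
for successes, n in [(4, 5), (32, 40), (160, 200)]:
    low, high = wilson_interval(successes, n)
    print(f"{successes}/{n} succeeded: {low:.0%} to {high:.0%}")
```

Under this method, 4 successes out of 5 gives a range of roughly 38% to 96%, which is too wide to be informative, while the same 80% success rate observed with 40 participants gives a much narrower range.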

In contrast, qualitative user studies are mostly formative: their goal is to figure out what doesn’t work in a design, fix it, and then move on with a new, better version. The new version will usually also get tested, improved on, and so forth. While it is possible to have qualitative studies that have summative goals (let’s see all that’s wrong with our current website!), most of the time they simply aim to refine an existing design iteration. Qualitative studies (even when they are summative) do not try to predict how many users will complete a task, nor do they attempt to figure out how many people will run into any specific usability issue. They are meant to identify usability problems.

In comes Jakob Nielsen’s article that recommends qualitative testing with 5 users. There are three main assumptions behind that recommendation:

- That you are trying to identify issues in a design. By definition, an issue is some usability problem that the user experiences while using the design.

- That any issue that somebody encounters is a valid one worth fixing. To make an analogy for this assumption: if one person falls into a pothole, you know you need to fix it. You don’t need 100 people to fall into it to decide it needs fixing.

- That the probability of someone encountering an issue is 31%.

Based on these assumptions, Jakob Nielsen and Tom Landauer built a mathematical model that shows that, by doing a qualitative test with 5 participants, you will identify 85% of the issues in an interface. And Jakob Nielsen has repeatedly argued (and justly so) that a good investment is to start with 5 people, find your 85% of the issues, fix them, then test again with another 5 people, and so on. It’s not worth trying to find all the issues in one test because you’ll spend too much time and money, and then you’ll be sure to introduce other issues in the redesign.
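The 85% figure can be reproduced with a short sketch. It assumes the usual reading of the model: each participant independently encounters any given issue with probability L, so the expected share of issues seen at least once by n participants is 1 − (1 − L)^n. The function name is illustrative, not taken from the original article.

```python
def proportion_found(L: float, n: int) -> float:
    """Expected share of issues seen at least once by n participants,
    assuming each participant hits any given issue with probability L."""
    if not 0 <= L <= 1:
        raise ValueError("L must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1 - (1 - L) ** n


# With L = 31%, print the expected share found for 1 to 10 participants.
for n in range(1, 11):
    print(f"{n:2d} participants: {proportion_found(0.31, n):.0%}")
```

With L = 0.31 and 5 participants the result is about 0.84, which the article rounds to 85%. Each additional participant adds less than the previous one, which is the argument for several small rounds of testing rather than one large one.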

Note that the “metrics” collected in the quantitative and qualitative studies are very different: in quantitative studies you’re interested in how your general population will fare on measures such as task success, errors, satisfaction, and task time. In qualitative studies, you’re simply counting usability issues. And, while there is a statistical uncertainty about any number obtained from a quantitative study (how will the average obtained from my study compare with the average of the general population?), there is absolutely no uncertainty in a qualitative study: any error identified is a legit problem that needs to be fixed.

I gave you a list of assumptions on which the 5-user guideline is based. However, you may not agree with (some of) them. I don’t think there’s much to argue about the first assumption, but you may bring some valid objections to the second and third one.

Does any error that someone encounters need to be fixed? One may argue that if 1000 out of 1000 people fall into a pothole, you do need to repair it, but not if only one person out of 1000 falls into it. With a qualitative usability study, you have no guarantee (based on only the study) that an identified issue is likely to be encountered by more users than the ones that happened to come to your study. So, in that sense, the results cannot be generalized to the whole population.

Yes, if you wanted you could run a quantitative study to predict how many people in the general population are likely to encounter a particular error. And, then, yes, you could prioritize errors based on how likely they are and fix the ones with the highest priority. While that approach is certainly very sound, it’s probably also going to be very wasteful: you will need to test your design with a pretty large number of users to identify its main problems, then fix them, and introduce other ones that will need to be identified and prioritized.

Instead, the qualitative approach assumes that designers will use some other means to prioritize among different issues: maybe some of them are too expensive to fix, or others are related to functionality that only a few of your users are likely to use. Qualitative user testing simply gives you a list of problems. It is the researcher’s job to prioritize among the different issues and move on.

Is the chance of encountering a problem in an interface 31%? The 31% number was based on an average across several projects run in the early 90s. It is possible that, since then, the chance of encountering an issue has changed. It’s also possible that, as you’re doing more design iterations and fixing more and more errors, the usability of your product is substantially better and, in fact, it’s more difficult to encounter new issues.

The good news is that the chance of encountering an error in an interface is only a parameter in Nielsen and Landauer’s model. So, if you know that your interface is pretty good, you can simply insert your desired probability in that model. The number of users will be given by this equation:

$$N = \frac{\log(0.15)}{\log(1 - L)}$$

where N is the number of users needed to find 85% of the problems (0.15 is the remaining 15%) and L is your estimated probability of encountering an error in an interface, expressed as a decimal (i.e., 31% is entered as .31).

For example, if L is 20%, you would need 9 users to find 85% of the problems in the interface. If L is 10%, then you’d need 18 users. The more usable your interface is, the more users you need to include in the test to identify 85% of the usability problems.
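These figures can be checked with a small sketch. It assumes the model's relationship 1 − (1 − L)^N = 0.85, solved for N, which gives N = log(0.15) / log(1 − L). The function name and the `target` parameter are illustrative additions, not part of the original article.

```python
import math


def users_needed(L: float, target: float = 0.85) -> float:
    """Number of users N that solves 1 - (1 - L)**N = target (not rounded)."""
    if not 0 < L < 1:
        raise ValueError("L must be strictly between 0 and 1")
    if not 0 < target < 1:
        raise ValueError("target must be strictly between 0 and 1")
    return math.log(1 - target) / math.log(1 - L)


for L in (0.31, 0.20, 0.10):
    n = users_needed(L)
    print(f"L = {L:.0%}: N = {n:.2f}, about {round(n)} users")
```

Rounding to the nearest whole user reproduces the figures quoted here (5, 9 and 18). Rounding up instead would be slightly more conservative, for example 19 rather than 18 users when L is 10%, because the unrounded value is just above 18.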

However, your real goal is not to find a particular percentage of problems, but to maximize the business value of your user-research program. It turns out that peak ROI is fairly insensitive to variations in model parameters. If you are testing a terrible design for the very first time, your expenses will be low (it will be very easy to identify usability problems) and your gains will be high (the product will be hugely improved). Conversely, if you are researching a difficult problem, your expenses will be higher and your gains will be lower. However, the point that maximizes the ratio between gains and expenses (i.e., ROI) will still usually be around 5 test users, even though your study profitability will be higher for the easy study and lower for the harder study.

In general, it’s a good idea to start with 5 users, fix the errors that you find, and then slowly increase the number of users on further iterations if you think that you’ve made great progress. But, in practice, you can easily get a sense of how much insight you’ve found with 5 users. If you feel that it is not much, by all means, include a few additional users. Conversely, you can test with fewer than 5 users under other circumstances, such as when you can proceed to testing the next iteration very quickly. But if you have plenty of issues that you need to work on, first fix those, then move on.

There is no contradiction between the 5-user guideline for qualitative user testing and the idea that you cannot trust metrics obtained from small studies, because you do not collect metrics in a qualitative study. Quantitative and qualitative user studies have different goals:

- Quantitative studies aim to find metrics that predict the behavior of the whole population; such numbers will be imprecise — and thus useless — if they are based on a small sample size.

- Qualitative studies aim for insights: to identify usability issues in an interface. Researchers must use judgment rather than numbers to prioritize these issues. (And, to hammer home the point: the 5-user guideline only applies to qualitative, not to quantitative studies.)

If your interface has already gone through many rounds of testing, you may need to include more people even in a qualitative test, as the chance of encountering a problem may be smaller than the original assumptions of the model. Still, it’s good practice to start with 5 users and then increase the number if there are too few important findings.

Qualitative Data Coding

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

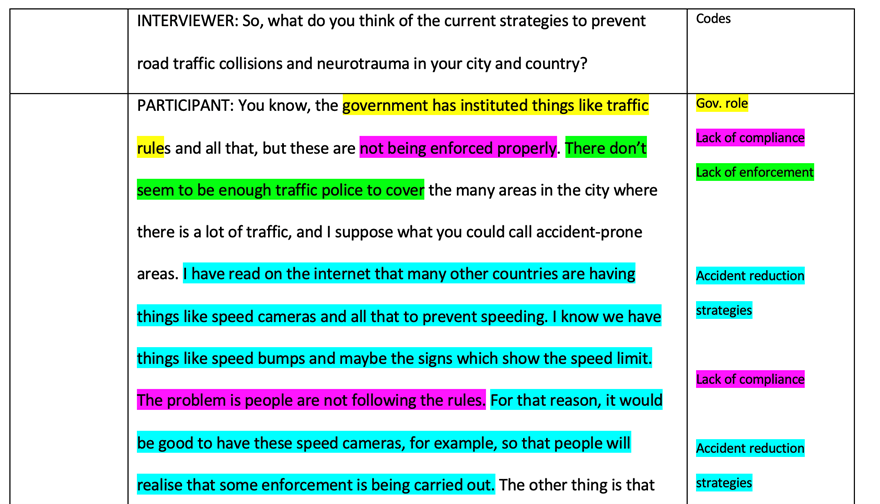

Coding is the process of analyzing qualitative data (usually text) by assigning labels (codes) to chunks of data that capture their essence or meaning. It allows you to condense, organize and interpret your data.

A code is a word or brief phrase that captures the essence of why you think a particular bit of data may be useful. A good analogy is that a code describes data like a hashtag describes a tweet.

Coding is an iterative process, with researchers refining and revising their codes as their understanding of the data evolves.

The ultimate goal is to develop a coherent and meaningful coding scheme that captures the richness and complexity of the participants’ experiences and helps answer the research questions.

Step 1: Familiarize yourself with the data

- Read through your data (interview transcripts, field notes, documents, etc.) several times. This process is called immersion.

- Think and reflect on what may be important in the data before making any firm decisions about ideas or potential patterns.

Step 2: Decide on your coding approach

- Will you use predefined deductive codes (based on theory or prior research), or let codes emerge from the data (inductive coding)?

- Will a piece of data have one code or multiple?

- Will you code everything or selectively? Broader research questions may warrant coding more comprehensively.

If you decide not to code everything, it’s crucial to:

- Have clear criteria for what you will and won’t code

- Be transparent about your selection process in research reports

- Remain open to revisiting uncoded data later in analysis

Step 3: Do a first round of coding

- Go through the data and assign initial codes to chunks that stand out

- Create a code name (a word or short phrase) that captures the essence of each chunk

- Keep a codebook – a list of your codes with descriptions or definitions

- Be open to adding, revising or combining codes as you go
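
A codebook of the kind described in Step 3 can be kept in a spreadsheet or in dedicated software, but its structure is simple enough to sketch in a few lines. The following is a hypothetical illustration only: the `Codebook` class and its method names are assumptions made for this example, not a standard tool. It stores a definition for each code, records which chunks of data each code was applied to, and allows two codes to be combined later.

```python
from dataclasses import dataclass, field


@dataclass
class Codebook:
    """Hypothetical codebook: code names, their definitions, and coded chunks."""
    definitions: dict[str, str] = field(default_factory=dict)
    segments: dict[str, list[str]] = field(default_factory=dict)

    def add_code(self, name: str, definition: str) -> None:
        """Register a new code together with its description."""
        self.definitions[name] = definition
        self.segments.setdefault(name, [])

    def apply(self, name: str, segment: str) -> None:
        """Attach an existing code to a chunk of data."""
        if name not in self.definitions:
            raise KeyError(f"Unknown code: {name!r}; add it to the codebook first")
        self.segments[name].append(segment)

    def merge(self, old: str, into: str) -> None:
        """Combine code `old` into code `into`, moving its coded chunks across."""
        self.segments[into].extend(self.segments.pop(old))
        del self.definitions[old]


if __name__ == "__main__":
    book = Codebook()
    book.add_code("overwhelmed", "Participant reports feeling unable to cope")
    book.add_code("study environment", "Where and under what conditions studying happens")
    book.apply("overwhelmed", "I was just so overwhelmed with everything.")
    book.apply("study environment", "I usually study in the library because it's quiet.")
    for code, chunks in book.segments.items():
        print(code, "->", chunks)
```

Requiring a definition before a code can be applied is a design choice that nudges the coder to keep the codebook up to date as new codes are added.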

Descriptive codes

- In vivo coding / Semantic coding: This method uses words or short phrases directly from the participant’s own language as codes. It deals with the surface-level content, labeling what participants directly say or describe. It identifies keywords, phrases, or sentences that capture the literal content. Participant: “I was just so overwhelmed with everything.” Code: “overwhelmed”

- Process coding: Uses gerunds (“-ing” words) to connote observable or conceptual action in the data. Participant: “I started by brainstorming ideas, then I narrowed them down.” Codes: “brainstorming ideas,” “narrowing down”

- Open coding: A form of initial coding where the researcher remains open to any possible theoretical directions indicated by the data. Participant: “I found the class really challenging, but I learned a lot.” Codes: “challenging class,” “learning experience”

- Descriptive coding: Summarizes the primary topic of a passage in a word or short phrase. Participant: “I usually study in the library because it’s quiet.” Code: “study environment”

Step 4: Review and refine codes

- Look over your initial codes and see if any can be combined, split up, or revised

- Ensure your code names clearly convey the meaning of the data

- Check if your codes are applied consistently across the dataset

- Get a second opinion from a peer or advisor if possible
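
One rough way to act on the last two points is to have a second person code the same chunks and compare the results. The sketch below uses simple percent agreement, which is an illustration chosen here rather than a method the steps above prescribe; the function names and the sample data are hypothetical.

```python
def shared_segments(coder_a: dict[str, set[str]], coder_b: dict[str, set[str]]) -> set[str]:
    """Segment ids that both coders have coded."""
    return coder_a.keys() & coder_b.keys()


def percent_agreement(coder_a: dict[str, set[str]], coder_b: dict[str, set[str]]) -> float:
    """Share of jointly coded segments to which both coders assigned identical codes."""
    shared = shared_segments(coder_a, coder_b)
    if not shared:
        raise ValueError("The two coders have no segments in common")
    matches = sum(1 for seg in shared if coder_a[seg] == coder_b[seg])
    return matches / len(shared)


def disagreements(coder_a: dict[str, set[str]], coder_b: dict[str, set[str]]) -> list[str]:
    """Segment ids where the two coders differ, sorted for discussion."""
    shared = shared_segments(coder_a, coder_b)
    return sorted(seg for seg in shared if coder_a[seg] != coder_b[seg])


if __name__ == "__main__":
    # Hypothetical codings of three segments by two coders.
    first = {"seg1": {"overwhelmed"}, "seg2": {"study environment"}, "seg3": {"challenging class"}}
    second = {"seg1": {"overwhelmed"}, "seg2": {"study environment"}, "seg3": {"learning experience"}}
    print(f"Agreement: {percent_agreement(first, second):.0%}")
    print("Discuss:", disagreements(first, second))
```

The list of disagreements is usually more useful than the percentage itself, since each mismatch points to a code name or definition that may need revising.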

Interpretive codes

Interpretive codes go beyond simple description and reflect the researcher’s understanding of the underlying meanings, experiences, or processes captured in the data.

These codes require the researcher to interpret the participants’ words and actions in light of the research questions and theoretical framework.

For example, latent coding is a type of interpretive coding that goes beyond the surface meaning of the data. It digs for underlying emotions, motivations, or unspoken ideas that the participant might not explicitly state.

Latent coding looks for subtext, interprets the “why” behind what’s said, and considers the context (e.g. cultural influences, or unconscious biases).

- Example: A participant might say, “Whenever I see a spider, I feel like I’m going to pass out. It takes me back to a bad experience as a kid.” A latent code here could be “Feelings of Panic Triggered by Spiders” because it goes beyond the surface fear and explores the emotional response and potential cause.

It’s useful to ask yourself the following questions:

- What are the assumptions made by the participants?

- What emotions or feelings are expressed or implied in the data?

- How do participants relate to or interact with others in the data?

- How do the participants’ experiences or perspectives change over time?

- What is surprising, unexpected, or contradictory in the data?

- What is not being said or shown in the data? What are the silences or absences?

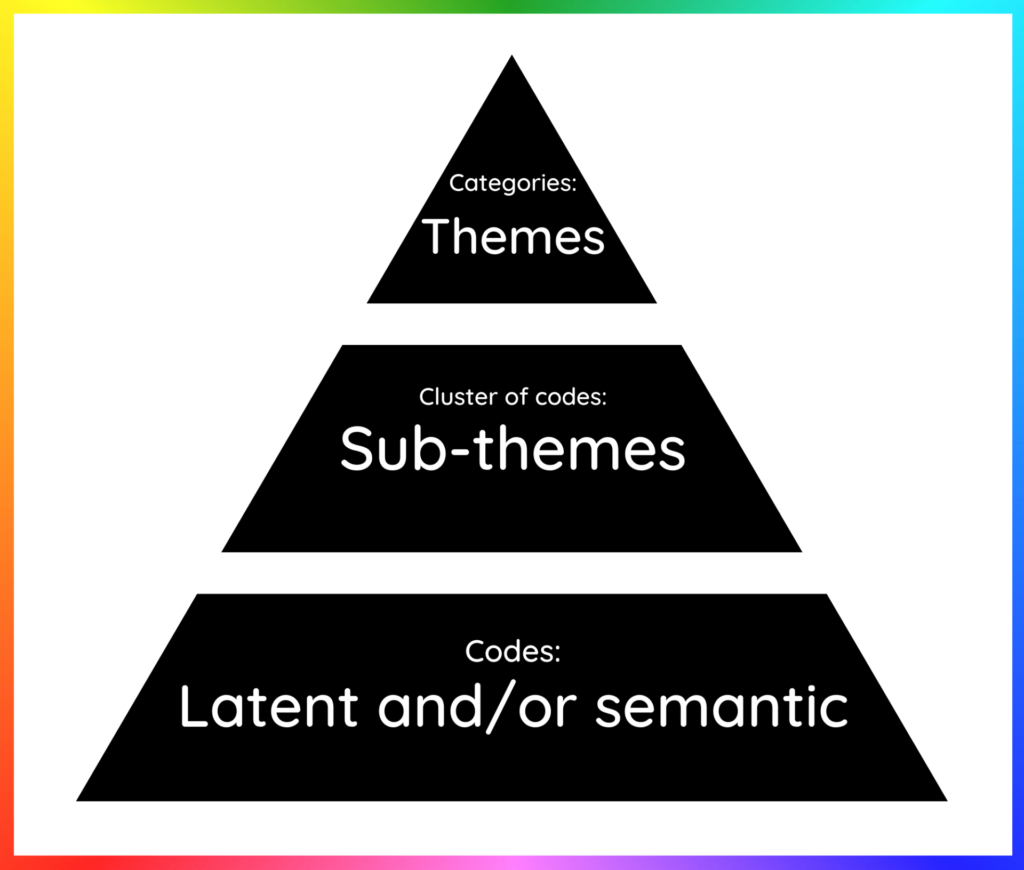

Theoretical codes

Theoretical codes are the most abstract and conceptual type of codes. They are used to link the data to existing theories or to develop new theoretical insights.

Theoretical codes often emerge later in the analysis process, as researchers begin to identify patterns and connections across the descriptive and interpretive codes.

- Structural coding: Applies a content-based phrase to a segment of data that relates to a specific research question. Research question: What motivates students to succeed? Participant: “I want to make my parents proud and be the first in my family to graduate college.” Interpretive code: “family motivation” Theoretical code: “Social identity theory”

- Value coding: This method codes data according to the participants’ values, attitudes, and beliefs, representing their perspectives or worldviews. Participant: “I believe everyone deserves access to quality healthcare.” Interpretive code: “healthcare access” (value) Theoretical code: “Distributive justice”

Pattern codes

Pattern coding is often used in the later stages of data analysis, after the researcher has thoroughly familiarized themselves with the data and identified initial descriptive and interpretive codes.

By identifying patterns and relationships across the data, pattern codes help to develop a more coherent and meaningful understanding of the phenomenon and can contribute to theory development or refinement.

For Example

Let’s say a researcher is studying the experiences of new mothers returning to work after maternity leave. They conduct interviews with several participants and initially use descriptive and interpretive codes to analyze the data. Some of these codes might include:

- “Guilt about leaving baby”

- “Struggle to balance work and family”

- “Support from colleagues”

- “Flexible work arrangements”

- “Breastfeeding challenges”

As the researcher reviews the coded data, they may notice that several of these codes relate to the broader theme of “work-family conflict.”

They might create a pattern code called “Navigating work-family conflict” that pulls together the various experiences and challenges described by the participants.
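
The grouping step in this example can be sketched as a mapping from a pattern code to the earlier codes it pulls together. Which codes belong under “Navigating work-family conflict” is a judgment the researcher makes; the membership chosen below, the function name, and the list of coded segments are all assumptions made for illustration.

```python
from collections import Counter

# Assumed grouping: the researcher decides which codes fall under the pattern code.
PATTERN_CODES: dict[str, set[str]] = {
    "Navigating work-family conflict": {
        "Guilt about leaving baby",
        "Struggle to balance work and family",
        "Breastfeeding challenges",
    },
}


def count_pattern_codes(applied_codes: list[str], pattern_map: dict[str, set[str]]) -> Counter:
    """Count how many coded segments fall under each pattern code."""
    counts: Counter = Counter()
    for code in applied_codes:
        for pattern, members in pattern_map.items():
            if code in members:
                counts[pattern] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical codes applied across several interview segments.
    applied = [
        "Guilt about leaving baby",
        "Support from colleagues",
        "Struggle to balance work and family",
        "Guilt about leaving baby",
        "Flexible work arrangements",
    ]
    print(count_pattern_codes(applied, PATTERN_CODES))
```

Codes that are left out of every group, such as “Support from colleagues” here, stay visible in the original coding and may later form a pattern code of their own.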

Qualitative Research: Definition, Methodology, Limitation & Examples

Qualitative research is a method focused on understanding human behavior and experiences through non-numerical data. Examples of qualitative research include:

- One-on-one interviews

- Focus groups

- Ethnographic research

- Case studies

- Record keeping

- Qualitative observations

In this article, we’ll use real-world examples to show how qualitative research can help you better understand your audience and improve your ROI. We’ll also cover the difference between qualitative and quantitative data.

Marketers often seek to understand their customers deeply. Qualitative research methods such as face-to-face interviews, focus groups, and qualitative observations can provide valuable insights into your products, your market, and your customers’ opinions and motivations. Understanding these nuances can significantly enhance marketing strategies and overall customer satisfaction.

What is Qualitative Research

Qualitative research is a market research method that focuses on obtaining data through open-ended and conversational communication. It focuses on why people think what they do about you, rather than only on what they think. Thus, qualitative research seeks to uncover the underlying motivations, attitudes, and beliefs that drive people’s actions.

Let’s say you have an online shop catering to a general audience. You do a demographic analysis and you find out that most of your customers are male. Naturally, you will want to find out why women are not buying from you. And that’s what qualitative research will help you find out.

In the case of your online shop, qualitative research would involve reaching out to female non-customers through methods such as in-depth interviews or focus groups. These interactions provide a platform for women to express their thoughts, feelings, and concerns regarding your products or brand. Through qualitative analysis, you can uncover valuable insights into factors such as product preferences, user experience, brand perception, and barriers to purchase.

Types of Qualitative Research Methods

Qualitative research methods are designed in a manner that helps reveal the behavior and perception of a target audience regarding a particular topic.

The most frequently used qualitative analysis methods are one-on-one interviews, focus groups, ethnographic research, case study research, record keeping, and qualitative observation.

1. One-on-one interviews