An Introduction to t Tests | Definitions, Formula and Examples

Published on January 31, 2020 by Rebecca Bevans. Revised on June 22, 2023.

A t test is a statistical test that is used to compare the means of two groups. It is often used in hypothesis testing to determine whether a process or treatment actually has an effect on the population of interest, or whether two groups are different from one another.

- The null hypothesis (H₀) is that the true difference between these group means is zero.

- The alternate hypothesis (Hₐ) is that the true difference is different from zero.

Table of contents

- When to use a t test

- What type of t test should I use?

- Performing a t test

- Interpreting test results

- Presenting the results of a t test

- Other interesting articles

- Frequently asked questions about t tests

A t test can only be used when comparing the means of two groups (a.k.a. pairwise comparison). If you want to compare more than two groups, or if you want to do multiple pairwise comparisons, use an ANOVA test or a post-hoc test.

The t test is a parametric test of difference, meaning that it makes the same assumptions about your data as other parametric tests. The t test assumes your data:

- are independent

- are (approximately) normally distributed

- have a similar amount of variance within each group being compared (a.k.a. homogeneity of variance)

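If your data do not fit these assumptions, you can try a nonparametric alternative to the t test, such as the Wilcoxon signed-rank test for paired data or the Mann-Whitney U test for two independent groups. If only the variances are unequal, Welch's t test relaxes the homogeneity-of-variance assumption.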

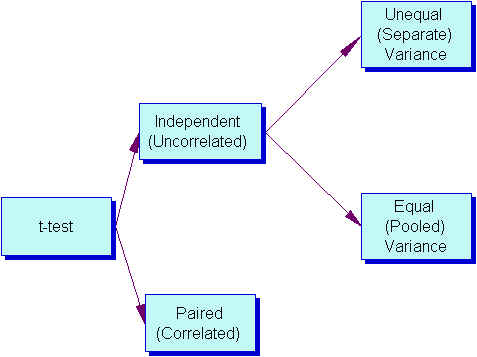

When choosing a t test, you will need to consider two things: whether the groups being compared come from a single population or two different populations, and whether you want to test the difference in a specific direction.

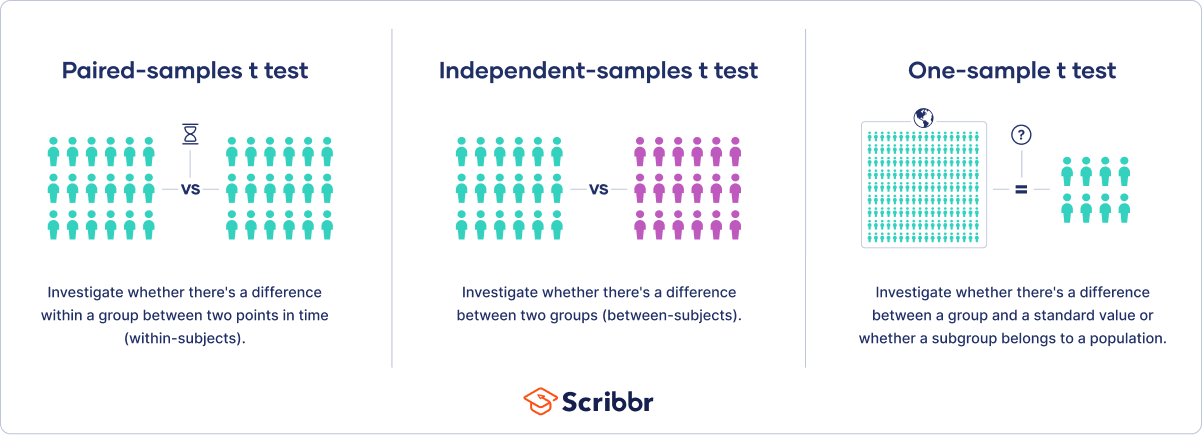

One-sample, two-sample, or paired t test?

- If the groups come from a single population (e.g., measuring before and after an experimental treatment), perform a paired t test. This is a within-subjects design.

- If the groups come from two different populations (e.g., two different species, or people from two separate cities), perform a two-sample t test (a.k.a. independent t test). This is a between-subjects design.

- If there is one group being compared against a standard value (e.g., comparing the acidity of a liquid to a neutral pH of 7), perform a one-sample t test.

One-tailed or two-tailed t test?

- If you only care whether the two populations are different from one another, perform a two-tailed t test.

- If you want to know whether one population mean is greater than or less than the other, perform a one-tailed t test.

For example, suppose you are comparing flower petal lengths between two species:

- Your observations come from two separate populations (separate species), so you perform a two-sample t test.

- You don’t care about the direction of the difference, only whether there is a difference, so you choose to use a two-tailed t test.

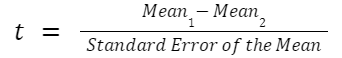

The t test estimates the true difference between two group means using the ratio of the difference in group means over the pooled standard error of both groups. You can calculate it manually using a formula, or use statistical analysis software.

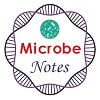

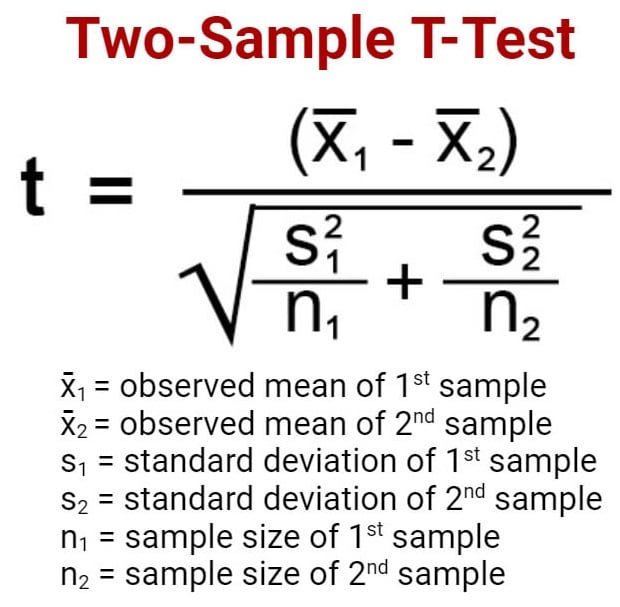

T test formula

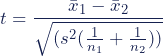

The formula for the two-sample t test (a.k.a. the Student’s t-test) is shown below.

In this formula, t is the t value, x̄₁ and x̄₂ are the means of the two groups being compared, s² is the pooled variance of the two groups (the denominator as a whole is the pooled standard error), and n₁ and n₂ are the number of observations in each of the groups.
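Written out with the symbols defined above, the standard pooled-variance form of the statistic is as follows. This is a sketch of the textbook formula; the per-group sample variances s₁² and s₂² are notation assumed here and do not come from the text above.

```latex
t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{s^2\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}},
\qquad
s^2 = \frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}
```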

A larger t value (in absolute terms) shows that the difference between group means is large relative to the pooled standard error, which is stronger evidence of a difference between the groups.

You can compare your calculated t value against the values in a critical value chart (e.g., Student’s t table) to determine whether your t value is greater than what would be expected by chance. If so, you can reject the null hypothesis and conclude that the two groups are in fact different.
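As a rough illustration of the calculation and the critical-value comparison described above, here is a minimal Python sketch that uses only the standard library. The petal-length numbers are made up for illustration, and the function name `pooled_t` is hypothetical. The critical value 2.228 is the two-tailed t-table value for 10 degrees of freedom at an alpha of 0.05.

```python
import math
from statistics import mean, variance

def pooled_t(group1, group2):
    """Student's two-sample t value and degrees of freedom (equal variances assumed)."""
    n1, n2 = len(group1), len(group2)
    # Pooled variance: weighted average of the two sample variances
    s2 = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    standard_error = math.sqrt(s2 * (1 / n1 + 1 / n2))
    return (mean(group1) - mean(group2)) / standard_error, n1 + n2 - 2

# Hypothetical petal lengths (cm) for two groups of six flowers each
group_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.4]
group_b = [4.1, 4.4, 3.9, 4.6, 4.3, 4.0]

t_value, df = pooled_t(group_a, group_b)
critical_value = 2.228  # t table: df = 10, two-tailed, alpha = 0.05
reject_null = abs(t_value) > critical_value
print(f"t = {t_value:.3f}, df = {df}, reject H0: {reject_null}")
```

With real data you would look up the critical value that matches your own degrees of freedom and alpha level rather than reusing 2.228.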

T test function in statistical software

Most statistical software (R, SPSS, etc.) includes a t test function. This built-in function will take your raw data and calculate the t value. It will then compare it to the critical value, and calculate a p value. This way you can quickly see whether your groups are statistically different.

In your comparison of flower petal lengths, you decide to perform your t test using R. The code looks like this:

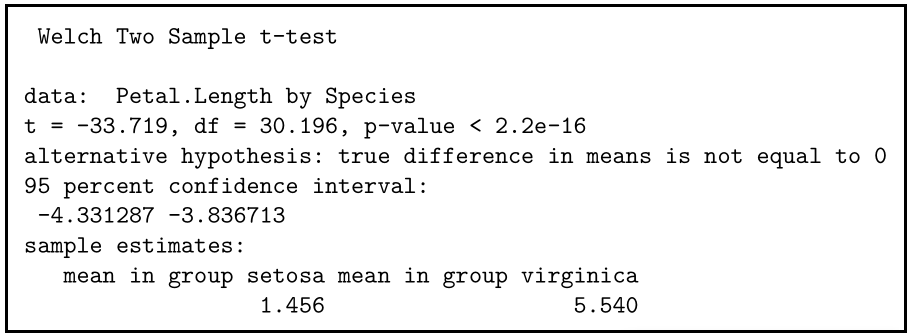

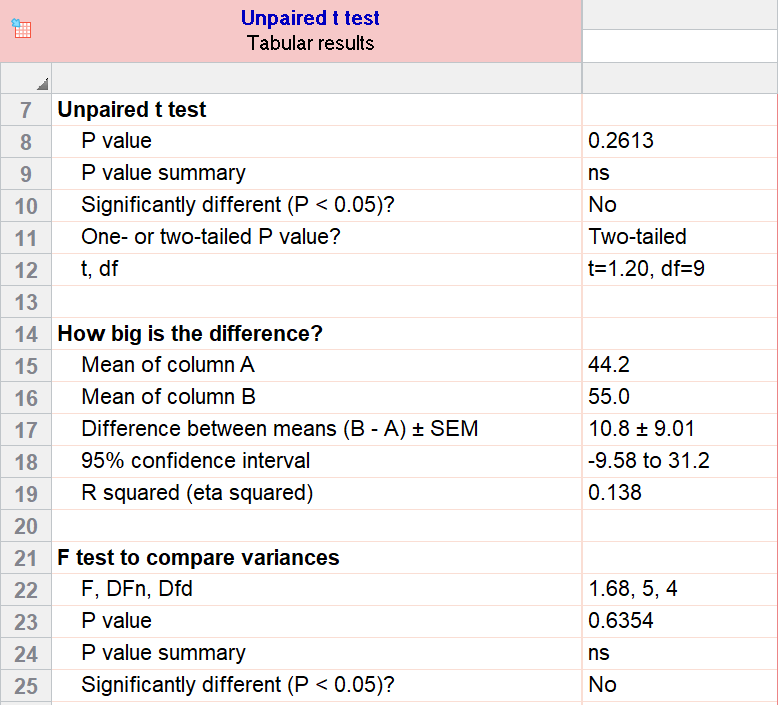

If you perform the t test for your flower hypothesis in R, you will receive the following output:

The output provides:

- An explanation of what is being compared, called data in the output table.

- The t value: -33.719. Note that it’s negative; this is fine! In most cases, we only care about the absolute value of the difference, or the distance from 0. It doesn’t matter which direction.

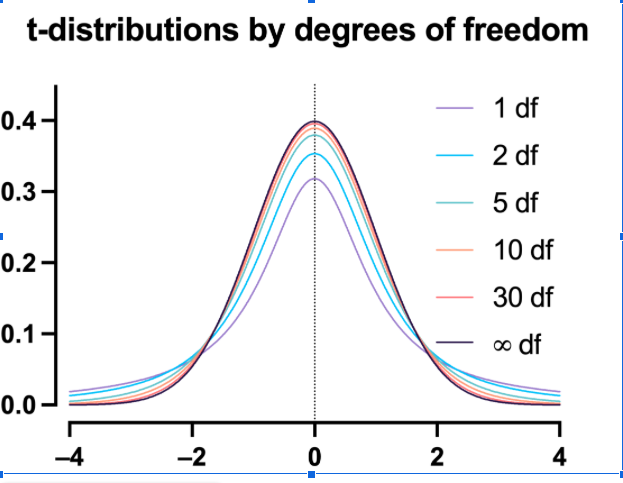

- The degrees of freedom: 30.196. Degrees of freedom is related to your sample size, and shows how many ‘free’ data points are available in your test for making comparisons. More degrees of freedom generally mean more precise estimates and a more powerful test.

- The p value: 2.2e-16 (i.e., a decimal point followed by 15 zeros and then 22). This describes the probability that you would see a t value at least as extreme as this one by chance if the null hypothesis were true.

- A statement of the alternative hypothesis (Hₐ). In this test, the Hₐ is that the difference is not 0.

- The 95% confidence interval. This is a range of values calculated so that, across repeated samples, 95% of such intervals would contain the true difference in means. This can be changed from 95% if you want a larger or smaller interval, but 95% is very commonly used.

- The mean petal length for each group.
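The non-integer degrees of freedom (30.196) suggest that the test was the unequal-variance (Welch) version, which is the default for R's `t.test`. As a hedged illustration of where such a value comes from, the sketch below computes Welch's t value and the Welch-Satterthwaite degrees of freedom in Python. The data and the function name `welch_t` are invented for this example and will not reproduce the numbers in the output above.

```python
import math
from statistics import mean, variance

def welch_t(group1, group2):
    """Welch's t value and Welch-Satterthwaite degrees of freedom (unequal variances)."""
    # Squared standard error contributed by each group
    v1 = variance(group1) / len(group1)
    v2 = variance(group2) / len(group2)
    t = (mean(group1) - mean(group2)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (len(group1) - 1) + v2 ** 2 / (len(group2) - 1))
    return t, df

# Hypothetical petal lengths (mm) for a short-petaled and a long-petaled species
short_petals = [14.1, 15.0, 14.6, 13.9, 14.4]
long_petals = [42.0, 40.5, 45.1, 43.3, 39.8, 44.2, 41.7]

t_value, df = welch_t(short_petals, long_petals)
print(f"t = {t_value:.3f}, df = {df:.3f}")
```

The t value is negative simply because the first group has the smaller mean, and the degrees of freedom fall between the smaller group's n - 1 and the pooled n₁ + n₂ - 2, which is why they are usually not a whole number.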

When reporting your t test results, the most important values to include are the t value, the p value, and the degrees of freedom for the test. These will communicate to your audience whether the difference between the two groups is statistically significant (i.e., that it is unlikely to have happened by chance).

You can also include the summary statistics for the groups being compared, namely the mean and standard deviation. In R, with the dplyr package loaded, the code for calculating the mean and the standard deviation from the data looks like this:

flower.data %>% group_by(Species) %>% summarize(mean_length = mean(Petal.Length), sd_length = sd(Petal.Length))
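For readers working outside R, the same per-group summary can be sketched in Python with the standard library. The dictionary `petal_lengths` and its values are hypothetical stand-ins for the flower data; `statistics.stdev` computes the sample standard deviation (dividing by n - 1), which matches R's `sd`.

```python
from statistics import mean, stdev

# Hypothetical petal lengths (cm), keyed by species
petal_lengths = {
    "species_a": [1.4, 1.4, 1.3, 1.5, 1.7],
    "species_b": [4.7, 4.5, 4.9, 4.0, 4.6],
}

# One row of summary statistics per species
summary = {
    species: {"mean_length": mean(values), "sd_length": stdev(values)}
    for species, values in petal_lengths.items()
}

for species, stats in summary.items():
    print(f"{species}: mean = {stats['mean_length']:.2f}, sd = {stats['sd_length']:.2f}")
```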

In our example, you would report the results like this:

A t-test is a statistical test that compares the means of two samples. It is used in hypothesis testing, with a null hypothesis that the difference in group means is zero and an alternate hypothesis that the difference in group means is different from zero.

A t-test measures the difference in group means divided by the pooled standard error of the two group means.

In this way, it calculates a number (the t-value) illustrating the magnitude of the difference between the two group means being compared, and estimates the likelihood that this difference exists purely by chance (p-value).

Your choice of t-test depends on whether you are studying one group or two groups, and whether you care about the direction of the difference in group means.

If you are studying one group, use a paired t-test to compare the group mean over time or after an intervention, or use a one-sample t-test to compare the group mean to a standard value. If you are studying two groups, use a two-sample t-test.

If you want to know only whether a difference exists, use a two-tailed test. If you want to know if one group mean is greater or less than the other, use a left-tailed or right-tailed one-tailed test.

A one-sample t-test is used to compare a single population to a standard value (for example, to determine whether the average lifespan of a specific town is different from the country average).

A paired t-test is used to compare a single population before and after some experimental intervention or at two different points in time (for example, measuring student performance on a test before and after being taught the material).

A t-test should not be used to measure differences among more than two groups, because the error structure for a t-test will underestimate the actual error when many groups are being compared.

If you want to compare the means of several groups at once, it’s best to use another statistical test such as ANOVA or a post-hoc test.

Bevans, R. (2023, June 22). An Introduction to t Tests | Definitions, Formula and Examples. Scribbr. Retrieved August 26, 2024, from https://www.scribbr.com/statistics/t-test/

T Test (Student’s T-Test): Definition and Examples

T Test: Contents

- What is a T Test?

- The T Score

- T Values and P Values

- Calculating the T Test

- What is a Paired T Test (Paired Samples T Test)?

What is a T test?

The t test tells you how significant the differences between group means are. It lets you know if those differences in means could have happened by chance. The t test is usually used when data sets follow a normal distribution but you don’t know the population variance.

For example, you might flip a coin 1,000 times and find the number of heads follows a normal distribution for all trials. So you can calculate the sample variance from this data, but the population variance is unknown. Or, a drug company may want to test a new cancer drug to find out if it improves life expectancy. In an experiment, there’s always a control group (a group who are given a placebo, or “sugar pill”). So while the control group may show an average life expectancy of +5 years, the group taking the new drug might have a life expectancy of +6 years. It would seem that the drug might work. But it could be due to a fluke. To test this, researchers would use a Student’s t-test to find out if the results are repeatable for an entire population.

In addition, a t test uses a t-statistic and compares this to t-distribution values to determine if the results are statistically significant.

However, note that you can only use a t test to compare two means. If you want to compare three or more means, use an ANOVA instead.

The T Score

The t score is a ratio between the difference between two groups and the difference within the groups.

- Larger t scores = more difference between groups.

- Smaller t score = more similarity between groups.

A t score of 3 tells you that the difference between the groups is three times as large as its standard error, which reflects the variability within the groups. So when you run a t test, larger t-values (in absolute terms) are stronger evidence that the difference is real rather than a fluke.

T-Values and P-values

How big is “big enough”? Every t-value has a p-value to go with it. A p-value from a t test is the probability of getting results at least as extreme as those in your sample data if the null hypothesis were true, that is, if only chance were at work. P-values are from 0% to 100% and are usually written as a decimal (for example, a p value of 5% is 0.05). Low p-values indicate that your data would be unlikely to occur by chance alone. For example, a p-value of .01 means there would be only a 1% probability of seeing results this extreme if chance alone were responsible.
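To make the link between a t-value and its p-value concrete, the following sketch integrates the density of Student's t distribution numerically with Simpson's rule. It is an illustration under stated assumptions, not how statistical packages compute p-values: the function names are hypothetical, the p-value is two-sided, and `math.gamma` limits it to modest degrees of freedom.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p(t, df, steps=2000):
    """Two-sided p-value: total area in both tails beyond |t| (steps must be even)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        # Simpson's rule weights: 4 for odd points, 2 for even points
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # area between 0 and |t|
    return max(0.0, 2 * (0.5 - central_area))

print(f"p = {two_sided_p(2.228, 10):.4f}")
print(f"p = {two_sided_p(-2.74, 10):.4f}")
```

The first call uses the t-table value for 10 degrees of freedom at the 5% level, so its p-value should come out very close to 0.05. The sign of t does not matter for a two-sided p-value.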

Calculating the Statistic / Test Types

There are three main types of t-test:

- An Independent Samples t-test compares the means for two groups.

- A Paired sample t-test compares means from the same group at different times (say, one year apart).

- A One sample t-test tests the mean of a single group against a known mean.

You can calculate an independent samples t test by hand, but you probably don’t want to (the math can get very messy). Use the following tools to calculate the t test:

- How to do a T test in Excel.

- T test in SPSS.

- T-distribution on the TI 89.

- T distribution on the TI 83.

What is a Paired T Test (Paired Samples T Test / Dependent Samples T Test)?

A paired t test (also called a correlated pairs t-test, a paired samples t test or dependent samples t test) is where you run a t test on dependent samples. Dependent samples are essentially connected: they are tests on the same person or thing. For example:

- Knee MRI costs at two different hospitals,

- Two tests on the same person before and after training,

- Two blood pressure measurements on the same person using different equipment.

When to Choose a Paired T Test / Paired Samples T Test / Dependent Samples T Test

Choose the paired t-test if you have two measurements on the same item, person or thing. But you should also choose this test if you have two items that are being measured with a unique condition. For example, you might be measuring car safety performance in vehicle research and testing and subject the cars to a series of crash tests. Although the manufacturers are different, you might be subjecting them to the same conditions.

With a “regular” two sample t test, you’re comparing the means for two different samples. For example, you might test two different groups of customer service associates on a business-related test, or test students from two universities on their English skills. If you take a random sample from each group separately and they have different conditions, your samples are independent and you should run an independent samples t test (also called between-samples and unpaired-samples).

The null hypothesis for the independent samples t-test is μ₁ = μ₂. So it assumes the means are equal. With the paired t test, the null hypothesis is that the mean of the pairwise differences between the two tests is zero (H₀: µ_d = 0).

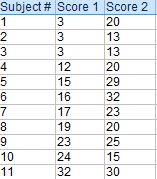

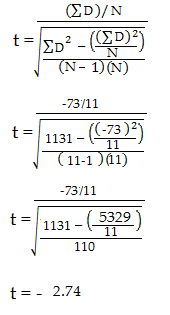

Paired Samples T Test By hand

- The “ΣD” is the sum of X-Y from Step 2.

- ΣD 2 : Sum of the squared differences (from Step 4).

- (ΣD) 2 : Sum of the differences (from Step 2), squared.

If you’re unfamiliar with the Σ notation used in the t test, it basically means to “add everything up”. You may find this article useful: summation notation .
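Using the three sums defined above, the usual computational form of the paired t statistic can be written as follows. This is the standard textbook formula rather than a reproduction of the original figure; N, the number of pairs, is notation assumed here.

```latex
t = \frac{\dfrac{\sum D}{N}}{\sqrt{\dfrac{\sum D^2 - \dfrac{\left(\sum D\right)^2}{N}}{(N - 1)\,N}}}
```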

Step 6: Subtract 1 from the sample size to get the degrees of freedom. We have 11 items. So 11 – 1 = 10.

Step 7: Find the critical t-value in the t-table, using the degrees of freedom from Step 6. If you don’t have a specified alpha level, use 0.05 (5%).

So for this example t test problem, with df = 10 and a two-tailed alpha level of 0.05, the t-table value is 2.228.

Step 8: In conclusion, compare your t-table value from Step 7 (2.228) to your calculated t-value (-2.74). In absolute value, the calculated t-value (2.74) is greater than the table value at an alpha level of .05, which means the p-value is less than the alpha level: p < .05. So we can reject the null hypothesis that there is no difference between means.

Note that you can ignore the minus sign when comparing the two t-values, as the sign only indicates the direction of the difference; the two-tailed p-value is the same for both directions.
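The by-hand procedure can be sketched in a few lines of Python. The 11 pairs of scores below are invented for illustration, so the resulting t-value differs from the -2.74 in the worked example; the function name `paired_t_by_hand` is also hypothetical. The table value 2.228 is the one quoted above for df = 10.

```python
import math

def paired_t_by_hand(x, y):
    """Paired t value from the sums ΣD, ΣD² and (ΣD)², plus degrees of freedom."""
    diffs = [a - b for a, b in zip(x, y)]   # D = X - Y for each pair
    n = len(diffs)
    sum_d = sum(diffs)                      # ΣD
    sum_d_sq = sum(d * d for d in diffs)    # ΣD²
    numerator = sum_d / n
    denominator = math.sqrt((sum_d_sq - sum_d ** 2 / n) / ((n - 1) * n))
    return numerator / denominator, n - 1

# Hypothetical scores for 11 items, each measured twice
x = [72, 65, 80, 58, 90, 77, 68, 74, 85, 61, 79]
y = [75, 70, 78, 66, 94, 80, 73, 79, 88, 60, 85]

t_value, df = paired_t_by_hand(x, y)
table_value = 2.228  # t table: df = 10, two-tailed, alpha = 0.05
reject_null = abs(t_value) > table_value  # compare absolute values, ignoring the sign
print(f"t = {t_value:.2f}, df = {df}, reject H0: {reject_null}")
```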

Microbe Notes

T-test: Definition, Formula, Types, Applications

The t-test is a test in statistics that is used for testing hypotheses regarding the mean of a small sample drawn from a population when the standard deviation of the population is not known.

- The t-test is used to determine if there is a significant difference between the means of two groups.

- The t-test is used for hypothesis testing to determine whether a process has an effect on both samples or if the groups are different from each other.

- Basically, the t-test allows the comparison of the mean of two sets of data and the determination if the two sets are derived from the same population.

- After the null and alternative hypotheses are established, t-test formulas are used to calculate values that are then compared with standard values.

- Based on the comparison, the null hypothesis is either rejected or not rejected.

- The T-test is similar to other tests like the z-test and f-test except that t-test is usually performed in cases where the sample size is small (n≤30).

T-test Formula

T-tests can be performed manually using a formula or through some software.

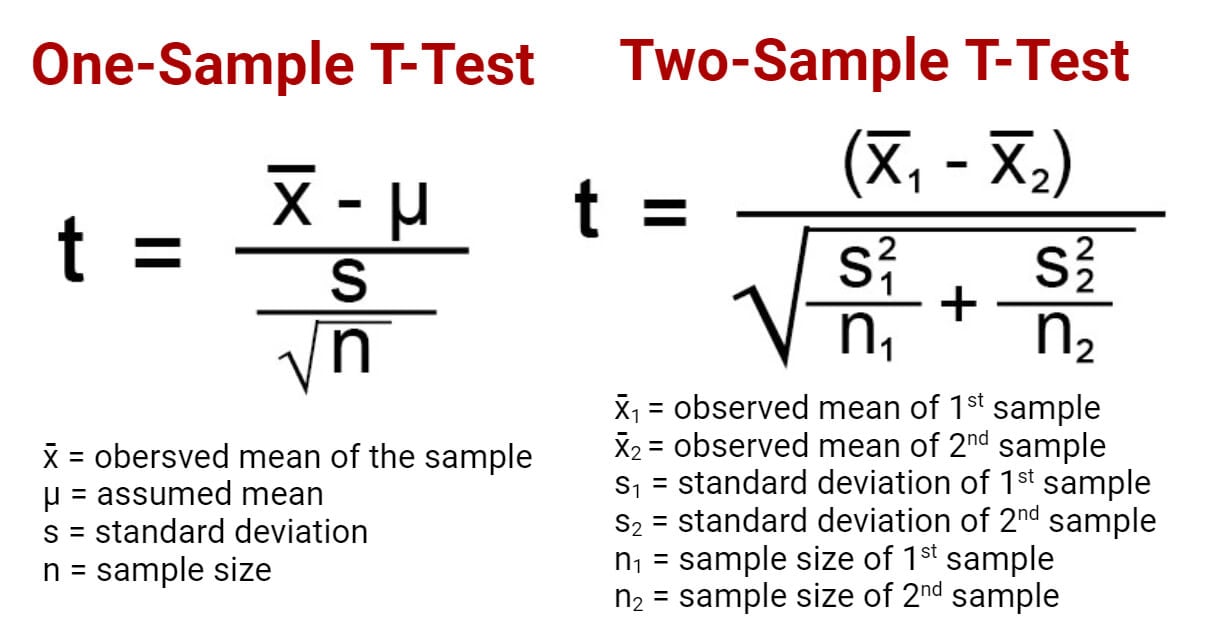

One-tailed t-test

- A one-tailed t-test is a statistical test in which the critical area of the distribution is one-sided, so that the null hypothesis is rejected in favor of the alternative only if the population parameter is greater than a certain value, or only if it is less than that value, but not both.

- If the t-score of the sample being tested falls into the critical area of a one-sided test, the null hypothesis is rejected in favor of the alternative hypothesis.

- A one-tailed test is used to determine if the population mean is either lower than or higher than some hypothesized value.

- A one-tailed test is appropriate when only a departure from the hypothesized value in one direction, left or right, is of interest.

- For this test, the null hypothesis states that there is no difference between the true mean and the assumed value, whereas the alternative hypothesis states that the true mean is either greater than or less than the assumed value, but not both.

- For instance, if H₀: µ = µ₀ and Hₐ: µ < µ₀, the test is a one-sided test or, more precisely, a left-tailed test.

- Under such conditions, there is only one rejection area, on the left tail of the distribution.

- If we consider µ₀ = 100 and our sample mean deviates significantly from 100 in the lower direction, H₀ is rejected. Otherwise, H₀ is not rejected at the given level of significance.

- Similarly, if H₀: µ = µ₀ and Hₐ: µ > µ₀, this is also a one-tailed test (right tail), and the rejection region is on the right tail of the curve.

- In this case, when µ₀ = 100 and the sample mean deviates significantly from 100 in the upward direction, H₀ is rejected; otherwise, it is not rejected.

Two-tailed t-test

- A two-tailed t-test is a method in which the critical area of the distribution is two-sided, and the test is performed to determine whether the population parameter differs from a specific value in either direction.

- A two-tailed test rejects the null hypothesis in cases where the sample mean is significantly higher or lower than the assumed value of the mean of the population.

- This type of test is appropriate when the null hypothesis specifies an assumed value and the alternative hypothesis is that the parameter is not equal to that value.

- The two-tailed test is appropriate when we have H₀: µ = µ₀ and Hₐ: µ ≠ µ₀, which may mean µ > µ₀ or µ < µ₀.

- Therefore, in a two-tailed test, there are two rejection regions, one in either direction, left and right, towards each tail of the curve.

- Suppose we take µ₀ = 100. If our sample mean deviates significantly from 100 in either direction, the null hypothesis can be rejected. But if the sample mean does not deviate considerably from µ₀, the null hypothesis is not rejected.
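The rejection regions for left-tailed, right-tailed, and two-tailed tests can be summarized in a short sketch. The function `rejects_null` and the example t-value are hypothetical; the critical values are t-table entries for 10 degrees of freedom at a 0.05 significance level (1.812 for one tail, 2.228 for two tails).

```python
def rejects_null(t, critical, tail):
    """Return True if t falls in the rejection region for the given kind of test."""
    if tail == "left":    # Ha: mu < mu0, rejection region in the left tail only
        return t < -critical
    if tail == "right":   # Ha: mu > mu0, rejection region in the right tail only
        return t > critical
    if tail == "two":     # Ha: mu != mu0, rejection regions in both tails
        return abs(t) > critical
    raise ValueError("tail must be 'left', 'right', or 'two'")

ONE_TAILED_CRITICAL = 1.812  # df = 10, alpha = 0.05, one tail
TWO_TAILED_CRITICAL = 2.228  # df = 10, alpha = 0.05, two tails

t_value = -2.0  # hypothetical calculated t-value
print(rejects_null(t_value, ONE_TAILED_CRITICAL, "left"))
print(rejects_null(t_value, ONE_TAILED_CRITICAL, "right"))
print(rejects_null(t_value, TWO_TAILED_CRITICAL, "two"))
```

In this example the same t-value is significant in the left-tailed test but not in the two-tailed test, because the two-tailed test splits the 5% rejection area across both tails and therefore needs a larger absolute t-value.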

Independent t-test

- An Independent t-test is a test used for comparing the means of two independent groups to determine whether there is statistical evidence that the population means are significantly different.

- Subjects in each sample are also assumed to come from different populations, that is, subjects in “Sample A” are assumed to come from “Population A” and subjects in “Sample B” are assumed to come from “Population B.”

- The populations are assumed to differ only in the level of the independent variable.

- Thus, any difference found between the sample means should also exist between population means, and any difference between the population means must be due to the difference in the levels of the independent variable.

- Based on this information, a curve can be plotted to determine the effect of an independent variable on the dependent variable and vice versa.

T-test Applications

- The T-test compares the mean of two samples, dependent or independent.

- It can also be used to determine if the sample mean is different from the assumed mean.

- T-test has an application in determining the confidence interval for a sample mean.

About Author

Anupama Sapkota

Statistics By Jim

Making statistics intuitive

T Test Overview: How to Use & Examples

By Jim Frost

What is a T Test?

A t test is a statistical hypothesis test that assesses sample means to draw conclusions about population means. Frequently, analysts use a t test to determine whether the population means for two groups are different. For example, it can determine whether the difference between the treatment and control group means is statistically significant.

The following are the standard t tests:

- One-sample: Compares a sample mean to a reference value.

- Two-sample: Compares two sample means.

- Paired: Compares the means of matched pairs, such as before and after scores.

In this post, you’ll learn about the different types of t tests, when you should use each one, and their assumptions. Additionally, I interpret an example of each type.

Which T Test Should I Use?

To choose the correct t test, you must know whether you are assessing one or two group means. If you’re working with two group means, do the groups have the same or different items/people? Use the table below to choose the proper analysis.

| Number of group means | Group type | t test |
|---|---|---|
| One | | One sample t test |
| Two | Different items in each group | Two sample t test |
| Two | Same items in both groups | Paired t test |
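The decision rule in the table can also be expressed as a tiny helper function. This is only a sketch: the function name `choose_t_test` and its parameters are hypothetical, and the returned labels simply mirror the table's wording.

```python
def choose_t_test(group_means, same_items=False):
    """Pick a t test from the number of group means and whether the groups share items."""
    if group_means == 1:
        return "One sample t test"
    if group_means == 2:
        # Same items or people measured in both groups means the data are paired
        return "Paired t test" if same_items else "Two sample t test"
    raise ValueError("A t test handles one or two group means; consider ANOVA for more.")

print(choose_t_test(1))
print(choose_t_test(2, same_items=False))
print(choose_t_test(2, same_items=True))
```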

Now, let’s review each t test to see what it can do!

Imagine we’ve developed a drug that supposedly boosts your IQ score. In the following sections, we’ll address the same research question, and I’ll show you how the various t tests can help you answer it.

One Sample T Test

Use a one-sample t test to compare a sample mean to a reference value. It allows you to determine whether the population mean differs from the reference value. The reference value is usually highly relevant to the subject area.

For example, a coffee shop claims their large cup contains 16 ounces. A skeptical customer takes a random sample of 10 large cups of coffee and measures their contents to determine if the mean volume differs from the claimed 16 ounces using a one-sample t test.

One-Sample T Test Hypotheses

- Null hypothesis (H₀): The population mean equals the reference value (µ = µ₀).

- Alternative hypothesis (Hₐ): The population mean DOES NOT equal the reference value (µ ≠ µ₀).

Reject the null when the p-value is less than the significance level (e.g., 0.05). This condition indicates the difference between the sample mean and the reference value is statistically significant. Your sample data support the idea that the population mean does not equal the reference value.

The above hypotheses are two-sided analyses. Alternatively, you can use one-sided hypotheses to find effects in only one direction.

We want to evaluate our IQ boosting drug using a one-sample t test. First, we draw a single random sample of 15 participants and administer the medicine to all of them. Then we measure all their IQs and calculate a sample average IQ of 109.

In the general population, the average IQ is defined as 100. So, we’ll use 100 as our reference value. Is the difference between our sample mean of 109 and the reference value of 100 statistically significant? The t test output is below.

In the output, we see that our sample mean is 109. The procedure compares the sample mean to the reference value of 100 and produces a p-value of 0.036. Consequently, we can reject the null hypothesis and conclude that the population mean for those who take the IQ drug is higher than 100.
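To make the calculation behind this kind of output concrete, here is a small sketch of the one-sample t statistic, t = (x̄ − µ₀) / (s / √n). The 15 IQ scores are invented for illustration and do not reproduce the sample above, and the function name `one_sample_t` is hypothetical. The value 2.145 is the two-tailed t-table value for 14 degrees of freedom at a significance level of 0.05.

```python
import math
from statistics import mean, stdev

def one_sample_t(sample, reference_value):
    """One-sample t value and degrees of freedom."""
    n = len(sample)
    standard_error = stdev(sample) / math.sqrt(n)  # sample SD divided by sqrt(n)
    return (mean(sample) - reference_value) / standard_error, n - 1

# Hypothetical IQ scores for 15 participants
iq_scores = [100, 104, 96, 110, 108, 112, 99, 105, 103, 107, 111, 98, 102, 109, 106]

t_value, df = one_sample_t(iq_scores, reference_value=100)
critical_value = 2.145  # t table: df = 14, two-tailed, alpha = 0.05
reject_null = abs(t_value) > critical_value
print(f"t = {t_value:.2f}, df = {df}, reject H0: {reject_null}")
```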

Two-Sample T Test

Use a two-sample t test to compare the sample means for two groups. It allows you to determine whether the population means for these two groups are different. For the two-sample procedure, the groups must contain different sets of items or people.

For example, you might compare averages between males and females or treatment and controls.

Two-Sample T Test Hypotheses

- Null hypothesis (H₀): Two population means are equal (µ₁ = µ₂).

- Alternative hypothesis (Hₐ): Two population means are not equal (µ₁ ≠ µ₂).

Again, when the p-value is less than or equal to your significance level, reject the null hypothesis. The difference between the two means is statistically significant. Your sample data support the theory that the two population means are different.

For our IQ drug, we collect two random samples, a control group and a treatment group. Each group has 15 subjects. We give the treatment group the medication and a placebo to the control group.

We’ll use a two-sample t test to evaluate if the difference between the two group means is statistically significant. The t test output is below.

In the output, you can see that the treatment group (Sample 1) has a mean of 109 while the control group’s (Sample 2) average is 100. The p-value for the difference between the groups is 0.112. We fail to reject the null hypothesis. There is insufficient evidence to conclude that the IQ drug has an effect.

Paired Sample T Test

Use a paired t-test when you measure each subject twice, such as before and after test scores. This procedure determines if the mean difference between paired scores differs from zero, where zero represents no effect. Because researchers measure each item in both conditions, the subjects serve as their own controls.

For example, a pharmaceutical company develops a new drug to reduce blood pressure. They measure the blood pressure of 20 patients before and after administering the medication for one month. Analysts use a paired t-test to assess whether there is a statistically significant difference in pressure measurements before and after taking the drug.

Paired T Test Hypotheses

- Null hypothesis: The mean difference between pairs equals zero in the population (µ_D = 0).

- Alternative hypothesis: The mean difference between pairs does not equal zero in the population (µ_D ≠ 0).

Reject the null when the p-value is less than or equal to your significance level (e.g., 0.05). Your sample provides sufficiently strong evidence to conclude that the mean difference between pairs does not equal zero in the population.

Back to our IQ boosting drug. This time, we’ll draw one random sample of 15 participants. We’ll measure their IQ before taking the medicine and then again afterward. The before and after groups contain the same people. The procedure calculates the individual differences as After − Before. Then it calculates the average difference.

If the drug increases IQs effectively, we should see a positive difference value. Conversely, a value near zero indicates that the IQ scores didn’t improve between the Before and After scores. The paired t test will determine whether the difference between the pre-test and post-test is statistically significant.

The t test output is below.

The mean difference between the pre-test and post-test scores is 9 IQ points. In other words, the average IQ increased by 9 points between the before and after measurements. The p-value, displayed as 0.000 (that is, below 0.0005 before rounding), causes us to reject the null. We conclude that the difference between the pre-test and post-test population means does not equal zero. The drug appears to increase IQs by an average of 9 IQ points in the population.

T Test Assumptions

For your t test to produce reliable results, your data should meet the following assumptions:

You have a random sample

Drawing a random sample from your target population helps ensure it represents the population. Representative samples are crucial for accurately inferring population properties. The t test results are invalid if your data do not reflect the population.

Continuous data

A t test requires continuous data. Continuous variables can take on all numeric values, and the scale can be divided meaningfully into smaller increments, such as fractional and decimal values. For example, weight, height, and temperature are continuous.

Other analyses can assess additional data types. For more information, read Comparing Hypothesis Tests for Continuous, Binary, and Count Data .

Your sample data follow a normal distribution, or you have a large sample size

A t test assumes your data follow a normal distribution . However, due to the central limit theorem, you can waive this assumption when your sample is large enough.

The following sample size guidelines specify when normality becomes less of a restriction:

- One-Sample and Paired : 20 or more observations.

- Two-Sample : At least 15 in each group.

Related posts : Central Limit Theorem and Skewed Distributions

Population standard deviation is unknown

A t test assumes you have a sample estimate of the standard deviation. In other words, you don’t know the precise value of the population standard deviation. This assumption is almost always true. If you do know the population standard deviation, use the Z test instead, although when n > 30 the difference between the t and Z tests becomes trivial.

Learn more about the Z test .

Related post : Standard Deviations

Reader Interactions

April 16, 2024 at 5:00 pm

Hello Jim, and thank you on behalf of the thousands you have helped.

Question about which t test to use:

20 members of a committee are asked to interview and rate two candidates for a position – one candidate on Monday, the other candidate on Tuesday. So, one group of 20 committee members interviews 2 separate candidates, one day after the other, on the same variables. Would this scenario use a paired or independent application? Thank you, js

April 16, 2024 at 8:37 pm

This would be a case where you’d potentially use a paired t-test . You’re determining whether there’s a significant difference between the two candidates as given by the same 20 committee members. The two observations are paired because it’s the same 20 members giving the two ratings.

The only wrinkle in that, which is why I say “potentially use,” is that ratings are often ordinal. If you have ordinal rankings, you might need to use a nonparametric test.

April 11, 2024 at 11:25 pm

Question about determining tails: when determining the P values, this is what I am told: “You draw a t curve and plot the t value on the horizontal axis, then you check the sign in Ha. If it is >, as in our case, you shade the right-hand side (if Ha has a < sign, then shade the left-hand side). II) Determine whether the shaded side is a tail or not (the smaller side is called a tail). If it is, P = sig/2; if it is not a tail, then P = 1 − (sig/2).” When emailing the instructor, this is all I was told: For the p of a t test, if the shaded area according to your Ha is small, it is a tail (which is half of the two tails); if it is large, then it is 1 minus a tail.

So, when determining P of T test, how do I know whether to perform 1-(p/2) or just P/2

We use the software SPSS so P=sig in the instructions.

April 12, 2024 at 12:04 am

From your description, I can’t tell what you’re saying.

Tails are just the thin, extreme parts of the distribution. In this hypothesis testing context, shaded areas are called critical regions or rejection regions. You need to determine whether your t-value (or other test statistic) falls within a critical region. If it does, your results are significant and you reject the null. However that process doesn’t tell you the p-value. I think you’re mixing two different things. Here are a couple of posts I’ve written that will clarify the issues you asked about.

Finding the P-value

One and Two Tailed Hypothesis Tests Explained

January 10, 2024 at 3:08 pm

Happy New Year!

I have a few questions I was hoping you’d be able to help me with please?

In the case of a t-test, I know one assumption is that the DV should be the scale variable and the IV should be the categorical variable. I wondered if it mattered whether it was the other way around – so the scale variable was the IV and the categorical variable the DV. Would it make much difference? When I’ve done a t-test like this before, it doesn’t seem to, but I may be missing something.

Would it be better to recode the scale variable to a categorical variable and do a chi-square test?

Or does it just depend on what I am aiming to do. So whether I want to examine relationships or compare means?

Any advice would be appreciated.

January 10, 2024 at 5:34 pm

Hi Charlotte

Yes, you can do that in the opposite direction but you’ll need to use a different analysis.

If you have two groups based on a categorical variable and a continuous variable, you have a couple of choices:

You can use the 2-sample t-test as you suggest to determine whether the group means are different.

Or, you can use something like binary logistic regression to use the continuous variable to predict the outcome of the binary variable.

Typically, you’ll choose the one that makes the most sense for your subject area. If you think group assignment affects the mean outcome, use the t-test. However, if you think the continuous value of a variable predicts the outcome of the binary variable, use binary logistic regression.

I hope that helps!

October 11, 2023 at 5:40 am

Jim, When the input variable is continuous (such as speed) and the output variable is categorical (pass/ fail) I know that logistic regression should be done. However can a standard 2-sample t-test be done to determine if the mean input level is independent of result (pass or fail)? Can a standard deviations test also be done to determine if the spread on values for the input variable is independent of result?

October 6, 2023 at 5:23 am

This was really helpful. After reading it, conducting a T test analysis is almost like a walk in the park. Thanks!

October 6, 2023 at 6:41 pm

Thanks so much, Mark!

September 8, 2023 at 2:14 am

Thank you for your awesome work.

September 7, 2023 at 2:03 am

Your explanation is comprehensive even to non-statisticians

September 7, 2023 at 6:57 pm

Thanks so much, Daniel. So glad my blog post could help!

The Ultimate Guide to T Tests

Get all of your t test questions answered here

The t test is one of the simplest statistical techniques that is used to evaluate whether there is a statistical difference between the means from up to two different samples. The t test is especially useful when you have a small number of sample observations (under 30 or so), and you want to make conclusions about the larger population.

The characteristics of the data dictate the appropriate type of t test to run. All t tests are used as standalone analyses for very simple experiments and research questions as well as to perform individual tests within more complicated statistical models such as linear regression. In this guide, we’ll lay out everything you need to know about t tests, including providing a simple workflow to determine what t test is appropriate for your particular data or if you’d be better suited using a different model.

What is a t test?

A t test is a statistical technique used to quantify the difference between the mean (average value) of a variable from up to two samples (datasets). The variable must be numeric. Some examples are height, gross income, and amount of weight lost on a particular diet.

A t test tells you whether the difference you observe is “surprising” relative to the variability you would expect by chance. It uses a t-distribution to evaluate that expected variability. When you have a reasonably sized sample (over 30 or so observations), the t test can still be used, but tests based on the normal distribution (such as the z test) can be used in its place.

Sometimes t tests are called “Student’s” t tests, which is simply a reference to their unusual history.

The name arose because a brewer at the Guinness Brewery, William Gosset, published the method under the pseudonym "Student". He wanted to get information out of very small sample sizes (often 3-5) because it took so much effort to brew each keg for his samples.

When should I use a t test?

A t test is appropriate to use when you’ve collected a small, random sample from some statistical “population” and want to compare the mean from your sample to another value. The value for comparison could be a fixed value (e.g., 10) or the mean of a second sample.

For example, if your variable of interest is the average height of sixth graders in your region, then you might measure the height of 25 or 30 randomly-selected sixth graders. A t test could be used to answer questions such as, “Is the average height greater than four feet?”

How does a t test work?

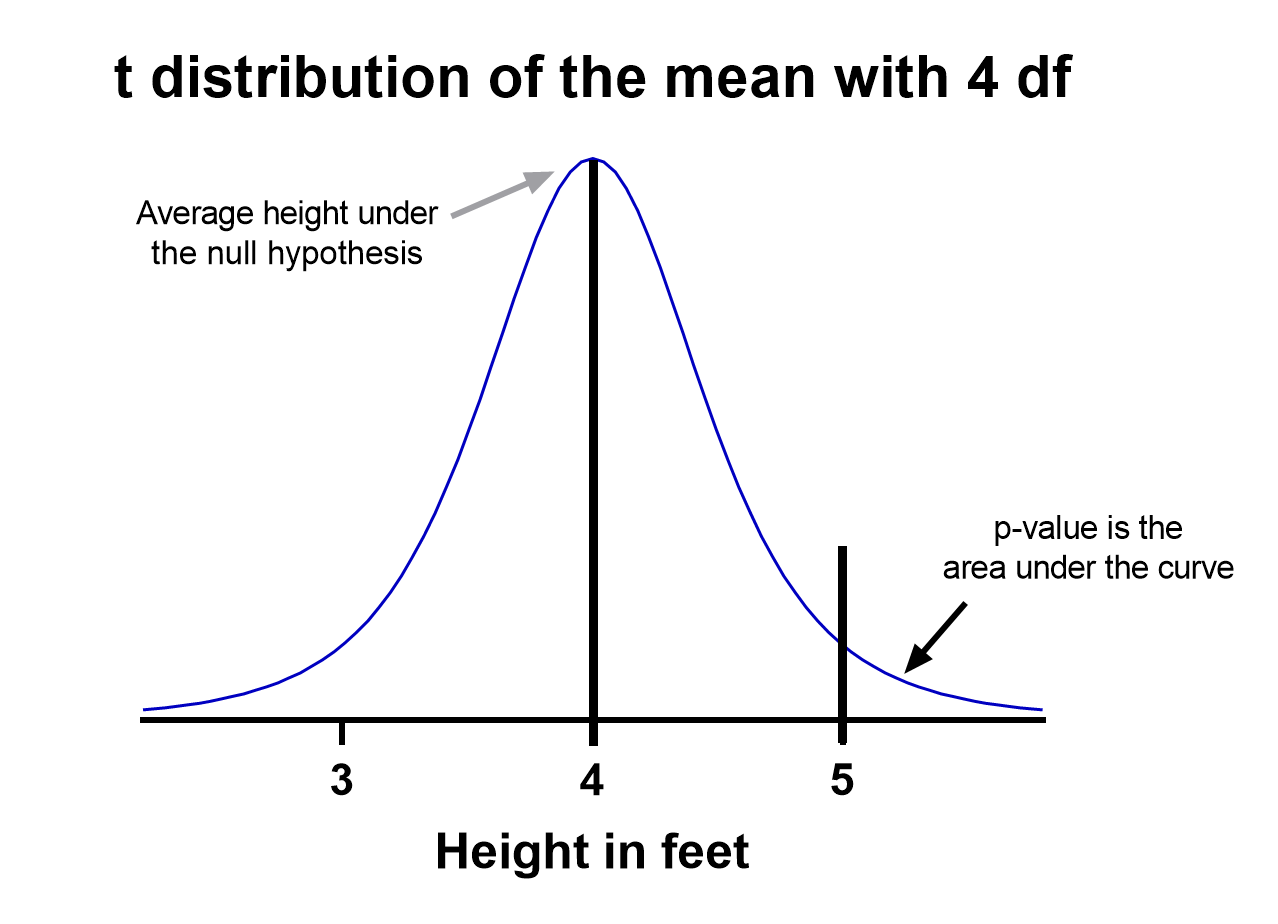

A t test makes enough assumptions about your experiment to calculate the variability you would expect in the sample mean, and then uses that to determine whether the observed data are statistically significant. To do this, t tests rely on an assumed “null hypothesis.” With the above example, the null hypothesis is that the average height is less than or equal to four feet.

Say that we measure the height of 5 randomly selected sixth graders and the average height is five feet. Does that mean that the “true” average height of all sixth graders is greater than four feet or did we randomly happen to measure taller than average students?

To evaluate this, we need a distribution that shows every possible average value resulting from a sample of five individuals in a population where the true mean is four. That may seem impossible to do, which is why there are particular assumptions that need to be made to perform a t test.

With those assumptions, then all that’s needed to determine the “sampling distribution of the mean” is the sample size (5 students in this case) and standard deviation of the data (let’s say it’s 1 foot).

That’s enough to create a graphic of the distribution of the mean, which is:

Notice the vertical line at x = 5, which was our sample mean. We (use software to) calculate the area to the right of the vertical line, which gives us the P value (0.09 in this case). Note that because our research question asks whether the average height is greater than four feet, the distribution is centered at four. Since we’re only interested in knowing whether the average is greater than four feet, we use a one-tailed test in this case.

Using the standard significance level of 0.05 with this example, we don’t have evidence that the true average height of sixth graders is greater than four feet.
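The tail-area calculation described above can be sketched with the Python standard library alone. This is a minimal illustration, not the method used to draw the original graphic: the helper names `t_pdf` and `upper_tail` are made up for this sketch, and the tail area is obtained by numerically integrating the t density with Simpson's rule. With exactly the inputs stated in the text (n = 5, sample mean 5, null mean 4, standard deviation 1), the one-sided area works out to roughly 0.045 and the two-sided area to roughly 0.089, so the 0.09 quoted above looks closer to the two-sided figure. The graphic may have been drawn from slightly different inputs, which cannot be recovered here.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def upper_tail(t, df, steps=2000):
    """P(T >= t): Simpson's rule on [0, |t|], then symmetry of the density."""
    a = abs(t)
    h = a / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    tail = 0.5 - total * h / 3
    return tail if t >= 0 else 1 - tail

# Inputs taken from the sixth-grader example in the text.
n, sample_mean, null_mean, sd = 5, 5.0, 4.0, 1.0

se = sd / math.sqrt(n)                   # standard error of the mean
t_stat = (sample_mean - null_mean) / se  # how many SEs above the null mean
df = n - 1

p_one_sided = upper_tail(t_stat, df)     # area to the right of the line
p_two_sided = 2 * min(p_one_sided, 1 - p_one_sided)

print(f"t = {t_stat:.3f}, df = {df}")
print(f"one-sided p = {p_one_sided:.4f}, two-sided p = {p_two_sided:.4f}")
```

In practice a statistics package would normally do this step; the hand-rolled integration is only there to keep the sketch dependency-free.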

What are the assumptions for t tests?

- One variable of interest : This is not correlation or regression, where you are interested in the relationship between multiple variables. With a t test, you can have different samples, but they are all measuring the same variable (e.g., height).

- Numeric data: You are dealing with a list of measurements that can be averaged. This means you aren’t just counting occurrences in various categories (e.g., eye color or political affiliation).

- Two groups or fewer: If you have more than two samples of data, a t test is the wrong technique. You most likely need to try ANOVA.

- Random sample : You need a random sample from your statistical “population of interest” in order to draw valid conclusions about the larger population. If your population is so small that you can measure everything, then you have a “census” and don’t need statistics. This is because you don’t need to estimate the truth, since you have measured the truth without variability.

- Normally Distributed : The smaller your sample size, the more important it is that your data come from a normal (Gaussian, bell-shaped) distribution. If you have reason to believe that your data are not normally distributed, consider nonparametric t test alternatives. Normality matters less for larger samples (usually 25 or 30 observations, unless the data are heavily skewed). The reason is the Central Limit Theorem, which says that even if the distribution of your data is not normal, the distribution of the sample mean is approximately normal when the sample is large enough, so you can use a z-test rather than a t test.

How do I know which t test to use?

There are many types of t tests to choose from, but you don’t necessarily have to understand every detail behind each option.

You just need to be able to answer a few questions, which will lead you to pick the right t test. To that end, we put together this workflow for you to figure out which test is appropriate for your data.

Do you have one or two samples?

Are you comparing the means of two different samples, or comparing the mean from one sample to a fixed value? An example research question is, “Is the average height of my sample of sixth grade students greater than four feet?”

If you only have one sample of data, you can skip ahead to the one-sample t test section. Otherwise, your next step is to ask:

Are observations in the two samples matched up or related in some way?

This could be as before-and-after measurements of the same exact subjects, or perhaps your study split up “pairs” of subjects (who are technically different but share certain characteristics of interest) into the two samples. The same variable is measured in both cases.

If so, you are looking at some kind of paired samples t test . The linked section will help you dial in exactly which one in that family is best for you, either difference (most common) or ratio.

If you aren’t sure paired is right, ask yourself another question:

Are you comparing different observations in each of the two samples?

If the answer is yes, then you have an unpaired or independent samples t test. The two samples should measure the same variable (e.g., height), but are samples from two distinct groups (e.g., team A and team B).

The goal is to compare the means to see if the groups are significantly different. For example, “Is the average height of team A greater than team B?” Unlike paired, the only relationship between the groups in this case is that we measured the same variable for both. There are two versions of unpaired samples t tests (pooled and unpooled) depending on whether you assume the same variance for each sample.

Have you run the same experiment multiple times on the same subject/observational unit?

If so, then you have a nested t test (unless you have more than two sample groups). This is a trickier concept to understand. One example is if you are measuring how well Fertilizer A works against Fertilizer B. Let’s say you have 12 pots to grow plants in (6 pots for each fertilizer), and you grow 3 plants in each pot.

In this case you have 6 observational units for each fertilizer, with 3 subsamples from each pot. You would want to analyze this with a nested t test . The “nested” factor in this case is the pots. It’s important to note that we aren’t interested in estimating the variability within each pot, we just want to take it into account.

You might be tempted to run an unpaired samples t test here, but that assumes you have 6*3 = 18 replicates for each fertilizer. However, the three replicates within each pot are related, and an unpaired samples t test wouldn’t take that into account.
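To make the replicate-counting point concrete, here is a small sketch with made-up plant heights. It assumes a balanced design (the same number of plants in every pot) and uses one simple way of respecting the nesting: average the plants within each pot and treat the pot means as the observations. That is a simplification for illustration, not a description of what a nested t test in any particular software does internally.

```python
from statistics import mean

# Hypothetical plant heights (cm): 6 pots per fertilizer, 3 plants per pot.
fertilizer_a = [
    [20.1, 21.4, 19.8],
    [22.0, 21.1, 22.6],
    [19.5, 20.2, 19.9],
    [23.1, 22.4, 23.8],
    [21.0, 20.6, 21.9],
    [22.2, 21.7, 22.9],
]
fertilizer_b = [
    [18.9, 19.6, 18.2],
    [20.4, 19.8, 20.9],
    [19.0, 18.5, 19.7],
    [21.2, 20.5, 21.8],
    [18.4, 19.1, 18.8],
    [20.0, 19.3, 20.6],
]

# Collapse each pot to a single value so that the pot, not the plant,
# is the unit of replication.
pot_means_a = [mean(pot) for pot in fertilizer_a]
pot_means_b = [mean(pot) for pot in fertilizer_b]

n_plants_a = sum(len(pot) for pot in fertilizer_a)

print(f"plants measured per fertilizer: {n_plants_a}")
print(f"independent units per fertilizer: {len(pot_means_a)}")
print(f"mean of pot means, A: {mean(pot_means_a):.2f}  B: {mean(pot_means_b):.2f}")
```

The 18 plant measurements per fertilizer reduce to 6 independent values, which is why treating all 18 as replicates would overstate the amount of information in the data.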

What if none of these sound like my experiment?

If you’re not seeing your research question above, note that t tests are very basic statistical tools. Many experiments require more sophisticated techniques to evaluate differences. If the variable of interest is a proportion (e.g., 10 of 100 manufactured products were defective), then you’d use z-tests. If you take before and after measurements and have more than one treatment (e.g., control vs a treatment diet), then you need ANOVA.

How do I perform a t test using software?

If you’re wondering how to do a t test, the easiest way is with statistical software such as Prism or an online t test calculator .

If you’re using software, then all you need to know is which t test is appropriate (use the workflow above) and how to interpret the output. To do that, you’ll also need to:

- Determine whether your test is one or two-tailed

- Choose the level of significance

Is my test one or two-tailed?

Whether or not you have a one- or two-tailed test depends on your research hypothesis. Choosing the appropriately tailed test is very important and requires integrity from the researcher. This is because you have more “power” with one-tailed tests, meaning that you can detect a statistically significant difference more easily. Unless you have written out your research hypothesis as one directional before you run your experiment, you should use a two-tailed test.

Two-tailed tests

Two-tailed tests are the most common, and they are applicable when your research question is simply asking, “is there a difference?”

One-tailed tests

Contrast that with one-tailed tests, where the research questions are directional, meaning that either the question is, “is it greater than ” or the question is, “is it less than ”. These tests can only detect a difference in one direction.
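Because the t-distribution is symmetric, a one-tailed P value can be derived from a two-tailed one once you know the sign of the t statistic and the direction stated in the alternative hypothesis. The function below is a minimal sketch of that rule; the name `one_tailed_p` and its arguments are made up for this illustration.

```python
def one_tailed_p(p_two_tailed, t_stat, alternative):
    """Convert a two-tailed P value to a one-tailed one.

    alternative is "greater" (Ha: mean > null value) or
    "less" (Ha: mean < null value).
    """
    half = p_two_tailed / 2
    if alternative == "greater":
        # Data agree with the stated direction only when t is positive.
        return half if t_stat > 0 else 1 - half
    if alternative == "less":
        return half if t_stat < 0 else 1 - half
    raise ValueError("alternative must be 'greater' or 'less'")

# The same two-tailed result read under two different directional hypotheses.
print(one_tailed_p(0.04, 2.3, "greater"))  # observed effect in the stated direction
print(one_tailed_p(0.04, 2.3, "less"))     # observed effect in the opposite direction
```

When the observed effect points in the hypothesized direction, the one-tailed P value is half the two-tailed one, which is the extra "power" mentioned above. When it points the other way, the one-tailed P value is large and the test cannot be significant.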

Choosing the level of significance

All t tests estimate whether a mean of a population is different than some other value, and with all estimates come some variability, or what statisticians call “error.” Before analyzing your data, you want to choose a level of significance, usually denoted by the Greek letter alpha, 𝛼. The scientific standard is setting alpha to be 0.05.

An alpha of 0.05 results in 95% confidence intervals, and determines the cutoff for when P values are considered statistically significant.

One sample t test

If you only have one sample of a list of numbers, you are doing a one-sample t test. All you are interested in doing is comparing the mean from this group with some known value to test if there is evidence that it is significantly different from that standard. Use our free one-sample t test calculator for this.

A one sample t test example research question is, “Is the average fifth grader taller than four feet?”

It is the simplest version of a t test, and has all sorts of applications within hypothesis testing. Sometimes the “known value” is called the “null value”. While the null value in t tests is often 0, it could be any value. The name comes from being the value which exactly represents the null hypothesis, where no significant difference exists.

Any time you know the exact number you are trying to compare your sample of data against, this could work well. And of course: it can be either one or two-tailed.

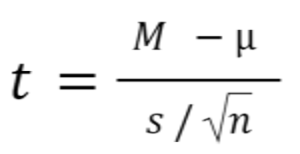

One sample t test formula

Statistical software handles this for you, but if you want the details, the formula for a one sample t test is:

$$ t = \frac{M - \mu}{s / \sqrt{n}} $$

- M: Calculated mean of your sample

- μ: Hypothetical mean you are testing against

- s: The standard deviation of your sample

- n: The number of observations in your sample.

In a one-sample t test, calculating degrees of freedom is simple: one less than the number of objects in your dataset (you’ll see it written as n-1 ).
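As a rough sketch of the calculation, the snippet below computes M, s, n, the t statistic, and the degrees of freedom using only Python's standard library. The twelve "% of control" values are invented for illustration and are not the dataset behind any software output discussed in this guide. The critical value 2.201 is the tabulated two-tailed 0.05 cutoff for 11 degrees of freedom and is hard-coded as an assumption of the sketch.

```python
import math
from statistics import mean, stdev

# Hypothetical "% of control" measurements (made up for this sketch).
values = [98, 123, 105, 117, 92, 120, 108, 130, 101, 114, 96, 116]
mu = 100  # null value: the industry-standard control

n = len(values)
m = mean(values)        # M: sample mean
s = stdev(values)       # s: sample standard deviation (n - 1 denominator)
se = s / math.sqrt(n)   # standard error of the mean

t_stat = (m - mu) / se
df = n - 1

# 95% confidence interval for the difference from the null value,
# using the tabulated critical value for df = 11.
t_crit = 2.201
ci_low = (m - mu) - t_crit * se
ci_high = (m - mu) + t_crit * se

print(f"M = {m}, s = {s:.2f}, n = {n}")
print(f"t = {t_stat:.2f}, df = {df}")
print(f"95% CI of the difference: {ci_low:.2f} to {ci_high:.2f}")
print("significant at 0.05" if abs(t_stat) > t_crit else "not significant at 0.05")
```

Comparing |t| with the critical value and checking whether the interval excludes zero are two views of the same decision.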

Example of a one sample t test

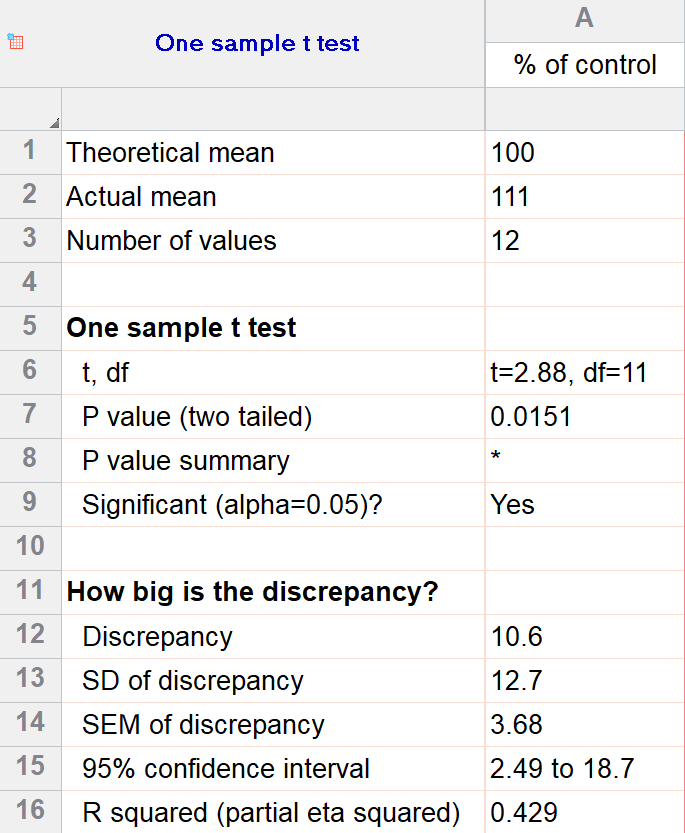

For our example within Prism, we have a dataset of 12 values from an experiment labeled “% of control”. Perhaps these are heights of a sample of plants that have been treated with a new fertilizer. A value of 100 represents the industry-standard control height. Likewise, 123 represents a plant with a height 123% that of the control (that is, 23% larger).

We’ll perform a two-tailed, one-sample t test to see if plants are shorter or taller on average with the fertilizer. We will use a significance threshold of 0.05. Here is the output:

You can see in the output that the actual sample mean was 111. Is that different enough from the industry standard (100) to conclude that there is a statistical difference?

The quick answer is yes, there’s strong evidence that the height of the plants with the fertilizer is greater than the industry standard (p=0.015). The nice thing about using software is that it handles some of the trickier steps for you. In this case, it calculates your test statistic (t=2.88), determines the appropriate degrees of freedom (11), and outputs a P value.

More informative than the P value is the confidence interval of the difference, which is 2.49 to 18.7. The confidence interval tells us that, based on our data, we are 95% confident that the true difference between the mean height of fertilized plants and the baseline value of 100 is somewhere between 2.49 and 18.7. Whenever the difference is statistically significant at the 0.05 level, the 95% interval will not contain zero.

You can follow these tips for interpreting your own one-sample test.

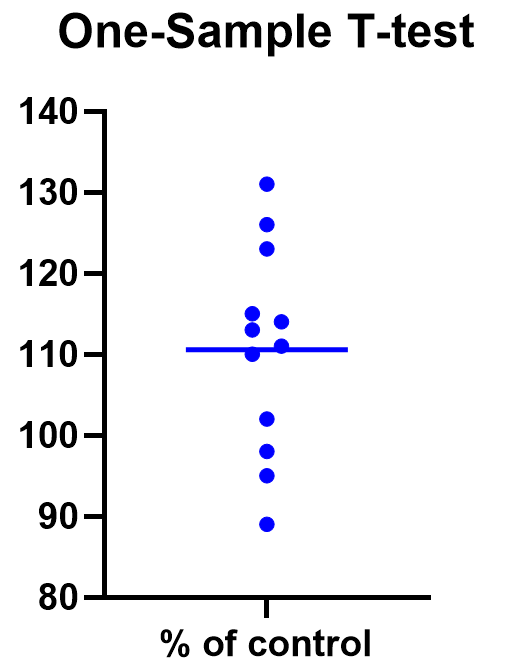

Graphing a one-sample t test

For some techniques (like regression), graphing the data is a very helpful part of the analysis. For t tests, making a chart of your data is still useful to spot any strange patterns or outliers, but the small sample size means you may already be familiar with any strange things in your data.

Here we have a simple plot of the data points, perhaps with a mark for the average. We’ve made this as an example, but the truth is that graphing is usually more visually telling for two-sample t tests than for just one sample.

Two sample t tests

There are several kinds of two sample t tests, with the two main categories being paired and unpaired (independent) samples.

Paired samples t test

In a paired samples t test, also called dependent samples t test, there are two samples of data, and each observation in one sample is “paired” with an observation in the second sample. The most common example is when measurements are taken on each subject before and after a treatment. A paired t test example research question is, “Is there a statistical difference between the average red blood cell counts before and after a treatment?”

Having two samples that are closely related simplifies the analysis. Statistical software, such as this paired t test calculator , will simply take a difference between the two values, and then compare that difference to 0.

In some (rare) situations, taking a difference between the pairs violates the assumptions of a t test, because the average difference changes based on the size of the before value (e.g., there’s a larger difference between before and after when there were more to start with). In this case, instead of using a difference test, use a ratio of the before and after values, which is referred to as ratio t tests .

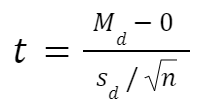

Paired t test formula

The formula for paired samples t test is:

$$ t = \frac{M_d - 0}{s_d / \sqrt{n}} $$

- Md: Mean difference between the samples

- sd: The standard deviation of the differences

- n: The number of differences

Degrees of freedom are the same as before. If you’re studying for an exam, you can remember that the degrees of freedom are still n-1 (not n-2) because we are converting the data into a single column of differences rather than considering the two groups independently.

Also note that the null value here is simply 0. There is no real reason to include “minus 0” in an equation other than to illustrate that we are still doing a hypothesis test. After you take the difference between the two means, you are comparing that difference to 0.
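The "single column of differences" idea can be sketched in a few lines. The before and after values below are invented for illustration (five subjects), and the variable names are assumptions of this sketch rather than anything from the original analysis.

```python
import math
from statistics import mean, stdev

# Hypothetical measurements on the same five subjects.
before = [4.2, 5.1, 3.8, 4.9, 4.5]
after = [5.6, 6.0, 5.9, 5.8, 6.4]

# Step 1: reduce the two columns to one column of differences.
differences = [a - b for a, b in zip(after, before)]

# Step 2: run a one-sample t test of the differences against 0.
n = len(differences)
m_d = mean(differences)    # Md: mean difference
s_d = stdev(differences)   # sd: standard deviation of the differences
t_stat = (m_d - 0) / (s_d / math.sqrt(n))
df = n - 1                 # n - 1, because only one column remains

print(f"mean difference = {m_d:.2f}, sd of differences = {s_d:.3f}")
print(f"t = {t_stat:.2f}, df = {df}")
```

Because the pairing removes subject-to-subject variation, the standard deviation that enters the denominator is that of the differences, not of the raw before or after values.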

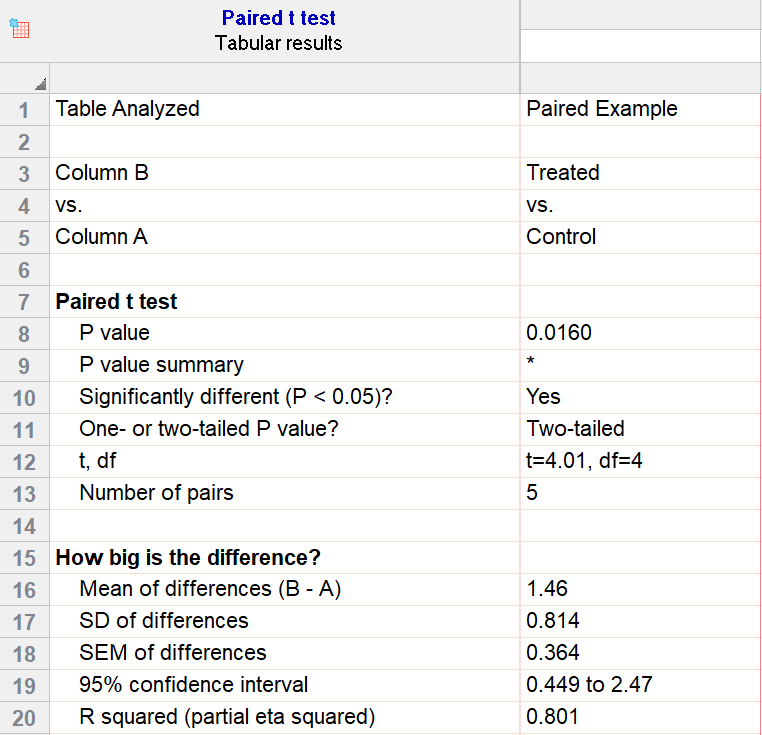

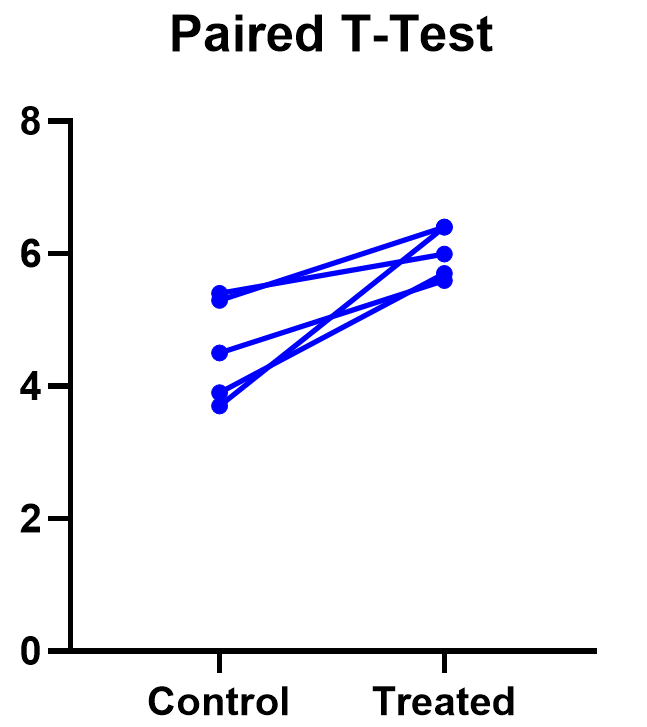

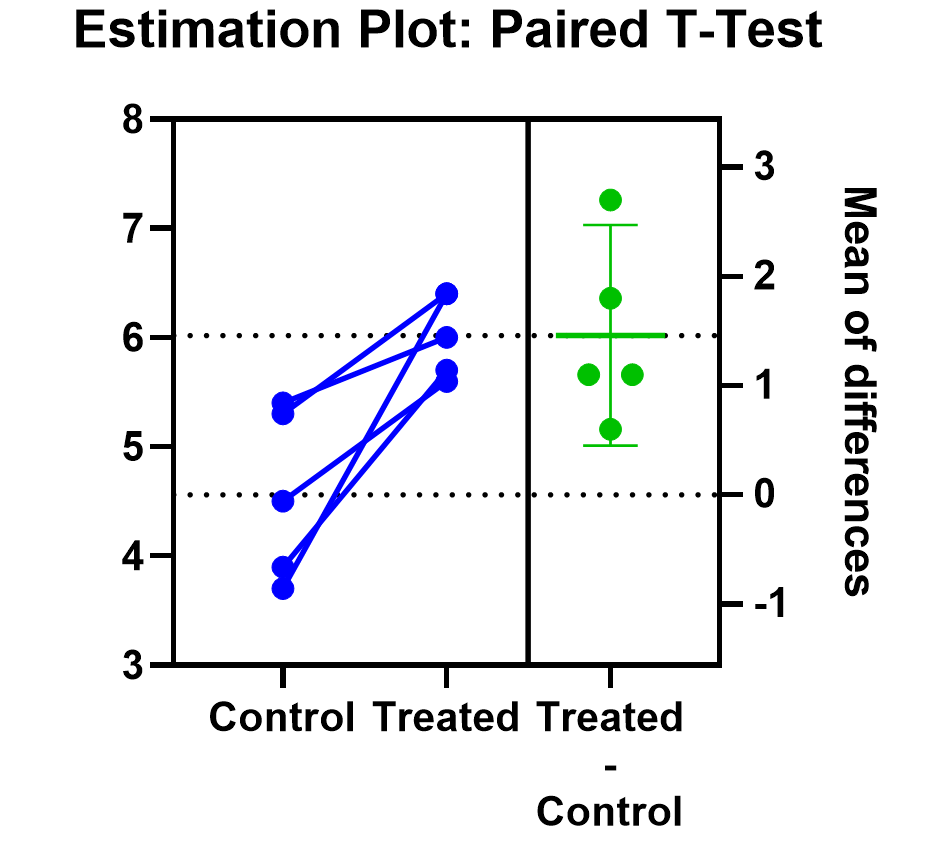

For our example data, we have five test subjects and have taken two measurements from each: before (“control”) and after a treatment (“treated”). If we set alpha = 0.05 and perform a two-tailed test, we observe a statistically significant difference between the treated and control group (p=0.0160, t=4.01, df = 4). We are 95% confident that the true mean difference between the treated and control group is between 0.449 and 2.47.

Graphing a paired t test

The significant result of the P value suggests evidence that the treatment had some effect, and we can also look at this graphically. The lines that connect the observations can help us spot a pattern, if it exists. In this case the lines show that all observations increased after treatment. While not all graphics are this straightforward, here it is very consistent with the outcome of the t test.

Prism’s estimation plot is even more helpful because it shows both the data (like above) and the confidence interval for the difference between means. You can easily see the evidence of significance since the confidence interval on the right does not contain zero.

Here are some more graphing tips for paired t tests .

Unpaired samples t test

Unpaired samples t test, also called independent samples t test, is appropriate when you have two sample groups that aren’t correlated with one another. A pharma example is testing a treatment group against a control group of different subjects. Compare that with a paired sample, which might be recording the same subjects before and after a treatment.

With unpaired t tests, in addition to choosing your level of significance and a one or two tailed test, you need to determine whether or not to assume that the variances between the groups are the same or not. If you assume equal variances, then you can “pool” the calculation of the standard error between the two samples. Otherwise, the standard choice is Welch’s t test which corrects for unequal variances. This choice affects the calculation of the test statistic and the power of the test, which is the test’s sensitivity to detect statistical significance.

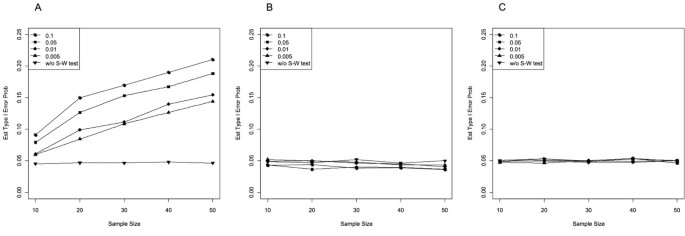

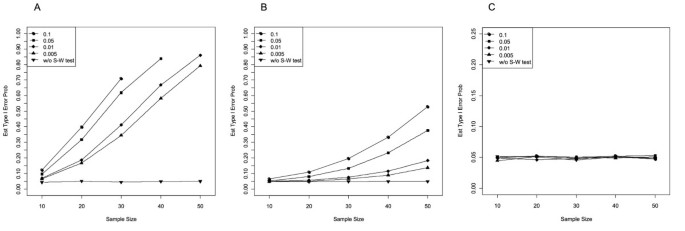

It’s best to choose whether you’ll use a pooled or unpooled (Welch’s) standard error before running your experiment, because the standard statistical test for comparing the two variances is notoriously problematic. See more details about unequal variances here.

As long as you’re using statistical software, such as this two-sample t test calculator , it’s just as easy to calculate a test statistic whether or not you assume that the variances of your two samples are the same. If you’re doing it by hand, however, the calculations get more complicated with unequal variances.

Unpaired (independent) samples t test formula

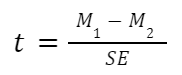

The general two-sample t test formula is:

$$ t = \frac{M_1 - M_2}{SE} $$

- M1 and M2: Two means you are comparing, one from each dataset

- SE : The combined standard error of the two samples (calculated using pooled or unpooled standard error)

The denominator (standard error) calculation can be complicated, as can the degrees of freedom. If the groups are not balanced (that is, they do not have the same number of observations), you will need to account for both sample sizes when determining n for the test as a whole.
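To show where the pooled and unpooled versions differ, here is a minimal sketch of both standard errors and their degrees of freedom. It uses the textbook pooled-variance formula and the Welch-Satterthwaite approximation for the unpooled case. The two groups are made-up data with 6 and 5 observations, and the function names are assumptions of this sketch.

```python
import math
from statistics import mean, variance

def pooled_se_and_df(x, y):
    """Standard error and df assuming equal variances (pooled)."""
    n1, n2 = len(x), len(y)
    sp2 = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2)
    return math.sqrt(sp2 * (1 / n1 + 1 / n2)), n1 + n2 - 2

def welch_se_and_df(x, y):
    """Standard error and approximate df without assuming equal variances."""
    v1, v2 = variance(x) / len(x), variance(y) / len(y)
    se = math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (len(x) - 1) + v2 ** 2 / (len(y) - 1))
    return se, df

# Hypothetical unbalanced groups.
group_a = [52, 61, 48, 70, 66, 57]
group_b = [49, 45, 58, 40, 53]

diff = mean(group_a) - mean(group_b)  # M1 - M2

pooled_se, pooled_df = pooled_se_and_df(group_a, group_b)
welch_se, welch_df = welch_se_and_df(group_a, group_b)

print(f"pooled: t = {diff / pooled_se:.2f}, df = {pooled_df}")
print(f"Welch:  t = {diff / welch_se:.2f}, df = {welch_df:.2f}")
```

The numerator is identical in both versions. Only the standard error and the degrees of freedom change, and Welch's degrees of freedom are generally not a whole number.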

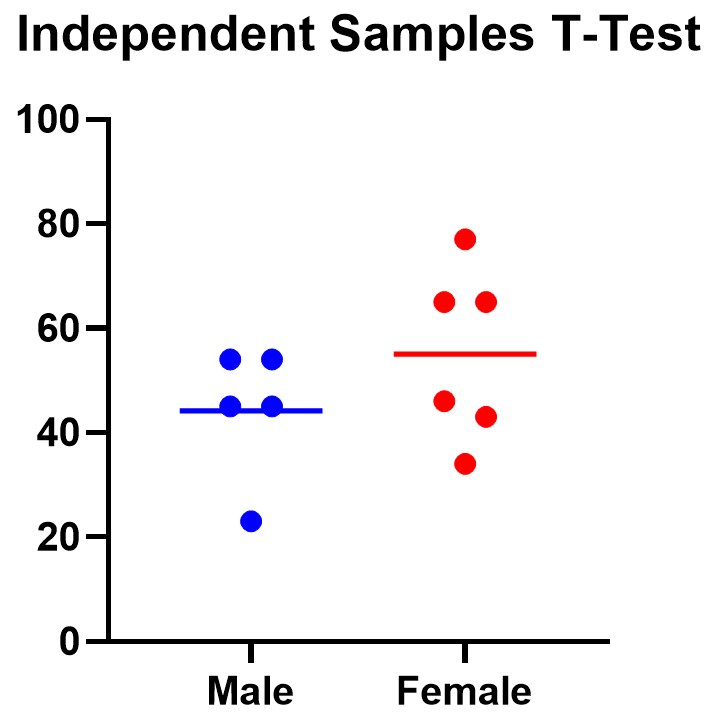

As an example for this family, we conduct an unpaired samples t test assuming equal variances (pooled). Based on our research hypothesis, we’ll conduct a two-tailed test, and use alpha=0.05 for our level of significance. Our samples were unbalanced, with 6 and 5 observations respectively.

The P value (p=0.261, t = 1.20, df = 9) is higher than our threshold of 0.05. We have not found sufficient evidence to suggest a significant difference. You can see the confidence interval of the difference of the means is -9.58 to 31.2.

Note that the F-test result shows that the variances of the two groups are not significantly different from each other.

Graphing an unpaired samples t test

For an unpaired samples t test, graphing the data can quickly help you get a handle on the two groups and how similar or different they are. Like the paired example, this helps confirm the evidence (or lack thereof) that is found by doing the t test itself.

Below you can see that the observed mean for females is higher than that for males. But because of the variability in the data, we can’t tell if the means are actually different or if the difference is just by chance.

Nonparametric alternatives for t tests

If your data comes from a normal distribution (or something close enough to a normal distribution), then a t test is valid. If that assumption is violated, you can use nonparametric alternatives.

T tests evaluate whether the mean is different from another value, whereas nonparametric alternatives compare either the median or the rank. Medians are well-known to be much more robust to outliers than the mean.

The downside to nonparametric tests is that they don’t have as much statistical power, meaning a larger difference is required in order to determine that it’s statistically significant.

Wilcoxon signed-rank test

The Wilcoxon signed-rank test is the nonparametric cousin to the one-sample t test. This compares a sample median to a hypothetical median value. It is sometimes even erroneously called the Wilcoxon t test (even though it calculates a “W” statistic).

And if you have two related samples, you should use the Wilcoxon matched pairs test instead. The two versions of Wilcoxon are different, and the matched pairs version is specifically for comparing the median difference for paired samples.

Mann-Whitney and Kolmogorov-Smirnov tests

For unpaired (independent) samples, there are multiple options for nonparametric testing. Mann-Whitney is more popular and compares the mean ranks (the ordering of values from smallest to largest) of the two samples. Mann-Whitney is often misrepresented as a comparison of medians, but that’s not always the case. Kolmogorov-Smirnov tests if the overall distributions differ between the two samples.
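The rank-based idea behind Mann-Whitney can be illustrated by computing its U statistic directly: for every pair made of one value from each sample, count which sample's value is larger. This is a bare-bones sketch on made-up data that stops at the statistic and does not compute a P value. The function name `mann_whitney_u` is an assumption of this sketch.

```python
def mann_whitney_u(x, y):
    """U statistic for x: number of (x_i, y_j) pairs with x_i > y_j.

    Ties count as half a pair.
    """
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u

# Hypothetical independent samples.
group_a = [52, 61, 48, 70, 66, 57]
group_b = [49, 45, 58, 40, 53]

u_a = mann_whitney_u(group_a, group_b)
u_b = mann_whitney_u(group_b, group_a)

# The two U values always add up to the total number of pairs.
n_pairs = len(group_a) * len(group_b)
print(f"U_a = {u_a}, U_b = {u_b}, pairs = {n_pairs}")
print(f"share of pairs where A is larger: {u_a / n_pairs:.2f}")
```

Only the ordering of the values matters here, not their magnitudes, which is why the test is robust to outliers and also why it is not simply a comparison of medians.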

More t test FAQs

What is the formula for a t test?

The exact formula depends on which type of t test you are running, although there is a basic structure that all t tests have in common. All t test statistics will have the form:

$$ t = \frac{\text{Mean}_1 - \text{Mean}_2}{\text{Standard Error of the Mean}} $$

- t : The t test statistic you calculate for your test

- Mean1 and Mean2: Two means you are comparing, at least 1 from your own dataset

- Standard Error of the Mean : The standard error of the mean , also called the standard deviation of the mean, which takes into account the variance and size of your dataset

The exact formula for any t test can be slightly different, particularly the calculation of the standard error. Not only does it matter whether one or two samples are being compared, the relationship between the samples can make a difference too.

What is a t-distribution?

A t-distribution is similar to a normal distribution. It’s a bell-shaped curve, but compared to a normal it has fatter tails, which means that it’s more common to observe extremes. T-distributions are identified by the number of degrees of freedom. The higher the number, the closer the t-distribution gets to a normal distribution. After about 30 degrees of freedom, a t and a standard normal are practically the same.
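The "fatter tails" claim can be checked numerically. The sketch below compares the two-sided area beyond ±2 under t-distributions with a few different degrees of freedom against the same area under a standard normal. It relies only on the standard library: the t tail area is obtained by Simpson's rule integration of the density, and the helper names are made up for this illustration.

```python
import math
from statistics import NormalDist

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_tail(cutoff, df, steps=2000):
    """P(|T| > cutoff) for cutoff > 0, via Simpson's rule on [0, cutoff]."""
    h = cutoff / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(cutoff, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1 - 2 * (total * h / 3)

cutoff = 2.0
normal_tail = 2 * (1 - NormalDist().cdf(cutoff))
tails = {df: two_sided_tail(cutoff, df) for df in (2, 5, 30, 100)}

for df, area in tails.items():
    print(f"df = {df:>3}: P(|T| > {cutoff}) = {area:.4f}")
print(f"normal : P(|Z| > {cutoff}) = {normal_tail:.4f}")
```

The tail area shrinks toward the normal value as the degrees of freedom increase, and by 30 degrees of freedom the gap is already under one percentage point.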

What are degrees of freedom?

Degrees of freedom are a measure of how large your dataset is. They aren’t exactly the number of observations, because they also take into account the number of parameters (e.g., mean, variance) that you have estimated.

What is the difference between paired vs unpaired t tests?

Both paired and unpaired t tests involve two sample groups of data. With a paired t test, the values in each group are related (usually they are before and after values measured on the same test subject). In contrast, with unpaired t tests, the observed values aren’t related between groups. An unpaired, or independent t test, example is comparing the average height of children at school A vs school B.

When do I use a z-test versus a t test?

Z-tests, which compare data using a normal distribution rather than a t-distribution, are primarily used for two situations. The first is when you’re evaluating proportions (e.g., the proportion of failures on an assembly line). The second is when your sample size is large enough (usually around 30) that you can use a normal approximation to evaluate the means.

When should I use ANOVA instead of a t test?

Use ANOVA if you have more than two group means to compare.

What are the differences between t test vs chi square?

Chi square tests are used to evaluate contingency tables, which record a count of the number of subjects that fall into particular categories (e.g., truck, SUV, car). t tests compare the mean(s) of a variable of interest (e.g., height, weight).

What are P values?

P values are the probability that you would get data as or more extreme than the observed data given that the null hypothesis is true. It’s a mouthful, and there are a lot of issues to be aware of with P values.
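As a sketch of what "as or more extreme" means for a t test, the code below computes a two-sided P value by numerically integrating the t density with Simpson's rule. Statistical software uses more refined routines; the function names, the step count, and the example inputs here are all assumptions made for illustration.

```python
import math

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))

def two_sided_p(t_stat, df, steps=2000):
    """P(|T| >= |t_stat|) under the null hypothesis (steps must be even)."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps
    # Simpson's rule for the area under the density between 0 and |t_stat|.
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    half_central_area = total * h / 3
    # Whatever is not in the central region lies in the two tails.
    return max(0.0, 1.0 - 2.0 * half_central_area)

p_value = two_sided_p(2.5, df=12)   # hypothetical t statistic and df
print(f"two-sided P value: {p_value:.4f}")
```

The result is the total area in both tails beyond the observed statistic, which is the probability described above for a two-sided test.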

What are t test critical values?

Critical values are a classical form (they aren’t used directly with modern computing) of determining if a statistical test is significant or not. Historically you could calculate your test statistic from your data, and then use a t-table to look up the cutoff value (critical value) that represented a “significant” result. You would then compare your observed statistic against the critical value.

How do I calculate degrees of freedom for my t test?

In most practical usage, degrees of freedom are the number of observations you have minus the number of parameters you are trying to estimate. The calculation isn’t always straightforward and is approximated for some t tests.

Statistical software calculates degrees of freedom automatically as part of the analysis, so understanding them in more detail isn’t needed beyond assuaging any curiosity.
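For the curious, the sketch below shows three common degrees-of-freedom calculations. The one-sample and pooled formulas are simple counts; the Welch-Satterthwaite formula is the usual example of an approximated value. The function names and data are made up for illustration.

```python
import statistics

def df_one_sample(n):
    """One mean is estimated, so one degree of freedom is used up."""
    return n - 1

def df_pooled(n1, n2):
    """Two means are estimated in the equal-variance two-sample test."""
    return n1 + n2 - 2

def df_welch(sample1, sample2):
    """Welch-Satterthwaite approximation; usually not a whole number."""
    v1 = statistics.variance(sample1) / len(sample1)
    v2 = statistics.variance(sample2) / len(sample2)
    return (v1 + v2) ** 2 / (
        v1 ** 2 / (len(sample1) - 1) + v2 ** 2 / (len(sample2) - 1)
    )

# Made-up samples with different sizes and spreads.
small_spread = [1, 2, 3, 4, 5, 6, 7, 8]
large_spread = [10, 30, 50]

print(df_one_sample(len(small_spread)))
print(df_pooled(len(small_spread), len(large_spread)))
print(round(df_welch(small_spread, large_spread), 2))
```

When the two samples have equal sizes and equal variances, the Welch value coincides with the pooled count; otherwise it is smaller.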



Student’s t-test



Student’s t-test, in statistics, a method of testing hypotheses about the mean of a small sample drawn from a normally distributed population when the population standard deviation is unknown.

In 1908 William Sealy Gosset, an Englishman publishing under the pseudonym Student, developed the t-test and t distribution. (Gosset worked at the Guinness brewery in Dublin and found that existing statistical techniques using large samples were not useful for the small sample sizes that he encountered in his work.) The t distribution is a family of curves in which the number of degrees of freedom (the number of independent observations in the sample minus one) specifies a particular curve. As the sample size (and thus the degrees of freedom) increases, the t distribution approaches the bell shape of the standard normal distribution. In practice, for tests involving the mean of a sample of size greater than 30, the normal distribution is usually applied.

It is usual first to formulate a null hypothesis, which states that there is no effective difference between the observed sample mean and the hypothesized or stated population mean; that is, any measured difference is due only to chance. In an agricultural study, for example, the null hypothesis could be that an application of fertilizer has had no effect on crop yield, and an experiment would be performed to test whether it has increased the harvest. In general, a t-test may be either two-sided (also termed two-tailed), stating simply that the means are not equivalent, or one-sided, specifying whether the observed mean is larger or smaller than the hypothesized mean. The test statistic t is then calculated. If the observed t-statistic is more extreme than the critical value determined by the appropriate reference distribution, the null hypothesis is rejected. The appropriate reference distribution for the t-statistic is the t distribution. The critical value depends on the significance level of the test (the probability of erroneously rejecting the null hypothesis).

A second application of the t distribution tests the hypothesis that two independent random samples have the same mean. The t distribution can also be used to construct confidence intervals for the true mean of a population (the first application) or for the difference between two population means (the second application). See also interval estimation.
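A confidence interval for a population mean can be sketched as the sample mean plus or minus a critical value times the standard error. In the example below the function name and the crop-yield figures are made up, and the critical value is passed in by hand from a t table rather than computed.

```python
import math
import statistics

def mean_confidence_interval(sample, t_critical):
    """Return (lower, upper) for the population mean: mean +/- t* x SEM."""
    n = len(sample)
    mean = statistics.mean(sample)
    sem = statistics.stdev(sample) / math.sqrt(n)
    margin = t_critical * sem
    return mean - margin, mean + margin

# Made-up crop yields (tonnes per hectare) from 10 plots.
yields = [4.2, 3.9, 4.5, 4.1, 4.8, 4.0, 4.4, 4.3, 3.8, 4.6]

# 2.262 is the tabulated two-sided 95% critical value for 9 degrees of freedom.
low, high = mean_confidence_interval(yields, t_critical=2.262)
print(f"95% CI for the mean yield: ({low:.2f}, {high:.2f})")
```

A different sample size or confidence level needs a different critical value, which is why it is left as a parameter here.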


Commonly Used t-tests in Medical Research

Pandey, R. M.

Department of Biostatistics, All India Institute of Medical Sciences, New Delhi, India

Address for correspondence: Dr. R.M. Pandey, Department of Biostatistics, All India Institute of Medical Sciences, New Delhi, India. E-mail: [email protected]

This is an open access journal, and articles are distributed under the terms of the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License, which allows others to remix, tweak, and build upon the work non-commercially, as long as appropriate credit is given and the new creations are licensed under the identical terms.

Student's t-test is a method of testing hypotheses about the mean of a small sample drawn from a normally distributed population when the population standard deviation is unknown. In 1908 William Sealy Gosset, an Englishman publishing under the pseudonym Student, developed the t-test. This article discusses the types of t-test and shows a simple way of performing one.

INTRODUCTION

To draw conclusions about a population parameter (the true value of a quantity in the population) from the information contained in a sample, two approaches to statistical inference are used: confidence intervals and hypothesis testing. A confidence interval gives a range of results likely to be obtained, usually 95% of the time. Hypothesis testing assesses how often the observed finding could be due to chance alone; this is reported as the P value, the probability of obtaining a result at least as extreme as the one observed if the null hypothesis is true. Statistical tests used for hypothesis testing are broadly classified into two groups: parametric tests and nonparametric tests. In parametric tests, some assumption is made about the distribution of the population from which the sample is drawn; for the parametric tests discussed here, the quantitative variable is assumed to be normally distributed in the population. Because one does not have access to the population values, the assumption of normality is assessed using the sample values. Nonparametric statistical methods, also known as distribution-free methods or rank-based methods, make no assumption about the distribution of the variable in the population.

The family of t-tests falls in the category of parametric statistical tests in which one or more mean values are compared against a hypothesized value. In hypothesis testing of any statistic (summary measure), such as a mean or a proportion, the hypothesized value of the statistic is specified but the population variance is not, so the only available information about variability comes from the sample. Therefore, the sample standard deviation is used to compute the standard error (the measure of variability of the statistic of interest, which appears in the denominator of the test statistic). William Sealy Gosset, a chemist working for a brewery in Dublin, Ireland, introduced the t-statistic. Company policy did not allow chemists to publish their findings, so Gosset published his mathematical work under the pseudonym “Student.” The Student's t-test was published in the journal Biometrika in 1908.[1, 2]

In medical research, t-tests and Chi-square tests are the two types of statistical tests most commonly used. In any hypothesis testing situation, if the test statistic follows a Student's t-distribution under the null hypothesis, the test is a t-test. The most frequently used t-tests are those for the mean of a single sample, for two related samples, for two unrelated samples, and for testing a correlation coefficient or regression coefficient against a hypothesized value, which is usually zero. The one-sample location test checks whether the population mean has the value specified in the null hypothesis. The two-independent-sample location test checks the equality of the means of two populations. The paired t-test, also known as the repeated-measures t-test, compares the mean delta (the mean difference between two related samples) against a hypothesized value of zero. The regression t-test checks whether the slope of a regression line differs significantly from zero. For a binary variable (such as cure, relapse, hypertension, or diabetes), which is either yes or no for each subject, coding yes as 1 and no as 0 and treating this as a score attached to each study subject makes the sample proportion (p) equal to the sample mean. Therefore, the t-test approach for a mean can be used for a proportion as well.
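The point about binary variables can be checked directly. In the sketch below the outcome data, the hypothesized proportion of 0.5, and the function name are all made up for illustration; it simply applies the one-sample t statistic to 0/1 scores.

```python
import math
import statistics

# Made-up outcomes for 10 subjects: 1 = cured, 0 = not cured.
outcomes = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]

proportion = sum(outcomes) / len(outcomes)   # share of subjects coded 1
mean_score = statistics.mean(outcomes)       # mean of the 0/1 scores

def one_sample_t_for_proportion(scores, hypothesized_proportion):
    """One-sample t statistic computed on 0/1 scores."""
    sem = statistics.stdev(scores) / math.sqrt(len(scores))
    return (statistics.mean(scores) - hypothesized_proportion) / sem

t_stat = one_sample_t_for_proportion(outcomes, 0.5)
print(proportion, mean_score, round(t_stat, 3))
```

The proportion and the mean score are the same number, so the machinery for testing a mean carries over unchanged.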

The focus here is on describing the situations in which a particular t-test would be used. These are divided into t-tests for: (a) the mean/proportion in one sample, (b) the mean/proportion in two unrelated samples, (c) the mean/proportion in two related samples, (d) a correlation coefficient, and (e) a regression coefficient. The process of hypothesis testing is the same for any statistical test: formulating the null and alternate hypotheses; identifying and computing the test statistic from the sample values; deciding on the alpha level and on a one-tailed or two-tailed test; and rejecting or failing to reject the null hypothesis by comparing the computed test statistic with the theoretical value of “t” from the t-distribution table for the given degrees of freedom. In hypothesis testing, the P value is reported as a threshold, for example P < 0.05. In significance testing, however, the exact P value is reported so that the reader is in a better position to judge the level of statistical significance.

- t-test for one sample: For example, in a random sample of 30 hypertensive males, the observed mean body mass index (BMI) is 27.0 kg/m² and the standard deviation is 4.0 kg/m². Suppose it is also known that the mean BMI in nonhypertensive males is 25 kg/m². The question is whether these 30 observations could have come from a population with a mean of 25 kg/m². To answer it, a one-sample t-test is used with the null hypothesis H0: Mean = 25 against the alternate hypothesis H1: Mean ≠ 25. Because the standard deviation of the hypothesized population is not known, the t-test is appropriate; otherwise, a Z-test would have been used.