- A-Z Commands

- Privacy Policy

- Terms & Conditions

- Google News

Top 10 Best Open Source Speech Recognition Tools for Linux

Speech is a popular and smart method in modern time to make interaction with electronic devices. As we know, there are many open source speech recognition tools available on different platforms. From the beginning of this technology, it has been improved simultaneously in understanding the human voice. This is the reason; it has now engaged a lot of professionals than before. The technical advancement is strong enough to make it more clear to the common people.

Open Source Speech Recognition Tools

Open source voice recognition tool is not much available like the typical software we use in our daily lives in Linux platform. After a long way of research, we found some well-featured applications for you with a short description. Let’s have a look at the points below!

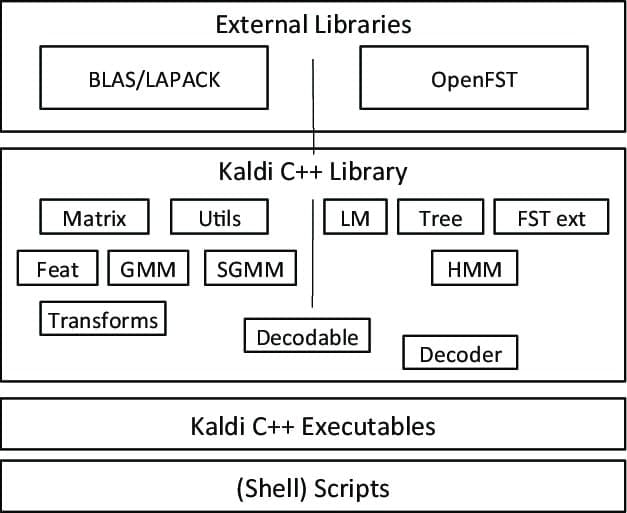

Kaldi is a special kind of speech recognition software, started as a part of a project at John Hopkins University. This toolkit comes with an extensible design and written in C++ programming language. It provides a flexible and comfortable environment to its users with a lot of extensions to enhance the power of Kaldi.

Noteworthy Features of Kaldi

- A free and flexible open source voice recognition application, under the Apache license.

- Runs on multiple platforms, including GNU/Linux , BSD, and Microsoft Windows.

- Provides support to install and configure the application to your system.

- Besides the speech recognition system, it also supports deep neural networks and linear transforms.

2. CMUSphinx

CMUS Sphinx comes with a group of featured-enriched systems with several pre-built packages related to speech recognition. It is an open source program , developed at Carnegie Mellon University. You will get this speaker-independent recognition tool in several languages, including French, English, German, Dutch, and more.

Noteworthy Features of CMUSphinx

- It is an easy-to-use and fast speech recognition system with a user-friendly interface.

- Comes with a flexible design and efficient system, even in low resource platforms.

- Provides acoustic model training tools through its Sphinxtrain package.

- Helps to perform different types of tasks through its helpful packages, including keyword spotting, pronunciation evaluation, alignment, and more.

- It is a cross-platform tool that supports both Windows and Linux systems.

Get CMUSphinx

3. DeepSpeech

DeepSpeech is an open source speech recognition engine to convert your speech to text. It is a free application by Mozilla. To run DeepSearch project to your device, you will need Python 3.r or above. Also, it needs a Git extension file, namely Git Large File Storage. It is used for versioning large files while you run it to your system.

Noteworthy Features of DeepSpeech

- DeepSpeech uses TensorFlow framework to make the voice transformation more comfortable.

- It supports NVIDIA GPU, which helps to perform quicker inference.

- You can use the DeepSearch inference in three different ways; The Python package, Node.JS package, or Command-line client .

- Each time you want to run this software to your system, you’ll need to activate the virtual environment by Python command.

- It needs a Linux or Mac environment to run this application.

Get DeepSpeech

4. Wav2Letter++

WavLetter++ is a modern and popular speech recognition tool, developed by the Facebook AI Research team. It is another open source program under the BCD license. This superfast voice recognition software was built in C++ and introduced with a lot of features. It provides the facility of language modeling, machine translation, speech synthesis, and more to its users in a flexible environment.

Noteworthy Features of Wav2Letter++

- It contains an active community in popular platforms like Facebook and Google group to assist its users worldwide.

- WavLetter++ is a fast and flexible toolkit which uses ArrayFire tensor library for the maximum efficiency.

- It lets you work with a high-performance framework like wav2letter++, which helps to do a successful research and model tuning.

- Also, it provides complete documentation through the tutorial sections.

- In the recipes folder, you will get the detailed recipes for WSJ, Timit, and Librispeech.

Get Wav2Letter++

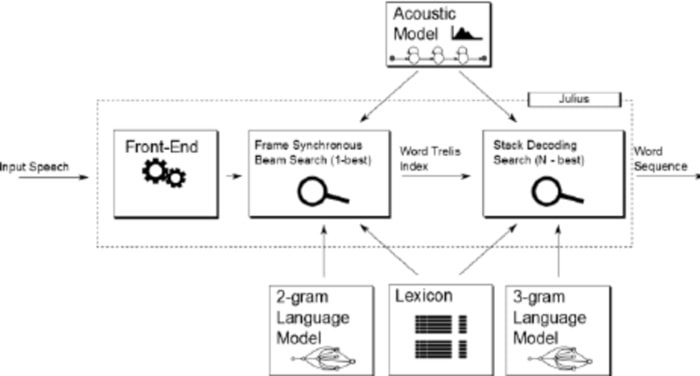

Julius is comparatively an older open source voice recognition software developed by Lee Akinobu. This tool is written in the C programming language by the developers of Kawahara Lab, Kyoto University. It is a high-performance speech recognition application having a large vocabulary. You can use it in both English and Japanese languages. It can be a great choice if you want to use it for academic and research purposes.

Noteworthy Features of Julius

- Julius is a highly configurable application that can set different search parameters to tune its performance.

- This tool is based on a 2-pass strategy which provides you a real-time and high-quality performance.

- It is a cross-platform project that runs on Linux, BSD, Windows, and Android Systems.

- Integrated with Julian, a grammar-based recognition parser.

- Besides supporting rule-based grammar, it also provides Word graph output, Confidence scoring, GMM-based input rejection, and many more facilities.

Get Julius

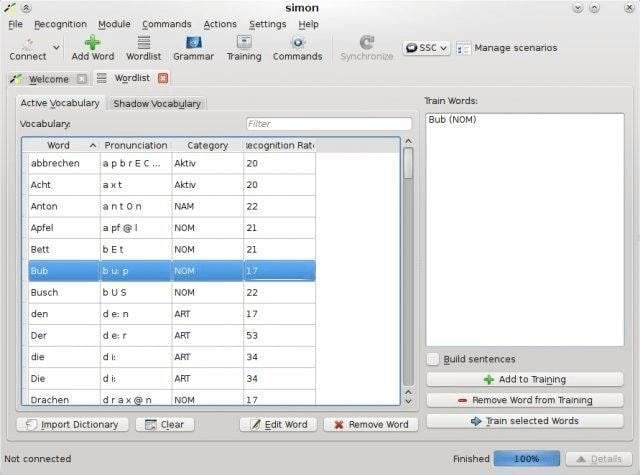

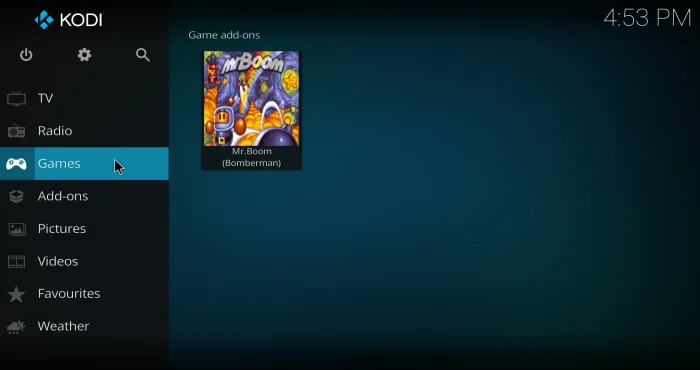

Simon comes with a modern and easy-to-use speech recognition software, developed by Peter Grasch. It is another open source program under the GNU General Public License. You are free to use Simon in both Linux and Windows systems. Also, it provides the flexibility to work with any language you want.

Noteworthy Features of Simon

- Using its voice-controlled calculator, Simon provides the facility to do various arithmetic operations.

- Compatible with Skype and other popular VOIP programs to establish an easy communication system with friends and relatives.

- It allows users to watch slide shows and videos, listen to music , and more with a few simple voice commands.

- Also, it is an essential tool in reading newspapers and surfing the internet.

Mycroft comes with an easy-to-use open source voice assistant for converting voice to text. It is regarded as one of the most popular Linux speech recognition tools in modern time, written in Python. It allows users to make the best use of this tool in a science project or enterprise software application. Also, it can be used as a practical assistant, that can tell you the time, date, weather, and more like these.

Noteworthy Features of Mycroft

- Integrated with the most popular social media and professional platforms, including Facebook, Github , LinkedIn, and more.

- You can run this application on different software and hardware platforms. It can be a desktop or a Raspberry Pi .

- Besides being a smart voice assistant, it provides the facility of the audio record, machine learning, software library, and more.

- It lets users convert the natural language to machine-readable data through Adapt, an intent parser of Mycroft.

Get Mycroft

8. OpenMindSpeech

Open Mind Speech is one of the essential Linux speech recognition tools aims to convert your speech to text for free. It is a part of Open Mind Initiative, runs its operation, especially for developers. This program was introduced with different names like VoiceControl, SpeechInput, and FreeSpeech before getting the present name.

Noteworthy Features of OpenMindSpeech

- It uses the Overflow environment in the voice recognition operation to make the complex applications flexible.

- Open Mind Speech is mostly compatible with Linux and UNIX-based platforms.

- Using the internet, it can collect speech data from e-citizens, who are the contributors of raw data.

Get OpenMindSpeech

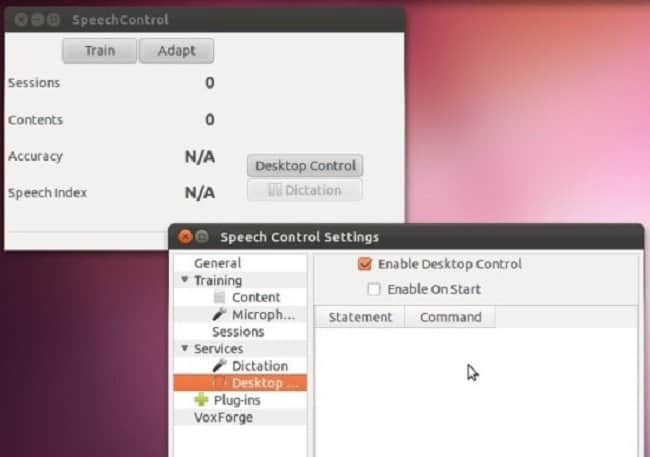

9. SpeechControl

Speech Control is a free speech recognition application, suitable for any Ubuntu distro. It comes with a graphical user interface based on Qt. Though it is still in its early development stage, you can use it for your simple project.

Noteworthy Features of SpeechControl

- Speech Control is an open source program under the General Public License (GPL).

- It aims to work as a virtual assistant that provides repetitive task guidance to execute the process smoothly.

- It is mostly suitable for Linux-based platforms.

- Also, provides easy-to-understand user documentation with project details.

Get SpeechControl

10. Deepspeech.pytorch

Deepspeech.pytorch is another mentionable open source speech recognition application which is ultimately implementation of DeepSpeech2 for PyTorch. It contains a set of powerful networks based DeepSpeech2 architecture. With many helpful resources, it can be used as one of the essential Linux speech recognition tools for research and project development.

Noteworthy Features of Deepspeech.pytorch

- Supports noise augmentation that helps to increase robustness at the time of loading audio.

- To send the post request to the server, it provides a basic server script.

- Support several datasets for downloading, including TEDLIUM, AN4, Voxforge, and LibriSpeech.

- Lets you add noise into the training data through noise injection.

- Supports Visdom and Tensorboard for visualizing training on scientific experimentation.

Get Deepspeech.pytorch

Finishing Thoughts

So, we have reached the finishing point on open source speech recognition tools for Linux. Hope, you got comprehensive information regarding this topic. The above-mentioned applications are free, easy-to-use, and ready to be a part of your academic or personal project.

Which one do you prefer most? If you have any other choices, then don’t hesitate to let us know. Please do share this article with your community, if you get it helpful. Till then, have a nice time. Thanks!

I dont understand alot of this github stuff i just need a deb

i just want to talk to my computer

I frequently make live videos (usually streamed by Instagram or Facebook) and I would like to know if there is a software that can automatically transcribe what I say in these videos, like Youtube does automatically for subtitles. Anyone can help? Thanks

I’m searching for a simple speech recognition to create a variable to select audio files to play for a blind person. This lady only wants to listen to a Bible version called The Message Bible. Unfortunately it isn’t available in a manner that doesn’t require the User to respond to visual selections. I envision a simple command line file triggered by a variable created by her voice when she says something like “Goto the book of Psalms, chapter 23. (since Psalms is indexed by Psalm they would be inside folders marked as chapters.

LEAVE A REPLY Cancel reply

Save my name, email, and website in this browser for the next time I comment.

You May Like It!

11 best reference manager and bibliography tools for linux, visual studio code – a free and open source code editor for ubuntu, linux supported fastest supercomputer in the world is here- “the summit”, the 20 best to-do list apps for android device, trending now, the 20 best science apps for android device, 10 best vpn apps for iphone and ipad, ultimate maia gtk theme and icon packs for gnome and plasma, 15 best torrent clients for linux system, 15 best internet of things (iot) books you should read in 2024, linux or windows: 25 things to know while choosing the best platform, 5 most popular linux distros: which one is right for you, studio by creative fabrica: what’s so good, waiting for you, top 20 best cryptocurrency exchange platforms in 2024, 15 independent linux distros you should know in 2024.

© 2024. All Rights Reserved. Ubuntu is a registered trademark of Canonical Ltd . Proudly Hosted on Vultr .

The Linux Portal Site

13 Best Free Linux Speech Recognition Tools

Speech is an increasingly popular method of interacting with electronic devices such as computers, phones, tablets, and televisions. Speech is probabilistic, and speech engines are never 100% accurate. But technological advances have meant speech recognition engines offer better accuracy in understanding speech. The better the accuracy, the more likely customers will engage with this method of control. And, according to a study by Stanford University, the University of Washington and Chinese search giant Baidu, smartphone speech is three times quicker than typing a search query into a screen interface.

Witness the rise of intelligent personal assistants, such as Siri for Apple, Cortana for Microsoft, and Mycroft for Linux. The assistants use voice queries and a natural language user interface to attempt to answer questions, make recommendations, and perform actions without the requirement of keyboard input. And the popularity of speech to control devices is testament to dedicated products that have dropped in large quantities such as Amazon Echo. Speech recognition is also used in smart watches, household appliances, and in-car assistants. In-car applications have lots of mileage (excuse the pun). Some of the in-car applications include navigation, asking for weather forecasts, finding out the traffic situation ahead, and controlling elements of the car, such as the sunroof, windows, and music player.

The key challenge for developing speech recognition software, whether it’s used in a computer or another device, is that human speech is extremely complex. The software has to cope with varied speech patterns, and individuals’ accents. And speech is a dynamic process without clearly distinguished parts. Fortunately, technical advancements have meant it’s easier to create speech recognition tools. Powerful tools like machine learning and artificial intelligence, coupled with improved speech algorithms, have altered the way these tools are developed. You don’t need phoneme dictionaries. Instead, speech engines can employ deep learning techniques to cope with the complexities of human speech.

There aren’t that many speech recognition toolkits available, and some of them are proprietary software. Fortunately, there are some very exciting open source speech recognition toolkits available. These toolkits are meant to be the foundation to build a speech recognition engine.

This article highlights the best open source speech recognition software for Linux. The rating chart summarizes our verdict.

Let’s explore the 13 free speech recognition tools at hand. For each title we have compiled its own portal page with a full description and an in-depth analysis of its features.

This site uses Akismet to reduce spam. Learn how your comment data is processed .

What is really wrong with the license terms of HTK?

This clause is particularly damning:

2.2 The Licensed Software either in whole or in part can not be distributed or sub-licensed to any third party in any form.

…and nothing else matters…

Sadly my machine doesn’t have sufficient RAM on my graphics card to experiment with DeepSpeech. Any recommendations for a good GPU that works well with DeepSpeech?

Thanks for the comprehensive info regarding the open source tools. From the perspective of a visually impaired person, what I would like to know is which of these would be most suitable (now or in near future) for dictating to get text that could go into documents, e-mail, etc. Is that Simon?

Yes, Simon is very good for what you’re looking for. Most of the other open source speech recognition tools are not really aimed at a desktop user e.g. they are for academic research etc.

Is there any speech to text tool like Dragon Nat in linux? I work as a translator and I have it on windows but I wonder if there is something like that out there.

Baidu is required by Chinese laws to act, as and when demanded, as an arm of the Chinese Communist Party. Not sure I would trust a tool created by them.

I think you are jumping on the Hauwei bandwagon with absolutely no justification.

A few of the open source programs here are using speech recognition models based on Baidu DeepSpeech2. But the model is an approach, not a means of capturing data or doing anything else nefarious.

What concerns are you raising? The source code of the programs here (DeepSpeech etc) are open source, so you can see exactly what they are doing.

completely agree

This account is solely made for saying yes to other accounts called “john”

LinuxLinks doesn’t have accounts

Could Android speech recognition be ported to Linux desktop packages, since android is open source?

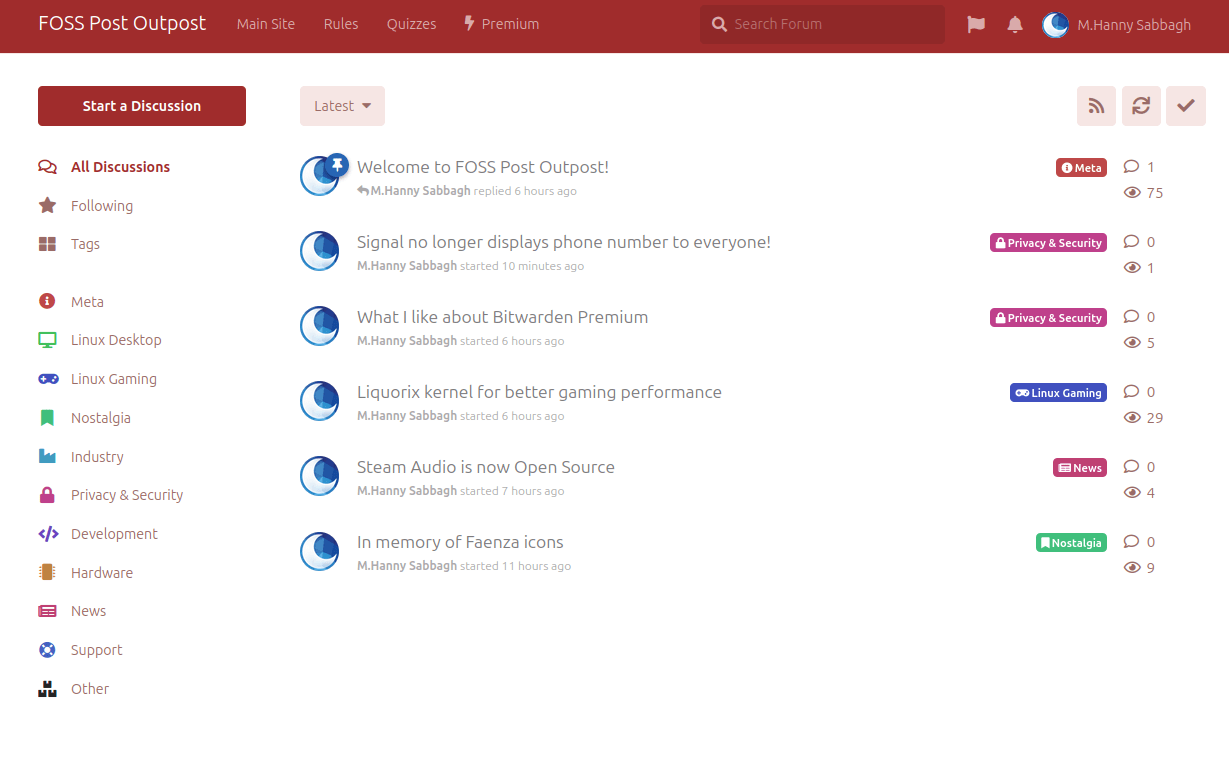

Top 11 Open Source Speech Recognition/Speech-to-Text Systems

Last Updated on: March 21, 2024

Table of Contents:

What is a Speech Recognition Library/System?

What is an open source speech recognition library, what are the benefits of using open source speech recognition, 1. project deepspeech, 4. flashlight asr (formerly wav2letter++), 5. paddlespeech (formerly deepspeech2), 6. openseq2seq, 10. whisper, 11. styletts2, what is the best open source speech recognition system.

A speech-to-text (STT) system , or sometimes called automatic speech recognition (ASR) is as its name implies: A way of transforming the spoken words via sound into textual data that can be used later for any purpose.

Speech recognition technology is extremely useful. It can be used for a lot of applications such as the automation of transcription, writing books/texts using sound only, enabling complicated analysis on information using the generated textual files and a lot of other things.

In the past, the speech-to-text technology was dominated by proprietary software and libraries. Open source speech recognition alternatives didn’t exist or existed with extreme limitations and no community around.

This is changing, today there are a lot of open source speech-to-text tools and libraries that you can use right now.

It is the software engine responsible for transforming voice to texts.

It is not meant to be used by end users. Developers will first have to adapt these libraries and use them to create computer programs that can enable speech recognition to users.

Some of them come with preloaded and trained dataset to recognize the given voices in one language and generate the corresponding texts, while others just give the engine without the dataset, and developers will have to build the training models themselves.

You can think of them as the underlying engines of speech recognition programs.

If you are an ordinary user looking for speech recognition, then none of these will be suitable for you, as they are meant for development use only.

The difference between proprietary speech recognition and open source speech recognition, is that the library used to process the voices should be licensed under one of the known open source licenses, such as GPL, MIT and others.

Microsoft and IBM for example have their own speech recognition toolkits that they offer for developers, but they are not open source. Simply because they are not licensed under one of the open source licenses in the market.

Mainly, you get few or no restrictions at all on the commercial usage for your application, as the open source speech recognition libraries will allow you to use them for whatever use case you may need.

Also, most – if not all – open source speech recognition toolkits in the market are also free of charge, saving you tons of money instead of using the proprietary ones.

The benefits of using open source speech recognition toolkits are indeed too many to be summarized in one article.

Top Open Source Speech Recognition Systems

In our article we’ll see a couple of them, what are their pros and cons and when they should be used.

This project is made by Mozilla, the organization behind the Firefox browser.

It’s a 100% free and open source speech-to-text library that also implies the machine learning technology using TensorFlow framework to fulfill its mission. In other words, you can use it to build training models by yourself to enhance the underlying speech-to-text technology and get better results, or even to bring it to other languages if you want.

You can also easily integrate it to your other machine learning projects that you are having on TensorFlow. Sadly it sounds like the project is currently only supporting English by default. It’s also available in many languages such as Python (3.6).

However, after the recent Mozilla restructure, the future of the project is unknown, as it may be shut down (or not) depending on what they are going to decide .

You may visit its Project DeepSpeech homepage to learn more.

Kaldi is an open source speech recognition software written in C++, and is released under the Apache public license.

It works on Windows, macOS and Linux. Its development started back in 2009. Kaldi’s main features over some other speech recognition software is that it’s extendable and modular: The community is providing tons of 3rd-party modules that you can use for your tasks.

Kaldi also supports deep neural networks, and offers an excellent documentation on its website . While the code is mainly written in C++, it’s “wrapped” by Bash and Python scripts.

So if you are looking just for the basic usage of converting speech to text, then you’ll find it easy to accomplish that via either Python or Bash. You may also wish to check Kaldi Active Grammar , which is a Python pre-built engine with English trained models already ready for usage.

Learn more about Kaldi speech recognition from its official website .

Probably one of the oldest speech recognition software ever, as its development started in 1991 at the University of Kyoto, and then its ownership was transferred to as an independent project in 2005. A lot of open source applications use it as their engine (Think of KDE Simon).

Julius main features include its ability to perform real-time STT processes, low memory usage (Less than 64MB for 20000 words), ability to produce N-best/Word-graph output, ability to work as a server unit and a lot more.

This software was mainly built for academic and research purposes. It is written in C, and works on Linux, Windows, macOS and even Android (on smartphones). Currently it supports both English and Japanese languages only.

The software is probably available to install easily using your Linux distribution’s repository; Just search for julius package in your package manager.

You can access Julius source code from GitHub.

If you are looking for something modern, then this one can be included.

Flashlight ASR is an open source speech recognition software that was released by Facebook’s AI Research Team. The code is a C++ code released under the MIT license.

Facebook was describing its library as “the fastest state-of-the-art speech recognition system available” up to 2018.

The concepts on which this tool is built makes it optimized for performance by default. Facebook’s machine learning library Flashlight is used as the underlying core of Flashlight ASR. The software requires that you first build a training model for the language you desire before becoming able to run the speech recognition process.

No pre-built support of any language (including English) is available. It’s just a machine-learning-driven tool to convert speech to text.

You can learn more about it from the following link .

Researchers at the Chinese giant Baidu are also working on their own speech recognition toolkit, called PaddleSpeech.

The speech toolkit is built on the PaddlePaddle deep learning framework, and provides many features such as:

- Speech-to-Text support.

- Text-to-Speech support.

- State-of-the-art performance in audio transcription, it even won the NAACL2022 Best Demo Award ,

- Support for many large language models (LLMs), mainly for English and Chinese languages.

The engine can be trained on any model and for any language you desire.

PaddleSpeech ‘s source code is written in Python, so it should be easy for you to get familiar with it if that’s the language you use.

Developed by NVIDIA for sequence-to-sequence models training.

While it can be used for way more than just speech recognition, it is a good engine nonetheless for this use case. You can either build your own training models for it, or use models which are shipped by default. It supports parallel processing using multiple GPUs/Multiple CPUs, besides a heavy support for some NVIDIA technologies like CUDA and its strong graphics cards.

As of 2021 the project is archived; it can still be used but looks like it is no longer under active development.

Check its speech recognition documentation page for more information, or you may visit its official source code page .

One of the newest open source speech recognition systems, as its development just started in 2020.

Unlike other systems in this list, Vosk is quite ready to use after installation, as it supports 10 languages (English, German, French, Turkish…) with portable 50MB-sized models already available for users (There are other larger models up to 1.4GB if you need).

It also works on Raspberry Pi, iOS and android devices, and provides a streaming API which allows you to connect to it to do your speech recognition tasks online. Vosk has bindings for Java, Python, JavaScript, C# and NodeJS.

Learn more about Vosk from its official website .

An end-to-end speech recognition engine which implements ASR.

Written in Python and licensed under the Apache 2.0 license. Supports unsupervised pre-training and multi-GPUs training either on same or multiple machines. Built on the top of TensorFlow.

Has a large model available for both English and Chinese languages.

Visit Athena source code .

Written in Python on the top of PyTorch.

Also supports end-to-end ASR. It follows Kaldi style for data processing, so it would be easier to migrate from it to ESPnet. The main marketing point for ESPnet is the state-of-art performance it gives in many benchmarks, and its support for other language processing tasks such as speech-to-text (STT), machine translation (MT) and speech translation (ST).

Licensed under the Apache 2.0 license.

You can access ESPnet from the following link .

The newest speech recognition toolkit in the family, developed by the famous OpenAI company (the same company behind ChatGPT ).

The main marketing point for Whisper is that it does not specialize in a set of training datasets for specific languages only; instead, it can be used with any suitable model and for any language. It was trained on 680 thousand hours of audio files, one third of which were non-English datasets.

It supports speech-to-text, text-to-speech, speech translation. And the company claims that its toolkit has 50% less errors in the output compared to other toolkit in the market.

Learn more about Whisper from its official website .

The newest speech recognition library on the list, which was just released in the middle of November, 2023. It employs diffusion techniques with large speech language models (SLMs) training in order to achieve more advanced results than other models.

The makers of the model published it along with a research paper, where they make the following claim about their work:

This work achieves the first human-level TTS synthesis on both single and multispeaker datasets, showcasing the potential of style diffusion and adversarial training with large SLMs.

It is written in Python, and has some Jupyter notebooks shipped with it to demonstrate how to use it. The model is licensed under the MIT license.

There is an online demo where you can see different benchmarks of the model: https://styletts2.github.io/

If you are building a small application that you want to be portable everywhere, then Vosk is your best option, as it is written in Python and works on iOS, android and Raspberry pi too, and supports up to 10 languages. It also provides a huge training dataset if you shall need it, and a smaller one for portable applications.

If, however, you want to train and build your own models for much complex tasks, then any of PaddleSpeech, Whisper and Athena should be more than enough for your needs, as they are the most modern state-of-the-art toolkits.

As for Mozilla’s DeepSpeech , it lacks a lot of features behind its other competitors in this list, and isn’t really cited a lot in speech recognition academic research like the others. And its future is concerning after the recent Mozilla restructure, so one would want to stay away from it for now.

Traditionally, Julius and Kaldi are also very much cited in the academic literature.

Alternatively, you may try these open source speech recognition libraries to see how they work for you in your use case.

The speech recognition category is starting to become mainly driven by open source technologies, a situation that seemed to be very far-fetched a few years ago.

The current open source speech recognition software are very modern and bleeding-edge, and one can use them to fulfill any purpose instead of depending on Microsoft’s or IBM’s toolkits.

If you have any other recommendations for this list, or comments in general, we’d love to hear them below!

FOSS Post has been providing high-quality content about open source and Linux software for around 7 years now. All of our content is free so that you can enjoy it whenever you like. However, consider buying us a cup of coffee by joining our Patreon campaign or doing a one-time donation to support our efforts!

Our community platform is here. Join it now so that you can explore tons of interesting and fun discussions about various open source aspects and issues!

Are you stuck following one of our articles or technical tutorials? Drop us a support request in the forum and we'll get right back to you.

You can take a number of interesting and exciting quizzes that the FOSS Post team prepared about various open source software from FOSS Quiz.

Hanny is a computer science & engineering graduate with a master degree, and an open source software developer. He has created a lot of open source programs over the years, and maintains separate online platforms for promoting open source in his local communities.

Hanny is the founder of FOSS Post.

Enter your email address to subscribe to our newsletter. We only send you an email when we have a couple of new posts or some important updates to share.

Social Links

Recent comments.

Open Source Directory

Join the force.

For the price of one cup of coffee per month:

- Support the FOSS Post to produce more content.

- Get a special account on our website.

- Remove all the ads you are seeing (including this one!).

- Get an OPML file containing +70 RSS feeds for various FOSS-related websites and blogs, so that you can import it into your favorite RSS reader and stay updated about the FOSS world!

Become a Supporter

Sign up in our modern forum to discuss various issues and see a lot of insightful, entertaining and informational content about Linux and open source software! Your content is yours and you can take it with you wherever you go.

* Premium members get a special badge.

No thanks, I’m not interested!

Originally published on August 23, 2020, Last Updated on March 21, 2024 by M.Hanny Sabbagh

'ZDNET Recommends': What exactly does it mean?

ZDNET's recommendations are based on many hours of testing, research, and comparison shopping. We gather data from the best available sources, including vendor and retailer listings as well as other relevant and independent reviews sites. And we pore over customer reviews to find out what matters to real people who already own and use the products and services we’re assessing.

When you click through from our site to a retailer and buy a product or service, we may earn affiliate commissions. This helps support our work, but does not affect what we cover or how, and it does not affect the price you pay. Neither ZDNET nor the author are compensated for these independent reviews. Indeed, we follow strict guidelines that ensure our editorial content is never influenced by advertisers.

ZDNET's editorial team writes on behalf of you, our reader. Our goal is to deliver the most accurate information and the most knowledgeable advice possible in order to help you make smarter buying decisions on tech gear and a wide array of products and services. Our editors thoroughly review and fact-check every article to ensure that our content meets the highest standards. If we have made an error or published misleading information, we will correct or clarify the article. If you see inaccuracies in our content, please report the mistake via this form .

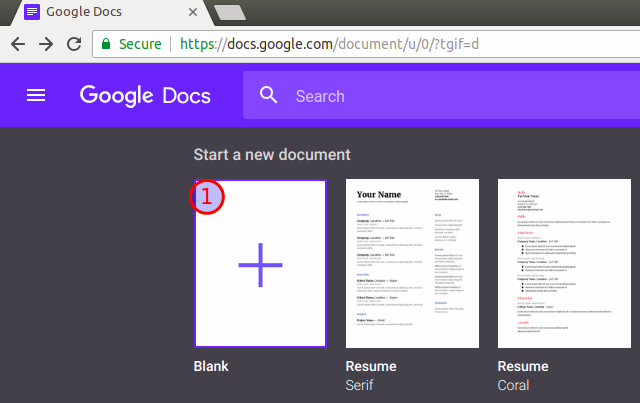

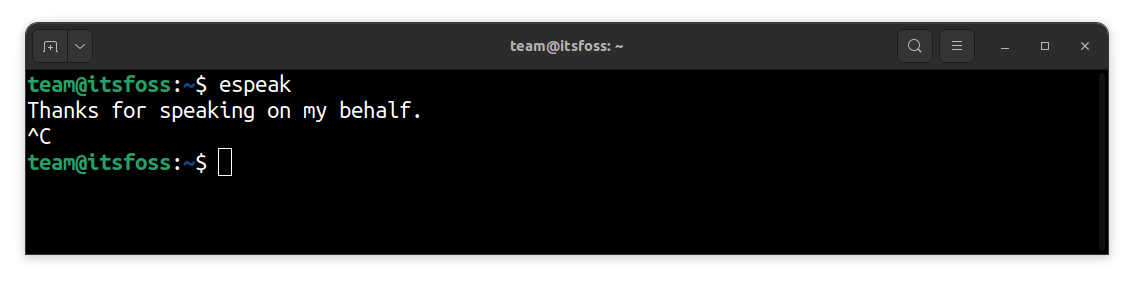

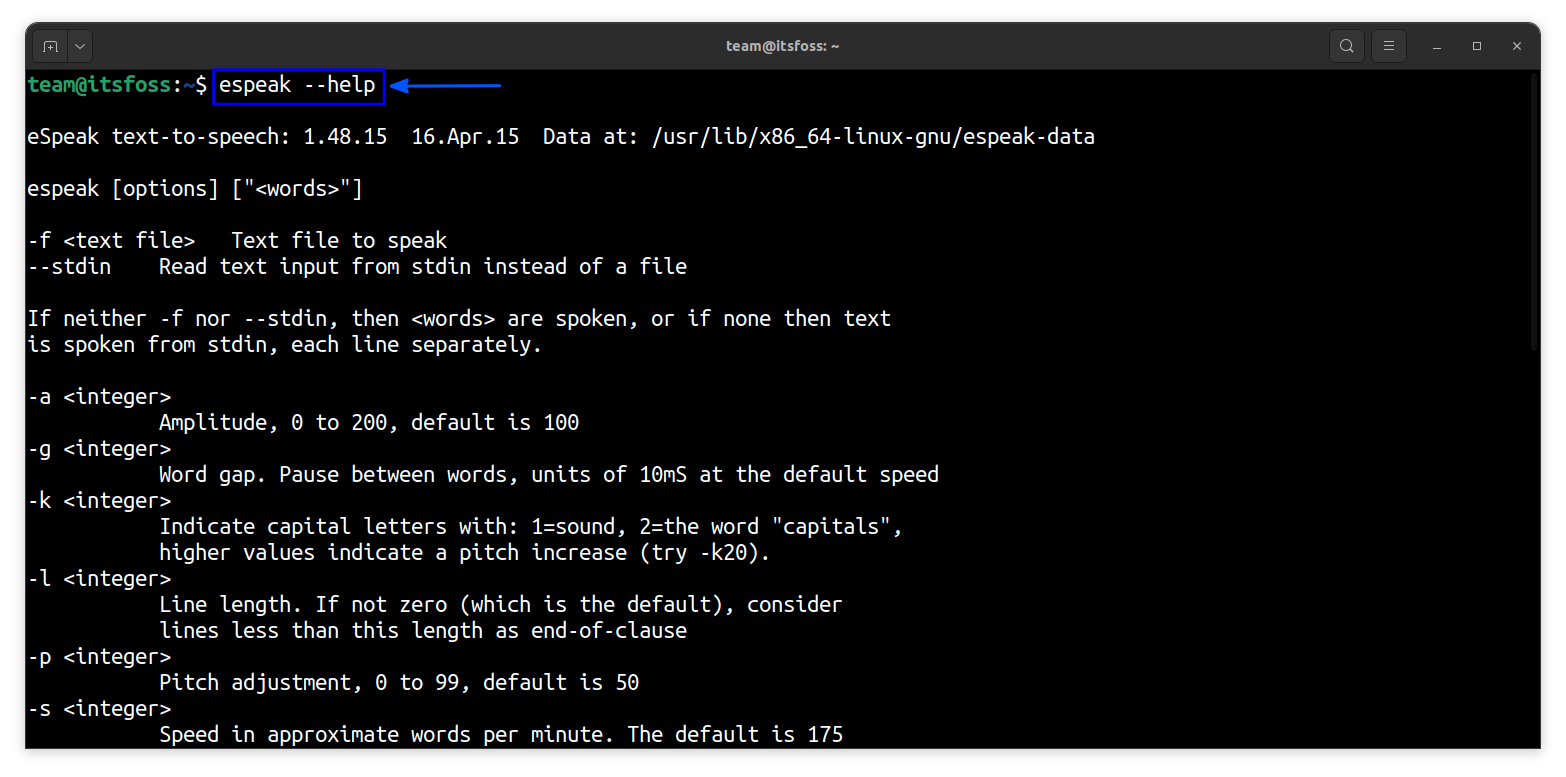

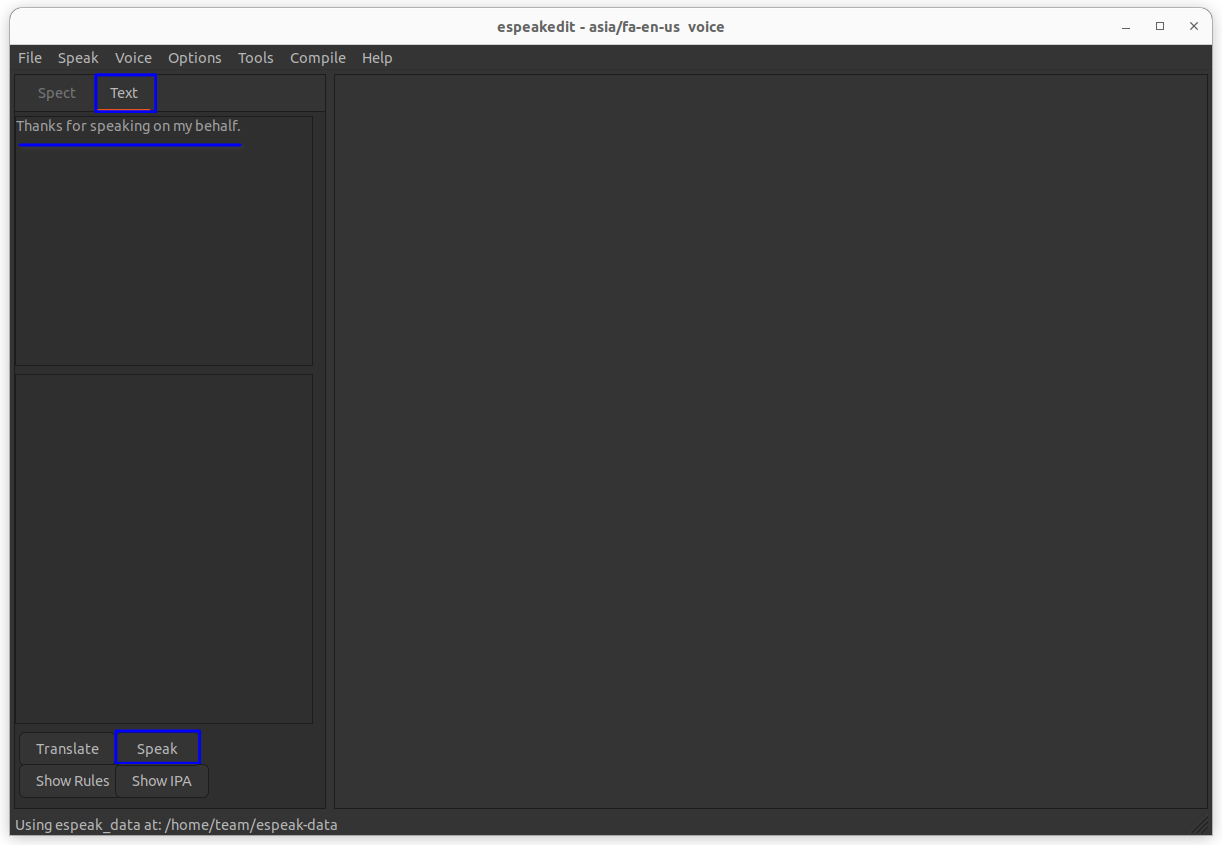

How to enable speech-to-text in Linux with this simple app

I'm not a big user of speech-to-text but that's only because I "word" for a living and still have fingers that are capable of typing very fast. That's not something I ever take for granted. And given I've known many people over the years who depended on speech-to-text, I am always very grateful to point out the means to make an operating system more accessible.

So, when I came across the Speech Note app, I was thrilled to find it was quite simple to add speech-to-text in Linux. However, once I installed the app and started using it, I realized that it comes with a considerable caveat…it requires power (and a lot of it).

Also: How to turn on flash notifications on Android 14

The reason this app requires so much power is that speech-to-text processing happens offline, which means it will depend on your CPU (and GPU if you have one) to carry the heavy lifting. If your machine is underpowered, one of two things will happen: the computer will crash while trying to process speech-to-text, or it will happen very slowly. So, if you don't have a powerful desktop computer, you might want to depend on a third-party speech-to-text service, such as that found in Google Docs (which only works with the Chrome browser).

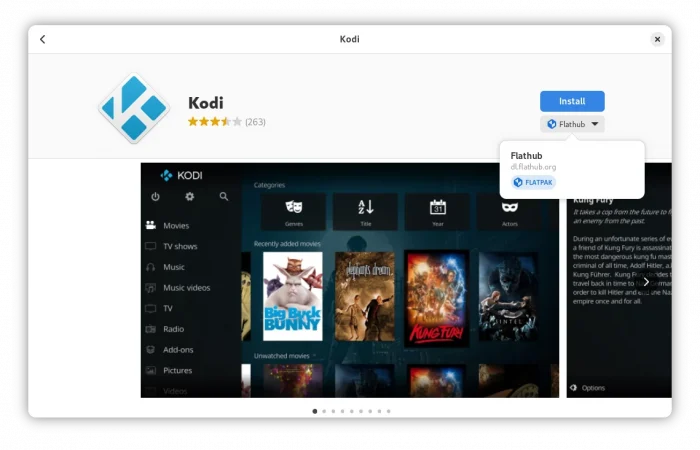

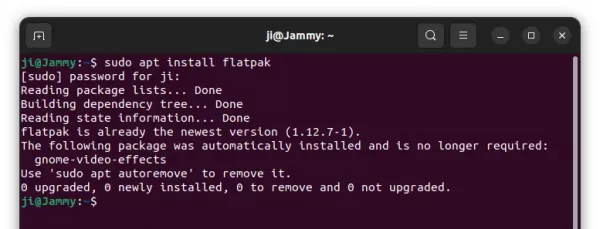

If you have a powerful enough machine, you can turn to the open-source Speech Note app. This app can be installed on any Linux distribution that supports Flatpak . It's important to note, however, that the base installation is very small. However, downloading the language model can take up to 2GB of space, so keep that in mind if your system has limited local storage.

Once installed and ready, Speech Note does a great job of processing speech-to-text on Linux.

Let me show you how to install and prepare Speech Note for use.

How to install Speech Note

What you'll need: To get Speech Note installed, you'll need a Linux machine with Flatpak installed and over 2GB of free internal storage. That's it. Let's make it happen.

1. Open your terminal window and install

Log into your desktop and open the terminal window app. Once the app is open, paste the following command and hit Enter on your keyboard:

Make sure to answer Y to the questions to complete the installation.

2. Open Speech Note

Click your desktop menu and look for the Speech Note launcher. If you don't see it, you might have to log out and log back into your desktop to make it appear.

Speech Note is a simple-to-use GUI app for speech to text on Linux.

3. Download your language model

From the main Speech Note window, click Languages. In the resulting pop-up, locate the language you want to download. Hover over that language and click the associated Download button. When the language model has been downloaded, click Close.

You can download as many language models as you need (so long as your machine has the storage space for it).

4. Configure Speech Note

Click the three-dot menu button in the upper left corner. From the resulting dropdown, click Settings. In the Settings popup, you'll want to consider two changes. The first is the Audio source. Click the dropdown and make sure to select the source associated with your mic. If you're using a built-in mic, you'll probably want to stick Auto. If you're using an external mic, make sure to select it from the list.

Also: Do you need a speech therapist? Now you can consult AI

The next setting is the Listening mode, for which there are three choices: one sentence, press and hold, and always on. One sentence will listen to one sentence at a time. As soon as you stop speaking, Speech Note will stop listening.

Press and hold means it will keep listening as long as you hold the Listen button. Always on means as soon as you click Listen, it will listen and continue to do so until you stop it.

There are a number of configurations you can undertake but these will get you up and running right away.

5. Use Speech Note

Using Speech Note is simple. Click the Listen button and start talking. There will be a lag between your speaking and Speech Notes transcribing. Depending on the speed of your hardware, that lag can be considerable (if the machine is underpowered).

And that's all there is to using the Speech Note app for easy speech-to-text on Linux. Remember, if your machine isn't powerful enough to handle the processing, you can always turn to Google Chrome and Google Docs (which does work quite well on Linux).

How to use Android's 'Photomoji' feature in Google Messages (and why you should)

Openai's voice engine can clone a voice from a 15-second clip. listen for yourself, how to screen calls on your android phone and stop the spam deluge.

- About AssemblyAI

Kaldi Speech Recognition for Beginners - A Simple Tutorial

Want to learn how to use Kaldi for Speech Recognition? Check out this simple tutorial to start transcribing audio in minutes.

Developer Educator at AssemblyAI

In this tutorial, we’ll use the open-source speech recognition toolkit Kaldi in conjunction with Python to automatically transcribe audio files. By the end of the tutorial, you’ll be able to get transcriptions in minutes with one simple command!

Important Note

For this tutorial, we are using Ubuntu 20.04.03 LTS (x86_64 ISA) . If you are on Windows, the recommended procedure is to install a virtual machine and follow this tutorial exactly on a Debian-based distro (preferably the exact one mentioned above - you can find an ISO here )

Before we can get started with Kaldi for Speech Recognition, we'll need to perform some installations.

Installations

Prerequisites.

The most notable prerequisite is time and space . The Kaldi installation can take hours, and consumes almost 40 GB of disk space, so prepare accordingly. If you need transcriptions ASAP, check out the Cloud Speech-to-Text APIs section!

Automatic Installation

If you would like to manually install Kaldi and its dependencies, you can move on to the next subsection . If you are comfortable with an automatic installation, you can follow this subsection.

You will need wget and git installed on your machine in order to follow along. wget comes installed natively on most Linux distributions, but you may need to open a terminal and install git with

Next, navigate into the directory in which you would like to install Kaldi, and then fetch the installation script with

This command downloads the setup.sh file, which effectively just automates the manual installation below. Be sure to open this file in a text editor and inspect it to make sure you understand it and are comfortable running it. You can then perform the setup with

Install Note

If you have multiple CPUs, you can perform a parallel build by supplying the number of processors you would like to use. For example, to use 4 CPUs, enter sudo bash setup.sh 4

Running the above command will install all of Kaldi's dependencies, and then Kaldi itself. You will be required to confirm that all dependencies are installed at one point (several minutes into the installation). We suggest checking and confirming, but if you are following along on a fresh Ubuntu 20.04.03 LTS install (perhaps on a virtual machine), then you can skip confirming by instead running

In this case, you do not need to interact with the terminal at all during installation. The installation will likely take several hours, so you can leave and come back to it when the installation is complete. Once the installation is complete, enter the project directory with

and then move on to transcribing an audio file .

Manual Installation

Before manually installing Kaldi, we’ll need to install some additional packages. First, open a terminal, and run the following commands:

Additional Information

- You can copy these commands and paste them into the terminal by right clicking in terminal and selecting “Paste”.

- We’ll also need Intel MKL , which we will install later via Kaldi if you do not have it already.

Installing Kaldi

Now we can get started installing Kaldi for Speech Recognition. First, we need to clone the Kaldi repository. In the terminal, navigate to the directory in which you’d like to clone the repository. In this case, we are cloning to the Home directory.

Run the following command:

Installing Tools

To begin our Kaldi installation, we’ll first need to perform the tools installation. Navigate into the tools directory with the following command:

and then install Intel MKL if you don’t already have it. This will take time - MKL is a large library.

Now we check to ensure all dependencies are installed. Given our preparatory installations, you should get a message telling you that all dependencies are indeed installed.

If you do not have all dependencies installed, you will get an output telling you which dependencies are missing. Install any remaining packages you need, and then rerun the extras/check_dependencies.sh command . New required installations may now appear as a result of the dependencies you just installed. Continue alternating between these two steps (checking missing dependencies and installing them) until you receive a message saying that all dependencies are installed ("all OK.") .

Finally, run make . See the install note below if you have a multi-CPU build.

If you have multiple CPUs, you can do a parallel build by supplying the "-j" option to make in order to expedite the install. For example, to use 4 CPUs, enter make -j 4

Installing Src

Next, we need to perform src install. First, cd into src

And then run the following commands. See the install note below if you have a multi-CPU build. This build may take several hours for uniprocessor systems.

Again, you can supply the -j option to both make depend and make if you have multiple CPUs in order to expedite the install. For example, to use 4 CPUs, enter make depend -j 4 and make -j 4

Cloning the Project Repository

Now it’s time to clone the project repository provided by AssemblyAI, which hosts the code required for the remainder of the tutorial. The project repository follows the structure of the other folders in kaldi/egs (the “examples” directory in Kaldi root) and includes additional files to automate the transcription generation for you.

Navigate into egs folder, clone the project repository, and then navigate into the s5 subdirectory

At this point you can delete all other folders in the egs directory. They take up about 10 GB of disk space, but consist of other examples that you may want to check out after this tutorial.

Transcribing an Audio File - Quick Usage

Now we’re ready to get started transcribing an audio file! We’ve provided everything you need to automatically transcribe a .wav file in a single line of code.

For a minimal example, all you need to do is run

This command will transcribe the provided example audio file gettysburg.wav - a 10 second .wav file containing the first line of the Gettysburg Address. The command will take several minutes to execute, after which you will find the transcription in kaldi-asr-tutorial/s5/out.txt

You will need an internet connection the first time you run main .py in order to download the pre-trained models.

If you would like to transcribe your own .wav file, first place it in the s5 subdirectory , and then run:

Where you replace gettysburg.wav with the name of your file. If the only .wav file in the s5 subdirectory is your target audio file, you can simply run python3 main.py without specifying the filename.

This automated process will work best with a single speaker and a relatively short audio. For more complicated usage, you’ll have to read the next section and modify the code to suit your needs, following along with the Kaldi documentation .

Resetting the Directory

Each time you run main.py it will call reset_directory.py , which removes all files/folders generated by main.py (except the downloaded tarballs of the pre-trained models) in order to start each run with a clean slate. This means that your out.txt transcription will be deleted if you call main.py on another file , so be sure to move out.txt to another directory if you would like to keep it before transcribing another file.

If you interrupt the main.py execution while the pre-trained models are downloading, you will receive errors downstream. In this case, run the following command to completely reset the directory (i.e. remove the pre-trained model tarballs in addition to the files/folder removed by reset_directory.py )

Transcribing an Audio File - Understanding the Code

If you’re interested in understanding how Kaldi's Speech Recognition generated the transcription in the previous section, then read on!

We’re going to dive into main.py in order to understand the entire process of generating a transcription with Kaldi. Keep in mind that our use case is a toy example to showcase how to use pre-trained Kaldi models for ASR. Kaldi is a very powerful toolkit which accommodates much more complicated usage; but it does have a sizable learning curve, so learning how to properly apply it to more complicated tasks will take some time.

Also, we’ll give brief overviews of the theory behind what’s going on in different sections, but ASR is a complicated topic, so by nature our conversation will be surface level!

Let’s get started.

We kick things off with some imports. First, we call the reset_directory.py file that clears the directory of files/folders generated by the rest of main.py so we can start with a clean slate. Then we import subprocess so we can issue bash commands, as well as some other packages which we’ll use for os navigation and file manipulation.

Argument Validation

Next, we perform some argument validation. We ensure that there is a maximum of one additional argument passed in to main.py ; and, if there is one, we ensure that is a .wav file. If there is no argument given, then we simply choose the first .wav file found by glob.glob , if such a file exists.

We save the filename (with and without extension) in variables for later use.

Kaldi File Generation

Now it’s time to create some standard files that Kaldi requires to generate transcriptions. We save the s5 directory path into a variable so that we can easily navigate back to it, and then create and navigate into a data/test directory that we will store our data in.

The first file we’ll generate is called spk2utt , which maps speakers to their utterances. For our purposes, we assume that there is one speaker and one utterance , so the file is easy to generate automatically.

Next, we create the inverse mapping in the utt2spk file. Note that this file is one-to-one, unlike the one-to-many nature of spk2utt (one speaker may have multiple utterances, but each utterance can have only one speaker). For our purposes it is once again easy to generate this file:

The last file we create is called wav.scp . It maps audio file identifiers to their system paths. We again generate this file automatically.

Finally, we return to the root directory

Note that these are not the only possible input files that Kaldi can use, just the bare minimum. For more advanced usage, such as gender mapping, check out the Kaldi documentation .

MFCC Configuration File Modification

To perform ASR with Kaldi on our audio file, we must first determine some method of representing this data in a format that a Kaldi model can handle. For this, we use Mel-frequency cepstral coefficients (MFCCs) . MFCCs are a set of coefficients that define the mel-frequency cepstrum of the audio, which itself is a cosine transform of the logarithmic power spectrum of a nonlinear mapping (mel-frequency) of the Fourier transform of the signal. If that sounds confusing, don’t worry - it’s not necessary to understand for the purposes of generating transcriptions! The important thing to know is that MFCCs are a low dimensional representation of an audio signal that are inspired by human auditory processing .

There is a configuration file that we use when we are generating MFCCs, located in ./conf/mfcc_hires.conf. The only thing we need to know from a practical standpoint is that we must modify this file to list the proper sample rate for our input .wav file . We do this automatically as follows:

First, we call a subprocess which opens a bash shell and uses sox to get the audio information of the .wav file. Then, we perform string manipulation to isolate the sample rate of the .wav file.

Next, we open and read the MFCC configuration file so that we can modify it

And identify the line that sets the sample frequency and isolate it.

Next, we reformat this line to list the sample rate of our .wav file as identified by the soxi command.

Finally, we replace the relevant line in the lines list, collapse this list back into a string, and then write this string to the MFCC configuration file.

Feature Extraction

Now we can get started processing our audio file. First, we open a file for logging our bash outputs, which we will use for every bash command going forward. Then, we copy our .wav file into the ./data/test directory, and then copy the whole ./data/test directory into a new directory (./data/test_hires) for processing.

Next, we generate MFCC features using our data and the configuration file we previously modified.

More information about the arguments of the bash command can be found here:

- steps/make_mfcc.sh : specifies the location of the shell script which generates mfccs

- --nj 1 : specifies the number of jobs to run with. If you have a multi-core machine, you can increase this number

- --mfcc-config conf/mfcc_hires.conf : specifies the location of the configuration file we previously modified

- data/test_hires : specifies the data folder containing the relevant data we will operate on

This command generates the conf , data , and log directories as well as the feats.scp , frame_shift , utt2dur , and utt2num_frames files (all within the data/test_hires directory)

After this, we compute the cepstral mean and variance normalization ( CMVN ) statistics on the data, which minimizes the distortion caused by noise contamination. That is, CMVN helps make our ASR system more robust against noise.

Finally, we use the fix_data_dir.sh shell script to ensure that the files within the data directory are properly sorted and filtered, and also to create a data backup in data/test_hires/.backup .

Pre-trained Model Download and Extraction

Now that we have performed MFCC feature extraction and CMVN normalization, we need a model to pass the data through. In this case we will be using the Librispeech ASR Model , found in Kaldi’s pre-trained model library , which was trained on the LibriSpeech dataset. This model is composed of four submodels:

- An i-vector extractor

- A TDNN-F based chain model

- A small trigram language model

- An LSTM-based model for rescoring

To download these models, we first check to see if these tarballs are already in our directory. If they are not, we download them using wget

and extract them using tar .

This creates the exp/nnet3_cleaned , exp/chain_cleaned , data/lang_test_tgsmall , and exp/rnnlm_lstm_1a directories.

- nnet3_cleaned is the i-vector extractor directory

- chain_cleaned is the chain model directory

- tgsmall is the small trigram language model directory

- and rnnlm is the LSTM-based rescoring model

If the wget process is interrupted during download, you will run into errors downstream. In this case, run the below in terminal to delete any model tarballs that are there and completely reset the directory. We call reset_directory.py rather than reset_directory_completely.py by default so we don't have to download the models (~430 MB compressed) each time we run main.py .

Decoding Generation

Extracting i-vectors.

Next up, we’ll extract i-vectors, which are used to identify different speakers. Even though we have only one speaker in this case, we extract i-vectors anyway for the general use case, and because they are expected downstream.

We create a directory to store the i-vectors and then run a bash command to extract them:

- steps/online/nnet2/extract_ivectors_online.sh : specifies the location of the shell script which extracts the i-vectors

- data/test_hires : specifies the location of the data directory

- exp/nnet3_cleaned/extractor : specifies the location of the extractor directory

- exp/nnet3_cleaned/ivectors_test_hires : specifies the location to store the i-vectors

Constructing the Decoding Graph

In order to get our transcription, we need to pass our data through the decoding graph . In our case, we will construct a fully-expanded decoding graph ( HCLG ) that represents the language model, lexicon (pronunciation dictionary), context-dependency, and HMM structure in the model.

The output of the decoding graph is a Finite State Transducer that has word-ids on the output, and transition-ids on the input (the indices that resolve to pdf-ids)

HCLG stands for a composition of functions, where

- H contains HMM definitions, whose inputs are transition-ids and outputs are context-dependent phones

- C is the context-dependency, that takes in context-dependent phones and outputs phones

- L is the lexicon, which takes in phones and outputs words

- and G is an acceptor that encodes the grammar or language model, which both takes in and outputs words

The end result is our decoding , in this case a transcription of our single utterance.

Before we can pass our data through the decoding graph, we need to construct it. We create a directory to store the graph, and then construct it with the following command.

- utils/mkgraph.sh : specifies the location of the shell script which constructs the decoding graph

- --self-loop-scale 1.0 : Scales self-loops by the specified value relative to the language model 1

- --remove-oov : remove out-of-vocabulary (oov) words

- data/lang_test_tgsmall : specifies the location of the language directory

- exp/chain_cleaned/tdnn_1d_sp : specifies the location of the model directory

- exp/chain_cleaned/tdnn_1d_sp/graph_tgsmall : specifies the location to store the constructed graph

Decoding using the Generated Graph

Now that we have constructed our decoding graph, we can finally use it to generate our transcription!

First we create a directory to store the decoding information, and then decode using the following command.

- steps/nnet3/decode.sh : specifies the location of the shell script which runs the decoding

- --acwt 1.0 : Sets the acoustic scale. The default is 0.1, but this is not suitable for chain models 2

- --post-decode-acwt 10.0 : Scales the acoustics by 10 so that the regular scoring script works (necessary for chain models)

- --online-ivector-dir exp/nnet3_cleaned/ivectors_test_hires : specifies the i-vector directory

- exp/chain_cleaned/tdnn_1d_sp/graph_tgsmall : specifies the location of the graph directory

- exp/chain_cleaned/tdnn_1d_sp/decode_test_tgsmall : specifies the location to store the decoding information

Transcription Retrieval

It’s time to retrieve our transcription! The transcription lattice is stored as a GNU zip file in the decode_test_tgsmall directory, among other files (including word-error rates if you have input a Kaldi text file).

We store the directory paths of our zip file and graph word.txt file, and then pass these into a command variable which stores our bash command. This command unzips our zip file, and then writes the optimal path through the lattice (the transcription) to a file called out.txt in our s5 directory.

- ../../../src/latbin/lattice-best-path : specifies the location of the file which navigates the lattice to generate the decoding

- ark:'gunzip -c {0} |' : pipes the command to unzip the lattice file to shell via popen() 3

- 'ark,t:| utils/int2sym.pl -f 2- {1} > out.txt' : writes the decoding to out.txt 4

Let’s take a look at how our generated transcription compares to the true transcription!

FOUR SCORE AND SEVEN YEARS AGO OUR FATHERS BROUGHT FORTH ON THIS CONTINENT A NEW NATION CONCEIVED IN LIBERTY AND DEDICATED TO THE PROPOSITION THAT ALL MEN ARE CREATED EQUAL

Transcription:

FOUR SCORE AN SEVEN YEARS AGO OUR FATHERS BROUGHT FORTH UND IS CONTINENT A NEW NATION CONCEIVED A LIBERTY A DEDICATED TO THE PROPOSITION THAT ALL MEN ARE CREATED EQUAL

Out of 30 words we had 5 errors, yielding a word error rate of about 17%.

Rescoring with LSTM-based Model

We can rescore with the LSTM-based model using the below command:

- ../../../scripts/rnnlm/lmrescore_pruned.sh : specifies the location of the shell script which runs the rescoring 5

- --weight 0.45 : specifies the interpolation weight for the RNNLM

- --max-ngram-order 4 : approximates the lattice-rescoring by merging histories in the lattice if they share the same ngram history which prevents the lattice from exploding exponentially

- data/lang_test_tgsmall : specifies the old language model directory

- exp/rnnlm_lstm_1a : specifies the RNN language model directory

- data/test_hires : specifies the data directory

- exp/chain_cleaned/tdnn_1d_sp/decode_test_tgsmall : specifies the input decoding directory

- exp/chain_cleaned/tdnn_1d_sp/decode_test_rescore : specifies the output decoding directory

We again output the transcription to a .txt file, in this case called out_rescore.txt :

In our case, rescoring did not change our generated transcription, but it may improve yours!

Advanced Kaldi Speech Recognition

Hopefully this tutorial gave you an understanding of the Kaldi basics and a jumping off point for more complicated NLP tasks! We just used a single utterance and a single .wav file, but we might also consider cases where we want to do speaker identification, audio alignment, or more.

You can also go beyond using pre-trained models with Kaldi. For example, if you have data to train your own model, you could make your own end-to-end system, or integrate a custom acoustic model into a system that uses a pre-trained language model. Whatever your goals, you can use the building blocks identified in this article to help you get started!

There are a ton of different ways to process audio to extract useful information, and each way offers its own subfield rich with task-specific knowledge and a history of creative approaches. If you want to dive deeper into Kaldi to build your own complicated NLP systems, you can check out the Kaldi documentation here .

Cloud Speech-to-Text APIs

Kaldi is a very powerful and well-maintained framework for NLP applications, but it’s not designed for the casual user. It can take a long time to understand how Kaldi operates under the hood, an understanding that is necessary to put it to proper use.

In this vein, Kaldi is consequently not designed for plug-and-play speech processing applications. This can pose difficulties for those who don’t have the time or know-how to customize and train NLP models, but who want to implement speech recognition in larger applications.

If you want to get high quality transcripts in just a few lines of code, AssemblyAI offers a fast, accurate, and easy-to-use Speech-to-Text API . You can sign up for a free API token here and gain access to state-of-the-art models that provide:

- Asynchronous Speech-to-Text

- Real-Time Speech-to-Text

- Summarization

- Emotion Detection

- Sentiment Analysis

- Topic Detection

- Content Moderation

- Entity Detection

- PII Redaction

- And much more!

Grab a token and check out the AssemblyAI docs to get started.

1) Link to "Scaling of transition of acoustic probabilities" in the Kaldi documentation

2) Link to "Decoding with 'chain' models" in the Kaldi documentation

3) Link to "Extended filenames: rxfilenames and wxfilenames" in the Kaldi documentation

4) Link to "Table I/O" in the Kaldi documentation

5) Link to the lmrescore_pruned.sh script in the Kaldi ASR GitHub repo

6) For other beginner resources on getting started with Kaldi, check out this , this , or this resource. Elements from these sources have been adapted for use within this article.

Popular posts

AI trends in 2024: Graph Neural Networks

AI for Universal Audio Understanding: Qwen-Audio Explained

Combining Speech Recognition and Diarization in one model

How DALL-E 2 Actually Works

- Download

- Documentation

- Get involved

- Forum

- File a Bug

Developer Blogs

- Simon 0.4.90 beta released

- Simon 0.4.80 alpha released

- New life in Simon speech recognition

- Simon on OS X

- Open Academy

Suramya's Blog : Welcome to my crazy life…

January 21, 2022, nerd-dictation: a fantastic open source speech to text software for linux.

After a long time of searching I finally found a speech to text software for Linux that actually works well enough that I can use it for dictating without having to jump through too many hoops to configure and use. The software is called nerd-dictation and is an open source software. It is fairly easy to setup as compared to the other voice-to-text systems that are available but still not at a stage where a non-tech savvy person would be able to install it easily. (There is effort ongoing to fix that)

The steps to install are fairly simple and documented below for reference:

- pip3 install vosk

- git clone https://github.com/ideasman42/nerd-dictation.git

- cd nerd-dictation

- wget https://alphacephei.com/kaldi/models/vosk-model-small-en-us-0.15.zip

- unzip vosk-model-small-en-us-0.15.zip

- mv vosk-model-small-en-us-0.15 model

nerd-dictation allows you to dictate text into any software or editor which is open so I can dictate into a word document or a blog post or even the command prompt. Previously I have used tried using software like otter.ai which actually works quite well but doesn’t allow you to edit the text as you’re typing, so you basically dictate the whole thing and the system gives you the transcription after you are done. So, you have to go back and edit/correct the transcript which can be a pain for long dictations. This software works more like Microsoft dictate which is built into Word. Unfortunately my word install on Linux using Crossover doesn’t allow me to use the built in dictate function and I have no desire to boot into windows just so that I can dictate a document.

This downloads the software in the current directory. I set it up on /usr/local but it is up to you where you want it. In addition, I would recommend that you install one of the larger dictionaries/models which makes the voice recognition a lot more accurate. However, do keep in mind that the larger models use up a lot more memory so you need to ensure that your computer has enough memory to support the larger models. The smaller ones can run on systems as small as a raspberry pi, so depending on your system configuration you can choose. The models are available here .

The software does have some quirks, like when you are talking and you pause it will take it as a start of a new sentence and for some reason it doesn’t put a space after the last word. So unless you’re careful you need to go back and add spaces to all the sentences that you have dictated, which can get annoying. (I started manually pressing space everytime I paused to add the space). Another issue is that it doesn’t automatically capitalize the words when you dictate such as those at the beginning of the sentence or the word ‘I’. This requires you to go back and edit, but that being said it still works a lot better than the other software that I have used so far on Linux. For Windows system Dragon Voice Dictation works quite well but is expensive. I tested it out by typing out this post using it and for the most part it does work it worked quite well.

Running the software again requires you to run commands on the commandline, but I configured shortcut keys to start and stop the dictation which makes it very convenient to use. Instructions on how to configure custom shortcut keys are available here . If you don’t want to do that, then you can start the transcription by issuing the following command (assuming the software is installed in /usr/local/nerd-dictation):

This starts the software and tells it that we are going to dictate for a long time. More details on the options available are available on the project site. To stop the software you should run the following command:

I suggest you try this if you are looking for a speech-to-text software for Linux. Well this is all for now. Will post more later.

Thanks to Hacker News: Nerd-dictation, hackable speech to text on Linux for the link.

– Suramya

No Comments »

No comments yet.

RSS feed for comments on this post. TrackBack URL

Leave a comment

Name (required)

Mail (will not be published) (required)

XHTML: You can use these tags: <a href="" title=""> <abbr title=""> <acronym title=""> <b> <blockquote cite=""> <cite> <code> <del datetime=""> <em> <i> <q cite=""> <s> <strike> <strong>

- Article Releases

- Astronomy / Space

- Reviews-Fantasy

- Reviews-Paranormal

- Reviews-Romance

- Reviews-Science Fiction

- Reviews-Thriller

- Reviews-Urban Fantasy

- Reviews-Young Adult Fantasy

- Computer Hardware

- Security Tools

- Security Tutorials

- Computer Software

- Computer Tips

- Artificial Intelligence

- Quantum Computing

- General/News

- Interesting Sites

- Knowledgebase

- Linux/Unix Related

- My Thoughts

- Computer Related

- Science Related

- Tech Related

- Travel/Trips

- Uncategorized

- Website Updates

- Search for:

- Suramya on Fixing problems with nvidia-driver on Debian Unstable after latest upgrade : “ @asd, I am running the Unstable branch, which is what is used to perform the E2E testing that you are… ” Apr 1, 14:59

- asd on Fixing problems with nvidia-driver on Debian Unstable after latest upgrade : “ It shouldn’t happen in the first place, this software should’ve been extensively unit and E2E tested by a large team… ” Mar 30, 02:25

- pelorustech on Internet of Things (IoT) Forensics: Challenges and Approaches : “ This insightful blog on IoT forensics is a gem! Your in-depth exploration of challenges and approaches is truly commendable. It’s… ” Aug 30, 16:07

- Abhishek on My Trip to Gujarat : “ Very nice post with beautiful pictures!!! ” May 23, 17:16

- February 2024

- January 2024

- December 2023

- November 2023

- October 2023

- September 2023

- August 2023

- February 2023

- January 2023

- December 2022

- November 2022

- October 2022

- September 2022

- August 2022

- January 2022

- September 2021

- August 2021

- February 2021

- January 2021

- November 2020

- October 2020

- September 2020

- August 2020

- January 2020

- December 2019

- November 2019

- October 2019

- September 2019

- August 2019

- January 2019

- September 2018

- August 2018

- February 2018

- January 2018

- December 2017

- October 2017

- September 2016

- February 2016

- January 2016

- October 2015

- September 2015

- August 2015

- January 2015

- December 2014

- November 2014

- October 2014

- September 2014

- February 2013

- November 2012

- October 2012

- August 2012

- February 2012

- January 2012

- December 2011

- November 2011

- October 2011

- September 2011

- December 2010

- October 2010

- September 2010

- August 2010

- February 2010

- January 2010

- December 2009

- November 2009

- October 2009

- September 2009

- August 2009

- February 2009

- January 2009

- December 2008

- November 2008

- October 2008

- September 2008

- August 2008

- February 2008

- January 2008

- December 2007

- November 2007

- October 2007

- September 2007

- August 2007

- February 2007

- January 2007

- December 2006

- November 2006

- October 2006

- September 2006

- August 2006

- February 2006

- January 2006

- December 2005

- November 2005

- October 2005

- September 2005

- August 2005

- February 2005

- January 2005

- December 2004

- November 2004

- October 2004

- Entries feed

- Comments feed

- WordPress.org

Powered by WordPress

Ubuntu Speech-to-Text Tutorial

We love Ubuntu at Picovoice. Our standard dev machines are running Ubuntu. No offence to macOS and Windows fans 😉

Today you can run Ubuntu on a single-board computer (SBC) like Raspberry Pi, NVIDIA Jetson, or BeagleBone. At the same time, one can have it on a server or a desktop. Below we look at options for running Speech-to-Text on an Ubuntu machine. Then we dive deeper into how to run Picovoice Leopard Speech-to-Text Engine on Ubuntu.

Speech-to-Text on Ubuntu

You can use any API: Google Speech-to-Text, Amazon Transcribe, IBM Watson Speech-to-Text, or Azure Cognitive Services Speech-to-Text. The downside? They are pretty expensive for anything other than a proof of concept but are relatively accurate. Additionally, you need to send raw audio data to the cloud, which means extra power consumption and bandwidth cost. The latter is only a concern if you are on a cellular connection.

Alternatively, you can use free and open-source (FOSS) software. Kaldi (derivations of such as Vosk), Mozilla DeepSpeech (derivations of such as Coqui), and many more. The upside is that they are free, but the downside is that they hardly match the accuracy of API-based ASRs nor have all the features you might require (e.g. custom words and keyword boosting). If you care about the runtime efficiency, they are not necessarily optimized. These can be good starting points if you decide to build your own.

Picovoice Leopard Speech-to-Text processes voice locally on the device while matching the accuracy of API alternatives from Big Tech. Developers can start transcribing in seconds with Picovoice’s Free Plan , even for commercial projects.

Leopard comes with a total package size of 20MB (compared to GBs of FOSS alternatives). Leopard runtime efficiency enables it to run even on Raspberry Pi 3 using only a quarter of only one of the CPU cores.

Leopard Python SDK

Install Leopard Python package using PIP:

Sign up for Picovoice Console and copy your AccessKey to the clipboard. AccessKey handles authentication and authorization.

Create an instance of Leopard STT and transcribe a file:

Node.js, Rust, Go, Java, .NET, ...

Subscribe to our newsletter

More from Picovoice

Learn how to perform Speech Recognition in JavaScript, including Speech-to-Text, Voice Commands, Wake Word Detection, and Voice Activity Det...

Have you ever thought of getting a summary of a YouTube video by sending a WhatsApp message? Ezzeddin Abdullah built an application that tra...

The launch of Leopard Speech-to-Text and Cheetah Speech-to-Text for streaming brought cloud-level automatic speech recognition (ASR) to loca...

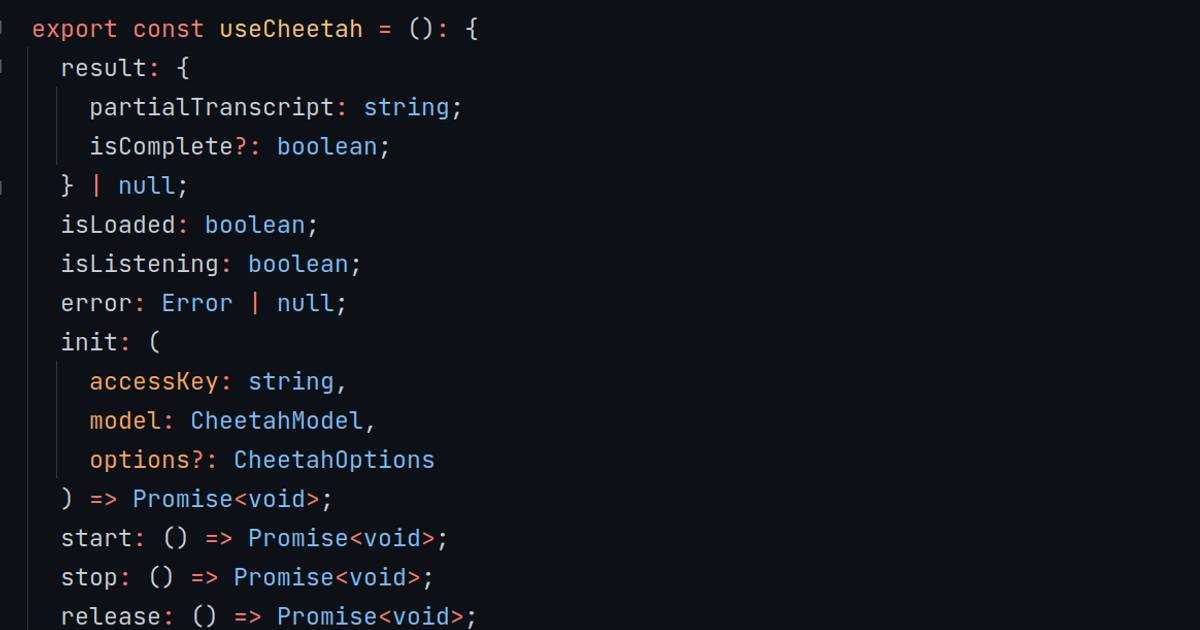

Transcribe speech-to-text in real-time using Picovoice Cheetah Streaming Speech-to-Text React.js SDK. The SDK runs on Linux, macOS, Windows,...

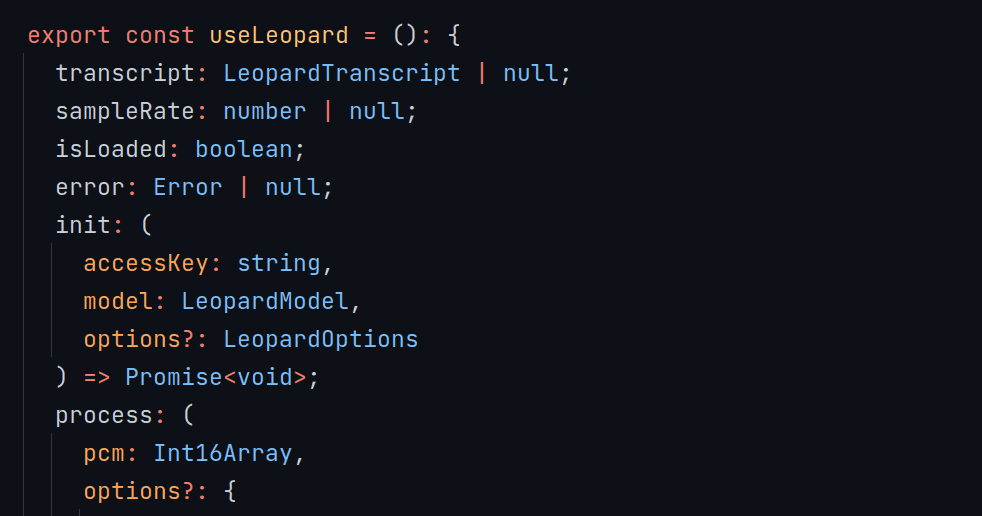

Transcribe speech to text using Picovoice Leopard speech-to-text React.js SDK. The SDK runs on Linux, macOS, Windows, Raspberry Pi, and NVID...

Learn how to create a custom speech-to-text model on the Picovoice Console using the Leopard & Cheetah Speech-to-Text Engines

Add speech-to-text to a Django project using Picovoice Leopard Speech-to-Text Python SDK. The SDK runs on Linux, macOS, Windows, Raspberry P...

Perform keyword spotting on Arm Cortex-M microcontrollers using Picovoice Porcupine Wake Word. Run NLU on MCUs using Picovoice Rhino Speech-...

Search code, repositories, users, issues, pull requests...

Provide feedback.

We read every piece of feedback, and take your input very seriously.

Saved searches

Use saved searches to filter your results more quickly.

To see all available qualifiers, see our documentation .

speech-to-text

Here are 2,809 public repositories matching this topic..., ggerganov / whisper.cpp.

Port of OpenAI's Whisper model in C/C++

- Updated Apr 12, 2024

mozilla / DeepSpeech

DeepSpeech is an open source embedded (offline, on-device) speech-to-text engine which can run in real time on devices ranging from a Raspberry Pi 4 to high power GPU servers.

- Updated Feb 18, 2024

leon-ai / leon

🧠 Leon is your open-source personal assistant.

- Updated Feb 25, 2024

kaldi-asr / kaldi

kaldi-asr/kaldi is the official location of the Kaldi project.

- Updated Jan 31, 2024

m-bain / whisperX

WhisperX: Automatic Speech Recognition with Word-level Timestamps (& Diarization)

- Updated Apr 11, 2024

SYSTRAN / faster-whisper

Faster Whisper transcription with CTranslate2

Uberi / speech_recognition

Speech recognition module for Python, supporting several engines and APIs, online and offline.

- Updated Apr 2, 2024

speechbrain / speechbrain

A PyTorch-based Speech Toolkit

nl8590687 / ASRT_SpeechRecognition

A Deep-Learning-Based Chinese Speech Recognition System 基于深度学习的中文语音识别系统

- Updated Jan 16, 2024

alphacep / vosk-api

Offline speech recognition API for Android, iOS, Raspberry Pi and servers with Python, Java, C# and Node

- Updated Apr 8, 2024

- Jupyter Notebook

TalAter / annyang

💬 Speech recognition for your site

- Updated Oct 3, 2022

jianchang512 / pyvideotrans

Translate the video from one language to another and add dubbing. 将视频从一种语言翻译为另一种语言,并添加配音

snakers4 / silero-models

Silero Models: pre-trained speech-to-text, text-to-speech and text-enhancement models made embarrassingly simple

- Updated Oct 18, 2023

sanchit-gandhi / whisper-jax

JAX implementation of OpenAI's Whisper model for up to 70x speed-up on TPU.

- Updated Apr 3, 2024

tensorflow / lingvo

Toverainc / willow.

Open source, local, and self-hosted Amazon Echo/Google Home competitive Voice Assistant alternative

- Updated Mar 2, 2024

pannous / tensorflow-speech-recognition

🎙Speech recognition using the tensorflow deep learning framework, sequence-to-sequence neural networks

- Updated Jan 17, 2024

coqui-ai / STT

🐸STT - The deep learning toolkit for Speech-to-Text. Training and deploying STT models has never been so easy.

- Updated Mar 11, 2024

MahmoudAshraf97 / whisper-diarization

Automatic Speech Recognition with Speaker Diarization based on OpenAI Whisper

- Updated Mar 12, 2024

mesolitica / NLP-Models-Tensorflow

Gathers machine learning and Tensorflow deep learning models for NLP problems, 1.13 < Tensorflow < 2.0

- Updated Jul 20, 2020

Improve this page

Add a description, image, and links to the speech-to-text topic page so that developers can more easily learn about it.

Curate this topic

Add this topic to your repo

To associate your repository with the speech-to-text topic, visit your repo's landing page and select "manage topics."

Command-line tools for speech and intent recognition on Linux

voice2json is a collection of command-line tools for offline speech/intent recognition on Linux. It is free, open source ( MIT ), and supports 18 human languages .

Getting Started

- Data Formats

- Node-RED Plugin

From the command-line:

produces a JSON event like:

when trained with this template :

Tools like Node-RED can be easily integrated with voice2json through MQTT .

voice2json is optimized for :

- Sets of voice commands that are described well by a grammar

- Commands with uncommon words or pronunciations

- Commands or intents that can vary at runtime

It can be used to:

- Add voice commands to existing applications or Unix-style workflows

- Provide basic voice assistant functionality completely offline on modest hardware

- Bootstrap more sophisticated speech/intent recognition systems

Supported speech to text systems include:

- CMU’s pocketsphinx

- Dan Povey’s Kaldi

- Mozilla’s DeepSpeech 0.9

- Kyoto University’s Julius

- Install voice2json

- Your profile settings will be in $HOME/.local/share/voice2json/<PROFILE>/profile.yml

- Edit sentences.ini in your profile and add your custom voice commands

- Train your profile

- See the recipes for more possibilities

Supported Languages