Pilot Study in Research: Definition & Examples

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She began studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer-reviewed journals.


Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A pilot study, sometimes called a feasibility study, is a small-scale preliminary study conducted before the main research to check its feasibility or to improve the research design.

Pilot studies can be very important before conducting a full-scale research project, helping design the research methods and protocol.

How Does it Work?

Pilot studies are a fundamental stage of the research process. They can help identify design issues and evaluate a study’s feasibility, practicality, resources, time, and cost before the main research is conducted.

It involves selecting a few people and trying out the study on them. Identifying flaws in the researcher's procedures at this stage can save time and, in some cases, money.

A pilot study can help the researcher spot any ambiguities (i.e., instructions or items that could be interpreted in more than one way), confusion in the information given to participants, or problems with the task devised.

Sometimes the task is too hard, and the researcher may get a floor effect: none of the participants can score at all or complete the task, so all performances are low.

The opposite is a ceiling effect, which occurs when the task is so easy that virtually all participants achieve full marks or top performances and are “hitting the ceiling.”
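One way to screen pilot data for these effects is to count how many participants score at the very bottom or top of the possible range. The Python sketch below is illustrative only. The function name `flag_floor_ceiling` and the 15% cut-off are assumptions, not a standard from this article, and researchers should choose their own threshold.

```python
def flag_floor_ceiling(scores, min_score, max_score, threshold=0.15):
    """Flag possible floor/ceiling effects in pilot scores.

    threshold is an assumed, illustrative cut-off: the proportion of
    participants at the scale minimum (or maximum) above which the task
    is flagged as possibly too hard (or too easy).
    """
    if not scores:
        raise ValueError("need at least one pilot score")
    n = len(scores)
    share_at_floor = sum(1 for s in scores if s <= min_score) / n
    share_at_ceiling = sum(1 for s in scores if s >= max_score) / n
    flags = []
    if share_at_floor > threshold:
        flags.append("floor")
    if share_at_ceiling > threshold:
        flags.append("ceiling")
    return share_at_floor, share_at_ceiling, flags


# Hypothetical pilot scores on a 0-10 task: most participants hit the maximum.
pilot_scores = [10, 10, 9, 10, 10, 8, 10, 10]
floor_share, ceiling_share, flags = flag_floor_ceiling(pilot_scores, 0, 10)
print(f"floor: {floor_share:.0%}, ceiling: {ceiling_share:.0%}, flags: {flags}")
# prints: floor: 0%, ceiling: 75%, flags: ['ceiling']
```

A flag is a prompt to adjust the task's difficulty before the main study. It is not a formal statistical test.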

Piloting therefore enables researchers to estimate an appropriate sample size, budget accordingly, and improve the study design before performing a full-scale project.

Pilot studies also provide researchers with preliminary data to gain insight into the potential results of their proposed experiment.

However, pilot studies should not be used to test hypotheses, because their sample sizes are not calculated to provide adequate statistical power. Rather, they should be used to assess the feasibility of participant recruitment or of the study design.
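A pilot can legitimately contribute an estimate of variability to sample-size planning, such as the standard deviation of the outcome. It should not contribute an effect estimate. The minimal sketch below makes three assumptions: a two-group comparison of means, a normal approximation, and a minimum important difference chosen on substantive grounds rather than taken from the pilot's own results. Under those assumptions, the per-group sample size for the main study could be computed as follows. The function name and example numbers are hypothetical.

```python
import math
from statistics import NormalDist, stdev


def n_per_group(pilot_sd, min_difference, alpha=0.05, power=0.80):
    """Per-group n for a two-sided, two-sample comparison of means.

    Uses the normal-approximation formula
        n = 2 * (z_{1-alpha/2} + z_{1-beta})**2 * sd**2 / delta**2
    with the SD estimated from pilot data. min_difference (delta) should
    be the smallest difference worth detecting, not the pilot's effect size.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    n = 2 * ((z_alpha + z_beta) * pilot_sd / min_difference) ** 2
    return math.ceil(n)  # round up to whole participants


# Hypothetical pilot outcome scores, used only to estimate the SD.
pilot_outcomes = [52, 61, 47, 58, 66, 49, 55, 63, 44, 57]
sd = stdev(pilot_outcomes)
print(round(sd, 1), n_per_group(sd, min_difference=5))
print(n_per_group(10, 5))  # SD of 10, minimum important difference of 5 -> 63
```

A small pilot estimates the SD imprecisely, so some methodologists recommend planning with a more conservative (inflated) value. A t-based calculation would also typically add a participant or two per group.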

By conducting a pilot study, researchers will be better prepared to face the challenges that might arise in the larger study. They will be more confident with the instruments they will use for data collection.

Some research projects need more than one pilot study, and these may use qualitative and/or quantitative methods.

To avoid bias, pilot studies are usually carried out on individuals who are as similar as possible to the target population but not on those who will be a part of the final sample.
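In practice, keeping pilot participants out of the final sample, and pilot data out of the main analysis, can be as simple as checking identifiers against a pilot roster. The sketch below assumes each participant has a stable ID and each data record is tagged with the phase it came from. The field names `participant_id` and `phase` are hypothetical.

```python
def screen_candidates(candidate_ids, pilot_ids):
    """Split main-study candidates into eligible and excluded (already piloted)."""
    pilot = set(pilot_ids)
    eligible = [c for c in candidate_ids if c not in pilot]
    excluded = [c for c in candidate_ids if c in pilot]
    return eligible, excluded


def main_study_records(records, pilot_ids):
    """Keep only main-phase records from participants who were not in the pilot."""
    pilot = set(pilot_ids)
    return [
        r for r in records
        if r["phase"] == "main" and r["participant_id"] not in pilot
    ]


pilot_ids = ["P01", "P02", "P03"]
eligible, excluded = screen_candidates(["P02", "P10", "P11", "P03"], pilot_ids)
print(eligible, excluded)  # ['P10', 'P11'] ['P02', 'P03']

records = [
    {"participant_id": "P01", "phase": "pilot", "score": 7},
    {"participant_id": "P10", "phase": "main", "score": 5},
    {"participant_id": "P02", "phase": "main", "score": 6},  # piloted earlier
]
print(main_study_records(records, pilot_ids))
```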

Feedback from participants in the pilot study can be used to improve the experience for participants in the main study. This might include reducing the burden on participants, improving instructions, or identifying potential ethical issues.

Experiment Pilot Study

In a pilot study with an experimental design, you would want to ensure that your measures of the study's variables are reliable and valid.

You would also want to check that you can effectively manipulate your independent variables and that you can control for potential confounding variables.

A pilot study allows the research team to gain experience and training, which can be particularly beneficial if new experimental techniques or procedures are used.

Questionnaire Pilot Study

It is important to conduct a questionnaire pilot study for the following reasons:

- Check that respondents understand the terminology used in the questionnaire.

- Check that emotive questions are not used, as they can make people defensive and could invalidate their answers.

- Check that leading questions have not been used as they could bias the respondent’s answer.

- Ensure that the questionnaire can be completed in a reasonable amount of time. If it’s too long, respondents may lose interest or not have enough time to complete it, which could affect the response rate and the data quality.
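Two of these checks lend themselves to simple pilot metrics. The first is how long respondents take to finish. The second is how often each item is skipped: a high skip rate can hint that an item's wording is unclear or uncomfortable, though it cannot say why. The Python sketch below assumes completion times in minutes and responses stored as dictionaries, with `None` for a skipped item. The 20-minute target and 10% skip threshold are illustrative assumptions.

```python
from statistics import median


def completion_time_summary(minutes, target_minutes):
    """Median completion time and share of respondents over the target."""
    over = sum(1 for m in minutes if m > target_minutes)
    return median(minutes), over / len(minutes)


def items_often_skipped(responses, max_skip_rate=0.10):
    """Return items whose skip rate (None or missing answers) exceeds max_skip_rate."""
    # Collect every item seen in any response, preserving first-seen order.
    items = dict.fromkeys(k for r in responses for k in r)
    flagged = {}
    for item in items:
        skipped = sum(1 for r in responses if r.get(item) is None)
        rate = skipped / len(responses)
        if rate > max_skip_rate:
            flagged[item] = rate
    return flagged


times = [8, 12, 15, 22, 30]  # hypothetical pilot completion times (minutes)
print(completion_time_summary(times, target_minutes=20))  # (15, 0.4)

pilot_responses = [
    {"q1": 4, "q2": None, "q3": 2},
    {"q1": 5, "q2": 3, "q3": 2},
    {"q1": 3, "q2": None, "q3": None},
    {"q1": 4, "q2": None, "q3": 1},
]
print(items_often_skipped(pilot_responses))  # {'q2': 0.75, 'q3': 0.25}
```

Flagged items are candidates for rewording or follow-up questions with pilot respondents, not automatic deletions.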

By identifying and addressing issues in the pilot study, researchers can reduce errors and risks in the main study. This increases the reliability and validity of the main study’s results.

Advantages

- Assessing the practicality and feasibility of the main study

- Testing the efficacy of research instruments

- Identifying and addressing any weaknesses or logistical problems

- Collecting preliminary data

- Estimating the time and costs required for the project

- Determining what resources are needed for the study

- Identifying procedures that need to be modified because they do not elicit useful data

- Adding credibility and dependability to the study

- Pretesting the interview format

- Enabling researchers to develop consistent practices and familiarize themselves with the procedures in the protocol

- Addressing safety issues and management problems

Limitations

- They require extra costs, time, and resources.

- They do not guarantee the success of the main study.

- Contamination can occur (i.e., when data from the pilot study or pilot participants are included in the main study results).

- Funding bodies may be reluctant to fund a further study if the pilot study results are published.

- They lack the statistical power to assess treatment effects because of their small sample size.

Examples

- Viscocanalostomy: A Pilot Study (Carassa, Bettin, Fiori, & Brancato, 1998)

- WHO International Pilot Study of Schizophrenia (Sartorius, Shapiro, Kimura, & Barrett, 1972)

- Stephen LaBerge of Stanford University ran a series of experiments in the 1980s that investigated lucid dreaming. In 1985, he performed a pilot study demonstrating that time perception during lucid dreams is the same as during wakefulness. Specifically, he had participants enter a lucid dream and count out ten seconds, signaling the start and end of the interval with predetermined eye movements measured with electrooculography (EOG).

- Negative Word-of-Mouth by Dissatisfied Consumers: A Pilot Study (Richins, 1983)

- A pilot study and randomized controlled trial of the mindful self‐compassion program (Neff & Germer, 2013)

- Pilot study of secondary prevention of posttraumatic stress disorder with propranolol (Pitman et al., 2002)

- In unstructured observations, the researcher records all relevant behavior without a predefined system. There may be too much to record, and the behaviors recorded may not be the most important ones, so the approach is usually used as a pilot study to decide which types of behavior should be recorded.

- Perspectives of the use of smartphones in travel behaviour studies: Findings from a literature review and a pilot study (Gadziński, 2018)

Further Information

- Lancaster, G. A., Dodd, S., & Williamson, P. R. (2004). Design and analysis of pilot studies: Recommendations for good practice. Journal of Evaluation in Clinical Practice, 10(2), 307-312.

- Thabane, L., Ma, J., Chu, R., Cheng, J., Ismaila, A., Rios, L. P., … & Goldsmith, C. H. (2010). A tutorial on pilot studies: The what, why and how. BMC Medical Research Methodology, 10(1), 1-10.

- Moore, C. G., Carter, R. E., Nietert, P. J., & Stewart, P. W. (2011). Recommendations for planning pilot studies in clinical and translational research. Clinical and Translational Science, 4(5), 332-337.

References

Carassa, R. G., Bettin, P., Fiori, M., & Brancato, R. (1998). Viscocanalostomy: A pilot study. European Journal of Ophthalmology, 8(2), 57-61.

Gadziński, J. (2018). Perspectives of the use of smartphones in travel behaviour studies: Findings from a literature review and a pilot study. Transportation Research Part C: Emerging Technologies, 88, 74-86.

In, J. (2017). Introduction of a pilot study. Korean Journal of Anesthesiology, 70(6), 601-605. https://doi.org/10.4097/kjae.2017.70.6.601

LaBerge, S., LaMarca, K., & Baird, B. (2018). Pre-sleep treatment with galantamine stimulates lucid dreaming: A double-blind, placebo-controlled, crossover study. PLoS One, 13(8), e0201246.

Leon, A. C., Davis, L. L., & Kraemer, H. C. (2011). The role and interpretation of pilot studies in clinical research. Journal of Psychiatric Research, 45(5), 626-629. https://doi.org/10.1016/j.jpsychires.2010.10.008

Malmqvist, J., Hellberg, K., Möllås, G., Rose, R., & Shevlin, M. (2019). Conducting the Pilot Study: A Neglected Part of the Research Process? Methodological Findings Supporting the Importance of Piloting in Qualitative Research Studies. International Journal of Qualitative Methods. https://doi.org/10.1177/1609406919878341

Neff, K. D., & Germer, C. K. (2013). A pilot study and randomized controlled trial of the mindful self‐compassion program. Journal of Clinical Psychology, 69(1), 28-44.

Pitman, R. K., Sanders, K. M., Zusman, R. M., Healy, A. R., Cheema, F., Lasko, N. B., … & Orr, S. P. (2002). Pilot study of secondary prevention of posttraumatic stress disorder with propranolol. Biological Psychiatry, 51(2), 189-192.

Richins, M. L. (1983). Negative word-of-mouth by dissatisfied consumers: A pilot study. Journal of Marketing, 47(1), 68-78.

Sartorius, N., Shapiro, R., Kimura, M., & Barrett, K. (1972). WHO International Pilot Study of Schizophrenia. Psychological Medicine, 2(4), 422-425.

van Teijlingen, E. R., & Hundley, V. (2001). The importance of pilot studies. Social Research Update, (35).

How to Conduct Effective Pilot Tests: Tips and Tricks

Introduction


Successful qualitative research projects often begin with an essential step: pilot testing. Similar to beta testing for computer programs and online services, a pilot test (sometimes referred to as a small-scale preliminary study or pilot study) trials a design on a smaller scale before the main study begins.

Whether the focus is psychiatric research, a randomized controlled trial, or any other project, conducting a pilot test provides invaluable data, allowing research teams to refine their approach, optimize their evaluation criteria, and better predict the outcomes of a full-scale project.

Pilot testing, or the act of conducting a pilot study, is a crucial phase in the research process, especially in qualitative and social science research. It serves as a preparatory step, a preliminary test that allows researchers to evaluate, refine, and if necessary, redesign aspects of their study before full implementation, as well as to estimate the cost of a full study.

What is the main purpose of pilot testing?

Pilot studies for assessing feasibility

One of the most significant purposes of a pilot test is to assess the feasibility of and identify potential design issues in the main study. It provides insights into whether a study's design is practical and achievable.

For instance, a research team might find that the originally planned method of interviewing is too time-consuming for a larger study or that participants may not be as forthcoming as hoped. Such insights from a feasibility study can save time, effort, and resources in the long run.

During pilot testing, a researcher can also determine how many participants, and what kinds of participants, might be needed for the main study to achieve meaningful results. This helps ensure that the target population is adequately represented without overwhelming the team with excessive data.

Refining research methods

A pilot study with a small sample size offers a testing ground for the instruments, tools, or techniques that the researchers plan to use.

Suppose, for example, that a project involves a new interview technique. The pilot group can provide feedback on the clarity of questions, the flow of the interview, or even the comfort level of the interaction. This feedback from a carefully selected group is vital in refining the tools so that the main study captures the richest insights possible.

No design is perfect from the outset. Pilot testing acts as a litmus test, highlighting any potential challenges or issues that might arise during the full-scale project.

By identifying these hurdles in advance, researchers can preemptively devise solutions, ensuring smoother execution when the full study is conducted.

Gathering preliminary data

While the primary aim of pilot testing is not necessarily data collection for the main study, the knowledge garnered can be incredibly valuable for improving the current study or building toward a future study.

During the pilot phase of a research project, patterns, anomalies, or unexpected results can emerge. These can lead researchers to refine their propositions or research objectives, adjusting them to better align with observed realities.

Beyond its direct application to the design of the research, the initial findings from a pilot study can have broader, more strategic uses. When seeking funding for a full-scale project, having tangible results, even if they're preliminary, can lend credibility and weight to a research proposal.

Demonstrating that a concept has been tested, even on a small scale, and has yielded insightful data can make a compelling case to potential sponsors or stakeholders.

What qualitative research approaches rely on a pilot test?

Pilot studies are a foundational component of many approaches in qualitative research. The exploratory and interpretative nature of qualitative methodologies means that research tools and strategies often benefit from preliminary testing to ensure their effectiveness.

In ethnographic research, where the goal is to study cultures and communities in-depth, pilot studies help researchers become familiar with the environment and its people. A brief preliminary visit can aid in understanding local dynamics, forging initial relationships, and refining methods to respect cultural sensitivities.

Grounded theory research, which seeks to develop theories grounded in empirical data, often starts with pilot studies. These preliminary tests aid in refining the interview protocols and sampling strategies, ensuring that the main study captures data that genuinely represents and informs the emerging theory.

Narrative research relies on the collection of stories from individuals about their experiences. Given the depth and nuance of personal narratives, a pilot test can be instrumental in determining the most effective ways to prompt and capture these stories while ensuring participants feel comfortable and understood.

Phenomenological research, which endeavors to understand the essence of participants' lived experiences around a phenomenon, often employs pilot testing to refine interview questions. It ensures that these questions elicit detailed, rich descriptions of experiences without leading or influencing the participants' responses.

In the field of case study research, where a particular case (or a few cases) is studied in-depth, pilot studies can help in delineating the boundaries of the case, deciding on the data collection methods, and anticipating potential challenges in data gathering or interpretation.

Lastly, psychiatric research, which delves into understanding mental processes, behaviors, and disorders, frequently employs pilot studies, especially when introducing new therapeutic techniques or interventions. A small-scale preliminary study can help identify any potential risks or issues before applying a new method or tool more broadly.


What are the benefits of a pilot study?

A pilot study, as a precursor to the main research, is not merely a preliminary step; it is a vital one for the researcher. Although smaller in scale than the main study, pilot studies offer several concrete benefits to the research process.

Ensuring methodological rigor

At its core, a pilot study is a testing ground for the tools, techniques, and strategies that will be employed in the main study. By test-driving these elements, you can identify weaknesses or areas of improvement in the methodology.

This helps ensure that, when the full study is conducted, the methods used are sound, reliable, and capable of yielding meaningful results. For instance, if an interview question consistently confuses participants during the pilot phase, you can revise it for clarity in the main study.

Optimizing resource allocation

One of the significant advantages of pilot testing is the potential for resource optimization. You can gain insights into the time, effort, and funds required for various activities, allowing for more accurate budgeting and scheduling.

Moreover, by anticipating potential challenges or obstacles, a pilot study can prevent costly mistakes or oversights when scaling up to the full research. For example, discovering during the pilot phase that a particular method is inefficient can save considerable time and resources in the larger study.
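As a rough illustration of that kind of budgeting, per-participant figures observed in a pilot can be scaled up to the planned main-study sample. This Python sketch assumes that costs and staff time scale linearly with sample size and that recruitment continues at the pilot's weekly rate. Real projects often have fixed costs and changing recruitment rates, so the function and all numbers here are hypothetical.

```python
import math


def project_main_study(pilot_n, pilot_staff_hours, pilot_cost,
                       pilot_recruitment_weeks, main_n, fixed_cost=0.0):
    """Linearly extrapolate pilot resource use to a main study of size main_n."""
    hours_per_participant = pilot_staff_hours / pilot_n
    cost_per_participant = pilot_cost / pilot_n
    recruits_per_week = pilot_n / pilot_recruitment_weeks
    return {
        "staff_hours": hours_per_participant * main_n,
        "cost": fixed_cost + cost_per_participant * main_n,
        # Round up: a partial week of recruitment still takes a calendar week.
        "recruitment_weeks": math.ceil(main_n / recruits_per_week),
    }


# Hypothetical pilot: 10 participants, 25 staff hours, 600 in direct costs,
# recruited over 4 weeks; the main study plans for 120 participants.
print(project_main_study(10, 25, 600, 4, main_n=120))
# {'staff_hours': 300.0, 'cost': 7200.0, 'recruitment_weeks': 48}
```

The `fixed_cost` parameter is one simple way to account for costs that do not grow with the sample, such as equipment or software licences.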

Enhancing participant experience and ethical considerations

The qualitative researcher often delves deep into participants' personal experiences, emotions, and perceptions. A pilot study provides an opportunity to ensure that the research process is respectful, sensitive, and ethically sound.

By trialing interactions with a smaller group, those who conduct the study can refine their approach to ensure participants feel valued, understood, and comfortable. This not only enhances the quality of the insights collected but also fosters trust and rapport with the research subjects.

In sum, the benefits of conducting a pilot study extend far beyond mere preliminary testing. They fortify the research process, ensuring studies are rigorous, efficient, and ethically sound.

As such, pilot studies remain a cornerstone of robust qualitative research, laying the groundwork for meaningful and impactful insights.

How do you conduct a pilot study?

A pilot study is an integral phase in the research process. It acts as a bridge between the initial study design and the full-scale project, providing information to guide the main study. To generate actionable insights and pave the way for a successful full study, researchers need to follow several key steps.

Defining objectives and scope

Before diving into the pilot study, it's essential to clearly define its objectives. What specific aspects of the main study are you testing? Is it the data collection methods, the feasibility of the research design, or the clarity of the interview questions?

Answering these questions helps keep the pilot study manageable and ensures that, once completed, it yields specific, actionable insights.
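One way to make pilot objectives specific is to record them before the pilot as measurable targets, then check the observed results against them afterwards. The Python sketch below is a hypothetical illustration. The `FeasibilityTarget` class, the recruitment and retention objectives, and their thresholds are assumptions, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class FeasibilityTarget:
    name: str
    achieved: int      # e.g. participants who consented
    attempted: int     # e.g. participants approached
    minimum: float     # acceptable proportion, fixed before the pilot starts

    @property
    def observed(self) -> float:
        return self.achieved / self.attempted

    @property
    def met(self) -> bool:
        return self.observed >= self.minimum


def review_targets(targets):
    """Map each objective to (observed proportion, whether its target was met)."""
    return {t.name: (round(t.observed, 2), t.met) for t in targets}


targets = [
    FeasibilityTarget("recruitment", achieved=18, attempted=40, minimum=0.50),
    FeasibilityTarget("retention", achieved=16, attempted=18, minimum=0.80),
]
print(review_targets(targets))
# {'recruitment': (0.45, False), 'retention': (0.89, True)}
```

A missed target points to the part of the design to revisit before the main study. In this example, that would be the recruitment strategy.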

Selecting a representative sample

For a pilot study to be effective, the sample chosen should be a good representation of the target population. This doesn't mean it needs to be large; after all, it's a small-scale preliminary study.

However, it should capture the diversity and characteristics of the population to provide a realistic preview of how the research might unfold. Think about how your selected group addresses the needs of your study and evaluate whether their contributions to the research can help you answer your research questions.

Collecting and analyzing data

Once the objectives are set and the participants are chosen, the next step is data collection. Employ the same tools, methods, or interventions you plan to use in the main study. Then analyze what you have collected with a keen eye for patterns, anomalies, or unexpected outcomes.

This phase isn't just about collecting preliminary insights for the main study but about gauging the effectiveness of your methods and drawing insights to refine your approach.

Steps after evaluation of pilot testing

Reflections on the design of your study should follow pilot testing. The final design you decide on should draw on every useful insight you gather from your pilot study.

Adjust study methods

Pilot studies are especially useful when they help identify design issues. If aspects of your study proved ineffective at collecting insights during the pilot, you can adjust them before the main study.

Identify opportunities for richer collection

Pilot testing is not merely a phase to iron out mistakes and shortcomings. A good pilot study should also allow you to identify aspects of your study that were successful and would be even more successful if fully optimized. If there are interview questions that resonated with research participants, for example, think about how those questions can be better utilized in a full-scale study.


- Open access

- Published: 31 October 2020

Guidance for conducting feasibility and pilot studies for implementation trials

Nicole Pearson (ORCID: orcid.org/0000-0003-2677-2327), Patti-Jean Naylor, Maureen C. Ashe, Maria Fernandez, Sze Lin Yoong & Luke Wolfenden

Pilot and Feasibility Studies, volume 6, Article number: 167 (2020)


Implementation trials aim to test the effects of implementation strategies on the adoption, integration or uptake of an evidence-based intervention within organisations or settings. Feasibility and pilot studies can assist with building and testing effective implementation strategies by helping to address uncertainties around design and methods, assessing potential implementation strategy effects and identifying potential causal mechanisms. This paper aims to provide broad guidance for the conduct of feasibility and pilot studies for implementation trials.

We convened a group with a mutual interest in the use of feasibility and pilot trials in implementation science including implementation and behavioural science experts and public health researchers. We conducted a literature review to identify existing recommendations for feasibility and pilot studies, as well as publications describing formative processes for implementation trials. In the absence of previous explicit guidance for the conduct of feasibility or pilot implementation trials specifically, we used the effectiveness-implementation hybrid trial design typology proposed by Curran and colleagues as a framework for conceptualising the application of feasibility and pilot testing of implementation interventions. We discuss and offer guidance regarding the aims, methods, design, measures, progression criteria and reporting for implementation feasibility and pilot studies.

Conclusions

This paper provides a resource for those undertaking preliminary work to enrich and inform larger scale implementation trials.

Peer Review reports

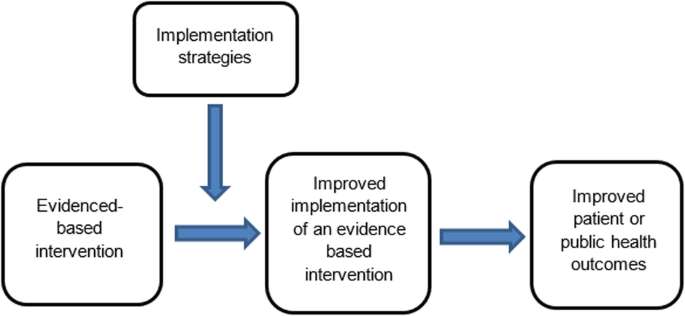

The failure to translate effective interventions for improving population and patient outcomes into policy and routine health service practice denies the community the benefits of investment in such research [1]. Improving the implementation of effective interventions has therefore been identified as a priority of health systems and research agencies internationally [2, 3, 4, 5, 6]. The increased emphasis on research translation has resulted in the rapid emergence of implementation science as a scientific discipline, with the goal of integrating effective medical and public health interventions into health care systems, policies and practice [1]. Implementation research aims to do this via the generation of new knowledge, including the evaluation of the effectiveness of implementation strategies [7]. The term “implementation strategies” is used to describe the methods or techniques (e.g. training, performance feedback, communities of practice) used to enhance the adoption, implementation and/or sustainability of evidence-based interventions (Fig. 1) [8, 9].

Fig. 1: Conceptual role of implementation strategies in improving intervention implementation and patient and public health outcomes

While there has been a rapid increase in the number of implementation trials over the past decade, the quality of trials has been criticised, and the effects of the strategies tested in such trials on implementation, patient or public health outcomes have been modest [11, 12, 13]. To improve the likelihood of impact, factors that may impede intervention implementation should be considered during intervention development and across each phase of the research translation process [2]. Feasibility and pilot studies play an important role in improving the conduct and quality of a definitive randomised controlled trial (RCT) for both intervention and implementation trials [10]. For clinical or public health interventions, pilot and feasibility studies may serve to identify potential refinements to the intervention, address uncertainties around the feasibility of intervention trial methods, or test preliminary effects of the intervention [10]. In implementation research, feasibility and pilot studies perform the same functions as those for intervention trials, but with a focus on developing or refining implementation strategies, refining research methods for an implementation intervention trial, or undertaking preliminary testing of implementation strategies [14, 15]. Despite this, reviews of implementation studies appear to suggest that few full implementation randomised controlled trials have undertaken feasibility and pilot work in advance of a larger trial [16].

A range of publications provides guidance for the conduct of feasibility and pilot studies for conventional clinical or public health efficacy trials, including Guidance for Exploratory Studies of complex public health interventions [17] and the Consolidated Standards of Reporting Trials (CONSORT 2010) for Pilot and Feasibility trials [18]. However, given the differences between implementation trials and conventional clinical or public health efficacy trials, the field of implementation science has identified the need for nuanced guidance [14, 15, 16, 19, 20]. Specifically, unlike traditional feasibility and pilot studies that may include the preliminary testing of interventions on individual clinical or public health outcomes, implementation feasibility and pilot studies that explore strategies to improve intervention implementation often require assessing changes across multiple levels, including individuals (e.g. service providers or clinicians) and organisational systems [21]. Due to the complexity of influencing behaviour change, the role of feasibility and pilot studies of implementation may also extend to identifying potential causal mechanisms of change and facilitating an iterative process of refining intervention strategies and optimising their impact [16, 17]. In addition, whereas conventional clinical or public health efficacy trials are typically conducted under controlled conditions and directed mostly by researchers, implementation trials are more pragmatic [15]. As is the case for well-conducted effectiveness trials, implementation trials often require partnerships with end-users and, at times, the prioritisation of end-user needs over methods (e.g. random assignment) that seek to maximise internal validity [15, 22]. These factors pose additional challenges for implementation researchers and underscore the need for guidance on conducting feasibility and pilot implementation studies.

Given the importance of feasibility and pilot studies in improving implementation strategies and the quality of full-scale trials of those implementation strategies, our aim is to provide practice guidance for those undertaking formative feasibility or pilot studies in the field of implementation science. Specifically, we seek to provide guidance pertaining to the three possible purposes of undertaking pilot and feasibility studies, namely (i) to inform implementation strategy development, (ii) to assess potential implementation strategy effects and (iii) to assess the feasibility of study methods.

A series of three facilitated group discussions was conducted with a group of six members from Canada, the U.S. and Australia (the authors of the manuscript) who were mutually interested in the use of feasibility and pilot trials in implementation science. Members included international experts in implementation and behavioural science, public health and trial methods, and had considerable experience in conducting feasibility, pilot and/or implementation trials. The group was responsible for developing the guidance document, including identification and synthesis of pertinent literature, and approving the final guidance.

To inform guidance development, a literature review was undertaken in electronic bibliographic databases and Google to identify and compile existing recommendations and guidelines for feasibility and pilot studies broadly. Through this process, we identified 30 such guidelines and recommendations relevant to our aim [2, 10, 14, 15, 17, 18, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45]. In addition, seminal methods and implementation science texts recommended by the group were examined. These included the CONSORT 2010 Statement: extension to randomised pilot and feasibility trials [18], the Medical Research Council’s framework for development and evaluation of randomised controlled trials for complex interventions to improve health [2], the National Institute for Health Research (NIHR) definitions [39] and the Quality Enhancement Research Initiative (QUERI) Implementation Guide [4]. A summary of feasibility and pilot study guidelines and recommendations, and of seminal methods and implementation science texts, was compiled by two authors. This document served as the primary discussion document in meetings of the group. Additional targeted searches of the literature were undertaken in circumstances where the identified literature did not provide sufficient guidance. The manuscript was developed iteratively over 9 months via electronic circulation and comment by the group. Any differences in views between reviewers were discussed and resolved via consensus during scheduled international video-conference calls. All members of the group supported and approved the content of the final document.

The broad guidance provided is intended to be used as a supplementary resource to existing seminal feasibility and pilot study resources. We used the definitions of feasibility and pilot studies as proposed by Eldridge and colleagues [10]. These definitions propose that any type of study relating to the preparation for a main study may be classified as a “feasibility study”, and that the term “pilot” study represents a subset of feasibility studies that specifically look at a design feature proposed for the main trial, whether in part or in full, that is being conducted on a smaller scale [10]. In addition, when referring to pilot studies, unless explicitly stated otherwise, we will primarily focus on pilot trials using a randomised design. We focus on randomised trials as such designs are the most common trial design in implementation research, and randomised designs may provide the most robust estimates of the potential effect of implementation strategies [46]. Those undertaking pilot studies that employ non-randomised designs need to interpret the guidance provided in this context. We acknowledge, however, that using randomised designs can prove particularly challenging in the field of implementation science, where research is often undertaken in real-world contexts with pragmatic constraints.

We used the effectiveness-implementation hybrid trial design typology proposed by Curran and colleagues as the framework for conceptualising the application of feasibility testing to implementation interventions [47]. The typology makes an explicit distinction between the purpose and methods of implementation trials and those of conventional clinical (or public health efficacy) trials. Specifically, the first two of the three hybrid designs may be relevant to implementation feasibility or pilot studies. Hybrid Type 1 trials are designed to test the effectiveness of an intervention on clinical or public health outcomes (primary aim) while conducting a feasibility or pilot study for future implementation by observing and gathering information on implementation in a real-world setting or situation (secondary aim) [47]. Hybrid Type 2 trials involve, as co-primary aims, the simultaneous testing of the clinical intervention and the testing or feasibility assessment of a formed implementation intervention or strategy. For this design, “testing” includes pilot studies with an outcome measure and related hypothesis [47]. Hybrid Type 3 trials are definitive implementation trials designed to test the effectiveness of an implementation strategy while also collecting secondary outcome data on clinical or public health outcomes in a population of interest [47]. Because the implementation component of a Hybrid Type 3 trial is definitively powered, this design was not considered relevant to the conduct of feasibility and pilot studies in the field and is not discussed further.

Embedding feasibility and pilot studies within Type 1 and Type 2 effectiveness-implementation hybrid trials has been recommended as an efficient way to increase the information and evidence available to accelerate the field of implementation science and the development and testing of implementation strategies [4]. However, implementation feasibility and pilot studies are also undertaken as stand-alone exploratory studies that do not include effectiveness measures of patient or public health outcomes. As such, in addition to discussing feasibility and pilot trials embedded in hybrid trial designs, we also refer to stand-alone implementation feasibility and pilot studies.

An overview of guidance (aims, design, measures, sample size and power, progression criteria and reporting) for feasibility and pilot implementation studies can be found in Table 1.

Purpose (aims)

The primary objective of a Hybrid Type 1 trial is to assess the effectiveness of a clinical or public health intervention (rather than an implementation strategy) on patient or population health outcomes [47]. Implementation strategies employed in these trials are often designed to maximise the likelihood of an intervention effect [51] and may not be intended to represent the strategy that would (or could feasibly) be used to support implementation in more “real-world” contexts. The specific aims of implementation feasibility or pilot studies undertaken as part of Hybrid Type 1 trials are therefore formative and descriptive, as the implementation strategy has not been fully formed and will not be tested. Thus, the purpose of a Hybrid Type 1 feasibility study is generally to inform the development or refinement of the implementation strategy rather than to test its potential effects or mechanisms [22, 47]. An example of a Hybrid Type 1 trial by Cabassa and colleagues is provided in Additional file 1 [52].

Hybrid Type 2 trial designs have a dual purpose: (i) to test the clinical or public health effectiveness of the intervention on clinical or public health outcomes (e.g. a measure of disease or health behaviour) and (ii) to test or measure the impact of the implementation strategy on implementation outcomes (e.g. adoption of a health policy in a community setting) [53]. However, testing the implementation strategy on implementation outcomes may be a secondary aim in these trials and positioned as a pilot [22]. In Hybrid Type 2 designs, the implementation strategy is more developed than in Hybrid Type 1 trials, resembling the strategy intended for future testing in a definitive implementation randomised controlled trial. Dual testing of the evidence-based intervention and the implementation interventions or strategies allows direct assessment of the potential effects of an implementation strategy and exploration of the strategy's components to further refine logic models. Such trials also allow assessment of the feasibility, utility, acceptability or quality of research methods for use in a planned definitive trial. An example of a Hybrid Type 2 trial design by Barnes and colleagues [54] is included in Additional file 2.

Non-hybrid pilot implementation studies are undertaken in the absence of a broader effectiveness trial. Such studies typically occur when the effectiveness of a clinical or public health intervention is well established, but robust strategies to promote its broader uptake and integration into clinical or public health services remain untested [15]. In these situations, implementation pilot studies may test or explore specific trial methods for a future definitive randomised implementation trial. A pilot implementation study may also be designed to provide a more rigorous formative evaluation of hypothesised implementation strategy mechanisms [55] or of the potential impact of implementation strategies [56], using approaches similar to those employed in Hybrid Type 2 trials. Examples of potential aims for feasibility and pilot studies are outlined in Table 2.

For implementation feasibility or pilot studies, as for these types of studies in general, the choice of research design should be guided by the specific research question the study seeks to address [57]. Although almost any study design may be used, researchers should weigh the merits of each design and its potential threats to internal and external validity when selecting a design for feasibility or pilot testing [15].

As Hybrid Type 1 trials are primarily concerned with testing the effectiveness of an intervention (rather than an implementation strategy), the research design will typically employ power calculations and randomisation procedures at the health outcome level to measure effects on behaviour, symptoms, functional and/or other clinical or public health outcomes. Hybrid Type 1 feasibility studies may employ a variety of designs, usually nested within the experimental group of the broader effectiveness trial (those receiving the intervention and any form of implementation support strategy) [47]. Consistent with the aims of Hybrid Type 1 feasibility and pilot studies, the research designs employed are likely to be non-comparative. Cross-sectional surveys, interviews, document review, qualitative research or mixed-methods approaches may be used to assess contextual factors for implementation, such as barriers and enablers, and/or the acceptability, perceived feasibility or utility of implementation strategies or research methods [47].

Pilot implementation studies within Hybrid Type 2 designs can use the comparative design of the broader effectiveness trial to examine the potential effects of the implementation strategy [47] and to assess more robustly the implementation mechanisms, determinants and influence of broader contextual factors [53]. In this trial type, mixed-methods and qualitative approaches may complement between-group quantitative comparisons (implementation strategy arm versus comparison), enable triangulation and provide more comprehensive evidence to inform implementation strategy development and assessment.

Stand-alone implementation feasibility and pilot studies are free from both the constraints and the opportunities of research embedded in broader effectiveness trials. As such, they can be designed in whatever way best addresses their explicit implementation objectives. Specifically, non-hybrid pilot studies can maximise the applicability of their findings to future definitive trials by employing methods that directly test trial procedures such as recruitment or retention strategies [17], enable estimates of implementation strategy effects [56] or capture data to explicitly test logic models or strategy mechanisms.

The selection of outcome measures should be linked directly to the objectives of the feasibility or pilot study. Where appropriate, measures should be objective or have suitable psychometric properties, such as evidence of reliability and validity [58, 59]. Public health evaluation frameworks often guide the choice of outcome measures in feasibility and pilot implementation work; these include RE-AIM [60], PRECEDE-PROCEED [61], Proctor and colleagues' framework of outcomes for implementation research [62] and, more recently, the “Implementation Mapping” framework [63]. Recent work by McKay and colleagues suggests a minimum data set of implementation outcomes that includes measures of adoption, reach, dose, fidelity and sustainability [46]. We discuss selected measures below and provide a summary in Table 3 [46]. Such measures could be assessed using quantitative, qualitative or mixed methods [46].

Measures to assess potential implementation strategy effects

In addition to assessing the effects of an intervention on individual clinical or public health outcomes, Hybrid Type 2 trials (and some non-hybrid pilot studies) are interested in measures of the potential effects of an implementation strategy on desired organisational or clinician practice change, such as adherence to a guideline, process or clinical standard, or delivery of a program [62]. A range of potential outcomes that could be used to assess implementation strategy effects has been identified, including measures of adoption, reach, fidelity and sustainability [46]. These outcomes are described in Table 2, including definitions and examples of how they may be applied to the implementation component of the innovation being piloted. Standardised tools to assess these outcomes are often unavailable because of the unique nature of the interventions being implemented and the variable (and changing) implementation contexts in which the research is undertaken [64]. Researchers may collect outcome data for these measures through environmental observations, self-completed checklists, administrative records, audio recordings of client sessions or other methods suited to their study and context [62]. The limitations of each such method, however, need to be considered.

Measures to inform the design or development of the implementation strategy

Measures informing the design or development of the implementation strategy are potentially part of all types of feasibility and pilot implementation studies. An understanding of the determinants of implementation is critical to implementation strategy development. A range of theoretical determinant frameworks describing factors that may influence intervention implementation has been published [65], and systematic reviews have described the psychometric properties of many measures of these determinants [64, 66]. McKay and colleagues have also identified a priority set of determinants for implementation trials that could be considered for use in implementation feasibility and pilot studies, including measures of context, acceptability, adaptability, feasibility, compatibility, cost, culture, dose, complexity and self-efficacy [46]. These determinants are described in Table 3, including definitions and how such measures may be applied to an implementation feasibility or pilot study. Researchers should, however, consider applying such measures both to the intervention being implemented (as in a conventional intervention feasibility and pilot study) and to the strategy employed to facilitate its implementation, given the importance of the interaction between these factors for implementation success [46]. Examples of applying measures to both the intervention and its implementation strategies have been outlined elsewhere [46]. Although a range of quantitative tools could be used to measure such determinants [58, 66], qualitative or mixed methods are generally recommended given their capacity to add depth to the interpretation of such evaluations [40].

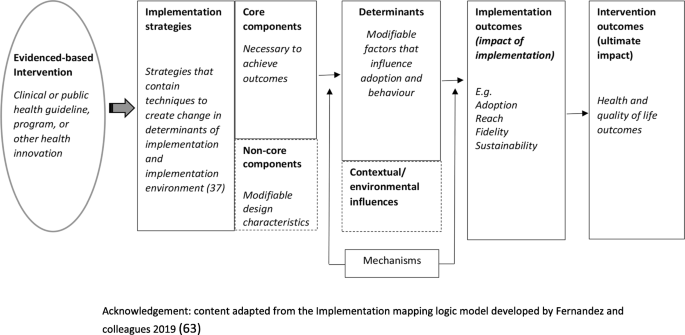

Measures of potential implementation determinants may be included to build or enhance logic models (Hybrid Type 1 and 2 feasibility and pilot studies) and to explore implementation strategy mechanisms (Hybrid Type 2 pilot studies and non-hybrid pilot studies) [67]. If strategy mechanisms are being explored, a hypothesised logic model underpinning the implementation strategy should be articulated, including the strategy–mechanism linkages required to guide the measurement of key determinants [55, 63]. An important determinant that can complicate logic model specification and measurement is adaptation: modifications to the intervention or its delivery (implementation) made through the input of service providers or implementers [68]. Logic models should specify which components of implementation strategies are thought to be “core” to their effects and which are “non-core”, where adaptation may occur without adversely affecting those effects. Stirman and colleagues propose a method for assessing adaptations that could be considered for use in pilot and feasibility studies of implementation trials [69]. Figure 2 provides an example of some of the implementation logic model components that may be developed or refined as part of feasibility or pilot studies of implementation [15, 63].

Figure 2. Example of components of an implementation logic model

Measures to assess the feasibility of study methods

Measures of the feasibility of study methods in implementation feasibility and pilot studies are similar to those used in conventional studies of clinical or public health interventions. For example, standard measures of study participation and attrition rates, together with attrition thresholds (e.g. >20%) [73], can be employed in implementation studies [67]. Previous studies have also surveyed study data collectors to assess the success of blinding strategies [74]. Researchers may also consider assessing participation in, or adherence to, implementation data collection procedures, the comprehension of survey items, data management strategies or other measures of the feasibility of study methods [15].
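As a minimal illustration of checking an attrition threshold such as the >20% example above, the sketch below computes attrition from counts of enrolled and retained participants (or sites) and flags whether it exceeds a pre-specified threshold. The function names, the example counts and the default threshold of 0.20 are illustrative assumptions, not part of the cited guidance.

```python
def attrition_rate(enrolled: int, retained: int) -> float:
    """Proportion of enrolled participants (or sites) lost to follow-up."""
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    if not 0 <= retained <= enrolled:
        raise ValueError("retained must be between 0 and enrolled")
    return (enrolled - retained) / enrolled


def exceeds_attrition_threshold(enrolled: int, retained: int, threshold: float = 0.20) -> bool:
    """Flag attrition strictly above a pre-specified threshold.

    The 0.20 default is a hypothetical choice echoing the ">20%" example in the text.
    """
    return attrition_rate(enrolled, retained) > threshold


# Hypothetical pilot: 40 services enrolled, 30 retained at follow-up
print(attrition_rate(40, 30))               # 0.25
print(exceeds_attrition_threshold(40, 30))  # True
```

In this made-up example, 10 of 40 services are lost to follow-up, giving 25% attrition, which would exceed a 20% threshold.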

Pilot study sample size and power

In effectiveness trials, power calculations and sample size decisions are primarily based on detecting a clinically meaningful difference in measures of the intervention's effects on patient or public health outcomes such as behaviour, disease, symptomatology or functional outcomes [24]. In this context, the sample available for implementation measures included in Hybrid Type 1 or 2 feasibility and pilot studies may be constrained by the sample size and power calculations of the broader effectiveness trial in which they are embedded [47]. Nonetheless, a justification of the anticipated sample size is recommended for all implementation feasibility or pilot studies, hybrid or stand-alone [18], to ensure that implementation measures and outcomes are estimated with enough precision to be useful. For Hybrid Type 2 and relevant stand-alone implementation pilot studies, sample size calculations for implementation outcomes should aim for estimates precise enough to inform progression to a fully powered trial [18].
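One common way to justify a pilot sample size on precision rather than power grounds is to choose the number of units needed for the confidence interval around an implementation outcome proportion (for example, the proportion of services delivering a program with fidelity) to be no wider than a target. The sketch below assumes the normal-approximation (Wald) interval, n = z²p(1 − p)/d², where p is the anticipated proportion and d the desired half-width; the formula choice, function names and example values are illustrative assumptions rather than a method prescribed by the cited guidance. It also ignores clustering, so designs that sample or randomise clusters would need further adjustment (e.g. inflation by a design effect).

```python
import math
from statistics import NormalDist


def _z(confidence: float) -> float:
    # Two-sided critical value of the standard normal (about 1.96 for 95% confidence).
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)


def n_for_proportion_precision(expected_p: float, half_width: float,
                               confidence: float = 0.95) -> int:
    """Smallest n whose Wald CI half-width around expected_p is at most half_width."""
    if not 0 < expected_p < 1:
        raise ValueError("expected_p must lie strictly between 0 and 1")
    if not 0 < half_width < 1:
        raise ValueError("half_width must lie strictly between 0 and 1")
    z = _z(confidence)
    return math.ceil(z ** 2 * expected_p * (1 - expected_p) / half_width ** 2)


def ci_half_width(p_hat: float, n: int, confidence: float = 0.95) -> float:
    """Half-width of the Wald CI for an observed proportion p_hat from n units."""
    return _z(confidence) * math.sqrt(p_hat * (1 - p_hat) / n)


# Hypothetical example: anticipated fidelity of 70%, estimated to within +/-10 percentage points
print(n_for_proportion_precision(0.70, 0.10))  # 81
```

Other interval methods (e.g. the Wilson interval) give somewhat different answers, particularly for proportions near 0 or 1.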

Progression criteria

Stating progression criteria when reporting feasibility and pilot studies is recommended in the CONSORT 2010 extension to randomised pilot and feasibility trials [18]. Generally, progression criteria should be set a priori and be specific to the feasibility measures, components and/or outcomes assessed in the study [18]. Although little guidance is available, suggested progression criteria include assessment of uncertainties around feasibility, meeting recruitment targets, cost-effectiveness and refining causal hypotheses to be tested in future trials [17]. When developing progression criteria, guidelines rather than strict thresholds are suggested [18] to allow appropriate interpretation and exploration of potential solutions, for example, a traffic light system with varying levels of acceptability [17, 24]. Thabane and colleagues, for instance, recommend that, in general, the outcome of a pilot study can be one of the following: (i) stop: main study not feasible (red); (ii) continue, but modify protocol: feasible with modifications (yellow); (iii) continue without modifications, but monitor closely: feasible with close monitoring; and (iv) continue without modifications (green) [44] (p. 5).
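To make the traffic-light idea concrete, the sketch below maps observed feasibility measures onto red, yellow and green categories using thresholds fixed in advance. The measures, threshold values, observed results and function names are all hypothetical. The sketch collapses the four outcomes listed above into three colours (omitting "continue without modifications, but monitor closely"), and, consistent with the preference for guidelines over strict thresholds, its output is meant as a starting point for discussion with the study team and stakeholders rather than an automatic decision.

```python
from enum import Enum


class Progression(Enum):
    # Labels follow the red/yellow/green outcomes listed in the text.
    RED = "stop: main study not feasible"
    YELLOW = "continue, but modify protocol: feasible with modifications"
    GREEN = "continue without modifications"


def classify(observed: float, green_min: float, yellow_min: float) -> Progression:
    """Map a feasibility measure (higher is better) onto an a priori traffic light."""
    if yellow_min > green_min:
        raise ValueError("yellow_min must not exceed green_min")
    if observed >= green_min:
        return Progression.GREEN
    if observed >= yellow_min:
        return Progression.YELLOW
    return Progression.RED


# Hypothetical a priori criteria: (green threshold, yellow threshold), as proportions.
criteria = {
    "recruitment (proportion of target)": (0.80, 0.50),
    "retention at follow-up": (0.80, 0.60),
    "fidelity to core components": (0.75, 0.50),
}
# Hypothetical pilot results.
observed = {
    "recruitment (proportion of target)": 0.85,
    "retention at follow-up": 0.70,
    "fidelity to core components": 0.40,
}

for measure, (green_min, yellow_min) in criteria.items():
    result = classify(observed[measure], green_min, yellow_min)
    print(f"{measure}: {result.name} ({result.value})")
```

With these made-up inputs, recruitment is classed green, retention yellow and fidelity red.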

As the goal of the implementation component of Hybrid Type 1 trials is usually formative, it may not be necessary to set additional progression criteria for the implementation outcomes and measures examined. As Hybrid Type 2 trials test an intervention and can pilot an implementation strategy, these and non-hybrid pilot studies may set progression criteria based on evidence of potential effects, but may also consider the feasibility of trial methods, service provider, organisational or patient (or community) acceptability, fit with organisational systems and cost-effectiveness [17]. In many instances, the progression of implementation pilot studies will require the input and agreement of stakeholders [27]. As such, establishing progression criteria, and interpreting pilot and feasibility study findings against those criteria, requires stakeholder input [27].

Reporting suggestions

As formal reporting guidelines do not exist for hybrid trial designs, we recommend that feasibility and pilot studies conducted as part of hybrid designs draw upon best practice recommendations from relevant reporting standards, such as the CONSORT extension for randomised pilot and feasibility trials, the Standards for Reporting Implementation Studies (StaRI) guidelines and the Template for Intervention Description and Replication (TIDieR) guide, as well as any other design-relevant reporting standards [48, 50, 75]. These, and further reporting guidelines specific to the chosen research design, can be accessed through the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) network, a repository of reporting guidance [76]. In addition, researchers should specify the type of implementation feasibility or pilot study being undertaken using accepted definitions. Where applicable, the choice of hybrid trial design should also be stated and justified. In line with existing recommendations for reporting implementation trials generally, reporting the referent of outcomes (e.g. specifying whether a measure relates to the specific intervention or to the implementation strategy) [62] is particularly pertinent when reporting hybrid trial designs.

Concerns are often raised regarding the quality of implementation trials and their capacity to contribute to the collective evidence base [3]. Although there have been many recent developments in standardising guidance for implementation trials, information on the conduct of feasibility and pilot studies for implementation interventions remains limited, potentially contributing to a lack of exploratory work in this area and a limited evidence base to inform effective implementation intervention design and conduct [15]. To address this, we synthesised the existing literature and provide commentary and guidance for the conduct of implementation feasibility and pilot studies. To our knowledge, this work is the first to do so and is an important first step towards the development of standardised guidelines for implementation-related feasibility and pilot studies.

Availability of data and materials

Not applicable.

Abbreviations

RCT: Randomised controlled trial

CONSORT: Consolidated Standards of Reporting Trials

EQUATOR: Enhancing the QUAlity and Transparency Of health Research

StaRI: Standards for Reporting Implementation Studies

STROBE: Strengthening the Reporting of Observational Studies in Epidemiology

TIDieR: Template for Intervention Description and Replication

NIHR: National Institute for Health Research

QUERI: Quality Enhancement Research Initiative

Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3:32.

Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M, et al. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008;337:a1655.

Eccles MP, Armstrong D, Baker R, Cleary K, Davies H, Davies S, et al. An implementation research agenda. Implement Sci. 2009;4:18.

Department of Veterans Health Administration. Implementation Guide. Health Services Research & Development, Quality Enhancement Research Initiative. Updated 2013.

Peters DH, Nhan TT, Adam T. Implementation research: a practical guide; 2013.

Neta G, Sanchez MA, Chambers DA, Phillips SM, Leyva B, Cynkin L, et al. Implementation science in cancer prevention and control: a decade of grant funding by the National Cancer Institute and future directions. Implement Sci. 2015;10:4.

Foy R, Sales A, Wensing M, Aarons GA, Flottorp S, Kent B, et al. Implementation science: a reappraisal of our journal mission and scope. Implement Sci. 2015;10:51.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

Leeman J, Birken SA, Powell BJ, Rohweder C, Shea CM. Beyond "implementation strategies": classifying the full range of strategies used in implementation science and practice. Implement Sci. 2017;12(1):125.

Eldridge SM, Lancaster GA, Campbell MJ, Thabane L, Hopewell S, Coleman CL, et al. Defining feasibility and pilot studies in preparation for randomised controlled trials: development of a conceptual framework. PLoS One. 2016;11(3):e0150205.

Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69(2):123–57.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement Sci. 2015;10:21.

Lewis CC, Stanick C, Lyon A, Darnell D, Locke J, Puspitasari A, et al. Proceedings of the fourth biennial conference of the Society for Implementation Research Collaboration (SIRC) 2017: implementation mechanisms: what makes implementation work and why? Part 1. Implement Sci. 2018;13(Suppl 2):30.

Levati S, Campbell P, Frost R, Dougall N, Wells M, Donaldson C, et al. Optimisation of complex health interventions prior to a randomised controlled trial: a scoping review of strategies used. Pilot Feasibility Stud. 2016;2:17.

Bowen DJ, Kreuter M, Spring B, Cofta-Woerpel L, Linnan L, Weiner D, et al. How we design feasibility studies. Am J Prev Med. 2009;36(5):452–7.

Eccles M, Grimshaw J, Walker A, Johnston M, Pitts N. Changing the behavior of healthcare professionals: the use of theory in promoting the uptake of research findings. J Clin Epidemiol. 2005;58(2):107–12.

Hallingberg B, Turley R, Segrott J, Wight D, Craig P, Moore L, et al. Exploratory studies to decide whether and how to proceed with full-scale evaluations of public health interventions: a systematic review of guidance. Pilot Feasibility Stud. 2018;4:104.

Eldridge SM, Chan CL, Campbell MJ, Bond CM, Hopewell S, Thabane L, et al. CONSORT 2010 statement: extension to randomised pilot and feasibility trials. Pilot Feasibility Stud. 2016;2:64.

Proctor EK, Powell BJ, Baumann AA, Hamilton AM, Santens RL. Writing implementation research grant proposals: ten key ingredients. Implement Sci. 2012;7:96.

Stetler CB, Legro MW, Wallace CM, Bowman C, Guihan M, Hagedorn H, et al. The role of formative evaluation in implementation research and the QUERI experience. J Gen Intern Med. 2006;21(Suppl 2):S1–8.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Admin Pol Ment Health. 2011;38(1):4–23.

Johnson AL, Ecker AH, Fletcher TL, Hundt N, Kauth MR, Martin LA, et al. Increasing the impact of randomized controlled trials: an example of a hybrid effectiveness-implementation design in psychotherapy research. Transl Behav Med. 2018.

Arain M, Campbell MJ, Cooper CL, Lancaster GA. What is a pilot or feasibility study? A review of current practice and editorial policy. BMC Med Res Methodol. 2010;10(1):67.

Avery KN, Williamson PR, Gamble C, O’Connell Francischetto E, Metcalfe C, Davidson P, et al. Informing efficient randomised controlled trials: exploration of challenges in developing progression criteria for internal pilot studies. BMJ Open. 2017;7(2):e013537.

Bell ML, Whitehead AL, Julious SA. Guidance for using pilot studies to inform the design of intervention trials with continuous outcomes. J Clin Epidemiol. 2018;10:153–7.

Billingham SAM, Whitehead AL, Julious SA. An audit of sample sizes for pilot and feasibility trials being undertaken in the United Kingdom registered in the United Kingdom clinical research Network database. BMC Med Res Methodol. 2013;13(1):104.

Bugge C, Williams B, Hagen S, Logan J, Glazener C, Pringle S, et al. A process for decision-making after pilot and feasibility trials (ADePT): development following a feasibility study of a complex intervention for pelvic organ prolapse. Trials. 2013;14:353.

Charlesworth G, Burnell K, Hoe J, Orrell M, Russell I. Acceptance checklist for clinical effectiveness pilot trials: a systematic approach. BMC Med Res Methodol. 2013;13(1):78.

Eldridge SM, Costelloe CE, Kahan BC, Lancaster GA, Kerry SM. How big should the pilot study for my cluster randomised trial be? Stat Methods Med Res. 2016;25(3):1039–56.

Fletcher A, Jamal F, Moore G, Evans RE, Murphy S, Bonell C. Realist complex intervention science: applying realist principles across all phases of the Medical Research Council framework for developing and evaluating complex interventions. Evaluation (Lond). 2016;22(3):286–303.

Hampson LV, Williamson PR, Wilby MJ, Jaki T. A framework for prospectively defining progression rules for internal pilot studies monitoring recruitment. Stat Methods Med Res. 2018;27(12):3612–27.

Kraemer HC, Mintz J, Noda A, Tinklenberg J, Yesavage JA. Caution regarding the use of pilot studies to guide power calculations for study proposals. Arch Gen Psychiatry. 2006;63(5):484–9.

Smith LJ, Harrison MB. Framework for planning and conducting pilot studies. Ostomy Wound Manage. 2009;55(12):34–48.

Lancaster GA, Dodd S, Williamson PR. Design and analysis of pilot studies: recommendations for good practice. J Eval Clin Pract. 2004;10(2):307–12.

Leon AC, Davis LL, Kraemer HC. The role and interpretation of pilot studies in clinical research. J Psychiatr Res. 2011;45(5):626–9.

Medical Research Council. A framework for development and evaluation of RCTs for complex interventions to improve health. London: Medical Research Council; 2000.

Möhler R, Bartoszek G, Meyer G. Quality of reporting of complex healthcare interventions and applicability of the CReDECI list - a survey of publications indexed in PubMed. BMC Med Res Methodol. 2013;13(1):125.

Möhler R, Köpke S, Meyer G. Criteria for reporting the development and evaluation of complex interventions in healthcare: revised guideline (CReDECI 2). Trials. 2015;16(1):204.

National Institute for Health Research. Definitions of feasibility vs pilot studies [Available from: https://www.nihr.ac.uk/documents/guidance-on-applying-for-feasibility-studies/20474].

O'Cathain A, Hoddinott P, Lewin S, Thomas KJ, Young B, Adamson J, et al. Maximising the impact of qualitative research in feasibility studies for randomised controlled trials: guidance for researchers. Pilot Feasibility Stud. 2015;1:32.

Shanyinde M, Pickering RM, Weatherall M. Questions asked and answered in pilot and feasibility randomized controlled trials. BMC Med Res Methodol. 2011;11(1):117.

Teare MD, Dimairo M, Shephard N, Hayman A, Whitehead A, Walters SJ. Sample size requirements to estimate key design parameters from external pilot randomised controlled trials: a simulation study. Trials. 2014;15(1):264.

Thabane L, Lancaster G. Improving the efficiency of trials using innovative pilot designs: the next phase in the conduct and reporting of pilot and feasibility studies. Pilot Feasibility Stud. 2017;4(1):14.

Thabane L, Ma J, Chu R, Cheng J, Ismaila A, Rios LP, et al. A tutorial on pilot studies: the what, why and how. BMC Med Res Methodol. 2010;10:1.

Westlund E, Stuart EA. The nonuse, misuse, and proper use of pilot studies in experimental evaluation research. Am J Eval. 2016;38(2):246–61.

McKay H, Naylor PJ, Lau E, Gray SM, Wolfenden L, Milat A, et al. Implementation and scale-up of physical activity and behavioural nutrition interventions: an evaluation roadmap. Int J Behav Nutr Phys Act. 2019;16(1):102.

Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217–26.

Equator Network. Standards for reporting implementation studies (StaRI) statement 2017 [Available from: http://www.equator-network.org/reporting-guidelines/stari-statement/ ].

Vandenbroucke JP, von Elm E, Altman DG, Gøtzsche PC, Mulrow CD, Pocock SJ, et al. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): explanation and elaboration. PLoS Med. 2007;4(10):e297.

Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687.

Schliep ME, Alonzo CN, Morris MA. Beyond RCTs: innovations in research design and methods to advance implementation science. Evid Based Commun Assess Inter. 2017;11(3-4):82–98.

Cabassa LJ, Stefancic A, O'Hara K, El-Bassel N, Lewis-Fernández R, Luchsinger JA, et al. Peer-led healthy lifestyle program in supportive housing: study protocol for a randomized controlled trial. Trials. 2015;16:388.

Landes SJ, McBain SA, Curran GM. Reprint of: An introduction to effectiveness-implementation hybrid designs. J Psychiatr Res. 2020;283:112630.

Barnes C, Grady A, Nathan N, Wolfenden L, Pond N, McFayden T, et al. A pilot randomised controlled trial of a web-based implementation intervention to increase child intake of fruit and vegetables within childcare centres. Pilot Feasibility Stud. 2020. https://doi.org/10.1186/s40814-020-00707-w.

Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136.

Department of Veterans Health Affairs. Implementation Guide. Health Services Research & Development, Quality Enhancement Research Initiative. 2013.

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258.

Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12(1):108.

Lewis CC, Mettert KD, Dorsey CN, Martinez RG, Weiner BJ, Nolen E, et al. An updated protocol for a systematic review of implementation-related measures. Syst Rev. 2018;7(1):66.

Glasgow RE, Klesges LM, Dzewaltowski DA, Estabrooks PA, Vogt TM. Evaluating the impact of health promotion programs: using the RE-AIM framework to form summary measures for decision making involving complex issues. Health Educ Res. 2006;21(5):688–94.

Green L, Kreuter M. Health promotion planning: an educational and ecological approach. Mountain View: Mayfield Publishing; 1999.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Admin Pol Ment Health. 2011;38(2):65–76.

Fernandez ME, Ten Hoor GA, van Lieshout S, Rodriguez SA, Beidas RS, Parcel G, et al. Implementation mapping: using intervention mapping to develop implementation strategies. Front Public Health. 2019;7:158.

Lewis CC, Weiner BJ, Stanick C, Fischer SM. Advancing implementation science through measure development and evaluation: a study protocol. Implement Sci. 2015;10:102.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Clinton-McHarg T, Yoong SL, Tzelepis F, Regan T, Fielding A, Skelton E, et al. Psychometric properties of implementation measures for public health and community settings and mapping of constructs against the consolidated framework for implementation research: a systematic review. Implement Sci. 2016;11(1):148.

Moore CG, Carter RE, Nietert PJ, Stewart PW. Recommendations for planning pilot studies in clinical and translational research. Clin Transl Sci. 2011;4(5):332–7.

Pérez D, Van der Stuyft P, Zabala MC, Castro M, Lefèvre P. A modified theoretical framework to assess implementation fidelity of adaptive public health interventions. Implement Sci. 2016;11(1):91.

Stirman SW, Miller CJ, Toder K, Calloway A. Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implement Sci. 2013;8:65.

Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implement Sci. 2007;2:40.

Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41(3-4):327–50.

Saunders RP, Evans MH, Joshi P. Developing a process-evaluation plan for assessing health promotion program implementation: a how-to guide. Health Promot Pract. 2005;6(2):134–47.

Higgins JP, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration's tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928.

Wyse RJ, Wolfenden L, Campbell E, Brennan L, Campbell KJ, Fletcher A, et al. A cluster randomised trial of a telephone-based intervention for parents to increase fruit and vegetable consumption in their 3- to 5-year-old children: study protocol. BMC Public Health. 2010;10:216.

CONSORT Transparent Reporting of Trials. Pilot and feasibility trials. 2016. Available from: http://www.consort-statement.org/extensions/overview/pilotandfeasibility.

EQUATOR Network. Enhancing the QUAlity and Transparency Of health Research. Available from: https://www.equator-network.org/.


Acknowledgements

Associate Professor Luke Wolfenden receives salary support from an NHMRC Career Development Fellowship (grant ID: APP1128348) and a Heart Foundation Future Leader Fellowship (grant ID: 101175). Dr Sze Lin Yoong is a postdoctoral research fellow funded by the National Heart Foundation. A/Prof Maureen C. Ashe is supported by the Canada Research Chairs program.

Author information

Authors and affiliations

School of Medicine and Public Health, University of Newcastle, University Drive, Callaghan, NSW 2308, Australia

Nicole Pearson, Sze Lin Yoong & Luke Wolfenden

Hunter New England Population Health, Locked Bag 10, Wallsend, NSW 2287, Australia

School of Exercise Science, Physical and Health Education, Faculty of Education, University of Victoria, PO Box 3015 STN CSC, Victoria, BC, V8W 3P1, Canada

Patti-Jean Naylor

Center for Health Promotion and Prevention Research, University of Texas Health Science Center at Houston School of Public Health, Houston, TX, 77204, USA

Maria Fernandez

Department of Family Practice, University of British Columbia (UBC) and Centre for Hip Health and Mobility, University Boulevard, Vancouver, BC, V6T 1Z3, Canada

Maureen C. Ashe


Contributions

NP and LW led the development of the manuscript. NP, LW, MCA, PN, MF and SY contributed to the drafting and final approval of the manuscript.

Corresponding author

Correspondence to Nicole Pearson.

Ethics declarations

Ethics approval and consent to participate, consent for publication, competing interests.

The authors have no financial or non-financial interests to declare.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1.

Example of a Hybrid Type 1 trial. Summary of publication by Cabassa et al.

Additional file 2.

Example of a Hybrid Type 2 trial. Summary of publication by Barnes et al.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.


About this article

Cite this article

Pearson, N., Naylor, PJ., Ashe, M.C. et al. Guidance for conducting feasibility and pilot studies for implementation trials. Pilot Feasibility Stud 6, 167 (2020). https://doi.org/10.1186/s40814-020-00634-w


Received: 08 January 2020

Accepted: 18 June 2020

Published: 31 October 2020

DOI: https://doi.org/10.1186/s40814-020-00634-w


- Feasibility

- Hybrid trial designs

- Implementation science

Pilot and Feasibility Studies

ISSN: 2055-5784


Why Is a Pilot Study Important in Research?

Are you working on a new research project? We know that you are excited to start, but before you dive in, make sure your study is feasible. You don’t want to end up having to process too many samples at once or realize you forgot to add an essential question to your questionnaire.

What is a Pilot Study?

You can determine the feasibility of your research design with a pilot study before you start. This is a preliminary, small-scale “rehearsal” in which you test the methods you plan to use for your research project. You will use the results to guide the methodology of your large-scale investigation. Pilot studies should be performed for both qualitative and quantitative research. Here, we discuss the importance of the pilot study and how it can save you time, frustration, and resources.

“You never test the depth of a river with both feet.” – African proverb

Components of a Pilot Study

Whether your research is a clinical trial of a medical treatment or a survey in the form of a questionnaire, you want your study to be informative and add value to your research field. Things to consider in your pilot study include:

- Sample size and selection. Your sample needs to be representative of the target study population. Use statistical methods to estimate whether your planned sample size is feasible.

- Determine the criteria for a successful pilot study based on the objectives of your study. How will your pilot study address these criteria?

- When recruiting subjects or collecting samples, ensure that the process is practical and manageable.

- Always test the measurement instrument. This could be a questionnaire, equipment, or methods used. Is it realistic and workable? How can it be improved?

- Data entry and analysis. Run the pilot data through your planned statistical analysis to check whether that analysis is appropriate for your data set.

- Create a flow chart of the process.
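The success criteria and trial analysis mentioned above can be made concrete before any participant is recruited. The sketch below is a minimal, hypothetical example. It assumes you have counted how many people were approached, how many consented, and how many completed the pilot. It then checks those rates against progression thresholds fixed in advance. The names `PilotCounts`, `feasibility_rates` and `check_criteria`, and the 50% and 80% thresholds, are illustrative assumptions rather than standard values.

```python
from dataclasses import dataclass


@dataclass
class PilotCounts:
    """Hypothetical pilot tallies; replace with your own records."""
    approached: int  # people invited to take part
    consented: int   # people who agreed to participate
    completed: int   # participants who finished all study procedures


# Hypothetical progression criteria, fixed before the pilot begins.
CRITERIA = {
    "recruitment_rate": 0.50,  # at least half of those approached consent
    "retention_rate": 0.80,    # at least 80% of consenters complete
}


def feasibility_rates(c: PilotCounts) -> dict[str, float]:
    """Simple feasibility proportions computed from the pilot counts."""
    return {
        "recruitment_rate": c.consented / c.approached,
        "retention_rate": c.completed / c.consented,
    }


def check_criteria(c: PilotCounts, criteria: dict[str, float]) -> dict[str, bool]:
    """True for each criterion whose observed rate meets its threshold."""
    rates = feasibility_rates(c)
    return {name: rates[name] >= threshold for name, threshold in criteria.items()}


if __name__ == "__main__":
    pilot = PilotCounts(approached=40, consented=24, completed=18)
    for name, rate in feasibility_rates(pilot).items():
        print(f"{name}: {rate:.0%}")
    print(check_criteria(pilot, CRITERIA))
```

Suppose 40 people are approached, 24 consent and 18 complete. Recruitment (60%) then passes, but retention (75%) falls short, which would prompt a look at why participants dropped out. Rates from small pilots are imprecise, so a near-miss is better treated as a reason to investigate than as a verdict.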

How to Conduct a Pilot Study

Conducting a pilot study is an essential step in many research projects. Here’s a general guide on how to conduct a pilot study:

Step 1: Define Objectives

Identify which specific aspects of your main study you want to test or evaluate in your pilot study.

Step 2: Evaluate Sample Size

Decide on an appropriate sample size for your pilot study. The pilot sample can be smaller than that of your main study but should still be large enough to provide meaningful feedback.
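One published way to put a number on “large enough” is described by Viechtbauer and colleagues (2015). It treats the pilot as a search for problems: choose the smallest sample in which a problem affecting a proportion π of participants is likely to appear at least once. Solving 1 - (1 - π)^n ≥ γ for n gives n = ln(1 - γ) / ln(1 - π), where γ is the desired probability of seeing the problem. The Python sketch below implements that formula. The function name is hypothetical, and the method addresses problem detection only, not the statistical power of the main study.

```python
import math


def pilot_sample_size(problem_rate: float, confidence: float = 0.95) -> int:
    """Smallest n such that a problem affecting `problem_rate` of participants
    is observed at least once with probability `confidence`.

    Solves 1 - (1 - problem_rate) ** n >= confidence for n.
    """
    if not (0 < problem_rate < 1 and 0 < confidence < 1):
        raise ValueError("problem_rate and confidence must lie strictly between 0 and 1")
    return math.ceil(math.log(1 - confidence) / math.log(1 - problem_rate))


if __name__ == "__main__":
    # A problem affecting 5% of participants, seen with 95% probability.
    print(pilot_sample_size(0.05))  # 59
```

A problem affecting 10% of participants would need 29 pilot participants for the same 95% probability. Rarer problems require much larger pilots, which is one reason a pilot cannot catch everything.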

Step 3: Select Participants

Choose participants who are similar to those you’ll include in the main study. Ensure they match the demographics and characteristics of your target population.

Step 4: Prepare Materials

Develop or gather all the materials needed for the study, such as surveys, questionnaires, protocols, etc.

Step 5: Explain the Purpose of the Study

Briefly explain to participants the purpose of the pilot study and how it will be carried out. Keep track of how long the study takes so you can refine the timeline for the main study.
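Session timings from the pilot can be turned into a rough schedule for the main study. The sketch below assumes three things: you recorded each pilot session's length in minutes, you know the main study's planned sample size, and you know how many testing hours per week are available. The function `project_timeline` and its parameters are hypothetical, and the mean pilot duration is used as the per-participant estimate.

```python
import math
import statistics


def project_timeline(pilot_minutes: list[float], main_n: int,
                     hours_per_week: float) -> tuple[float, int]:
    """Estimate total testing hours and calendar weeks for the main study.

    The mean pilot session length is used as the per-participant estimate.
    """
    mean_minutes = statistics.mean(pilot_minutes)
    total_hours = mean_minutes * main_n / 60
    weeks = math.ceil(total_hours / hours_per_week)
    return total_hours, weeks


if __name__ == "__main__":
    # Hypothetical pilot session lengths in minutes.
    pilot_minutes = [25, 30, 35, 40, 20]
    hours, weeks = project_timeline(pilot_minutes, main_n=120, hours_per_week=8)
    print(f"{hours:.1f} testing hours, about {weeks} weeks")
```

Take five pilot sessions of 20 to 40 minutes (mean 30) and a main sample of 120. That gives 60 testing hours, or 8 weeks at 8 hours per week. Because the mean can understate the time slower participants need, a cautious plan might add a buffer.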

Step 6: Gather Feedback

Gather feedback from participants through surveys, interviews, or discussions. Ask about their understanding of the questions, clarity of instructions, time taken, etc.

Step 7: Analyze Results

Analyze the collected data and identify any trends or patterns. Take note of any unexpected issues, confusion, or problems that arise during the pilot.
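When analyzing pilot questionnaire data, one simple pattern to look for is items that many participants skip, which often signals confusing wording. The sketch below assumes each participant's answers are stored as a dictionary, with `None` for a skipped item. The data, the function names and the 25% threshold are illustrative assumptions, not an established cutoff.

```python
# Pilot answers: one dict per participant; None marks a skipped item.
# These values are invented for illustration.
responses = [
    {"q1": 4, "q2": None, "q3": 2},
    {"q1": 5, "q2": 3, "q3": None},
    {"q1": 3, "q2": None, "q3": 4},
    {"q1": 4, "q2": None, "q3": 5},
    {"q1": 2, "q2": 4, "q3": 3},
]


def item_missing_rates(responses: list[dict[str, object]],
                       items: list[str]) -> dict[str, float]:
    """Proportion of participants who left each item blank.

    A missing key is treated the same as an explicit None.
    """
    n = len(responses)
    return {item: sum(r.get(item) is None for r in responses) / n
            for item in items}


def flag_items(rates: dict[str, float], threshold: float = 0.25) -> list[str]:
    """Items whose missing rate exceeds the (hypothetical) threshold."""
    return sorted(item for item, rate in rates.items() if rate > threshold)


if __name__ == "__main__":
    rates = item_missing_rates(responses, ["q1", "q2", "q3"])
    print(rates)
    print(flag_items(rates))
```

Here q2 is skipped by 3 of 5 participants (60%) and is flagged for review. In practice you would read such flags alongside the feedback gathered in Step 6.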

Step 8: Report Findings

Write a brief report detailing the process, results, and any changes made.

Based on the results of the pilot study, make any necessary adjustments to your study design, materials, procedures, etc. Remember that ethical guidelines for research apply to pilot studies too.


Importance of Pilot Study in Research

Pilot studies should be routinely incorporated into research designs because they:

- Help define the research question

- Test the proposed study design and process. This could alert you to issues which may negatively affect your project.

- Help you learn the techniques related to your study.

- Test the safety of a medical treatment on a small number of participants before it is given to larger groups. This is an essential step in clinical trials.

- Determine the feasibility of your study, so you don’t waste resources and time.

- Provide preliminary data that you can use to improve your chances of funding and to convince stakeholders that you have the necessary skills and expertise to carry out the research successfully.

Are Pilot Studies Always Necessary?

We recommend pilot studies for all research. Scientific research does not always go as planned; therefore, you should optimize the process to minimize unforeseen events. Why risk disastrous and expensive mistakes that could have been discovered and corrected in a pilot study?

An Essential Component for Good Research Design

Pilot work not only gives you a chance to determine whether your project is feasible but also an opportunity to publish its results. You have an ethical and scientific obligation to get your information out to assist other researchers in making the most of their resources.

A successful pilot study does not ensure the success of a research project. However, it does help you assess your approach and practice the necessary techniques required for your project. It will give you an indication of whether your project will work.

