How to Write a Hypothesis for Correlation

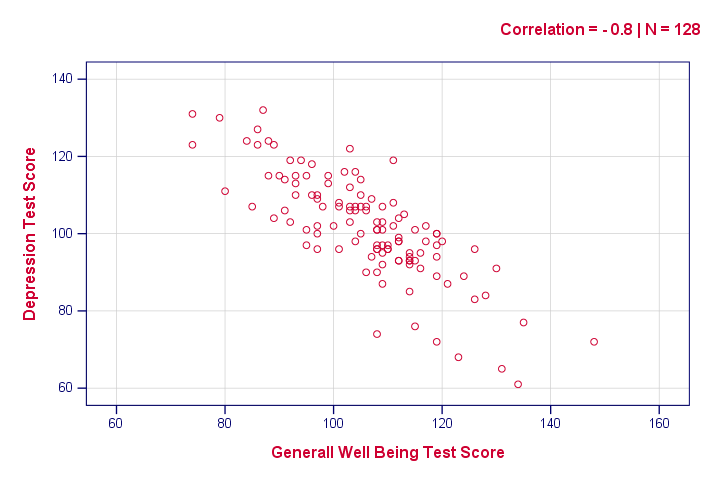

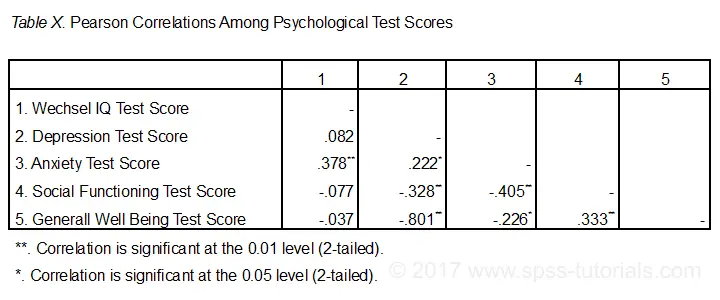

A hypothesis is a testable statement about how something works in the natural world. While some hypotheses predict a causal relationship between two variables, other hypotheses predict a correlation between them. According to the Research Methods Knowledge Base, a correlation is a single number that describes the relationship between two variables. If you do not predict a causal relationship or cannot measure one objectively, state clearly in your hypothesis that you are merely predicting a correlation.

Research the topic in depth before forming a hypothesis. Without adequate knowledge about the subject matter, you will not be able to decide whether to write a hypothesis for correlation or causation. Read the findings of similar experiments before writing your own hypothesis.

Identify the independent variable and dependent variable. Your hypothesis will be concerned with what happens to the dependent variable when a change is made in the independent variable. In a correlation, the two variables undergo changes at the same time in a significant number of cases. However, this does not mean that the change in the independent variable causes the change in the dependent variable.

Construct an experiment to test your hypothesis. In a correlational study, you must be able to measure the relationship between the two variables. This means recording a value of both variables for each subject or observation so that you can calculate a correlation coefficient, which summarizes the strength and direction of the relationship.

Establish the requirements of the experiment with regard to statistical significance. State in advance the significance level you will use to decide whether the observed correlation is statistically significant, that is, unlikely to have arisen by chance if the variables were truly uncorrelated. This threshold will vary considerably depending on the field. In a highly technical scientific study, for instance, you may need a confidence level of 98 percent or higher; but in a sociological study, 90 percent may suffice. Look at other studies in your particular field to determine the requirements for statistical significance.

State the null hypothesis. The null hypothesis gives an exact value that implies there is no correlation between the two variables; for a correlation coefficient, that value is zero. If the results do not differ from this value by a statistically significant amount, then the variables are not proven to correlate.

Record and summarize the results of your experiment. State whether or not the results met the minimum requirements of your hypothesis in terms of both the strength of the correlation and its statistical significance.

- University of New England; Steps in Hypothesis Testing for Correlation; 2000

- Research Methods Knowledge Base; Correlation; William M.K. Trochim; 2006

- Science Buddies; Hypothesis

About the Author

Brian Gabriel has been a writer and blogger since 2009, contributing to various online publications. He earned his Bachelor of Arts in history from Whitworth University.

12.4 Testing the Significance of the Correlation Coefficient

The correlation coefficient, r , tells us about the strength and direction of the linear relationship between x and y . However, the reliability of the linear model also depends on how many observed data points are in the sample. We need to look at both the value of the correlation coefficient r and the sample size n , together.

We perform a hypothesis test of the "significance of the correlation coefficient" to decide whether the linear relationship in the sample data is strong enough to use to model the relationship in the population.

The sample data are used to compute r , the correlation coefficient for the sample. If we had data for the entire population, we could find the population correlation coefficient. But because we have only sample data, we cannot calculate the population correlation coefficient. The sample correlation coefficient, r , is our estimate of the unknown population correlation coefficient.

- The symbol for the population correlation coefficient is ρ , the Greek letter "rho."

- ρ = population correlation coefficient (unknown)

- r = sample correlation coefficient (known; calculated from sample data)
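As a minimal sketch of how r is calculated from sample data, the Python function below applies the standard Pearson formula. The function name `pearson_r` and the small data set are assumptions made up for illustration; they do not come from the text.

```python
import math

def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient r for paired data."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of squares and cross-products of deviations from the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when either variable is constant")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical sample (invented for illustration only)
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
print(f"r = {r:.4f}")
```

Because r comes from one particular sample, a different sample from the same population would generally give a somewhat different value, which is why the significance test that follows is needed.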

The hypothesis test lets us decide whether the value of the population correlation coefficient ρ is "close to zero" or "significantly different from zero". We decide this based on the sample correlation coefficient r and the sample size n .

If the test concludes that the correlation coefficient is significantly different from zero, we say that the correlation coefficient is "significant."

- Conclusion: There is sufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is significantly different from zero.

- What the conclusion means: There is a significant linear relationship between x and y . We can use the regression line to model the linear relationship between x and y in the population.

If the test concludes that the correlation coefficient is not significantly different from zero (it is close to zero), we say that the correlation coefficient is "not significant".

- Conclusion: "There is insufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is not significantly different from zero."

- What the conclusion means: There is not a significant linear relationship between x and y . Therefore, we CANNOT use the regression line to model a linear relationship between x and y in the population.

- If r is significant and the scatter plot shows a linear trend, the line can be used to predict the value of y for values of x that are within the domain of observed x values.

- If r is not significant OR if the scatter plot does not show a linear trend, the line should not be used for prediction.

- If r is significant and if the scatter plot shows a linear trend, the line may NOT be appropriate or reliable for prediction OUTSIDE the domain of observed x values in the data.
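The rule about predicting only inside the observed range of x can be sketched as a small guard function. The function name and the observed range of 65 to 75 are hypothetical, chosen only for illustration; the intercept and slope are those of the third exam/final exam line quoted later in this section.

```python
def predict_within_domain(x, intercept, slope, x_min, x_max):
    """Return the predicted y, refusing to extrapolate outside [x_min, x_max]."""
    if not (x_min <= x <= x_max):
        raise ValueError(
            f"x = {x} is outside the observed domain [{x_min}, {x_max}]; "
            "the line may not be reliable there"
        )
    return intercept + slope * x

# Line of best fit from the text: y-hat = -173.51 + 4.83x
# The observed x range (65 to 75) is an assumption for this sketch.
y_hat = predict_within_domain(73, -173.51, 4.83, x_min=65, x_max=75)
print(f"predicted final exam score: {y_hat:.2f}")
```

Raising an error rather than returning a number is one possible design choice; it makes accidental extrapolation hard to overlook.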

PERFORMING THE HYPOTHESIS TEST

- Null Hypothesis: H 0 : ρ = 0

- Alternate Hypothesis: H a : ρ ≠ 0

WHAT THE HYPOTHESES MEAN IN WORDS:

- Null Hypothesis H 0 : The population correlation coefficient IS NOT significantly different from zero. There IS NOT a significant linear relationship (correlation) between x and y in the population.

- Alternate Hypothesis H a : The population correlation coefficient IS significantly DIFFERENT FROM zero. There IS A SIGNIFICANT LINEAR RELATIONSHIP (correlation) between x and y in the population.

DRAWING A CONCLUSION: There are two methods of making the decision. The two methods are equivalent and give the same result.

- Method 1: Using the p -value

- Method 2: Using a table of critical values

In this chapter of this textbook, we will always use a significance level of 5%, α = 0.05.

Using the p -value method, you could choose any appropriate significance level you want; you are not limited to using α = 0.05. But the table of critical values provided in this textbook assumes that we are using a significance level of 5%, α = 0.05. (If we wanted to use a different significance level than 5% with the critical value method, we would need different tables of critical values that are not provided in this textbook.)

METHOD 1: Using a p -value to make a decision

Using the TI-83, 83+, 84, 84+ calculator.

To calculate the p-value using LinRegTTest: On the LinRegTTest input screen, on the line prompt for β or ρ, highlight "≠ 0". The output screen shows the p-value on the line that reads "p =". (Most computer statistical software can calculate the p-value.)

If the p-value is less than the significance level (α = 0.05):

- Decision: Reject the null hypothesis.

- Conclusion: "There is sufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is significantly different from zero."

If the p-value is NOT less than the significance level (α = 0.05):

- Decision: DO NOT REJECT the null hypothesis.

- Conclusion: "There is insufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is NOT significantly different from zero."

- You will use technology to calculate the p -value. The following describes the calculations to compute the test statistics and the p -value:

- The p -value is calculated using a t -distribution with n - 2 degrees of freedom.

- The formula for the test statistic is $t = \dfrac{r\sqrt{n-2}}{\sqrt{1-r^{2}}}$. The value of the test statistic, t, is shown in the computer or calculator output along with the p-value. The test statistic t has the same sign as the correlation coefficient r.

- The p -value is the combined area in both tails.

An alternative way to calculate the p -value (p) given by LinRegTTest is the command 2*tcdf(abs(t),10^99, n-2) in 2nd DISTR.
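For readers without a TI calculator, the same calculation can be sketched in plain Python. This is an illustrative sketch, not the calculator's algorithm: the function names are assumptions, and the tail area of the t-distribution is approximated by Simpson's rule rather than by a library routine.

```python
import math

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))

def two_tailed_p(t, df, steps=2000):
    """Combined area in both tails beyond |t| (steps must be even)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    # Simpson's rule for the area between 0 and |t|
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3
    return max(0.0, 1.0 - 2.0 * central_half)

def correlation_t_test(r, n):
    """Return (t, p) for H0: rho = 0, using n - 2 degrees of freedom."""
    if abs(r) >= 1:
        raise ValueError("the test statistic is undefined when |r| = 1")
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1 - r * r)
    return t, two_tailed_p(t, df)

# Values from the third exam/final exam example in the text
t_stat, p_value = correlation_t_test(r=0.6631, n=11)
print(f"t = {t_stat:.3f}, p = {p_value:.3f}")
```

With r = 0.6631 and n = 11 this gives t of about 2.66 and a p-value of about 0.026, in line with the calculator result quoted in the example that follows.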

- Consider the third exam/final exam example.

- The line of best fit is: ŷ = -173.51 + 4.83 x with r = 0.6631 and there are n = 11 data points.

- Can the regression line be used for prediction? Given a third exam score ( x value), can we use the line to predict the final exam score (predicted y value)?

- H 0 : ρ = 0

- H a : ρ ≠ 0

- The p -value is 0.026 (from LinRegTTest on your calculator or from computer software).

- The p -value, 0.026, is less than the significance level of α = 0.05.

- Decision: Reject the Null Hypothesis H 0

- Conclusion: There is sufficient evidence to conclude that there is a significant linear relationship between the third exam score ( x ) and the final exam score ( y ) because the correlation coefficient is significantly different from zero.

Because r is significant and the scatter plot shows a linear trend, the regression line can be used to predict final exam scores.

METHOD 2: Using a table of Critical Values to make a decision

The 95% Critical Values of the Sample Correlation Coefficient Table can be used to give you a good idea of whether the computed value of r is significant or not. Compare r to the appropriate critical value in the table. If r is not between the positive and negative critical values, then the correlation coefficient is significant. If r is significant, then you may want to use the line for prediction.

Example 12.7

Suppose you computed r = 0.801 using n = 10 data points. df = n - 2 = 10 - 2 = 8. The critical values associated with df = 8 are -0.632 and + 0.632. If r < negative critical value or r > positive critical value, then r is significant. Since r = 0.801 and 0.801 > 0.632, r is significant and the line may be used for prediction. If you view this example on a number line, it will help you.

Try It 12.7

For a given line of best fit, you computed that r = 0.6501 using n = 12 data points and the critical value is 0.576. Can the line be used for prediction? Why or why not?

Example 12.8

Suppose you computed r = –0.624 with 14 data points. df = 14 – 2 = 12. The critical values are –0.532 and 0.532. Since –0.624 < –0.532, r is significant and the line can be used for prediction.

Try It 12.8

For a given line of best fit, you compute that r = 0.5204 using n = 9 data points, and the critical value is 0.666. Can the line be used for prediction? Why or why not?

Example 12.9

Suppose you computed r = 0.776 and n = 6. df = 6 – 2 = 4. The critical values are –0.811 and 0.811. Since –0.811 < 0.776 < 0.811, r is not significant, and the line should not be used for prediction.
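The decision rule used in Examples 12.7 through 12.9 reduces to comparing the absolute value of r with the positive critical value. A minimal sketch follows; the function name is an assumption, and the critical values are simply the table values quoted in those examples.

```python
def r_is_significant(r, critical_value):
    """True if r lies outside the interval (-critical_value, +critical_value)."""
    return abs(r) > abs(critical_value)

# (r, positive critical value) pairs taken from Examples 12.7, 12.8, and 12.9
examples = {
    "12.7": (0.801, 0.632),
    "12.8": (-0.624, 0.532),
    "12.9": (0.776, 0.811),
}
results = {name: r_is_significant(r, cv) for name, (r, cv) in examples.items()}
print(results)
```

The function does not look up the critical value itself; that still comes from the table for the appropriate df = n - 2.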

Try It 12.9

For a given line of best fit, you compute that r = –0.7204 using n = 8 data points, and the critical value is 0.707. Can the line be used for prediction? Why or why not?

THIRD-EXAM vs FINAL-EXAM EXAMPLE: critical value method

Consider the third exam/final exam example. The line of best fit is: ŷ = –173.51 + 4.83x with r = 0.6631 and there are n = 11 data points. Can the regression line be used for prediction? Given a third-exam score (x value), can we use the line to predict the final exam score (predicted y value)?

- Use the "95% Critical Value" table for r with df = n – 2 = 11 – 2 = 9.

- The critical values are –0.602 and +0.602

- Since 0.6631 > 0.602, r is significant.

- Conclusion: There is sufficient evidence to conclude that there is a significant linear relationship between the third exam score ( x ) and the final exam score ( y ) because the correlation coefficient is significantly different from zero.
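The tabulated critical values are tied to the t statistic of Method 1, which is one way to see why the two methods agree. As a sketch (the symbols $r_{\text{crit}}$ and $t_{\text{crit}}$, and the standard t-table value 2.262 for df = 9 at a two-tailed α = 0.05, are introduced here for illustration and are not part of the original text), solving the test-statistic formula for r gives:

```latex
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}}
\;\Longrightarrow\;
t^{2}\,(1-r^{2}) = r^{2}\,(n-2)
\;\Longrightarrow\;
r_{\text{crit}} = \frac{t_{\text{crit}}}{\sqrt{t_{\text{crit}}^{2} + (n-2)}}

% For n = 11 (df = 9) and \alpha = 0.05, two-tailed:
r_{\text{crit}} = \frac{2.262}{\sqrt{2.262^{2} + 9}} \approx 0.602
```

This reproduces the critical value of 0.602 used in the third exam/final exam example.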

Example 12.10

Suppose you computed the following correlation coefficients. Using the table at the end of the chapter, determine if r is significant and the line of best fit associated with each r can be used to predict a y value. If it helps, draw a number line.

- r = –0.567 and the sample size, n , is 19. The df = n – 2 = 17. The critical value is –0.456. –0.567 < –0.456 so r is significant.

- r = 0.708 and the sample size, n , is nine. The df = n – 2 = 7. The critical value is 0.666. 0.708 > 0.666 so r is significant.

- r = 0.134 and the sample size, n , is 14. The df = 14 – 2 = 12. The critical value is 0.532. 0.134 is between –0.532 and 0.532 so r is not significant.

- r = 0 and the sample size, n , is five. No matter what the dfs are, r = 0 is between the two critical values so r is not significant.

Try It 12.10

For a given line of best fit, you compute that r = 0 using n = 100 data points. Can the line be used for prediction? Why or why not?

Assumptions in Testing the Significance of the Correlation Coefficient

Testing the significance of the correlation coefficient requires that certain assumptions about the data are satisfied. The premise of this test is that the data are a sample of observed points taken from a larger population. We have not examined the entire population because it is not possible or feasible to do so. We are examining the sample to draw a conclusion about whether the linear relationship that we see between x and y in the sample data provides strong enough evidence so that we can conclude that there is a linear relationship between x and y in the population.

The regression line equation that we calculate from the sample data gives the best-fit line for our particular sample. We want to use this best-fit line for the sample as an estimate of the best-fit line for the population. Examining the scatterplot and testing the significance of the correlation coefficient helps us determine if it is appropriate to do this.

The assumptions underlying the test of significance are:

1. There is a linear relationship in the population that models the average value of y for varying values of x. In other words, the expected value of y for each particular x value lies on a straight line in the population. (We do not know the equation for the line for the population. Our regression line from the sample is our best estimate of this line in the population.)

2. The y values for any particular x value are normally distributed about the line. This implies that there are more y values scattered closer to the line than are scattered farther away. Assumption (1) implies that these normal distributions are centered on the line: the means of these normal distributions of y values lie on the line.

3. The standard deviations of the population y values about the line are equal for each value of x. In other words, each of these normal distributions of y values has the same shape and spread about the line.

4. The residual errors are mutually independent (no pattern).

5. The data are produced from a well-designed, random sample or randomized experiment.

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/introductory-statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Introductory Statistics

- Publication date: Sep 19, 2013

- Location: Houston, Texas

- Book URL: https://openstax.org/books/introductory-statistics/pages/1-introduction

- Section URL: https://openstax.org/books/introductory-statistics/pages/12-4-testing-the-significance-of-the-correlation-coefficient

© Jun 23, 2022 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Statistics By Jim

Making statistics intuitive

Interpreting Correlation Coefficients

By Jim Frost

What are Correlation Coefficients?

Correlation coefficients measure the strength of the relationship between two variables. A correlation between variables indicates that as one variable changes in value, the other variable tends to change in a specific direction. Understanding that relationship is useful because we can use the value of one variable to predict the value of the other variable. For example, height and weight are correlated—as height increases, weight also tends to increase. Consequently, if we observe an individual who is unusually tall, we can predict that his weight is also above the average.

In statistics, correlation coefficients are a quantitative assessment that measures both the direction and the strength of this tendency to vary together. There are different types of correlation coefficients that you can use for different kinds of data. In this post, I cover the most common type of correlation: Pearson’s correlation coefficient.

Before we get into the numbers, let’s graph some data first so we can understand the concept behind what we are measuring.

Graph Your Data to Find Correlations

Scatterplots are a great way to check quickly for correlation between pairs of continuous data. The scatterplot below displays the height and weight of pre-teenage girls. Each dot on the graph represents an individual girl and her combination of height and weight. These data are actual data that I collected during an experiment.

At a glance, you can see that there is a correlation between height and weight. As height increases, weight also tends to increase. However, it’s not a perfect relationship. If you look at a specific height, say 1.5 meters, you can see that there is a range of weights associated with it. You can also find short people who weigh more than taller people. However, the general tendency that height and weight increase together is unquestionably present—a correlation exists.

Pearson’s correlation coefficient takes all of the data points on this graph and represents them as a single number. In this case, the statistical output below indicates that the Pearson’s correlation coefficient is 0.694.

What do the Pearson correlation coefficient and p-value mean? We’ll interpret the output soon. First, let’s look at a range of possible correlation coefficients so we can understand how our height and weight example fits in.

How to Interpret Pearson Correlation Coefficients

Pearson’s correlation coefficient is represented by the Greek letter rho ( ρ ) for the population parameter and r for a sample statistic. This correlation coefficient is a single number that measures both the strength and direction of the linear relationship between two continuous variables. Values can range from -1 to +1.

The greater the absolute value of the Pearson correlation coefficient, the stronger the relationship.

- The extreme values of -1 and 1 indicate a perfectly linear relationship where a change in one variable is accompanied by a perfectly consistent change in the other. For these relationships, all of the data points fall on a line. In practice, you won’t see either type of perfect relationship.

- A coefficient of zero represents no linear relationship. As one variable increases, there is no tendency in the other variable to either increase or decrease.

- When the value is in-between 0 and +1/-1, there is a relationship, but the points don’t all fall on a line. As r approaches -1 or 1, the strength of the relationship increases and the data points tend to fall closer to a line.

The sign of the Pearson correlation coefficient represents the direction of the relationship.

- Positive coefficients indicate that when the value of one variable increases, the value of the other variable also tends to increase. Positive relationships produce an upward slope on a scatterplot.

- Negative coefficients indicate that when the value of one variable increases, the value of the other variable tends to decrease. Negative relationships produce a downward slope.

Statisticians consider Pearson’s correlation coefficients to be a standardized effect size because they indicate the strength of the relationship between variables using unitless values that fall within a standardized range of -1 to +1. Effect sizes help you understand how important the findings are in a practical sense. To learn more about unstandardized and standardized effect sizes, read my post about Effect Sizes in Statistics.

Learn how to calculate correlation in my post, Correlation Coefficient Formula Walkthrough.

Covariance is an unstandardized form of correlation. Learn about it in my posts:

- Covariance: Definition, Formula & Example

- Covariances vs Correlation: Understanding the Differences

Examples of Positive and Negative Correlation Coefficients

A positive correlation example is the relationship between the speed of a wind turbine and the amount of energy it produces. As the turbine speed increases, electricity production also increases.

A negative correlation example is the relationship between outdoor temperature and heating costs. As the temperature increases, heating costs decrease.

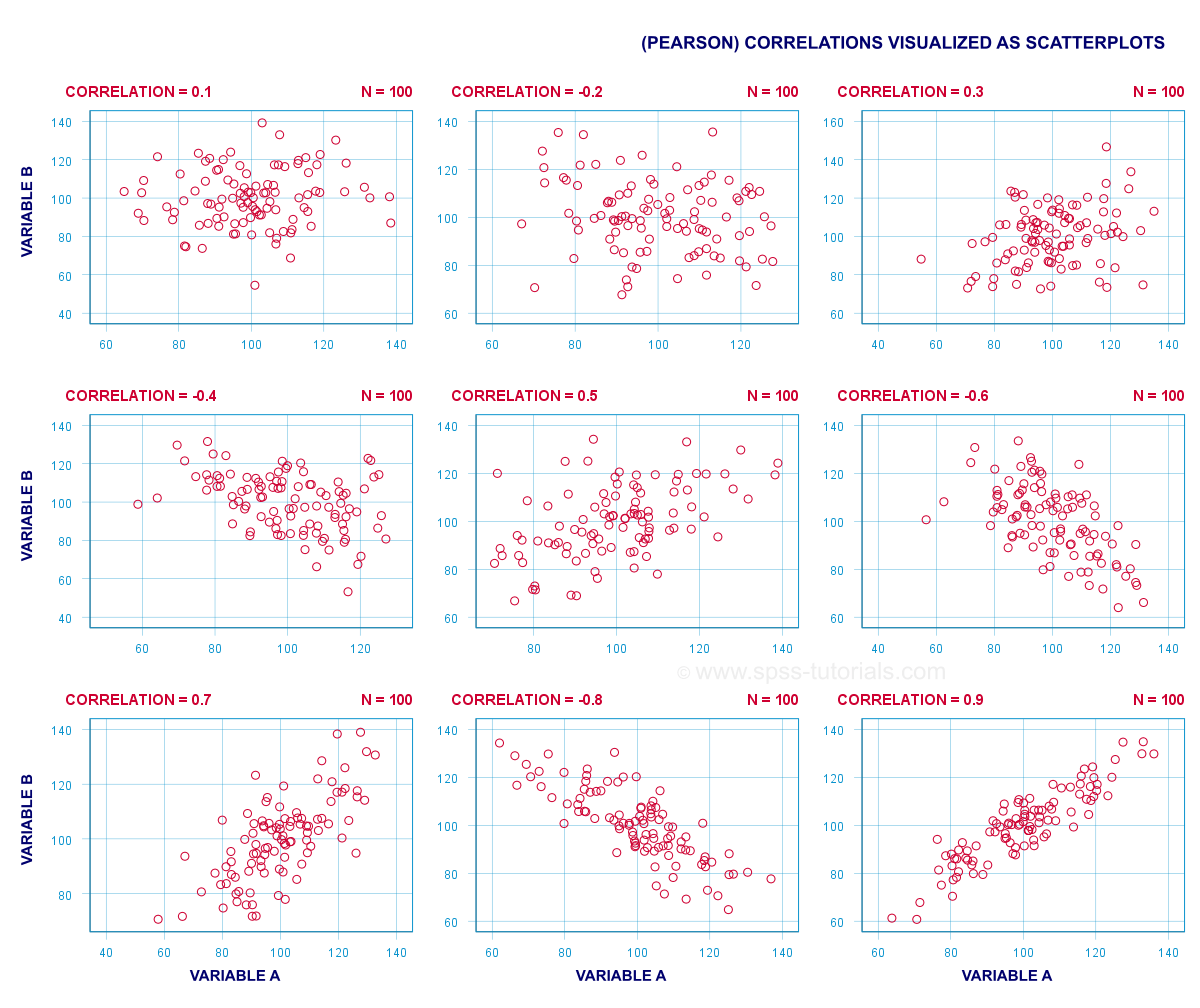

Graphs for Different Correlation Coefficients

Graphs always help bring concepts to life. The scatterplots below represent a spectrum of different Pearson correlation coefficients. I’ve held the horizontal and vertical scales of the scatterplots constant to allow for valid comparisons between them.

Discussion about the Scatterplots

For the scatterplots above, I created one positive correlation between the variables and one negative relationship between the variables. Then, I varied only the amount of dispersion between the data points and the line that defines the relationship. That process illustrates how correlation measures the strength of the relationship. The stronger the relationship, the closer the data points fall to the line. I didn’t include plots for weaker correlation coefficients that are closer to zero than 0.6 and -0.6 because they start to look like blobs of dots and it’s hard to see the relationship.

A common misinterpretation is assuming that negative Pearson correlation coefficients indicate that there is no relationship. After all, a negative correlation sounds suspiciously like no relationship. However, the scatterplots for the negative correlations display real relationships. For negative correlation coefficients, high values of one variable are associated with low values of another variable. For example, there is a negative correlation coefficient for school absences and grades. As the number of absences increases, the grades decrease.

Earlier I mentioned how crucial it is to graph your data to understand them better. However, a quantitative measurement of the relationship does have an advantage. Graphs are a great way to visualize the data, but the scaling can exaggerate or weaken the appearance of a correlation. Additionally, the automatic scaling in most statistical software tends to make all data look similar.

Fortunately, Pearson’s correlation coefficients are unaffected by scaling issues. Consequently, a statistical assessment is better for determining the precise strength of the relationship.
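To make the scaling point concrete, here is a small sketch showing that changing the units of measurement leaves r unchanged. The data values and the helper name `pearson_r` are made up for illustration; they are not the height and weight data from my experiment.

```python
import math

def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient for paired data."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical heights (meters) and weights (kilograms)
heights_m = [1.30, 1.35, 1.42, 1.48, 1.55, 1.60]
weights_kg = [30.0, 33.5, 38.0, 37.0, 45.5, 48.0]

# The same measurements expressed in centimeters and pounds
heights_cm = [h * 100 for h in heights_m]
weights_lb = [w * 2.20462 for w in weights_kg]

r_metric = pearson_r(heights_m, weights_kg)
r_rescaled = pearson_r(heights_cm, weights_lb)
print(f"{r_metric:.6f} {r_rescaled:.6f}")
```

Both calls return the same value (up to floating-point rounding), because multiplying a variable by a positive constant rescales the numerator and denominator of the formula by the same factor.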

Graphs and the relevant statistical measures often work better in tandem.

Pearson’s Correlation Coefficients Measure Linear Relationship

Pearson’s correlation coefficients measure only linear relationships. Consequently, if your data contain a curvilinear relationship, the Pearson correlation coefficient can understate it or miss it entirely. For example, the correlation for the data in the scatterplot below is zero. However, there is a relationship between the two variables; it’s just not linear.

This example illustrates another reason to graph your data! Just because the coefficient is near zero, it doesn’t necessarily indicate that there is no relationship.
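A quick way to see this for yourself is to compute r for a perfectly curved relationship. The sketch below uses made-up data where y is exactly x squared over a range of x values that is symmetric around zero; the helper name `pearson_r` is an assumption for illustration.

```python
import math

def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient for paired data."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# y is completely determined by x, but the relationship is a parabola
x = [-3, -2, -1, 0, 1, 2, 3]
y = [value ** 2 for value in x]

r = pearson_r(x, y)
print(r)
```

Here r comes out as zero even though knowing x tells you y exactly, because the upward and downward halves of the curve cancel out in the linear measure.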

Spearman’s correlation is a nonparametric alternative to Pearson’s correlation coefficient. Use Spearman’s correlation for nonlinear, monotonic relationships and for ordinal data. For more information, read my post Spearman’s Correlation Explained!

Hypothesis Test for Correlation Coefficients

Correlation coefficients have a hypothesis test. As with any hypothesis test, this test takes sample data and evaluates two mutually exclusive statements about the population from which the sample was drawn. For Pearson correlations, the two hypotheses are the following:

- Null hypothesis: There is no linear relationship between the two variables. ρ = 0.

- Alternative hypothesis: There is a linear relationship between the two variables. ρ ≠ 0.

Correlation coefficients that equal zero indicate no linear relationship exists. If your p-value is less than your significance level, the sample contains sufficient evidence to reject the null hypothesis and conclude that the Pearson correlation coefficient does not equal zero. In other words, the sample data support the notion that the relationship exists in the population.

Interpreting our Height and Weight Correlation Example

Now that we have seen a range of positive and negative relationships, let’s see how our Pearson correlation coefficient of 0.694 fits in. We know that it’s a positive relationship. As height increases, weight tends to increase. Regarding the strength of the relationship, the graph shows that it’s not a very strong relationship where the data points tightly hug a line. However, it’s not an entirely amorphous blob with a very low correlation. It’s somewhere in between. That description matches our moderate correlation coefficient of 0.694.

For the hypothesis test, the output displays a p-value of 0.000. The p-value is not literally zero; it is smaller than 0.0005 and rounds to zero at three decimal places. This p-value is less than any reasonable significance level. Consequently, we can reject the null hypothesis and conclude that the relationship is statistically significant. The sample data support the notion that the relationship between height and weight exists in the population of preteen girls.

Correlation Does Not Imply Causation

I’m sure you’ve heard this expression before, and it is a crucial warning. Correlation between two variables indicates that changes in one variable are associated with changes in the other variable. However, correlation does not mean that the changes in one variable actually cause the changes in the other variable.

Sometimes it is clear that there is a causal relationship. For the height and weight data, it makes sense that adding more vertical structure to a body causes the total mass to increase. Or, increasing the wattage of lightbulbs causes the light output to increase.

However, in other cases, a causal relationship is not possible. For example, ice cream sales and shark attacks have a positive correlation coefficient. Clearly, selling more ice cream does not cause shark attacks (or vice versa). Instead, a third variable, outdoor temperatures, causes changes in the other two variables. Higher temperatures increase both sales of ice cream and the number of swimmers in the ocean, which creates the apparent relationship between ice cream sales and shark attacks.

Beware of spurious correlations!

In statistics, you typically need to perform a randomized, controlled experiment to determine that a relationship is causal rather than merely correlational. Conversely, correlational studies will find relationships quickly and easily, but they are not suitable for establishing causality.

Learn more about Correlation vs. Causation: Understanding the Differences.

Related posts: Using Random Assignment in Experiments and Observational Studies

How Strong of a Correlation is Considered Good?

What is a good correlation? How high should correlation coefficients be? These are commonly asked questions. I have seen several schemes that attempt to classify correlations as strong, medium, and weak.

However, there is only one correct answer. A Pearson correlation coefficient should accurately reflect the strength of the relationship. Take a look at the correlation between the height and weight data, 0.694. It’s not a very strong relationship, but it accurately represents our data. An accurate representation is the best-case scenario for using a statistic to describe an entire dataset.

The strength of any relationship naturally depends on the specific pair of variables. Some research questions involve weaker relationships than other subject areas. Case in point, humans are hard to predict. Studies that assess relationships involving human behavior tend to have correlation coefficients weaker than +/- 0.6.

However, if you analyze two variables in a physical process, and have very precise measurements, you might expect correlations near +1 or -1. There is no one-size-fits-all answer for how strong a relationship should be. The correct values for correlation coefficients depend on your study area.

Taking Correlation to the Next Level with Regression Analysis

Wouldn’t it be nice if instead of just describing the strength of the relationship between height and weight, we could define the relationship itself using an equation? Regression analysis does just that. That analysis finds the line and corresponding equation that provides the best fit to our dataset. We can use that equation to understand how much weight increases with each additional unit of height and to make predictions for specific heights. Read my post where I talk about the regression model for the height and weight data .

Regression analysis allows us to expand on correlation in other ways. If we have more variables that explain changes in weight, we can include them in the model and potentially improve our predictions. And, if the relationship is curved, we can still fit a regression model to the data.

Additionally, a form of the Pearson correlation coefficient shows up in regression analysis. R-squared is a primary measure of how well a regression model fits the data. This statistic represents the percentage of variation in one variable that other variables explain. For a pair of variables, R-squared is simply the square of the Pearson’s correlation coefficient. For example, squaring the height-weight correlation coefficient of 0.694 produces an R-squared of 0.482, or 48.2%. In other words, height explains about half the variability of weight in preteen girls.
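As a quick check on the claim that R-squared for a pair of variables is just the square of Pearson’s r, here is a small Python sketch. The heights and weights are hypothetical values invented for illustration; they are not the dataset from this post.

```python
import math

# Hypothetical heights (m) and weights (kg), made up for illustration only.
heights = [1.40, 1.45, 1.50, 1.52, 1.55, 1.58, 1.60, 1.63]
weights = [36.0, 42.5, 40.0, 47.0, 44.5, 52.0, 48.0, 55.5]

n = len(heights)
mean_h = sum(heights) / n
mean_w = sum(weights) / n
sxy = sum((h - mean_h) * (w - mean_w) for h, w in zip(heights, weights))
sxx = sum((h - mean_h) ** 2 for h in heights)
syy = sum((w - mean_w) ** 2 for w in weights)

# Pearson's correlation coefficient.
r = sxy / math.sqrt(sxx * syy)

# Least-squares line: weight = intercept + slope * height.
slope = sxy / sxx
intercept = mean_w - slope * mean_h

# R-squared = 1 - (residual sum of squares / total sum of squares).
ss_res = sum((w - (intercept + slope * h)) ** 2 for h, w in zip(heights, weights))
r_squared = 1 - ss_res / syy

print(f"r = {r:.3f}, r squared = {r**2:.3f}, regression R-squared = {r_squared:.3f}")
print(f"Value from the post: 0.694 squared = {0.694**2:.3f}")
```

The two routes, squaring r and computing R-squared from the fitted line, should agree up to floating-point rounding for any simple regression with one predictor.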

If you’re learning about statistics and like the approach I use in my blog, check out my Introduction to Statistics book! It’s available at Amazon and other retailers.

Reader Interactions

May 7, 2024 at 9:18 am

Is there any benefit to doing both a correlation and a regression test? I don’t think there is – I believe that a regression output will give you the same information a correlation output would plus more. Please could you let me know if that is correct or am I missing something?

May 7, 2024 at 2:08 pm

Hi Charlotte,

In general, you are correct for simple regression, where you have one independent variable and the dependent variable. The R-squared for that model is literally the square of the Pearson’s correlation (r) for those two variables. As you mention, regression gives you additional output along with the strength of the relationship.

But there are a few caveats.

Regression is much more flexible than correlation because it allows you to add other variables, fit curvature and include interaction effects. For example, regression allows you to fit curvature between the two variables using polynomials. So, there are cases where using Pearson’s correlation is inappropriate because the data violate some of the assumptions but regression analysis can handle those data acceptably.

But what you say is correct when you’re looking at a straight line relationship between a pair of variables. In that specific case, simple regression and Pearson’s correlation provide consistent information with regression providing more details.

March 12, 2024 at 4:11 am

Hi If you are finding the trend between one type of quantitative discrete data and one type of qualitative ordinal data, what correlation test do you use?

September 9, 2023 at 4:46 am

It could be that the sharks are using ice cream as bait. Maybe the sharks are smarter than we think… Seriously, the ice cream as a cause is not likely, but sometimes a perfectly sensible hypothesis with lots of data behind it can be just plain wrong.

September 9, 2023 at 11:43 pm

It can be wrong in a causal sense, but if ice cream sales have a non-causal correlation with the number of shark attacks, it can still help you make predictions. Now, if you thought limiting ice cream sales would reduce shark attacks, that’s not going to work!

June 9, 2023 at 1:56 am

What is to be done when two positive items show a negative correlation within one variable, e.g., an increase in house help decreases no interruptions in work? It’s confusing as both are positive questions.

June 10, 2023 at 1:09 am

It’s possibly the result of other variables, known as confounding variables (or confounders), that you might not even have recorded. For example, there might be some other variable that correlates with both “house help” and “no interruptions in work” and explains the unexpected negative correlation. Perhaps individuals with house help have more activities occurring throughout the day at home. Those activities would then cause more interruptions. So, you might have a chain of correlations where “home activities” and “house help” have a positive correlation. Additionally, “home activities” and “no interruptions” might have a negative correlation. Given this arrangement, it wouldn’t be surprising to see a negative correlation between “house help” and “no interruptions.”

It goes to show that you need to understand the larger context when analyzing data. Technically, this phenomenon is known as omitted variable bias . Your model (pairwise correlation) omits an important variable (a confounder) which is biasing the results. Click the link to learn more.

The answer is to identify and record the confounding variables and include them in your model, likely a regression model or partial correlation.

May 8, 2023 at 12:58 pm

What if my Pearson’s r is 0.187 and the p-value is 0.001? Do I reject the null hypothesis?

May 8, 2023 at 2:56 pm

Yes! That p-value is below any reasonable significance level. Hence, you can reject the null hypothesis. However, be aware that while the correlation is statistically significant, it is so weak that it probably isn’t practically significant in the real world. In other words, it probably exists in the population you’re assessing but it is too weak to be noticeable/meaningful.

November 30, 2022 at 4:53 am

Thank you, Jim. I really appreciate your help. I will read your post about statistical v practical significance – that sounds really useful. I love how you explain things in such an accessible way.

I have one more question that I was hoping you would be able to help me with, please?

If I have done a correlation test and I have found an extremely weak negative relationship (e.g., -.02) but the relationship is not statistically significant, would this mean that, although I have found a very weak negative correlation between the variables in the sample data, it would be unlikely to be found in the population? Therefore, I would fail to reject the null hypothesis that the correlation in the population equals zero.

Thank you again for your help and for this wonderful blog.

December 1, 2022 at 1:57 am

You’re very welcome!

In the case where the correlation is not significant, it indicates that you have insufficient evidence to conclude that it does not equal zero. That’s a mouthful but there’s a reason for the convoluted wording. Insignificant results don’t prove that there is no effect; they just indicate that your test didn’t detect an effect in the population. It could be that the effect doesn’t exist in the population OR it could be that your sample size was too small or there’s too much variability in the data.

In short, we say that you failed to reject the null hypothesis.

Basically, you can’t prove a negative (no effect). All you can say is that your study didn’t detect an effect. In this case, it didn’t detect a non-zero correlation.

You can read more about the reason behind the wording failing to reject the null hypothesis and what it means precisely.

November 29, 2022 at 12:39 pm

Thank you for this webpage. It is great. I have a question, which I was hoping you’d be able to help me with please.

I have carried out a correlation test, and from my understanding a null hypothesis would be that there is no relationship between the two variables (the variables are independent – there is no correlation).

The p value is statistically significant (.000), and the Pearson correlation result is -.036.

My understanding is that if there is a statistically significant relationship then I would reject the null hypothesis (which suggests there is no relationship between the two variables). My issue is then whether -.036 suggests a very weak relationship or no relationship at all given how close to 0 it is. If it is the latter, would I then say I have failed to reject the null hypothesis even though there is a statistically significant relationship? Or would I say that I have rejected the null hypothesis because there is a statistically significant relationship, but the correlation is very weak?

Any help would be appreciated. Kind regards.

November 29, 2022 at 4:10 pm

What you’re seeing is the difference between statistical significance and practical significance. Yes, your results are statistically significant. You can reject the null hypothesis that rho (the correlation in the population) equals zero. Your data provide enough evidence to conclude that the negative correlation exists in the population (not just your sample).

However, as you say, it’s an extremely weak relationship. Even though it’s not zero, it is essentially zero in a practical sense. Statistically significant results don’t automatically mean that the effect size (the correlation in this case) is meaningful in the real world. When a test has very high statistical power (e.g., sometimes due to a very large sample size), it can detect trivial effects. Those effects are real but they’re small in size.

I write more about this in my post about statistical vs. practical significance . But, in a nutshell, your correlation coefficient is statistically significant, but it is not a meaningful effect in the real world.
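To see how a correlation as small as -0.036 can be statistically significant, here is a rough Python sketch. It uses the Fisher z approximation rather than the exact t-test that statistical software typically runs, and the sample sizes are hypothetical because the comment doesn’t report n. The function name `approx_p_value` is made up for this illustration.

```python
import math
from statistics import NormalDist


def approx_p_value(r, n):
    """Approximate two-sided p-value for H0: rho = 0 (Fisher z method)."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


r = -0.036  # the correlation from the comment above

# Hypothetical sample sizes; the actual n was not reported.
for n in (100, 1_000, 10_000, 100_000):
    print(f"n = {n:>7,}: p = {approx_p_value(r, n):.4f}, r squared = {r**2:.4f}")
```

The p-value shrinks as n grows while r squared never changes: the relationship accounts for roughly a tenth of a percent of the variance regardless of how significant the test becomes.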

September 28, 2022 at 10:44 am

I have a simple question, only to frame how to use correlation. Imagine a trial with plants, testing different phosphate (Pi) concentrations (like 8) and their effect on plant growth (assessed as mean plant size per Pi concentration, from enough replicates and data validity to perform classical parametric statistics).

In case A, I have a strong (positive) and significant Pearson correlation between these two parameters, and in particular, the 8 average size values show statistically significant differences (ANOVA) between all the Pi concentrations tested.

In case B, I have the same strong (positive) significant Pearson correlation, but there is no statistically significant difference in size between any of the Pi concentrations tested.

My guess is that it may be possible to interpret case A as Pi being correlated with plant growth; but in case B, no interpretation can be provided given that no significant difference in plant size is seen between Pi concentrations, even if a correlation is obtained. Is this right? But in this case, if I obtained significant differences in plant size for 3 out of the 8 Pi concentrations, should I perform the correlation only between the significant Pi groups, or could I still take all 8 Pi groups to make interpretations? Thanks in advance!

September 29, 2022 at 7:02 pm

I don’t fully understand your trial. You say that you have a continuous measure of Pi concentration and then average plant sizes. Pearson correlations work with two continuous measures–not a group average. So, you’d need to correlate the Pi concentration with plant size, not average plant size. Or perhaps I’m misunderstanding your description. Please clarify your process. Thanks!

In a more general sense, you have to remember that statistical significance doesn’t necessarily indicate there is a real-world, practical significance to your results. That’s possibly what you’re finding in case B. Although again it’s hard to say if you’re applying correlation to averages.

Statistical significance just indicates that you have reason to believe that a relationship/effect exists in the population. It doesn’t necessarily mean that the effect is large enough to be practically meaningful. For more information, read my post about Practical vs. Statistical Significance .

August 16, 2022 at 11:16 am

This was very educative and easy to follow through for a statistics noob such as me. Thanks! I like your books. Which one is most suited for a beginner level of knowledge?

August 17, 2022 at 12:20 am

My Introduction to Statistics book is the best to get started with for beginners. Click the link to see a post where I discuss it and include a full table of contents.

After reading that, you’d be ready to read both of my other books: Hypothesis Testing and Regression Analysis.

May 16, 2022 at 2:45 pm

Jim, Nassim Taleb makes the point on YouTube (search for Taleb and correlation) that an r = 0.10 is much closer to zero than to r = 0.20, implying that the distribution function for r is very dependent on the r in the population and the sample size, and that the scale of -1.0 to +1.0 is not a scale separated by equal units. He then warns of significance tests because r is a random variable and subject to sampling fluctuations and r = .25 could easily be zero due to sampling error (especially for small sample sizes). Can you please discuss if the scale of r = -1.0 to 1.0 is set in equidistant units, or units that only superficially look like they are equidistant?

May 16, 2022 at 6:41 pm

I did a quick search and found a video where he’s talking about using correlation in the financial and investment areas. He seems to be saying that correlation is not the correct tool for that context. I can’t talk to that point because I’m not familiar with the context.

However, yes, I can help you out with most of the other points!

I’ll start with the fact that the scale of -1 to +1 is, in some ways, not consistent. To start, correlation coefficients are a standardized effect. As such, they are unitless. You can’t link them to anything real, but they help you compare between disparate types of studies. In other words, they excel at providing a standard basis of comparison between studies. However, they’re not as good for knowing what the statistic actually means, except for a few specific values, -1, +1, and 0. And perhaps that’s why Taleb isn’t fond of them. (At 20 minutes, I didn’t watch the entire video.)

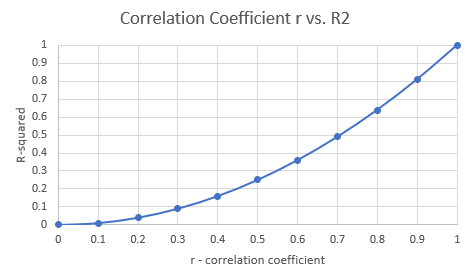

However, we can convert r to R-squared and it becomes more meaningful. R-squared tells us how much of the variance the relationship accounts for. And, as the name implies, you simply square r to get R-squared. It’s in R-squared where you see that the difference between r of 0.1 and 0.2 is different from, say, 0.8 and 0.9. When you go from 0.1 to 0.2, R-squared increases from 0.01 to 0.04, an increase of 3 percentage points. And note that at those correlations, we’re only explaining between 1% and 4% of the variance. Virtually nothing! Now, if we look at going from an r of 0.8 to 0.9, R-squared increases from 0.64 to 0.81, or 17 percentage points. So, we have the same size increase in r (0.1) in both cases, but R-squared increases by 3 percentage points in one case and 17 in the other. Also, notice how at an r of 0.5, you’re only accounting for 25% of the variance. That’s not very much. You need an r of 0.707 to explain half the variance (50%). Another way to think of it is that the range of r [0, 0.7] accounts for half the variance while r [0.7, 1] accounts for the other half.
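The arithmetic in that paragraph is easy to reproduce. Here is a short Python sketch that tabulates R-squared for a few values of r and computes the gain for two equal-sized steps in r; the helper `r_squared_gain` is a name invented for this example.

```python
# Equal steps in r do not correspond to equal steps in R-squared.
for r in (0.1, 0.2, 0.316, 0.5, 0.707, 0.8, 0.9, 1.0):
    print(f"r = {r:.3f} -> R-squared = {r**2:.3f} ({r**2:.1%} of variance)")


def r_squared_gain(r_low, r_high):
    """Increase in R-squared, in percentage points, between two r values."""
    return (r_high**2 - r_low**2) * 100


print(f"0.1 -> 0.2: +{r_squared_gain(0.1, 0.2):.0f} percentage points")
print(f"0.8 -> 0.9: +{r_squared_gain(0.8, 0.9):.0f} percentage points")
```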

I agree with the point that r = 0.1 is virtually nothing. In fact, you need an r of 0.316 to explain even a tenth (10%) of the variability. I also agree that fixed differences in r (e.g., 0.1) indicate different changes in the strength of the relationship, as I illustrate above. I think those points are valid.

Below, I include a graph showing r vs. R-squared and the curved line indicates that the relationship between the two statistics changes (the inconsistency you mention). If the relationship was consistent, it would be a straight line. For me, R-squared is the better statistic, particularly in conjunction with regression analysis, which provides more information about the nature of the relationships. Of course, the negative range of r produces the mirror graph but the same ideas apply.

I think correlation coefficients (r) have some other shortcomings. They describe the strength of the relationship but not the actual relationship. And they don’t account for other variables. Regression analysis handles those aspects and I generally prefer that methodology. For me, simple correlation just doesn’t provide enough information by itself in most cases. You also typically don’t get the residual plots that let you check whether you’re satisfying the assumptions (Pearson’s correlation (r) is essentially a linear model).

The sample r does depend on the relationship in the population. But that’s true for all sample statistics–as I write in my post, Sample Statistics Are Always Wrong to Some Extent! I don’t think it’s any worse for correlation than other types of sample statistics. As you increase your sample size, the estimate’s precision will increase (i.e., the error bars become smaller).

I think significance tests are valid for correlation. Yes, it’s subject to sampling fluctuations ( sampling error ) but so are all sample based statistics. Hypothesis testing is designed to factor that in. In fact, significance testing specifically helps you distinguish between cases where the sample r = 0.25 might represent 0 in the population vs. cases where that is unlikely. That’s the very intention of significance testing, so I strongly disagree with that point!

April 9, 2022 at 2:20 am

Thank you for the fast response!! I have also read the Spearman’s Rho article (very insightful). My scatterplot suggests that there is no correlation (completely random distribution). However, I would still like to test the correlation, but in the Spearman’s Rho article you mentioned that if there is no correlation, both the Spearman’s rho value and the Pearson’s correlation value would be close to zero. Is it also possible that one value is positive and one is negative? My results right now are R2 Linear = 0.003, Pearson correlation = .058, and Spearman’s correlation coefficient = -0.19. Should I base the rejection of either of my hypotheses on Spearman’s value or Pearson’s value?

Thank you so much!!!

April 9, 2022 at 10:42 pm

I’m glad that it was helpful! It’s definitely possible for correlations to switch directions like that. That’s especially true because both correlations are barely different from zero. So, it wouldn’t take much to cause them to be on opposite sides of zero. The R-squared is telling you that the Pearson’s correlation explains hardly any of the variability.
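Here is a small Python sketch of how the two coefficients can land on opposite sides of zero. The data are hypothetical and deliberately exaggerated: a mild downward trend plus one extreme point. An outlier is only one possible mechanism, and it is not necessarily what happened in the data discussed above, where both values were simply close to zero. Spearman’s rho is computed here as Pearson’s r on the ranks, assuming no tied values.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(values):
    """Rank values from 1 (smallest) upward; assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman_rho(x, y):
    """Spearman's rho: Pearson's r computed on the ranks."""
    return pearson_r(ranks(x), ranks(y))


# Hypothetical data: y falls as x rises, except for one extreme point.
x = [1, 2, 3, 4, 5, 6]
y = [6, 5, 4, 3, 2, 100]

print(f"Pearson's r:    {pearson_r(x, y):.3f}")
print(f"Spearman's rho: {spearman_rho(x, y):.3f}")
```

Pearson’s r works with the raw values, so the single large point pulls it positive. Spearman’s rho only sees the ordering, where five of the six points trend downward.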

April 8, 2022 at 7:05 pm

Thank you for this post!! I was wondering, I did a scatterplot which gave me an R2 value of 0.003. The fit line showed a really weak positive correlation, which I wanted to test with Spearman’s rho. However, this value is showing a negative value (negative relationship). Do you maybe know why it is showing different correlations since I am using the exact same values?

April 8, 2022 at 7:51 pm

The R-squared value and slope you’re seeing are related to Pearson’s correlation, which differs from Spearman’s rho. They’re different statistical measures using different methods, so it’s not surprising that their values can be different. For more information, read my post about Spearman’s Rho.

April 6, 2022 at 3:37 am

Hi Jim, I had a question. It’s kinda complicated but I try my best to explain it well.

I ran a correlation test between objective social isolation (OSI) and subjective social isolation (SSI). To measure OSI, I used an instrument called the LSNS-6, while I used the R-UCLA Loneliness Scale to measure SSI. Here is the scoring guide for the instruments:

* higher score obtained in LSNS-6 = low objective social isolation
* higher score obtained in R-UCLA Loneliness Scale = high subjective social isolation

After I ran the correlation test, I found the value was r = -.437.

My question is, does the value represent a correlation between the variables (meaning when someone is objectively isolated, they are less likely to be subjectively isolated and vice versa) OR does the value represent a correlation between the scores of the instruments used (meaning when someone scores higher on the LSNS-6, they will have a lower score on the R-UCLA Loneliness Scale and vice versa)? I am confused because of the scoring guide. I hope you can help me.

Thank you Jim!

April 8, 2022 at 8:17 pm

This specific correlation is a bit tricky because, based on what you wrote, the LSNS-6 is inverted. High LSNS-6 scores correspond to low objective social isolation. Let’s work through this example.

The negative correlation (-0.437) indicates that high LSNS-6 scores tend to correlate with low R-UCLA scores. Now, if we “translate” the instrument measures into what the scores mean as constructs, low objective social isolation tends to correspond to low subjective social isolation.

In other words, there is a negative correlation between the instrument scores. However, there is a positive correlation between the concepts of objective social isolation and subjective social isolation, which makes theoretical sense.

The reason why the instrument scores have a negative correlation and the constructs have a positive correlation goes back to the fact that high LSNS-6 scores relate to low objective isolation.
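A short Python sketch shows why reversing one scale flips the sign of the correlation without changing its size. The respondent scores are hypothetical, and the maximum LSNS-6 total of 30 (six items scored 0 to 5) is an assumption to check against the instrument’s documentation.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


LSNS6_MAX = 30  # assumed maximum total score (six items scored 0-5)

# Hypothetical scores for eight respondents.
lsns6 = [25, 8, 18, 12, 27, 10, 20, 15]    # higher = LESS objective isolation
r_ucla = [30, 52, 45, 38, 41, 60, 33, 47]  # higher = MORE subjective isolation

# Reverse-score LSNS-6 so that higher values mean MORE objective isolation.
objective_isolation = [LSNS6_MAX - score for score in lsns6]

r_instruments = pearson_r(lsns6, r_ucla)
r_constructs = pearson_r(objective_isolation, r_ucla)

print(f"LSNS-6 score vs. R-UCLA score:       {r_instruments:.3f}")
print(f"Objective isolation vs. R-UCLA score: {r_constructs:.3f}")
```

Reverse-scoring is a linear transformation with a negative slope, so the two coefficients have the same magnitude and opposite signs.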

I hope that helps!

April 2, 2022 at 7:16 am

Thanks so much for the highly helpful statistical resources on this website. I am a bit confused about an analysis I carried out. My scatter plot shows a kind of negative relationship between two variables, but my Pearson’s correlation coefficient results seem to say something different: r = -0.198 and a p-value of 0.082. I would appreciate clarification on this.

April 4, 2022 at 3:56 pm

I’m not sure what is surprising you? Can you be more specific?

It sounds like your scatterplot displays a negative correlation and your correlation coefficient is also negative, which sounds consistent. It’s a fairly weak correlation. The p-value indicates that your data don’t provide quite enough evidence to conclude that the correlation you see in the sample via the scatterplot and correlation coefficient also exists in the population. It might just be sampling error.

January 14, 2022 at 8:31 am

Hi Jim, Andrew here.

I am using a Pearson test for two variables: LifeSatisfaction and JobSatisfaction. I have gotten a P-Value 0.000 whilst my R-Value is 0.338. Can you explain to me what relation this is? Am I right in thinking that is strong significance with a weak correlation? And that there is no significant correlation between the two.

January 14, 2022 at 4:59 pm

What you’re running into is the difference between statistical significance and practical significance in the real world. A statistically significant result, such as your correlation, suggests that the relationship you observe in your sample also exists in the population as a whole. However, statistical significance says nothing about how important that relationship is in a practical sense.

Your correlation results suggest that a positive correlation exists between life satisfaction and job satisfaction amongst the population from which you drew your sample. However, the fairly weak correlation of 0.338 might not be of practical significance. People with satisfying jobs might be a little happier but perhaps not to a noticeable degree.

So, for your correlation, statistical significance–yes! Practical significance–maybe not.

For more information, read my post about statistical significance vs. practical significance where I go into it in more detail.

January 7, 2022 at 7:07 pm

Thank you, Jim, will do.

January 7, 2022 at 5:07 pm

Hello Jim, I just came across this website. I have a query.

I wrote the following for a report: Table 5 shows the associations between all the domains. The correlation coefficients between the environment and the economy, social, and culture domains are rs=0.335 (weak), rs=0.427 (low) and rs=0.374 (weak), respectively. The correlation coefficient between the economy and the social and culture domains are rs=0.224 and rs=0.157, respectively and are negligible. The correlation coefficient (rs =0.451) between the social and the culture domains is low, positive, and significant. These weak to low correlation coefficient values imply that changes in one domain are not correlated strongly with changes in the related domain.

The comment I received was: Correlation studies are meant to see relationships- not influence- even if there is a positive correlation between x and y, one can never conclude if x or y is the reason for such correlation. It can never determine which variables have the most influence. Thus the caution and need to re-word for some of the lines above. A correlation study also does not take into account any extraneous variables that might influence the correlation outcome.

I am not sure how I should reword? I have checked several sources and their interpretations are similar to mine, Please advise. Thank you

January 7, 2022 at 9:25 pm

Personally, I think your wording is fine. Appropriately, you don’t suggest that correlation implies causation. You state that there is correlation. So, I’m not sure why the reviewer has an issue with it.

Perhaps the reviewer wants an explicit statement to that effect? “As with all correlation studies, these correlations do not necessarily represent causal relationships.”

The second portion of the review comment about extraneous variables is, in my opinion, more relevant. Pairwise correlations don’t control for the effects of other variables. Omitted variable bias can affect these pairs. I write about this in a post about omitted variable bias . These biases can exaggerate or minimize the apparent strength of pairwise correlations.

You can avoid that problem by using partial correlations or multiple regression analysis. Although, it’s not necessarily a problem. It’s just a possibility.

January 5, 2022 at 8:52 pm

Is it possible to compare two correlation coefficients? For example, let’s say that I have three data points (A, B, and C) for each of 75 subjects. If I run a Pearson’s on the A&B survey points and receive a result of .006, while the Pearson’s on the A&C survey points is .215…although both are not significant, can I say that there is a stronger correlation between A&C than between A&B? thank you!

January 6, 2022 at 8:31 pm

I am not aware of a test that will assess whether the difference between two correlation coefficients is statistically significant. I know you can do that with regression coefficients, so you might want to determine whether you can use that approach. Click the link to learn more.

However, I can guess that your two coefficients probably are not significantly different and thus you can’t say one is higher. Each of your hypothesis tests assesses whether one of the coefficients is significantly different from zero. In both cases (0.006 and 0.215), neither is significantly different from zero. Because both of your coefficients are on the same side of zero (positive), the distance between them is even smaller than your larger coefficient’s (0.215) distance from zero. Hence, that difference probably is also not statistically significant. However, one muddling issue is that with the two datasets combined you have a larger total sample size than either alone, which might allow a supposed combined test to determine that the smaller difference is significant. But that’s uncertain and probably unlikely.

There’s a more fundamental issue to consider beyond statistical significance . . . practical significance. The correlation of 0.006 is so small it might as well be zero. The other is 0.215 (which according to the hypothesis test, also might as well be zero). However, in practical terms, a correlation of 0.215 is also a very weak correlation. So, even if its hypothesis test said it was significantly different from zero, it’s a puny correlation that doesn’t provide much predictive power at all. So, you’re looking at the difference between two practically insignificant correlations. Even if the larger sample size for a combined test did indicate the difference is statistically significant, that difference (0.215 – 0.006 = 0.209) almost certainly is not practically significant in a real-world sense.

But, if you really want to know the statistical answer, look into the regression method.

May 16, 2022 at 2:57 pm

Jim – here is a YouTube video purporting to demonstrate how to compare correlation coefficients for statistical significance. I’m not a statistician and cannot vouch for the contents. https://www.youtube.com/watch?v=ipqUoAN2m4g

May 16, 2022 at 7:22 pm

That seems like a very non-standard approach in the YT video. And, with a sample size of 200 (100 males, 100 females), even very small effect sizes should be significant. So, I have some doubts about that process, but I haven’t dug into it. It might be totally valid, but it seems inefficient in terms of statistical power for the sample size.

Here’s how I would’ve done that analysis. Instead of correlation, I’d use regression with an interaction effect. I’d want to model the relationship between the amount of time studying for a test and the scores. Additionally, I’d gather 100 males and 100 females and see if the relationship between time studying and test scores differs between genders. In regression, that’s an interaction effect. It’s the same question the YT video assesses, but using a different approach that provides a whole lot more answers.

To see that approach in action, read my post about Comparing Regression Lines Using Hypothesis Tests . In that post, I refer to comparing the relationships between two conditions, A and B. You can equate those two conditions to gender (male and female). And I look at the relationship between Input and Output, which you can equate to Time Studying and Test Score, respectively. While reading that post, notice how much more information you obtain using that approach than just the two correlation coefficients and whether they’re significantly different.

That’s what I mean by generally preferring regression analysis over simple correlation.

December 9, 2021 at 7:33 pm

(Translated from French.) Hi Jim, thank you very much for this explanation. I am working on an article and I want to calculate the sample size in order to critique the sample size that was used. Is it possible to deduce the p-value from the graph and then apply the rule to deduce N?

December 12, 2021 at 11:57 pm

Unfortunately, I don’t speak French. However, I used Google Translate and I think I understand your question.

No, you can’t calculate the p-value by looking at a graph. You need the actual data values to do that. However, there is another approach you can use to determine whether they have a reasonable sample size.

You can use power and sample size software (such as the free G*Power ) to determine a good sample size. Keep in mind that the sample size you need depends on the strength of the correlation in the population. If the population has a correlation of 0.3, then you’ll need 67 data points to obtain a statistical power of 0.8. However, if the population correlation is higher, the required sample size declines while maintaining the statistical power of 0.8. For instance, for population correlations of 0.5 and 0.8, you’ll only need sample sizes of 23 and 8, respectively.

Using this approach, you’ll at least be able to determine whether they’re using a reasonable sample size given the size of correlation that they report even though you won’t know the p-value.

Hopefully, they reported the sample size, but, if not, you can just count the number of dots on the scatterplot.
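For readers without power software at hand, here is a minimal sketch of the same kind of calculation using the Fisher z approximation. It is not G*Power’s exact method, so the results can differ by a point or two from the figures quoted above. The significance level of 0.05 and the one-sided test are assumptions on my part (they give values close to those quoted); the function name `approx_sample_size` is hypothetical.

```python
import math
from statistics import NormalDist

def approx_sample_size(rho, power=0.8, alpha=0.05, one_sided=True):
    """Approximate n needed to detect a population correlation rho.

    Uses the Fisher z transformation: atanh(r) is roughly normal
    with standard error 1 / sqrt(n - 3).
    """
    std_normal = NormalDist()
    tail = alpha if one_sided else alpha / 2
    z_alpha = std_normal.inv_cdf(1 - tail)
    z_power = std_normal.inv_cdf(power)
    effect = math.atanh(rho)
    return math.ceil(((z_alpha + z_power) / effect) ** 2 + 3)

sizes = {rho: approx_sample_size(rho) for rho in (0.3, 0.5, 0.8)}
for rho, n in sizes.items():
    print(f"population correlation {rho}: about {n} observations")
```

Passing `one_sided=False` gives the larger sample sizes that a two-sided test would require.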

November 19, 2021 at 4:47 pm

Hi Jim. How do I interpret r(12) = -.792, p < .001 for a Pearson correlation coefficient?

October 26, 2021 at 4:53 am

Hi. If the correlation between the two independent constructs/variables and the dependent variable/construct is medium or large, what must the manager do to improve the two independent constructs/variables?

October 7, 2021 at 1:12 am

Hi Jim, First of all thank you, this is an excellent resource and has really helped clarify some queries I had. I have run a Pearson’s r test on some stats software to analyse the relationship between increasing age and need for friendship. The return is r = 0.052 and p = 0.381. Am I right in assuming there is a very slight positive correlation between the variables but one that is not statistically significant so the null hypothesis cannot be rejected? Kind regards

October 7, 2021 at 11:26 pm

Hi Victoria,

That correlation is so close to 0 that it essentially means that there is no relationship between your two variables. In fact, it’s so close to zero that calling it a very slight positive correlation might be exaggerating by a bit.

As for the p-value, you’re correct. It’s testing the null hypothesis that the correlation equals zero. Because your p-value is greater than any reasonable significance level, you fail to reject the null. Your data provide insufficient evidence to conclude that the correlation doesn’t equal zero (no effect).

If you haven’t, you should graph your data in a scatterplot. Perhaps there’s a U shaped relationship that Pearson’s won’t detect?
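A small illustration of that last point, with made-up numbers: a perfectly U-shaped relationship can produce a Pearson correlation of essentially zero even though Y is completely determined by X. The helper `pearson_r` is written out by hand here so the sketch needs no extra libraries.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient computed from its definition."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: X runs symmetrically around zero, Y = X squared.
xs = list(range(-10, 11))
ys = [x ** 2 for x in xs]

r = pearson_r(xs, ys)
print(f"Pearson r for a perfect U shape: {r:.6f}")
```

The downward half and the upward half of the U cancel each other out in the linear measure, which is why the scatterplot is worth a look.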

July 21, 2021 at 11:23 pm

No Jim, what I mean to ask is this: let’s assume the correlation between variables x and y is 0.91. How do we interpret the remaining 0.09, assuming a correlation of 1 is a strong positive linear correlation?

Is this because of diversification, correlation residual or any error term?

July 21, 2021 at 11:29 pm

Oh, ok. Basically, you’re asking why it’s not a perfect correlation of 1? What explains that difference of 0.09 between the observed correlation and 1? There are several reasons. The typical reason is that most relationships aren’t perfect. There’s usually a certain amount of inherent uncertainty between two variables. It’s the nature of the relationship. Occasionally, you might find very near perfect correlations for relationships governed by physical laws.

If you were to have a pair of variables that should have a perfect correlation for theoretical reasons, you might still observe an imperfect correlation thanks to measurement error.
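To make the measurement error point concrete, here is a small simulation under assumed values: Y follows an exact linear law of X, and then random noise is added to the recorded Y values. The law, the noise level, and the sample size are all hypothetical.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient computed from its definition."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(0)
true_x = [rng.uniform(0, 100) for _ in range(500)]
true_y = [2.0 * x + 5.0 for x in true_x]                 # exact linear law
measured_y = [y + rng.gauss(0, 20.0) for y in true_y]    # noisy instrument

r_exact = pearson_r(true_x, true_y)
r_measured = pearson_r(true_x, measured_y)
print(f"correlation without measurement error: {r_exact:.4f}")
print(f"correlation with measurement error:    {r_measured:.4f}")
```

The underlying relationship is identical in both cases; only the noise in the recorded values pulls the observed correlation below 1.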

July 20, 2021 at 12:49 pm

If two variables have a correlation of 0.91, what is the 0.09 in the equation?

July 21, 2021 at 10:59 pm

I’d need more information/context to be able to answer that question. Is it a regression coefficient?

June 30, 2021 at 4:21 pm

You are a great resource. Thank you for being so responsive. I’m sure I’ll be bugging you some more in the future.

June 30, 2021 at 12:48 pm

Jim, using Excel, I just calculated that the correlation between two variables (A and B) is .57, which I believe you would consider to be “moderate.” My question is, how can I translate that correlation into a statement that predicts what would happen to B if A goes up by 1 point. Thanks in advance for your help and most especially for your clarity.

June 30, 2021 at 2:59 pm

Hi Gerry, to get that type of information, you’ll need to use regression analysis. Read my post about using Excel to perform regression for details. For your example, be sure to use A as the independent variable and B as the dependent variable. Then look at the regression coefficient for A to get your answer!
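As a sketch of why the correlation alone cannot answer that question: in simple regression the coefficient for A equals the correlation multiplied by the ratio of the standard deviations (slope = r × s_B / s_A), so the units and spread of the two variables matter. The numbers below are invented for illustration and are not Gerry’s data.

```python
import math
import statistics

# Hypothetical paired observations of A (independent) and B (dependent).
a = [1, 2, 3, 4, 5, 6, 7, 8]
b = [2.1, 2.9, 3.2, 4.8, 4.1, 6.3, 5.9, 7.2]

mean_a = statistics.mean(a)
mean_b = statistics.mean(b)
sab = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
saa = sum((x - mean_a) ** 2 for x in a)
sbb = sum((y - mean_b) ** 2 for y in b)

r = sab / math.sqrt(saa * sbb)   # Pearson correlation
slope = sab / saa                # least-squares coefficient for A
intercept = mean_b - slope * mean_a

# The same slope, recovered from r and the two standard deviations.
slope_from_r = r * statistics.stdev(b) / statistics.stdev(a)

print(f"r = {r:.3f}")
print(f"B changes by about {slope:.3f} for each 1-point increase in A")
print(f"slope recovered from r: {slope_from_r:.3f}")
```

Two datasets with the same correlation can therefore have very different slopes, which is why the regression output is needed.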

May 24, 2021 at 11:51 pm

Hey Man, I’m taking my stats final this week and I’m so glad I found you! Thank you for saving random college kids like me!

May 19, 2021 at 8:38 am

Hi, I am Nasib Zaman. The Spearman correlation between high temperature and COVID-19 cases was significant (r = 0.393). The correlation between UV index and COVID-19 cases was also significant (r = 0.386). Is it true?

May 20, 2021 at 1:31 am

Both suggest that as temperature and UV increase, the number of COVID cases increases, although these are weak correlations. I don’t know whether that’s true or not. You’d have to assess the validity of the data to make that determination. Additionally, there might be confounding variables at play, which could bias the correlations. I have no way of knowing.

April 12, 2021 at 1:49 pm

I am using Pearson’s correlation coefficient to express the strength of the relationship between my two variables and happiness. Would this be an appropriate use?

| | | Happiness | Diet | RelationshipSatisfaction |
|---|---|---|---|---|
| Pearson Correlation | Happiness | 1.000 | .310 | .416 |
| | Diet | .310 | 1.000 | .193 |
| | RelationshipSatisfaction | .416 | .193 | 1.000 |
| Sig. (1-tailed) | Happiness | | 0.00 | 0.00 |
| | Diet | 0.00 | | 0.00 |
| | RelationshipSatisfaction | 0.00 | 0.00 | |
| N | Happiness | 1297 | 1297 | 1297 |
| | Diet | 1297 | 1297 | 1297 |
| | RelationshipSatisfaction | 1297 | 1297 | 1297 |

If so, would I be right to say that because the coefficient was r = .193, it suggests that there is not too strong a relationship between the two independent variables? Can I use anything else to indicate significance levels?

March 29, 2021 at 3:12 am

I just want to say that your posts are great, but the QA section in the comments is even greater!

Congrats, Jim.

March 29, 2021 at 2:57 pm

Thanks so much!! 🙂

And, I’m really glad you enjoy the QA in the comments. I always request readers to post their questions in the comments section of the relevant post so the answers benefit everyone!

March 24, 2021 at 1:16 am

Thank you very much. This question had been troubling me for the last few days. Thanks for helping.

Have a nice day…

March 24, 2021 at 1:34 am

You’re very welcome, Ronak! I’m glad to help!

March 22, 2021 at 12:56 pm

Nalin here. I found your article to be very clarifying conceptually. I had a doubt.

So there is this dataset I have been working on, and I calculated the Pearson correlation coefficient between the target variable and the predictor variables. I found that none of the predictor variables had a correlation above 0.1 or below -0.1 with the target variable, which indicates that no linear relationship exists between them.

How can I verify whether any non-linear relationships exist between these pairs of variables? Will a scatterplot confirm my claims?

March 23, 2021 at 3:09 pm

Yes, graphing the data in a scatterplot is always a good idea. While you might not have a linear relationship, you could have a curvilinear relationship. A scatterplot would reveal that.

One other thing to watch out for is omitted variable bias. When you perform correlation on a pair of variables, you’re not factoring in other relevant variables that can be confounding the results. To see what I mean, read my post about omitted variable bias. In it, I start with a correlation that appears to be zero even though there actually is a relationship. After I accounted for another variable, there was a significant relationship between the original pair of variables! Just another thing to watch out for that isn’t obvious!
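Here is a small simulation of that situation. It is not the dataset from the linked post; the variables `x1`, `x2`, and `y`, and the way they are generated, are assumptions made purely for illustration. The raw correlation between `y` and `x1` is near zero, yet the partial correlation that controls for the omitted variable `x2` is strong.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient computed from its definition."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(1)
n = 2000
x1 = [rng.gauss(0, 1) for _ in range(n)]
x2 = [a + rng.gauss(0, 1) for a in x1]     # omitted variable, related to x1
# y rises with x1 and falls with x2, so the two effects mask each other.
y = [a - b + rng.gauss(0, 0.1) for a, b in zip(x1, x2)]

r_y_x1 = pearson_r(y, x1)
r_y_x2 = pearson_r(y, x2)
r_x1_x2 = pearson_r(x1, x2)

# First-order partial correlation of y and x1, holding x2 constant.
partial_y_x1 = (r_y_x1 - r_y_x2 * r_x1_x2) / math.sqrt(
    (1 - r_y_x2 ** 2) * (1 - r_x1_x2 ** 2)
)

print(f"raw correlation of y and x1:        {r_y_x1:.3f}")
print(f"partial correlation controlling x2: {partial_y_x1:.3f}")
```

A regression model that includes both predictors would tell the same story through its coefficients.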

March 20, 2021 at 3:23 am

Yes, I am also doing well…

I am having some subsequent queries…

By overall trend, you mean that the correlation coefficient will capture how y changes with respect to x (that is, whether y increases or decreases as x increases or decreases). Am I interpreting that correctly?

March 22, 2021 at 12:25 am

This is something that should be clear by examining the scatterplot. Will a straight line fit the dots? Do the dots fall randomly about a straight line or are there patterns? If a straight line fits the data, Pearson’s correlation is valid. However, if it does not, then Pearson’s is not valid. Graphing is the best way to make the determination.

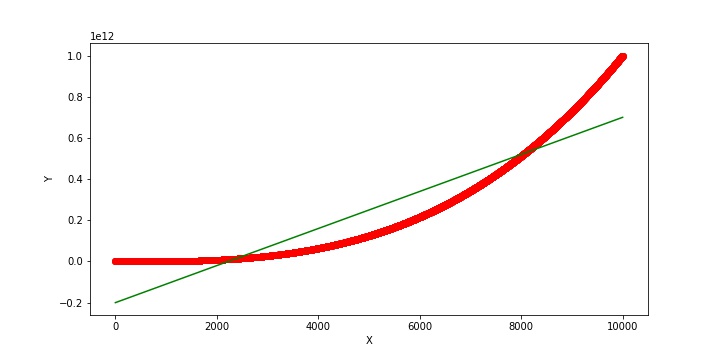

Thanks for the image.

March 23, 2021 at 3:41 pm

Hi again Ronak!

On your graph, the data points are the red line (actually lots and lots of data points and not really a line!). And, the green line is the linear fit. You don’t usually think of Pearson’s correlation as modeling the data but it uses a linear fit. So, the green line is how Pearson’s correlation models your data. You can see that the model doesn’t fit the data adequately. There are systematic (i.e., non-random) departures from the data points. Right there you know that Pearson’s correlation is invalid for these data.

Your data has an upward trend. That is, as X increases, Y also increases. And Pearson’s partially captures that trend. Hence, the positive slope for the green line and the positive correlation you calculated. But, it’s not perfect. You need a better model! In terms of correlation, the graph displays a monotonic relationship and Spearman’s correlation would be a good candidate. Or, you could use regression analysis and include a polynomial to model the curvature. Either of these methods will produce a better fit and more accurate results!
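A minimal sketch comparing the two correlations on the cubic data described in this thread (X from 1 to 10000, Y = X^3). Spearman’s correlation is computed here as Pearson’s correlation of the ranks; the simple `ranks` helper assumes there are no tied values, which holds for these data.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient computed from its definition."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def ranks(values):
    """Ranks from 1 to n; assumes no tied values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result

xs = list(range(1, 10001))
ys = [x ** 3 for x in xs]

pearson = pearson_r(xs, ys)
spearman = pearson_r(ranks(xs), ranks(ys))
print(f"Pearson:  {pearson:.4f}")
print(f"Spearman: {spearman:.4f}")
```

Because Y always increases when X increases, the ranks line up perfectly, whereas the straight-line measure falls short of 1.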

March 18, 2021 at 11:01 am

I am Ronak from India. How are you? Hoping corona has not troubled you much. You have simplified the concept very well. You are doing an amazing job, great work. I have one doubt and want to clarify it.

Question: Whenever we talk about the correlation coefficient, we talk in terms of a linear relationship. But I have calculated the correlation coefficient for the relationship Y vs. X^3.

X variable: 1 to 10000
Y = X^3

The correlation coefficient comes out at around 0.9165. It is strange that even though the relationship is not linear, it still gives me a very high correlation coefficient.

March 19, 2021 at 3:53 pm

I’m doing well here. Just hunkering down like everyone else! I hope you’re doing well too! 🙂

For your data, I’d recommend graphing them in a scatterplot and fitting a linear trend line. You can do that in Excel. If your data follow an S-shaped cubic relationship, it is still possible to get a relatively strong correlation. You’ll be able to see how that happens in the scatterplot with trend line. There’s an overall trend to the data that your line follows, but it doesn’t hug the curves. However, if you fit a model with a cubic term to fit the curves, you’ll get a better model.

So, let’s switch from a correlation to R-squared. Your correlation of 0.9165 corresponds to an R-squared of 0.84. I’m literally squaring your correlation coefficient to get the R-squared value. Now, fit a regression model with the quadratic and cubic terms to fit your data. You’ll find that your R-squared for this model is higher than for the linear model.

In short, the linear correlation is capturing the overall trend in the data but doesn’t fit the data points as well as the model designed for curvilinear data. Your correlation seems good but it doesn’t fully fit the data.
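The R-squared comparison can be sketched as follows for the data in the question (X from 1 to 10000, Y = X^3). As a simplification, and as an assumption on my part, the curvilinear model here uses only the cubic term as its single predictor rather than both the quadratic and cubic terms; that is enough for data that are exactly cubic. With one predictor, R-squared is simply the squared correlation.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient computed from its definition."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def r_squared(predictor, response):
    """R-squared of a one-predictor least-squares line (equals r squared)."""
    return pearson_r(predictor, response) ** 2

xs = list(range(1, 10001))
ys = [x ** 3 for x in xs]

r2_linear = r_squared(xs, ys)                       # Y modeled by X
r2_cubic = r_squared([x ** 3 for x in xs], ys)      # Y modeled by X cubed

print(f"R-squared, linear model: {r2_linear:.3f}")
print(f"R-squared, cubic term:   {r2_cubic:.3f}")
```

The gap between the two values is the part of the variation that the straight line leaves unexplained.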

March 11, 2021 at 10:56 am

Hi Jim. Does partial correlation always require continuous (scale) variables? Is it possible to include other types of variables (such as nominal or ordinal)? Regards, Jagar

March 16, 2021 at 12:30 am

Pearson correlations are for continuous data that follow a linear relationship. If you have ordinal data or continuous data that follow a monotonic relationship, you can use Spearman’s correlation.

There are correlations specifically for nominal data. I need to write a blog post about those!

March 10, 2021 at 11:45 am

if the correlation coefficient is 0.153 what type of correlation is it?

February 14, 2021 at 1:49 pm

February 12, 2021 at 8:09 pm

If my r value when finding the correlation between two things is -0.0258, would that be a weak negative correlation or something else?

February 14, 2021 at 12:08 am

Hi Dez, your correlation coefficient is essentially zero, which indicates no relationship between the variables. As one variable increases, there is no tendency for the other variable to either increase or decrease. There’s just no relationship between them according to your data.

January 9, 2021 at 12:10 pm

The correlation coefficients between my independent variables (anger, anxiety, happiness, satisfaction) and a dependent variable (entrepreneurial decision-making behavior) are 0.401, 0.303, 0.369, and 0.384.

What does this mean? How do I interpret and explain this? What’s the relationship?

January 10, 2021 at 1:33 am