Hypothesis Testing - Chi Squared Test

Lisa Sullivan, PhD

Professor of Biostatistics

Boston University School of Public Health

Introduction

This module will continue the discussion of hypothesis testing, where a specific statement or hypothesis is generated about a population parameter, and sample statistics are used to assess the likelihood that the hypothesis is true. The hypothesis is based on available information and the investigator's belief about the population parameters. The specific tests considered here are called chi-square tests and are appropriate when the outcome is discrete (dichotomous, ordinal or categorical). For example, in some clinical trials the outcome is a classification such as hypertensive, pre-hypertensive or normotensive. We could use the same classification in an observational study such as the Framingham Heart Study to compare men and women in terms of their blood pressure status - again using the classification of hypertensive, pre-hypertensive or normotensive status.

The technique to analyze a discrete outcome uses what is called a chi-square test. Specifically, the test statistic follows a chi-square probability distribution. We will consider chi-square tests here with one, two and more than two independent comparison groups.

Learning Objectives

After completing this module, the student will be able to:

- Perform chi-square tests by hand

- Appropriately interpret results of chi-square tests

- Identify the appropriate hypothesis testing procedure based on type of outcome variable and number of samples

Tests with One Sample, Discrete Outcome

Here we consider hypothesis testing with a discrete outcome variable in a single population. Discrete variables are variables that take on two or more distinct responses or categories, and the responses can be ordered or unordered (i.e., the outcome can be ordinal or categorical). The procedure we describe here can be used for dichotomous (exactly 2 response options), ordinal or categorical discrete outcomes, and the objective is to compare the distribution of responses, or the proportions of participants in each response category, to a known distribution. The known distribution is derived from another study or report, and in setting up the hypotheses it is again important that the comparator distribution specified in the null hypothesis is a fair comparison. The comparator is sometimes called an external or a historical control.

In one sample tests for a discrete outcome, we set up our hypotheses against an appropriate comparator. We select a sample and compute descriptive statistics on the sample data. Specifically, we compute the sample size (n) and the proportions of participants in each response category.

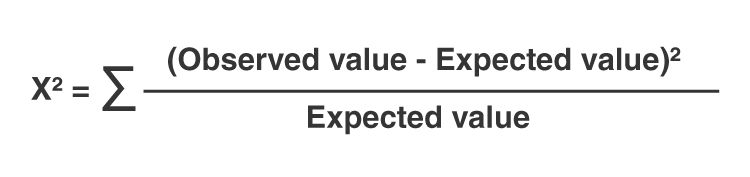

Test Statistic for Testing $H_0: p_1 = p_{10},\ p_2 = p_{20},\ \ldots,\ p_k = p_{k0}$

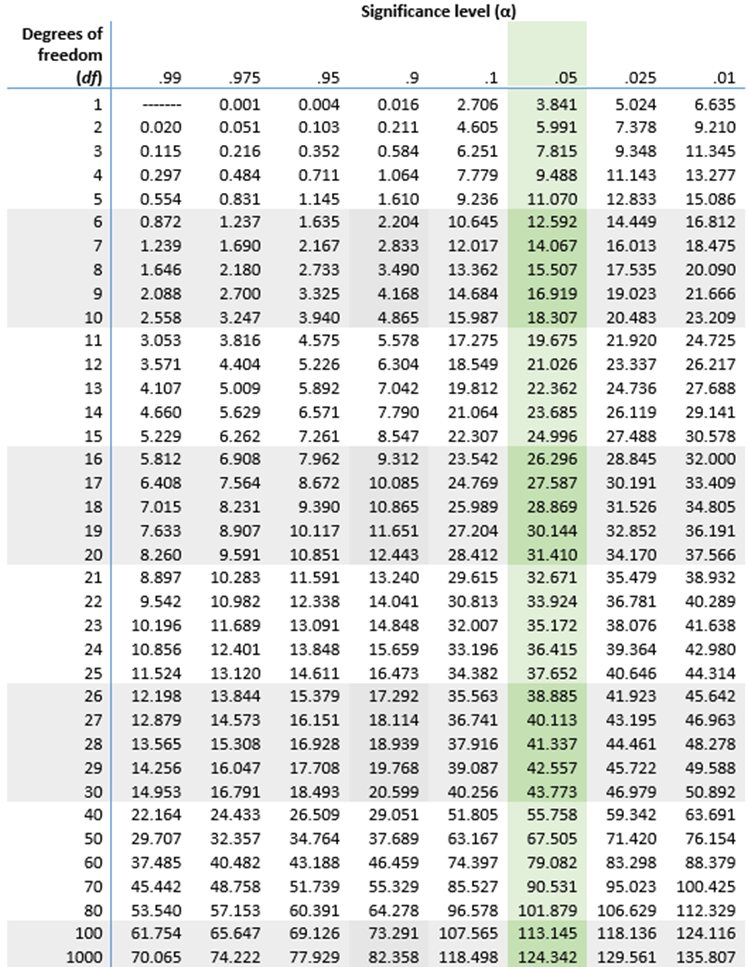

We find the critical value in a table of probabilities for the chi-square distribution with degrees of freedom (df) = k-1. In the test statistic, O = observed frequency and E = expected frequency in each of the response categories. The observed frequencies are those observed in the sample and the expected frequencies are computed as described below. $\chi^2$ (chi-square) is another probability distribution and ranges from 0 to ∞. The test statistic formula above is appropriate for large samples, defined as expected frequencies of at least 5 in each of the response categories.

When we conduct a $\chi^2$ test, we compare the observed frequencies in each response category to the frequencies we would expect if the null hypothesis were true. These expected frequencies are determined by allocating the sample to the response categories according to the distribution specified in $H_0$. This is done by multiplying the observed sample size (n) by the proportions specified in the null hypothesis ($p_{10}, p_{20}, \ldots, p_{k0}$). To ensure that the sample size is appropriate for the use of the test statistic above, we need to ensure the following: $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$.

The test of hypothesis with a discrete outcome measured in a single sample, where the goal is to assess whether the distribution of responses follows a known distribution, is called the $\chi^2$ goodness-of-fit test. As the name indicates, the idea is to assess whether the pattern or distribution of responses in the sample "fits" a specified population (external or historical) distribution. In the next example we illustrate the test. As we work through the example, we provide additional details related to the use of this new test statistic.

A University conducted a survey of its recent graduates to collect demographic and health information for future planning purposes as well as to assess students' satisfaction with their undergraduate experiences. The survey revealed that a substantial proportion of students were not engaging in regular exercise, many felt their nutrition was poor and a substantial number were smoking. In response to a question on regular exercise, 60% of all graduates reported getting no regular exercise, 25% reported exercising sporadically and 15% reported exercising regularly as undergraduates. The next year the University launched a health promotion campaign on campus in an attempt to increase health behaviors among undergraduates. The program included modules on exercise, nutrition and smoking cessation. To evaluate the impact of the program, the University again surveyed graduates and asked the same questions. The survey was completed by 470 graduates and the following data were collected on the exercise question:

| | No Regular Exercise | Sporadic Exercise | Regular Exercise | Total |
|---|---|---|---|---|
| Number of Students | 255 | 125 | 90 | 470 |

Based on the data, is there evidence of a shift in the distribution of responses to the exercise question following the implementation of the health promotion campaign on campus? Run the test at a 5% level of significance.

In this example, we have one sample and a discrete (ordinal) outcome variable (with three response options). We specifically want to compare the distribution of responses in the sample to the distribution reported the previous year (i.e., 60%, 25%, 15% reporting no, sporadic and regular exercise, respectively). We now run the test using the five-step approach.

- Step 1. Set up hypotheses and determine level of significance.

The null hypothesis again represents the "no change" or "no difference" situation. If the health promotion campaign has no impact then we expect the distribution of responses to the exercise question to be the same as that measured prior to the implementation of the program.

$H_0: p_1 = 0.60,\ p_2 = 0.25,\ p_3 = 0.15$, or equivalently $H_0$: Distribution of responses is 0.60, 0.25, 0.15

$H_1$: $H_0$ is false. α=0.05

Notice that the research hypothesis is written in words rather than in symbols. The research hypothesis as stated captures any difference in the distribution of responses from that specified in the null hypothesis. We do not specify a specific alternative distribution; instead, we are testing whether the sample data "fit" the distribution in $H_0$ or not. With the $\chi^2$ goodness-of-fit test there is no upper or lower tailed version of the test.

- Step 2. Select the appropriate test statistic.

The test statistic is:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

We must first assess whether the sample size is adequate. Specifically, we need to check $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=470 and the proportions specified in the null hypothesis are 0.60, 0.25 and 0.15. Thus, min(470(0.60), 470(0.25), 470(0.15)) = min(282, 117.5, 70.5) = 70.5. The sample size is more than adequate so the formula can be used.
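The allocation of the sample under the null hypothesis and the sample size check can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not part of the original module; the function names `expected_frequencies` and `sample_size_adequate` are hypothetical.

```python
def expected_frequencies(n, null_proportions):
    """Allocate a sample of size n to categories using the proportions in H0."""
    if abs(sum(null_proportions) - 1.0) > 1e-9:
        raise ValueError("null proportions must sum to 1")
    return [n * p for p in null_proportions]


def sample_size_adequate(expected, minimum=5):
    """Large-sample condition: every expected frequency is at least `minimum`."""
    return min(expected) >= minimum


# Exercise survey: n = 470, H0 proportions 0.60, 0.25, 0.15
expected = expected_frequencies(470, [0.60, 0.25, 0.15])
print([round(e, 1) for e in expected])  # expected count in each category
print(sample_size_adequate(expected))   # True when the smallest expected count is >= 5
```

Keeping the check separate from the allocation makes it easy to reuse the same condition for any set of null proportions.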

- Step 3. Set up decision rule.

The decision rule for the $\chi^2$ test depends on the level of significance and the degrees of freedom, defined as degrees of freedom (df) = k-1 (where k is the number of response categories). If the null hypothesis is true, the observed and expected frequencies will be close in value and the $\chi^2$ statistic will be close to zero. If the null hypothesis is false, then the $\chi^2$ statistic will be large. Critical values can be found in a table of probabilities for the $\chi^2$ distribution. Here we have df=k-1=3-1=2 and a 5% level of significance. The appropriate critical value is 5.99, and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 5.99.
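For readers without a table at hand, the critical value for this particular case can be checked directly. The sketch below relies on a property that holds only for df = 2: the upper-tail probability of the chi-square distribution is exp(-x/2), so the critical value is -2 ln(α). It is an illustrative check, not a general-purpose routine.

```python
import math

alpha = 0.05

# For df = 2 only: P(chi-square > x) = exp(-x / 2),
# so solving exp(-x / 2) = alpha gives x = -2 * ln(alpha).
critical_value = -2.0 * math.log(alpha)

print(round(critical_value, 2))
```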

- Step 4. Compute the test statistic.

We now compute the expected frequencies using the sample size and the proportions specified in the null hypothesis. We then substitute the sample data (observed frequencies) and the expected frequencies into the formula for the test statistic identified in Step 2. The computations can be organized as follows.

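| | No Regular Exercise | Sporadic Exercise | Regular Exercise | Total |
|---|---|---|---|---|
| Observed Frequencies (O) | 255 | 125 | 90 | 470 |
| Expected Frequencies (E) | 470(0.60) = 282 | 470(0.25) = 117.5 | 470(0.15) = 70.5 | 470 |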

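Notice that the expected frequencies are taken to one decimal place and that the sum of the observed frequencies is equal to the sum of the expected frequencies. The test statistic is computed as follows:

$$\chi^2 = \frac{(255 - 282)^2}{282} + \frac{(125 - 117.5)^2}{117.5} + \frac{(90 - 70.5)^2}{70.5} = 2.59 + 0.48 + 5.39 = 8.46$$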

- Step 5. Conclusion.

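We reject $H_0$ because 8.46 > 5.99. We have statistically significant evidence at α=0.05 to show that $H_0$ is false, or that the distribution of responses is not 0.60, 0.25, 0.15. The p-value is p < 0.025 (with df=2, the value 8.46 falls between the tabled critical values 7.38 for α=0.025 and 9.21 for α=0.01).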
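The five steps for this example can be reproduced with a short script. The sketch below is a minimal illustration; the helper name `chi_square_gof` is hypothetical, and the p-value line uses the closed form exp(-x/2), which is valid only because df = 2 here.

```python
import math


def chi_square_gof(observed, null_proportions):
    """Return the goodness-of-fit statistic and the expected frequencies under H0."""
    n = sum(observed)
    expected = [n * p for p in null_proportions]
    statistic = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return statistic, expected


observed = [255, 125, 90]  # no regular, sporadic, regular exercise
statistic, expected = chi_square_gof(observed, [0.60, 0.25, 0.15])
df = len(observed) - 1

# Upper-tail probability; this closed form holds for df = 2 only.
p_value = math.exp(-statistic / 2)

print(round(statistic, 2), df, round(p_value, 3))
print("reject H0" if statistic > 5.99 else "do not reject H0")
```

For these data the upper-tail probability comes out a little under 0.015, consistent with rejecting at the 5% level.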

In the $\chi^2$ goodness-of-fit test, we conclude that either the distribution specified in $H_0$ is false (when we reject $H_0$) or that we do not have sufficient evidence to show that the distribution specified in $H_0$ is false (when we fail to reject $H_0$). Here, we reject $H_0$ and conclude that the distribution of responses to the exercise question following the implementation of the health promotion campaign was not the same as the distribution prior. The test itself does not provide details of how the distribution has shifted. A comparison of the observed and expected frequencies will provide some insight into the shift (when the null hypothesis is rejected). Does it appear that the health promotion campaign was effective?

Consider the following:

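| | No Regular Exercise | Sporadic Exercise | Regular Exercise | Total |
|---|---|---|---|---|
| Observed Frequencies (O) | 255 | 125 | 90 | 470 |
| Expected Frequencies (E) | 282 | 117.5 | 70.5 | 470 |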

If the null hypothesis were true (i.e., no change from the prior year) we would have expected more students to fall in the "No Regular Exercise" category and fewer in the "Regular Exercise" category. In the sample, 255/470 = 54% reported no regular exercise and 90/470 = 19% reported regular exercise. Thus, there is a shift toward more regular exercise following the implementation of the health promotion campaign. There is evidence of a statistical difference, but is this a meaningful difference? Is there room for improvement?

The National Center for Health Statistics (NCHS) provided data on the distribution of weight (in categories) among Americans in 2002. The distribution was based on specific values of body mass index (BMI) computed as weight in kilograms over height in meters squared. Underweight was defined as BMI< 18.5, Normal weight as BMI between 18.5 and 24.9, overweight as BMI between 25 and 29.9 and obese as BMI of 30 or greater. Americans in 2002 were distributed as follows: 2% Underweight, 39% Normal Weight, 36% Overweight, and 23% Obese. Suppose we want to assess whether the distribution of BMI is different in the Framingham Offspring sample. Using data from the n=3,326 participants who attended the seventh examination of the Offspring in the Framingham Heart Study we created the BMI categories as defined and observed the following:

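| | Underweight (BMI < 18.5) | Normal Weight (BMI 18.5-24.9) | Overweight (BMI 25.0-29.9) | Obese (BMI ≥ 30) | Total |
|---|---|---|---|---|---|
| # of Participants | 20 | 932 | 1374 | 1000 | 3326 |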

- Step 1. Set up hypotheses and determine level of significance.

$H_0: p_1 = 0.02,\ p_2 = 0.39,\ p_3 = 0.36,\ p_4 = 0.23$ or equivalently

$H_0$: Distribution of responses is 0.02, 0.39, 0.36, 0.23

$H_1$: $H_0$ is false. α=0.05

The formula for the test statistic is:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

We must assess whether the sample size is adequate. Specifically, we need to check $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=3,326 and the proportions specified in the null hypothesis are 0.02, 0.39, 0.36 and 0.23. Thus, min(3326(0.02), 3326(0.39), 3326(0.36), 3326(0.23)) = min(66.5, 1297.1, 1197.4, 765.0) = 66.5. The sample size is more than adequate, so the formula can be used.

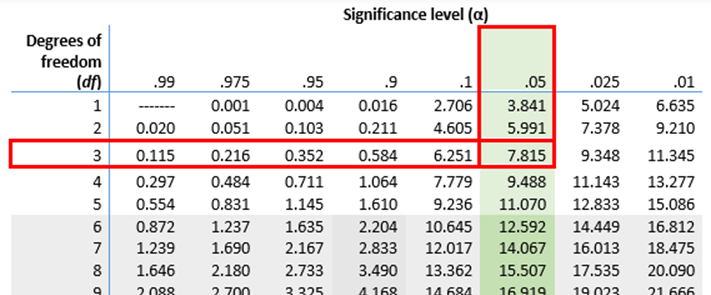

Here we have df=k-1=4-1=3 and a 5% level of significance. The appropriate critical value is 7.81 and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 7.81.

We now compute the expected frequencies using the sample size and the proportions specified in the null hypothesis. We then substitute the sample data (observed frequencies) into the formula for the test statistic identified in Step 2. We organize the computations in the following table.

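| | Underweight (BMI < 18.5) | Normal Weight (BMI 18.5-24.9) | Overweight (BMI 25.0-29.9) | Obese (BMI ≥ 30) | Total |
|---|---|---|---|---|---|
| Observed Frequencies (O) | 20 | 932 | 1374 | 1000 | 3326 |
| Expected Frequencies (E) | 66.5 | 1297.1 | 1197.4 | 765.0 | 3326 |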

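The test statistic is computed as follows:

$$\chi^2 = \frac{(20 - 66.5)^2}{66.5} + \frac{(932 - 1297.1)^2}{1297.1} + \frac{(1374 - 1197.4)^2}{1197.4} + \frac{(1000 - 765.0)^2}{765.0} = 32.52 + 102.77 + 26.05 + 72.19 = 233.53$$

We reject $H_0$ because 233.53 > 7.81. We have statistically significant evidence at α=0.05 to show that $H_0$ is false or that the distribution of BMI in Framingham is different from the national data reported in 2002, p < 0.005.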

Again, the $\chi^2$ goodness-of-fit test allows us to assess whether the distribution of responses "fits" a specified distribution. Here we show that the distribution of BMI in the Framingham Offspring Study is different from the national distribution. To understand the nature of the difference we can compare observed and expected frequencies or observed and expected proportions (or percentages). The frequencies are large because of the large sample size, so percentages are easier to compare. The observed percentages of participants in the Framingham sample are as follows: 0.6% underweight, 28% normal weight, 41% overweight and 30% obese. In the Framingham Offspring sample there are higher percentages of overweight and obese persons (41% and 30% in Framingham as compared to 36% and 23% in the national data), and lower percentages of underweight and normal weight persons (0.6% and 28% in Framingham as compared to 2% and 39% in the national data). Are these meaningful differences?
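The comparison of observed and null percentages, together with each category's contribution to the test statistic, can be tabulated as in the sketch below. It is an illustration only. Note that it keeps the expected frequencies unrounded, so the statistic it prints differs slightly from the hand computation, which rounds the expected frequencies to one decimal place.

```python
categories = ["Underweight", "Normal weight", "Overweight", "Obese"]
observed = [20, 932, 1374, 1000]
null_proportions = [0.02, 0.39, 0.36, 0.23]  # national distribution, 2002

n = sum(observed)
expected = [n * p for p in null_proportions]

# Contribution of each category to the chi-square statistic: (O - E)^2 / E
contributions = [(o - e) ** 2 / e for o, e in zip(observed, expected)]
statistic = sum(contributions)

for name, o, p0, c in zip(categories, observed, null_proportions, contributions):
    print(f"{name:14s} observed {100 * o / n:5.1f}%   H0 {100 * p0:5.1f}%   contribution {c:7.2f}")
print(f"chi-square = {statistic:.2f}")
```

Listing the contributions shows which categories drive the rejection; here the normal weight category contributes the most.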

In the module on hypothesis testing for means and proportions, we discussed hypothesis testing applications with a dichotomous outcome variable in a single population. We presented a test using a test statistic Z to test whether an observed (sample) proportion differed significantly from a historical or external comparator. The chi-square goodness-of-fit test can also be used with a dichotomous outcome and the results are mathematically equivalent.

In the prior module, we considered the following example. Here we show the equivalence to the chi-square goodness-of-fit test.

The NCHS report indicated that in 2002, 75% of children aged 2 to 17 saw a dentist in the past year. An investigator wants to assess whether use of dental services is similar in children living in the city of Boston. A sample of 125 children aged 2 to 17 living in Boston are surveyed and 64 reported seeing a dentist over the past 12 months. Is there a significant difference in use of dental services between children living in Boston and the national data?

We presented the following approach to the test using a Z statistic.

- Step 1. Set up hypotheses and determine level of significance

$H_0: p = 0.75$

$H_1: p \neq 0.75$ α=0.05

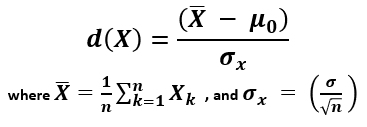

We must first check that the sample size is adequate. Specifically, we need to check that $\min(np_0, n(1-p_0)) \geq 5$. Here min(125(0.75), 125(1-0.75)) = min(93.75, 31.25) = 31.25. The sample size is more than adequate so the following formula can be used:

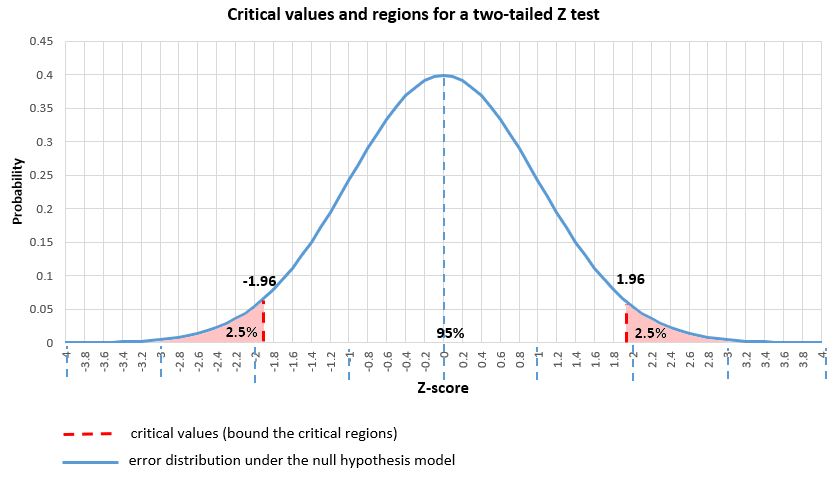

This is a two-tailed test, using a Z statistic and a 5% level of significance. Reject $H_0$ if Z < -1.960 or if Z > 1.960.

We now substitute the sample data into the formula for the test statistic identified in Step 2. The sample proportion is:

$$\hat{p} = \frac{x}{n} = \frac{64}{125} = 0.512$$

and the test statistic is:

$$Z = \frac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1 - p_0)}{n}}} = \frac{0.512 - 0.75}{\sqrt{\dfrac{0.75(0.25)}{125}}} = -6.15$$

We reject $H_0$ because -6.15 < -1.960. We have statistically significant evidence at α=0.05 to show that there is a difference in the use of dental service by children living in Boston as compared to the national data (p < 0.0001).

We now conduct the same test using the chi-square goodness-of-fit test. First, we summarize our sample data as follows:

| | Saw a Dentist in Past 12 Months | Did Not See a Dentist in Past 12 Months | Total |
|---|---|---|---|
| # of Participants | 64 | 61 | 125 |

$H_0: p_1 = 0.75,\ p_2 = 0.25$ or equivalently $H_0$: Distribution of responses is 0.75, 0.25

We must assess whether the sample size is adequate. Specifically, we need to check $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=125 and the proportions specified in the null hypothesis are 0.75, 0.25. Thus, min(125(0.75), 125(0.25)) = min(93.75, 31.25) = 31.25. The sample size is more than adequate so the formula can be used.

Here we have df=k-1=2-1=1 and a 5% level of significance. The appropriate critical value is 3.84, and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 3.84. (Note that $1.96^2 = 3.84$, where 1.96 was the critical value used in the Z test for proportions shown above.)

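| | Saw a Dentist in Past 12 Months | Did Not See a Dentist in Past 12 Months | Total |
|---|---|---|---|
| Observed Frequencies (O) | 64 | 61 | 125 |
| Expected Frequencies (E) | 93.75 | 31.25 | 125 |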

The test statistic is computed as follows:

$$\chi^2 = \frac{(64 - 93.75)^2}{93.75} + \frac{(61 - 31.25)^2}{31.25} = 9.44 + 28.32 = 37.8$$

(Note that $(-6.15)^2 = 37.8$, where -6.15 was the value of the Z statistic in the test for proportions shown above.)

We reject $H_0$ because 37.8 > 3.84. We have statistically significant evidence at α=0.05 to show that there is a difference in the use of dental service by children living in Boston as compared to the national data (p < 0.0001). This is the same conclusion we reached when we conducted the test using the Z test above. With a dichotomous outcome, $Z^2 = \chi^2$. In statistics, there are often several approaches that can be used to test hypotheses.
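The equivalence between the two approaches can be verified numerically. The sketch below computes the one-sample Z statistic and the goodness-of-fit statistic from the same dental data and compares $Z^2$ with $\chi^2$; variable names are illustrative.

```python
import math

n, x, p0 = 125, 64, 0.75  # sample size, children who saw a dentist, null proportion

# One-sample Z test for a proportion
p_hat = x / n
z = (p_hat - p0) / math.sqrt(p0 * (1 - p0) / n)

# Chi-square goodness-of-fit test on the same data, treated as two categories
observed = [x, n - x]
expected = [n * p0, n * (1 - p0)]
chi_sq = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

print(round(z, 2), round(z ** 2, 1), round(chi_sq, 1))
```

The squared Z statistic and the chi-square statistic agree up to floating-point rounding, which is why the two tests always lead to the same decision for a dichotomous outcome.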

Tests for Two or More Independent Samples, Discrete Outcome

Here we extend the application of the chi-square test to the case with two or more independent comparison groups. Specifically, the outcome of interest is discrete with two or more responses and the responses can be ordered or unordered (i.e., the outcome can be dichotomous, ordinal or categorical). The goal of the analysis is to compare the distribution of responses to the discrete outcome variable among the independent comparison groups.

The test is called the $\chi^2$ test of independence and the null hypothesis is that there is no difference in the distribution of responses to the outcome across comparison groups. This is often stated as follows: The outcome variable and the grouping variable (e.g., the comparison treatments or comparison groups) are independent (hence the name of the test). Independence here implies homogeneity in the distribution of the outcome among comparison groups.

The null hypothesis in the $\chi^2$ test of independence is often stated in words as: $H_0$: The distribution of the outcome is independent of the groups. The alternative or research hypothesis is that there is a difference in the distribution of responses to the outcome variable among the comparison groups (i.e., that the distribution of responses "depends" on the group). In order to test the hypothesis, we measure the discrete outcome variable in each participant in each comparison group. The data of interest are the observed frequencies (or number of participants in each response category in each group). The formula for the test statistic for the $\chi^2$ test of independence is given below.

Test Statistic for Testing $H_0$: Distribution of outcome is independent of groups

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

and we find the critical value in a table of probabilities for the chi-square distribution with df=(r-1)*(c-1).

Here O = observed frequency, E=expected frequency in each of the response categories in each group, r = the number of rows in the two-way table and c = the number of columns in the two-way table. r and c correspond to the number of comparison groups and the number of response options in the outcome (see below for more details). The observed frequencies are the sample data and the expected frequencies are computed as described below. The test statistic is appropriate for large samples, defined as expected frequencies of at least 5 in each of the response categories in each group.

The data for the χ 2 test of independence are organized in a two-way table. The outcome and grouping variable are shown in the rows and columns of the table. The sample table below illustrates the data layout. The table entries (blank below) are the numbers of participants in each group responding to each response category of the outcome variable.

Table - Possible outcomes are listed in the columns; the groups being compared are listed in the rows.

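| | Response Option 1 | Response Option 2 | ... | Response Option c | Row Totals |
|---|---|---|---|---|---|
| Group 1 | | | | | |
| Group 2 | | | | | |
| ... | | | | | |
| Group r | | | | | |
| Column Totals | | | | | N |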

In the table above, the grouping variable is shown in the rows of the table; r denotes the number of independent groups. The outcome variable is shown in the columns of the table; c denotes the number of response options in the outcome variable. Each combination of a row (group) and column (response) is called a cell of the table. The table has r*c cells and is sometimes called an r x c ("r by c") table. For example, if there are 4 groups and 5 categories in the outcome variable, the data are organized in a 4 X 5 table. The row and column totals are shown along the right-hand margin and the bottom of the table, respectively. The total sample size, N, can be computed by summing the row totals or the column totals. Similar to ANOVA, N does not refer to a population size here but rather to the total sample size in the analysis. The sample data can be organized into a table like the above. The numbers of participants within each group who select each response option are shown in the cells of the table and these are the observed frequencies used in the test statistic.

The test statistic for the χ 2 test of independence involves comparing observed (sample data) and expected frequencies in each cell of the table. The expected frequencies are computed assuming that the null hypothesis is true. The null hypothesis states that the two variables (the grouping variable and the outcome) are independent. The definition of independence is as follows:

Two events, A and B, are independent if P(A|B) = P(A), or equivalently, if P(A and B) = P(A) P(B).

The second statement indicates that if two events, A and B, are independent then the probability of their intersection can be computed by multiplying the probability of each individual event. To conduct the χ 2 test of independence, we need to compute expected frequencies in each cell of the table. Expected frequencies are computed by assuming that the grouping variable and outcome are independent (i.e., under the null hypothesis). Thus, if the null hypothesis is true, using the definition of independence:

P(Group 1 and Response Option 1) = P(Group 1) P(Response Option 1).

The above states that the probability that an individual is in Group 1 and their outcome is Response Option 1 is computed by multiplying the probability that person is in Group 1 by the probability that a person is in Response Option 1. To conduct the $\chi^2$ test of independence, we need expected frequencies and not expected probabilities. To convert the above probability to a frequency, we multiply by N. Consider the following small example.

| | Response 1 | Response 2 | Response 3 | Total |
|---|---|---|---|---|
| Group 1 | 10 | 8 | 7 | 25 |
| Group 2 | 22 | 15 | 13 | 50 |
| Group 3 | 30 | 28 | 17 | 75 |
| Total | 62 | 51 | 37 | 150 |

The data shown above are measured in a sample of size N=150. The frequencies in the cells of the table are the observed frequencies. If Group and Response are independent, then we can compute the probability that a person in the sample is in Group 1 and Response category 1 using:

P(Group 1 and Response 1) = P(Group 1) P(Response 1),

P(Group 1 and Response 1) = (25/150) (62/150) = 0.069.

Thus if Group and Response are independent we would expect 6.9% of the sample to be in the top left cell of the table (Group 1 and Response 1). The expected frequency is 150(0.069) = 10.4. We could do the same for Group 2 and Response 1:

P(Group 2 and Response 1) = P(Group 2) P(Response 1),

P(Group 2 and Response 1) = (50/150) (62/150) = 0.138.

The expected frequency in Group 2 and Response 1 is 150(0.138) = 20.7.

Thus, the formula for determining the expected cell frequencies in the χ 2 test of independence is as follows:

Expected Cell Frequency = (Row Total * Column Total)/N.

The above computes the expected frequency in one step rather than computing the expected probability first and then converting to a frequency.
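The one-step formula is easy to apply to a whole table at once. The sketch below computes every expected cell frequency for the small 3 x 3 example; the function name `expected_table` is hypothetical. Because it does not round the probability to three decimals first, the top-left cell comes out as 10.33 rather than the 10.4 obtained in the two-step calculation.

```python
def expected_table(observed):
    """Expected cell frequencies under independence: row total * column total / N."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]


# Rows are Groups 1-3, columns are Responses 1-3
observed = [
    [10, 8, 7],
    [22, 15, 13],
    [30, 28, 17],
]

expected = expected_table(observed)
for row in expected:
    print([round(e, 2) for e in row])
```

Each row and each column of the expected table sums to the same total as the corresponding row or column of the observed table.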

In a prior example we evaluated data from a survey of university graduates which assessed, among other things, how frequently they exercised. The survey was completed by 470 graduates. In the prior example we used the χ 2 goodness-of-fit test to assess whether there was a shift in the distribution of responses to the exercise question following the implementation of a health promotion campaign on campus. We specifically considered one sample (all students) and compared the observed distribution to the distribution of responses the prior year (a historical control). Suppose we now wish to assess whether there is a relationship between exercise on campus and students' living arrangements. As part of the same survey, graduates were asked where they lived their senior year. The response options were dormitory, on-campus apartment, off-campus apartment, and at home (i.e., commuted to and from the university). The data are shown below.

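| | No Regular Exercise | Sporadic Exercise | Regular Exercise | Total |
|---|---|---|---|---|
| Dormitory | 32 | 30 | 28 | 90 |
| On-Campus Apartment | 74 | 64 | 42 | 180 |
| Off-Campus Apartment | 110 | 25 | 15 | 150 |
| At Home | 39 | 6 | 5 | 50 |
| Total | 255 | 125 | 90 | 470 |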

Based on the data, is there a relationship between exercise and students' living arrangements? Do you think where a person lives affects their exercise status? Here we have four independent comparison groups (living arrangement) and a discrete (ordinal) outcome variable with three response options. We specifically want to test whether living arrangement and exercise are independent. We will run the test using the five-step approach.

$H_0$: Living arrangement and exercise are independent

$H_1$: $H_0$ is false. α=0.05

The null and research hypotheses are written in words rather than in symbols. The research hypothesis is that the grouping variable (living arrangement) and the outcome variable (exercise) are dependent or related.

- Step 2. Select the appropriate test statistic.

The formula for the test statistic is:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

The condition for appropriate use of the above test statistic is that each expected frequency is at least 5. In Step 4 we will compute the expected frequencies and we will ensure that the condition is met.

The decision rule depends on the level of significance and the degrees of freedom, defined as df = (r-1)(c-1), where r and c are the numbers of rows and columns in the two-way data table. The row variable is the living arrangement and there are 4 arrangements considered, thus r=4. The column variable is exercise and 3 responses are considered, thus c=3. For this test, df=(4-1)(3-1)=3(2)=6. Again, with $\chi^2$ tests there are no upper, lower or two-tailed tests. If the null hypothesis is true, the observed and expected frequencies will be close in value and the $\chi^2$ statistic will be close to zero. If the null hypothesis is false, then the $\chi^2$ statistic will be large. The rejection region for the $\chi^2$ test of independence is always in the upper (right-hand) tail of the distribution. For df=6 and a 5% level of significance, the appropriate critical value is 12.59 and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 12.59.
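A tabled critical value can be sanity-checked by computing the upper-tail probability at that value. The sketch below uses a closed form that holds only when df is even; the function name `chi2_upper_tail_even_df` is hypothetical, and for odd df a statistical library or a printed table would be needed instead.

```python
import math


def chi2_upper_tail_even_df(x, df):
    """P(chi-square > x) for even df, using the finite-sum closed form:
    exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total


# The tail area beyond the tabled critical value should be close to 0.05.
print(round(chi2_upper_tail_even_df(12.59, 6), 4))
```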

We now compute the expected frequencies using the formula,

Expected Frequency = (Row Total * Column Total)/N.

The computations can be organized in a two-way table. Each cell of the table shows the observed frequency followed by the expected frequency in parentheses.

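| | No Regular Exercise | Sporadic Exercise | Regular Exercise | Total |
|---|---|---|---|---|
| Dormitory | 32 (48.8) | 30 (23.9) | 28 (17.2) | 90 |
| On-Campus Apartment | 74 (97.7) | 64 (47.9) | 42 (34.5) | 180 |
| Off-Campus Apartment | 110 (81.4) | 25 (39.9) | 15 (28.7) | 150 |
| At Home | 39 (27.1) | 6 (13.3) | 5 (9.6) | 50 |
| Total | 255 | 125 | 90 | 470 |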

Notice that the expected frequencies are taken to one decimal place and that the sums of the observed frequencies are equal to the sums of the expected frequencies in each row and column of the table.

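Recall in Step 2 a condition for the appropriate use of the test statistic was that each expected frequency is at least 5. This is true for this sample (the smallest expected frequency is 9.6) and therefore it is appropriate to use the test statistic.

The test statistic is computed as follows:

$$\chi^2 = \frac{(32-48.8)^2}{48.8} + \frac{(30-23.9)^2}{23.9} + \frac{(28-17.2)^2}{17.2} + \frac{(74-97.7)^2}{97.7} + \frac{(64-47.9)^2}{47.9} + \frac{(42-34.5)^2}{34.5} + \frac{(110-81.4)^2}{81.4} + \frac{(25-39.9)^2}{39.9} + \frac{(15-28.7)^2}{28.7} + \frac{(39-27.1)^2}{27.1} + \frac{(6-13.3)^2}{13.3} + \frac{(5-9.6)^2}{9.6}$$

$$\chi^2 = 5.78 + 1.56 + 6.78 + 5.75 + 5.41 + 1.63 + 10.05 + 5.56 + 6.54 + 5.23 + 4.01 + 2.20 = 60.5$$

We reject $H_0$ because 60.5 > 12.59. We have statistically significant evidence at α=0.05 to show that $H_0$ is false or that living arrangement and exercise are not independent (i.e., they are dependent or related), p < 0.005.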
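The whole test of independence for the living arrangement data can be sketched as follows. The function name `chi_square_independence` is hypothetical, and the row order (dormitory, on-campus apartment, off-campus apartment, at home) is assumed from the order in which the response options are listed in the text. Expected frequencies are kept unrounded, so the statistic differs slightly from the hand computation based on one-decimal expected values.

```python
def chi_square_independence(table):
    """Return (statistic, df, expected) for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    statistic = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
    )
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, df, expected


# Columns: no regular, sporadic, regular exercise
observed = [
    [32, 30, 28],    # dormitory
    [74, 64, 42],    # on-campus apartment
    [110, 25, 15],   # off-campus apartment
    [39, 6, 5],      # at home
]

statistic, df, expected = chi_square_independence(observed)
smallest_expected = min(min(row) for row in expected)

print(round(statistic, 1), df, round(smallest_expected, 1))
print("reject H0" if statistic > 12.59 else "do not reject H0")
```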

Again, the χ 2 test of independence is used to test whether the distribution of the outcome variable is similar across the comparison groups. Here we rejected H 0 and concluded that the distribution of exercise is not independent of living arrangement, or that there is a relationship between living arrangement and exercise. The test provides an overall assessment of statistical significance. When the null hypothesis is rejected, it is important to review the sample data to understand the nature of the relationship. Consider again the sample data.

Because there are different numbers of students in each living situation, it makes the comparisons of exercise patterns difficult on the basis of the frequencies alone. The following table displays the percentages of students in each exercise category by living arrangement. The percentages sum to 100% in each row of the table. For comparison purposes, percentages are also shown for the total sample along the bottom row of the table.

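| | No Regular Exercise | Sporadic Exercise | Regular Exercise |
|---|---|---|---|
| Dormitory | 36% | 33% | 31% |
| On-Campus Apartment | 41% | 36% | 23% |
| Off-Campus Apartment | 73% | 17% | 10% |
| At Home | 78% | 12% | 10% |
| Total | 54% | 27% | 19% |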

From the above, it is clear that higher percentages of students living in dormitories and in on-campus apartments reported regular exercise (31% and 23%) as compared to students living in off-campus apartments and at home (10% each).
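Row percentages like these can be produced directly from the observed counts. The sketch below is illustrative; the row labels follow the order in which the living arrangements are listed in the text, which is an assumption about the original table layout.

```python
# Columns: no regular, sporadic, regular exercise
living_arrangements = {
    "Dormitory": [32, 30, 28],
    "On-campus apartment": [74, 64, 42],
    "Off-campus apartment": [110, 25, 15],
    "At home": [39, 6, 5],
}


def row_percentages(counts):
    """Percentage of the row total in each category, rounded to whole percents."""
    total = sum(counts)
    return [round(100 * c / total) for c in counts]


percentages = {name: row_percentages(c) for name, c in living_arrangements.items()}

# Percentages for the total sample, from the column totals
overall = row_percentages([sum(col) for col in zip(*living_arrangements.values())])

for name, pct in percentages.items():
    print(f"{name:22s} {pct}")
print(f"{'Total':22s} {overall}")
```

Converting to percentages within each row removes the effect of the unequal group sizes, which is what makes the exercise patterns comparable across living arrangements.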

Test Yourself

Pancreaticoduodenectomy (PD) is a procedure that is associated with considerable morbidity. A study was recently conducted on 553 patients who had a successful PD between January 2000 and December 2010 to determine whether their Surgical Apgar Score (SAS) is related to 30-day perioperative morbidity and mortality. The table below gives the number of patients experiencing no, minor, or major morbidity by SAS category.

| Surgical Apgar Score | No Morbidity | Minor Morbidity | Major Morbidity |
|---|---|---|---|
| 0-4 | 21 | 20 | 16 |
| 5-6 | 135 | 71 | 35 |
| 7-10 | 158 | 62 | 35 |

Question: What would be an appropriate statistical test to examine whether there is an association between Surgical Apgar Score and patient outcome? Using 14.13 as the value of the test statistic for these data, carry out the appropriate test at a 5% level of significance. Show all parts of your test.

In the module on hypothesis testing for means and proportions, we discussed hypothesis testing applications with a dichotomous outcome variable and two independent comparison groups. We presented a test using a test statistic Z to test for equality of independent proportions. The chi-square test of independence can also be used with a dichotomous outcome and the results are mathematically equivalent.

In the prior module, we considered the following example. Here we show the equivalence to the chi-square test of independence.

A randomized trial is designed to evaluate the effectiveness of a newly developed pain reliever designed to reduce pain in patients following joint replacement surgery. The trial compares the new pain reliever to the pain reliever currently in use (called the standard of care). A total of 100 patients undergoing joint replacement surgery agreed to participate in the trial. Patients were randomly assigned to receive either the new pain reliever or the standard pain reliever following surgery and were blind to the treatment assignment. Before receiving the assigned treatment, patients were asked to rate their pain on a scale of 0-10 with higher scores indicative of more pain. Each patient was then given the assigned treatment and after 30 minutes was again asked to rate their pain on the same scale. The primary outcome was a reduction in pain of 3 or more scale points (defined by clinicians as a clinically meaningful reduction). The following data were observed in the trial.

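| Treatment group | n | Number with reduction of 3+ points | Proportion with reduction of 3+ points |
|---|---|---|---|
| New pain reliever | 50 | 23 | 0.46 |
| Standard pain reliever | 50 | 11 | 0.22 |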

We tested whether there was a significant difference in the proportions of patients reporting a meaningful reduction (i.e., a reduction of 3 or more scale points) using a Z statistic, as follows.

$H_0: p_1 = p_2$

$H_1: p_1 \neq p_2$, α = 0.05

Here the new or experimental pain reliever is group 1 and the standard pain reliever is group 2.

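We must first check that the sample size is adequate. Specifically, we need to ensure that we have at least 5 successes and 5 failures in each comparison group, or that:

$$\min\left[n_1\hat{p}_1,\; n_1(1-\hat{p}_1),\; n_2\hat{p}_2,\; n_2(1-\hat{p}_2)\right] \geq 5$$

In this example, we have

$$\min\left[50(0.46),\; 50(1-0.46),\; 50(0.22),\; 50(1-0.22)\right] = \min[23,\; 27,\; 11,\; 39] = 11$$

Therefore, the sample size is adequate, so the following formula can be used:

$$Z = \frac{\hat{p}_1 - \hat{p}_2}{\sqrt{\hat{p}(1-\hat{p})\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}}$$

Reject $H_0$ if Z < -1.960 or if Z > 1.960.

We now substitute the sample data into the formula for the test statistic identified in Step 2. We first compute the overall proportion of successes:

$$\hat{p} = \frac{x_1 + x_2}{n_1 + n_2} = \frac{23 + 11}{50 + 50} = \frac{34}{100} = 0.34$$

We now substitute to compute the test statistic.

$$Z = \frac{0.46 - 0.22}{\sqrt{0.34(1-0.34)\left(\frac{1}{50} + \frac{1}{50}\right)}} = \frac{0.24}{0.0947} = 2.53$$

- Step 5. Conclusion.

We reject $H_0$ because 2.53 > 1.960. At α = 0.05, there is statistically significant evidence of a difference between the new and standard pain relievers in the proportion of patients reporting a meaningful reduction in pain.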

We now conduct the same test using the chi-square test of independence.

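$H_0$: Treatment and outcome (meaningful reduction in pain) are independent

$H_1$: $H_0$ is false. α = 0.05

The formula for the test statistic is:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

where O is the observed frequency and E the expected frequency in each cell of the table.

For this test, df=(2-1)(2-1)=1. At a 5% level of significance, the appropriate critical value is 3.84 and the decision rule is as follows: Reject $H_0$ if $\chi^2 > 3.84$. (Note that $1.96^2 = 3.84$, where 1.96 was the critical value used in the Z test for proportions shown above.)

We now compute the expected frequencies using:

$$\text{Expected frequency} = \frac{\text{row total} \times \text{column total}}{N}$$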

The computations can be organized in a two-way table. Each cell shows the observed frequency followed by the expected frequency in parentheses.

| Treatment group | Meaningful reduction (3+ points) | No meaningful reduction | Total |
|---|---|---|---|
| New pain reliever | 23 (17.0) | 27 (33.0) | 50 |
| Standard pain reliever | 11 (17.0) | 39 (33.0) | 50 |
| Total | 34 | 66 | 100 |

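A condition for the appropriate use of the test statistic is that each expected frequency is at least 5. This is true for this sample (the smallest expected frequency is 17.0) and therefore it is appropriate to use the test statistic.

$$\chi^2 = \frac{(23-17)^2}{17} + \frac{(27-33)^2}{33} + \frac{(11-17)^2}{17} + \frac{(39-33)^2}{33} = 2.12 + 1.09 + 2.12 + 1.09 = 6.4$$

We reject $H_0$ because 6.4 > 3.84, which is the same conclusion reached with the Z test.

(Note that $(2.53)^2 = 6.4$, where 2.53 was the value of the Z statistic in the test for proportions shown above.)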
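The equivalence between the two tests can also be checked numerically. The following is a minimal sketch in Python using only the standard library; the variable names are illustrative and not part of the original module. It recomputes the pooled Z statistic and the chi-square statistic from the trial counts and shows that the square of Z matches the chi-square value.

```python
import math

# Observed counts from the pain-reliever trial.
# Rows: new pain reliever, standard pain reliever.
# Columns: meaningful reduction, no meaningful reduction.
observed = [[23, 27], [11, 39]]

n1, n2 = sum(observed[0]), sum(observed[1])
x1, x2 = observed[0][0], observed[1][0]

# Two-sample Z test for proportions, using the pooled proportion.
p1, p2 = x1 / n1, x2 / n2
p_pool = (x1 + x2) / (n1 + n2)
z = (p1 - p2) / math.sqrt(p_pool * (1 - p_pool) * (1 / n1 + 1 / n2))

# Chi-square test of independence on the same 2 x 2 table.
row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
n = sum(row_totals)

chi_sq = 0.0
for i in range(2):
    for j in range(2):
        expected = row_totals[i] * col_totals[j] / n
        chi_sq += (observed[i][j] - expected) ** 2 / expected

print(f"Z = {z:.2f}, Z squared = {z * z:.2f}, chi-square = {chi_sq:.2f}")
```

Because the two statistics are algebraically tied for a 2 x 2 table, the decision at α = 0.05 is the same whichever test is used.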

Chi-Squared Tests in R

The video below by Mike Marin demonstrates how to perform chi-squared tests in the R programming language.

Answer to Problem on Pancreaticoduodenectomy and Surgical Apgar Scores

We have 3 independent comparison groups (Surgical Apgar Score) and a categorical outcome variable (morbidity/mortality). We can run a Chi-Squared test of independence.

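$H_0$: Apgar scores and patient outcome are independent of one another.

$H_A$: Apgar scores and patient outcome are not independent.

With 3 score categories and 3 outcome categories, df = (3-1)(3-1) = 4, so the critical value at a 5% level of significance is 9.49.

Chi-squared = 14.13

Since 14.13 is greater than 9.49, we reject $H_0$.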

There is an association between Apgar scores and patient outcome. The lowest Apgar score group (0 to 4) experienced the highest percentage of major morbidity or mortality (16 out of 57=28%) compared to the other Apgar score groups.
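The test statistic given in the question can be reproduced from the table of counts. The sketch below is one way to do it in plain Python; the function name `chi_square_independence` is a hypothetical helper, not something from the original module, and the p-value uses a closed form that holds only for 4 degrees of freedom.

```python
import math

# Rows: SAS 0-4, 5-6, 7-10.
# Columns: no morbidity, minor morbidity, major morbidity or mortality.
observed = [
    [21, 20, 16],
    [135, 71, 35],
    [158, 62, 35],
]


def chi_square_independence(table):
    """Return (statistic, degrees of freedom) for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (obs - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


stat, df = chi_square_independence(observed)

# For df = 4 the chi-square upper-tail probability has the closed form
# P(X > x) = exp(-x/2) * (1 + x/2).
p_value = math.exp(-stat / 2) * (1 + stat / 2)

# Percentage with major morbidity or mortality in each SAS group.
pct_major = [100 * row[2] / sum(row) for row in observed]

print(f"chi-square = {stat:.2f}, df = {df}, p = {p_value:.4f}")
print("percent major morbidity/mortality by SAS group:",
      [round(p, 1) for p in pct_major])
```

The statistic exceeds the critical value of 9.49, and the row percentages show where the association comes from: the 0-4 group has roughly twice the rate of major morbidity or mortality of the other two groups.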

Understanding the Null Hypothesis in Chi-Square

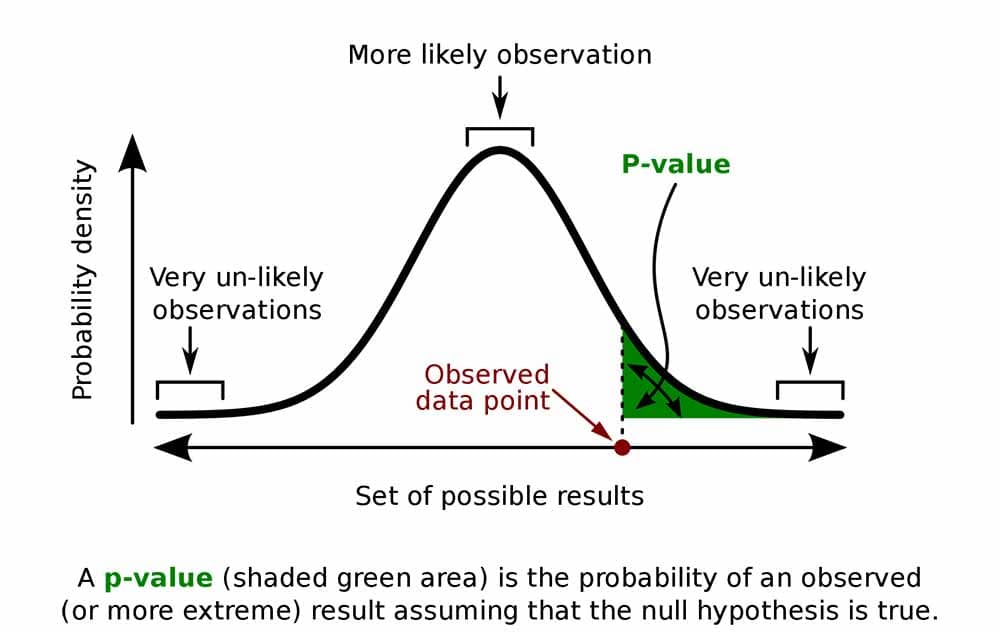

The null hypothesis in chi square testing suggests no significant difference between a study’s observed and expected frequencies. It assumes any observed difference is due to chance and not because of a meaningful statistical relationship.

Introduction

The chi-square test is a valuable tool in statistical analysis. It’s a non-parametric test applied when the data are qualitative or categorical. This test helps to establish whether there is a significant association between 2 categorical variables in a sample population.

Central to any chi-square test is the concept of the null hypothesis. In the context of chi-square, the null hypothesis assumes no significant difference exists between the categories’ observed and expected frequencies. Any difference seen is likely due to chance or random error rather than a meaningful statistical difference.

- The chi-square null hypothesis assumes no significant difference between observed and expected frequencies.

- Failing to reject the null hypothesis doesn’t prove it true, only that data lacks strong evidence against it.

- A p-value < the significance level indicates a significant association between variables.

Understanding the Concept of Null Hypothesis in Chi Square

The null hypothesis in chi-square tests is essentially a statement of no effect or no relationship. When it comes to categorical data, it indicates that the distribution of categories for one variable is not affected by the distribution of categories of the other variable.

For example, if we compare the preference for different types of fruit among men and women, the null hypothesis would state that the preference is independent of gender. The alternative hypothesis, on the other hand, would suggest a dependency between the two.

Steps to Formulate the Null Hypothesis in Chi-Square Tests

Formulating the null hypothesis is a critical step in any chi-square test. First, identify the variables being tested. Then, once the variables are determined, the null hypothesis can be formulated to state no association between them.

Next, collect your data. This data must be frequencies or counts of categories, not percentages or averages. Once the data is collected, you can calculate the expected frequency for each category under the null hypothesis.

Finally, use the chi-square formula to calculate the chi-square statistic. This will help determine whether to reject or fail to reject the null hypothesis.

| Step | Description |

|---|---|

| 1. Identify Variables | Determine the variables being tested in your study. |

| 2. State the Null Hypothesis | Formulate the null hypothesis to state that there is no association between the variables. |

| 3. Collect Data | Gather your data. Remember, this must be frequencies or counts of categories, not percentages or averages. |

| 4. Calculate Expected Frequencies | Under the null hypothesis, calculate the expected frequency for each category. |

| 5. Compute Chi Square Statistic | Use the chi square formula to calculate the chi square statistic. This will help determine whether to reject or fail to reject the null hypothesis. |

Practical Example and Case Study

Consider a study evaluating whether smoking status is independent of a lung cancer diagnosis. The null hypothesis would state that smoking status (smoker or non-smoker) is independent of cancer diagnosis (yes or no).

If we find a p-value less than our significance level (typically 0.05) after conducting the chi-square test, we would reject the null hypothesis and conclude that smoking status is not independent of lung cancer diagnosis, suggesting a significant association between the two.

Observed Table

| Smoking Status | Cancer Diagnosis | No Cancer Diagnosis |

|---|---|---|

| Smoker | 70 | 30 |

| Non-Smoker | 20 | 80 |

Expected Table

| Smoking Status | Cancer Diagnosis | No Cancer Diagnosis |

|---|---|---|

| Smoker | 45 | 55 |

| Non-Smoker | 45 | 55 |
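Under the null hypothesis of independence, each expected count is the row total times the column total divided by the grand total. The sketch below applies that rule to the observed table and then computes the chi-square statistic; it is an illustrative calculation with hypothetical variable names, using only the Python standard library. The p-value line relies on a closed form that is valid for 1 degree of freedom.

```python
import math

# Observed counts: rows are smoking status, columns are diagnosis.
rows = ["Smoker", "Non-Smoker"]
cols = ["Cancer Diagnosis", "No Cancer Diagnosis"]
observed = [[70, 30], [20, 80]]

row_totals = [sum(r) for r in observed]           # 100, 100
col_totals = [sum(c) for c in zip(*observed)]     # 90, 110
n = sum(row_totals)                               # 200

# Expected count = row total * column total / grand total.
expected = [[rt * ct / n for ct in col_totals] for rt in row_totals]

statistic = sum(
    (observed[i][j] - expected[i][j]) ** 2 / expected[i][j]
    for i in range(len(rows))
    for j in range(len(cols))
)
df = (len(rows) - 1) * (len(cols) - 1)

# For df = 1, P(X > x) = erfc(sqrt(x / 2)).
p_value = math.erfc(math.sqrt(statistic / 2))

for name, exp_row in zip(rows, expected):
    print(name, exp_row)
print(f"chi-square = {statistic:.2f}, df = {df}, p = {p_value:.2e}")
```

With counts this far from the expected values, the p-value is far below 0.05, so the null hypothesis of independence would be rejected for these illustrative data.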

Common Misunderstandings and Pitfalls

One common misunderstanding is the interpretation of failing to reject the null hypothesis. It’s important to remember that failing to reject the null does not prove it true. Instead, it merely suggests that our data do not provide strong enough evidence against it.

Another pitfall is applying the chi-square test to inappropriate data. The chi-square test requires categorical data expressed as counts. Applying it to continuous data without proper binning or categorization can lead to incorrect results. Ordinal data can be analyzed, but the test treats the categories as unordered and ignores any ranking among them.

The null hypothesis in chi-square testing is a powerful tool in statistical analysis. It provides a means to differentiate between observed variations due to random chance versus those that may signify a significant effect or relationship. As we continue to generate more data in various fields, the importance of understanding and correctly applying chi-square tests and the concept of the null hypothesis grows.

Frequently Asked Questions (FAQs)

- The chi-square test is a statistical test used to determine whether there is a significant association between two categorical variables.

- The null hypothesis states that there is no significant difference between the observed and expected frequencies. The alternative hypothesis states that there is a significant difference.

- We never “accept” the null hypothesis. We only fail to reject it when the data do not provide strong evidence against it.

- Rejecting the null hypothesis implies a significant difference between observed and expected frequencies, suggesting an association between the variables.

- Chi-square tests are appropriate for categorical data.

- The significance level, often 0.05, is the threshold for the p-value: the null hypothesis is rejected when the p-value falls below it.

- A p-value below the significance level indicates a significant association between the variables and leads to rejecting the null hypothesis.

- Applying the chi-square test to data that have not been properly categorized, such as continuous measurements that were never binned, can lead to incorrect results.

- To carry out the test: identify the variables, state their independence as the null hypothesis, collect the data, calculate the expected frequencies, and apply the chi-square formula.

- Understanding the null hypothesis is essential for correctly interpreting and applying chi-square tests, and for making informed decisions based on data.

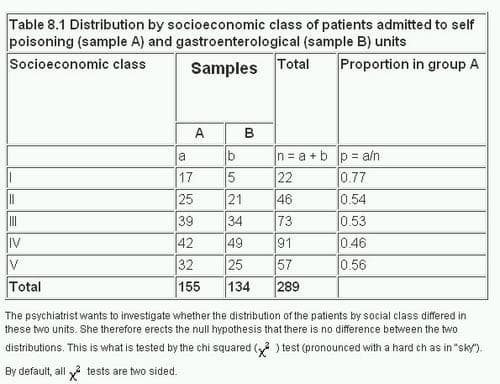

8. The Chi squared tests

The χ² tests.

| | Chi-square goodness of fit test | Chi-square test of independence |
|---|---|---|
| Null hypothesis | Proportions of flavors of candy are the same | Proportion of people who buy snacks is independent of the movie type |
| Alternative hypothesis | Proportions of flavors are not the same | Proportion of people who buy snacks is different for different types of movies |
| Degrees of freedom | Number of categories minus 1 | Number of categories for first variable minus 1, multiplied by number of categories for second variable minus 1 |

How to perform a Chi-square test

For both the Chi-square goodness of fit test and the Chi-square test of independence, you perform the same analysis steps, listed below. Visit the pages for each type of test to see these steps in action.

- Define your null and alternative hypotheses before collecting your data.

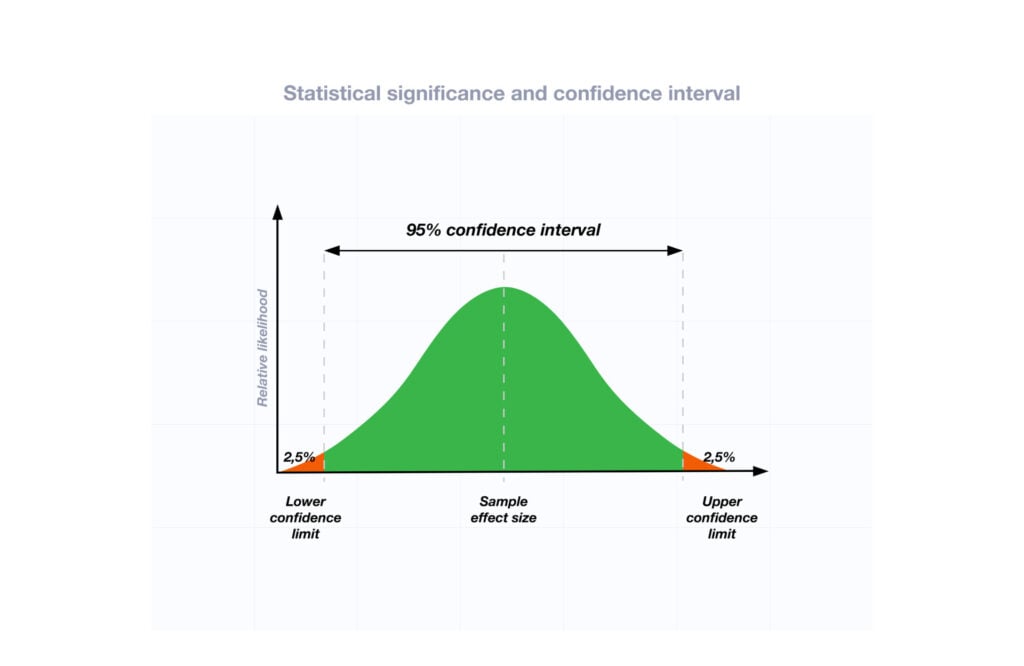

- Decide on the alpha value. This involves deciding the risk you are willing to take of drawing the wrong conclusion. For example, suppose you set α=0.05 when testing for independence. Here, you have decided on a 5% risk of concluding the two variables are not independent when in reality they are.

- Check the data for errors.

- Check the assumptions for the test. (Visit the pages for each test type for more detail on assumptions.)

- Perform the test and draw your conclusion.

Both Chi-square tests in the table above involve calculating a test statistic. The basic idea behind the tests is that you compare the actual data values with what would be expected if the null hypothesis is true. The test statistic involves finding the squared difference between actual and expected data values, and dividing that difference by the expected data values. You do this for each data point and add up the values.

Then, you compare the test statistic to a theoretical value from the Chi-square distribution. The theoretical value depends on both the alpha value and the degrees of freedom for your data. Visit the pages for each test type for detailed examples.
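As a rough illustration of these steps for a goodness of fit test, the sketch below uses made-up candy counts (they are hypothetical and do not come from the source) and tests whether five flavors occur in equal proportions. The critical value 9.49 is the standard chi-square table entry for 4 degrees of freedom at α=0.05, and the p-value formula is a closed form that applies only when there are 4 degrees of freedom.

```python
import math

# Hypothetical counts of 100 candies across five flavors (illustrative only).
observed = [18, 22, 20, 25, 15]

# Null hypothesis: every flavor has the same proportion.
n = sum(observed)
expected = [n / len(observed)] * len(observed)

# Squared difference between actual and expected, divided by expected, summed.
statistic = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Degrees of freedom: number of categories minus 1.
df = len(observed) - 1

alpha = 0.05
critical_value = 9.49  # chi-square table value for df = 4, alpha = 0.05
reject_null = statistic > critical_value

# Closed-form upper-tail probability, valid only for df = 4.
p_value = math.exp(-statistic / 2) * (1 + statistic / 2)

print(f"chi-square = {statistic:.2f}, df = {df}, p = {p_value:.3f}")
print("reject null hypothesis:", reject_null)
```

Here the statistic falls well below the critical value, so these hypothetical data would not provide evidence that the flavor proportions differ.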

Statistics By Jim

Making statistics intuitive

Chi-Square Test of Independence and an Example

By Jim Frost 87 Comments

The Chi-square test of independence determines whether there is a statistically significant relationship between categorical variables. It is a hypothesis test that answers the question: do the values of one categorical variable depend on the value of other categorical variables? This test is also known as the chi-square test of association.

In this post, I’ll show you how the Chi-square test of independence works. Then, I’ll show you how to perform the analysis and interpret the results by working through the example. I’ll use this test to determine whether wearing the dreaded red shirt in Star Trek is the kiss of death!

If you need a primer on the basics, read my hypothesis testing overview.

Overview of the Chi-Square Test of Independence

The Chi-square test of association evaluates relationships between categorical variables. Like any statistical hypothesis test, the Chi-square test has both a null hypothesis and an alternative hypothesis.

- Null hypothesis: There are no relationships between the categorical variables. If you know the value of one variable, it does not help you predict the value of another variable.

- Alternative hypothesis: There are relationships between the categorical variables. Knowing the value of one variable does help you predict the value of another variable.

The Chi-square test of association works by comparing the distribution that you observe to the distribution that you expect if there is no relationship between the categorical variables. In the Chi-square context, the word “expected” is equivalent to what you’d expect if the null hypothesis is true. If your observed distribution is sufficiently different than the expected distribution (no relationship), you can reject the null hypothesis and infer that the variables are related.

For a Chi-square test, a p-value that is less than or equal to your significance level indicates there is sufficient evidence to conclude that the observed distribution is not the same as the expected distribution. You can conclude that a relationship exists between the categorical variables.

When you have smaller sample sizes, you might need to use Fisher’s exact test instead of the chi-square version. To learn more, read my post, Fisher’s Exact Test: Using and Interpreting.

Star Trek Fatalities by Uniform Colors

We’ll perform a Chi-square test of independence to determine whether there is a statistically significant association between shirt color and deaths. We need to use this test because these variables are both categorical variables. Shirt color can be only blue, gold, or red. Fatalities can be only dead or alive.

The color of the uniform represents each crewmember’s work area. We will statistically assess whether there is a connection between uniform color and the fatality rate. Believe it or not, there are “real” data about the crew from authoritative sources and the show portrayed the deaths onscreen. The table below shows how many crewmembers are in each area and how many have died.

| Color | Areas | Crew | Fatalities |
|---|---|---|---|
| Blue | Science and Medical | 136 | 7 |
| Gold | Command and Helm | 55 | 9 |
| Red | Operations, Engineering, and Security | 239 | 24 |
| Ship’s total | All | 430 | 40 |

Tip: Because the chi-square test of association assesses the relationship between categorical variables, bar charts are a great way to graph the data. Use clustering or stacking to compare subgroups within the categories.

Related post: Bar Charts: Using, Examples, and Interpreting

Performing the Chi-Square Test of Independence for Uniform Color and Fatalities

For our example, we will determine whether the observed counts of deaths by uniform color are different from the distribution that we’d expect if there is no association between the two variables.

The table below shows how I’ve entered the data into the worksheet. You can also download the CSV dataset for StarTrekFatalities.

| Uniform color | Status | Frequency |
|---|---|---|
| Blue | Dead | 7 |
| Blue | Alive | 129 |
| Gold | Dead | 9 |
| Gold | Alive | 46 |
| Red | Dead | 24 |
| Red | Alive | 215 |

You can use the dataset to perform the analysis in your preferred statistical software. The Chi-squared test of independence results are below. As an aside, I use this example in my post about degrees of freedom in statistics. Learn why there are two degrees of freedom for the table below.

In our statistical results, both p-values are less than 0.05. We can reject the null hypothesis and conclude there is a relationship between shirt color and deaths. The next step is to define that relationship.

Describing the relationship between categorical variables involves comparing the observed count to the expected count in each cell of the Dead column. I’ve annotated this comparison in the statistical output above.

Statisticians refer to this type of table as a contingency table. To learn more about them and how to use them to calculate probabilities, read my post Using Contingency Tables to Calculate Probabilities.

Related post: Chi-Square Table

Graphical Results for the Chi-Square Test of Association

Additionally, you can use bar charts to graph each cell’s contribution to the Chi-square statistic, which is below.

Surprise! It’s the blue and gold uniforms that contribute the most to the Chi-square statistic and produce the statistical significance! Red shirts add almost nothing. In the statistical output, the comparison of observed counts to expected counts shows that blue shirts die less frequently than expected, gold shirts die more often than expected, and red shirts die at the expected rate.
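If you want to reproduce the Pearson statistic and the per-color contributions yourself, here is a minimal sketch in plain Python. It is not the output of the statistical software used in this post; the variable names are my own, and the p-value uses a closed form that holds only for 2 degrees of freedom.

```python
import math

# (dead, alive) counts by uniform color, taken from the worksheet data.
counts = {"Blue": (7, 129), "Gold": (9, 46), "Red": (24, 215)}

total_dead = sum(dead for dead, _ in counts.values())
total_alive = sum(alive for _, alive in counts.values())
n = total_dead + total_alive

contributions = {}
for color, (dead, alive) in counts.items():
    crew = dead + alive
    # Expected counts if status is independent of uniform color.
    exp_dead = crew * total_dead / n
    exp_alive = crew * total_alive / n
    # Each color contributes one term from the Dead cell and one from Alive.
    contributions[color] = (
        (dead - exp_dead) ** 2 / exp_dead
        + (alive - exp_alive) ** 2 / exp_alive
    )

chi_sq = sum(contributions.values())
df = (len(counts) - 1) * (2 - 1)

# For df = 2, P(X > x) = exp(-x / 2).
p_value = math.exp(-chi_sq / 2)

for color, value in contributions.items():
    print(f"{color}: contribution = {value:.3f}")
print(f"Pearson chi-square = {chi_sq:.3f}, df = {df}, p = {p_value:.3f}")
```

The red shirts' contribution is the smallest of the three by a wide margin, which is what the bar chart of contributions conveys.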

The graph below reiterates these conclusions by displaying fatality percentages by uniform color along with the overall death rate.

The Chi-square test indicates that red shirts don’t die more frequently than expected. Hold on. There’s more to this story!

Time for a bonus lesson and a bonus analysis in this blog post!

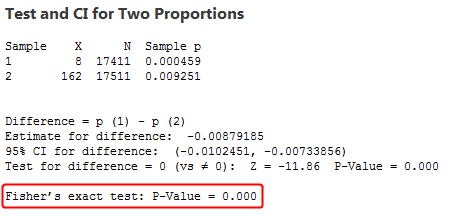

2 Proportions test to compare Security Red-Shirts to Non-Security Red-Shirts

The bonus lesson is that it is vital to include the genuinely pertinent variables in the analysis. Perhaps the color of the shirt is not the critical variable but rather the crewmember’s work area. Crewmembers in Security, Engineering, and Operations all wear red shirts. Maybe only security guards have a higher death rate?

We can test this theory using the 2 Proportions test. We’ll compare the fatality rates of red-shirts in security to red-shirts who are not in security.

The summary data are below. In the table, the events represent the counts of deaths, while the trials are the number of personnel.

| | Events | Trials |
|---|---|---|
| Security | 18 | 90 |
| Not security | 6 | 149 |

The p-value, displayed as 0.000 (that is, below 0.0005 after rounding), signifies that the difference between the two proportions is statistically significant. Security has a mortality rate of 20% while the other red-shirts are only at 4%.
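For readers who want to check the arithmetic, the sketch below runs a two-proportion Z test with a pooled standard error and a normal approximation. That choice is an assumption on my part for illustration: statistical packages may use an unpooled estimate or an exact test, so their p-values can differ slightly. The function name `two_proportion_z` is hypothetical.

```python
import math


def two_proportion_z(events1, trials1, events2, trials2):
    """Pooled two-proportion Z test; returns (p1, p2, z, two-sided p-value)."""
    p1 = events1 / trials1
    p2 = events2 / trials2
    pooled = (events1 + events2) / (trials1 + trials2)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / trials1 + 1 / trials2))
    z = (p1 - p2) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p1, p2, z, p_value


# Security red-shirts versus red-shirts who are not in security.
p_security, p_other, z, p_value = two_proportion_z(18, 90, 6, 149)

print(f"security: {p_security:.1%}, not security: {p_other:.1%}")
print(f"z = {z:.2f}, two-sided p = {p_value:.5f}")
```

Under these assumptions the p-value is well below 0.0005, consistent with a value that rounds to 0.000.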

Security officers have the highest mortality rate on the ship, closely followed by the gold-shirts. Red-shirts that are not in security have a fatality rate similar to the blue-shirts.

As it turns out, it’s not the color of the shirt that affects fatality rates; it’s the duty area. That makes more sense.

Risk by Work Area Summary

The Chi-square test of independence and the 2 Proportions test both indicate that the death rate varies by work area on the U.S.S. Enterprise. Doctors, scientists, engineers, and those in ship operations are the safest with about a 5% fatality rate. Crewmembers that are in command or security have death rates that exceed 15%!

Reader Interactions

February 6, 2024 at 9:55 pm

Hi Jim. I am using R to calculate a chi-square test of independence. I have a value of 1.486444 with a P value greater than 0.05. My question is how do I interpret the value of 1.48644? Is this a strong association between two variables or a weak association?

February 6, 2024 at 10:19 pm

You really just look at the p-value. If you assess the chi-square value, you need to use the chi-square value in conjunction with a chi-square distribution with the correct degrees of freedom and use that to calculate the probability. But the p-value does that for you!

In your case, the p-value is greater than your significance level. So, you fail to reject the null hypothesis. You have insufficient evidence to conclude that an association exists between your variables.

Also, it’s important to note that this test doesn’t indicate the strength of association. It only tells you whether your sample data provide sufficient evidence to conclude that an association exists in the population. Unfortunately, you can’t conclude that an association exists.

September 1, 2022 at 5:01 am

Thank you this was such a helpful article.

I’m not sure if you check these comments anymore, yet if you do I did have a quick question for you. I was trying to follow along in SPSS to reproduce your example and I managed to do most of it. I put your data in, used Weight Cases by Frequency of Deaths, and then was able to do the Chi Square analysis that achieved the exact same results as yours.

Unfortunately, I am totally stuck on the next part where you do the 2 graphs, especially the Percentage of Fatalities by Shirt Color. The math makes sense – it’s just e.g., Gold deaths / (Gold deaths + Gold Alive). However, I cannot seem to figure out how to create a bar chart like that in SPSS!? I’ve tried every combination of variables and settings I can think of in the Chart Builder and no luck. I’ve also tried the Compute Variable option with various formulas to create a new column with the death percentages by shirt color but can’t find a way to sum the frequencies.. The best I can get is using an IF statement so it only calculates on the rows with a Death statistic and then I can get the first part: Frequency / ???, but can’t sum the 2 frequencies of Deaths & Alive per shirt colour to calculate the figure properly. And I’m not sure what other things I can try.

So basically I’m totally stuck at the moment. If by some chance you see this, is there any chance you might please be able to help me figure out how to do that Percentage of Fatalities by Shirt Color bar graph in SPSS? The only way I can see at the moment is to manually type the calculated figures into a new dataset and graph it. That would work but doesn’t seem a very practical way of doing things if this was a large dataset instead of a small example one. Hence I’m assuming this must be a better way of doing this?

Thank you in advance for any help you can give me.

September 1, 2022 at 3:38 pm

Yes, I definitely check these comments!

Unfortunately, I don’t have much experience using SPSS, so I’ll be of limited help with that. There must be some way to do that in SPSS though. Worst case scenario, calculate the percentages by hand or in Excel and then enter them into SPSS and graph them. That shouldn’t be necessary but would work in a pinch.

Perhaps someone with more SPSS experience can provide some tips?

September 18, 2021 at 6:09 pm

Hi. This comment relates to Warren’s post. The null hypothesis is that there is no statistically significant relationship between “Uniform color” and “Status”. During the summing used to calculate the Chi-squared statistic, each of the (6) contributions are included. (3 Uniform colors x 2 status possibilities) The “Alive” column gives the small contributions that bring the total contribution from 5.6129 up to 6.189. Any reasoning specific to the “Dead” column only begins after the 2-dimensional Chi-squared calculation has been completed.

September 19, 2021 at 12:38 am

Hi Bill, thanks for your clarifications. I got confused with whom you were replying!

September 17, 2021 at 5:53 pm

The chi-square formula is: χ2 = ∑(Oi – Ei)2/Ei, where Oi = observed value (actual value) and Ei = expected value.

September 17, 2021 at 5:56 pm

Hi Bill, thanks. I do cover the formula and example calculations in my other post on the topic, How Chi-Squared Works.

September 16, 2021 at 6:24 pm

Why is the Pearson Chi Square statistic not equal to the sum of the contributions to Chi-Square? I get 5.6129. The p-value for that Chi-Square statistic is .0604 which is NOT significant in this century OR the 24th.

September 14, 2021 at 8:25 am

Thank you JIm, Excellent concept teaching!

July 15, 2021 at 1:05 pm

Thank you so much for the Star Trek example! As a long-time Trek fan and Stats student, I absolutely love the debunking of the red shirt theory!

July 19, 2021 at 10:19 pm

I’m so glad you liked my example. I’m a life-long Trek fan as well! I found the red shirt question to be interesting. On the one hand, part of the answer is that red shirts comprise just over 50% of the crew, so of course they’ll have more deaths. And then on the other hand, it’s only certain red shirts that actually have an elevated risk, those in security.

May 16, 2021 at 1:42 pm

Got this response from the gentleman who did the calculation using a Chi Square. Would you mind commenting? “The numbers reported are nominate (counting) numbers not ordinate (measurement) numbers. As such chi-square analysis must be used to statistically compare outcomes. Two-sample student t-tests cannot be used for ordinate numbers. Correlations are also not usually used for ordinate numbers and most importantly correlations do NOT show cause and effect.”

May 16, 2021 at 3:13 pm

I agree with the first comment. However, please note that I recommended the 2-sample proportions test and the other person is mentioning the 2-sample t-test. Very different tests! And, I agree that the t-test is not appropriate for the Pfizer data. Basically, he’s saying you have categorical data and the t-test is for continuous data. That’s all correct. And that’s why I recommended the proportions test.

As for the other part about “correlations do NOT show cause and effect.” That’s not quite correct. More accurately, you’d say that correlations do not NECESSARILY imply causation. Sometimes they do and sometimes they don’t imply causation. It depends on the context in which the data were collected. Correlations DO suggest causation when you use a randomized controlled trial (RCT) for the experiment and data collection, which is exactly what Pfizer did. Consequently, the Pfizer data DO suggest that the vaccine caused a reduction in the proportion of COVID infections in the vaccine group compared to the control group (no vaccine). RCTs are intentionally designed so you can draw causal inferences, which is why the FDA requires them for vaccine and other medical trials.

If you’re interested, I’ve written an article about why randomized controlled trials allow you to make causal inferences.

May 16, 2021 at 12:41 pm

Mr. Jim Frost…You are Da Man!! Thank you!! Yes, this is the same document I have been looking at, just did not know how to interpret Table 9. Sorry, never intended to ask you for medical advice, just wanted to understand the statistics and feel confident that the calculations were performed correctly. You have made my day! Now just a purely statistics question, assuming I have not worn out your patience with my dumb questions…Can you explain the criteria used to determine when a Chi Square should be used versus a 2-samples proportions test? I think I saw a comment from someone on your website stating that the Chi Square is often misused in the medical field. Fascinating, fascinating field you are in. Thank you so much for sharing your knowledge and expertise.

May 16, 2021 at 3:00 pm

You bet! That’s why I’m here . . . to educate and clarify statistics and statistical analyses!

The chi-squared test of independence (or association) and the two-sample proportions test are related. The main difference is that the chi-squared test is more general while the 2-sample proportions test is more specific. And, it happens that the proportions test is more targeted at specifically the type of data you have.

The chi-squared test handles two categorical variables where each one can have two or more values. And, it tests whether there is an association between the categorical variables. However, it does not provide an estimate of the effect size or a CI. If you used the chi-squared test with the Pfizer data, you’d presumably obtain significant results and know that an association exists, but not the nature or strength of that association.

The two proportions test also works with categorical data but you must have two variables that each have two levels. In other words, you’re dealing with binary data and, hence, the binomial distribution. The Pfizer data you had fits this exactly. One of the variables is experimental group: control or vaccine. The other variable is COVID status: infected or not infected. Where it really shines in comparison to the chi-squared test is that it gives you an effect size and a CI for the effect size. Proportions and percentages are basically the same thing, but displayed differently: 0.75 vs. 75%.

What you’re interested in answering is whether the percentage (or proportion) of infections amongst those in the vaccinated group is significantly different than the percentage of infections for those in control group. And, that’s the exact question that the proportions test answers. Basically, it provides a more germane answer to that question.

With the Pfizer data, the answer is yes, those in the vaccinated group have a significantly lower proportion of infections than those in the control group (no vaccine). Additionally, you’ll see the proportion for each group listed, and the effect size is the difference between the proportion, which you can find on a separate line, along with the CI of the difference.

Compare that more specific and helpful answer to the one that chi-squared provides: yes, there’s an association between vaccinations and infections. Both are correct but because the proportions test is more applicable to the specific data at hand, it gives a more useful answer.

I see you have an additional comment with questions, so I’m off to that one!

May 15, 2021 at 1:00 pm

Hi Jim, So sorry if my response came off as anything but appreciative of your input. I tried to duplicate your results in your Flu Vaccine article using the 2 Proportion test as you recommended. I was able to duplicate your Estimate for Difference of -0.01942, but I could not duplicate your value for Z, so clearly I am not doing the calculation correctly – even when using Z calculators. So since I couldn’t duplicate your correct results for your flu example, I did not have confidence to proceed to Moderna. I was able to calculate effectiveness (the hazard ratio that is widely reported), but as I have reviewed the EUA documents presented to the FDA in December 2020, I know that there is no regression analysis, and most importantly, no data to show an antibody response produced by the vaccine. So they are not showing the vaccine was successful in producing an immune response, just giving simplistic proportions of how many got covid and how many didn’t. And as they did not even factor in the number of people who had had covid prior to vaccine, I just can’t understand how these numbers have any significance at all. I mention the PCR test because it too is under an EUA, and has severe limitations. I would think that those limitations would be statistically significant, as are the symptoms which can indicate any bacterial or viral infection. And you state “I’m sure you can find a journal article or documentation that shows the thorough results if you’re interested”. Clearly I am VERY interested, as I love my parents more than life itself, and have seen the VAERS data, and I don’t want them to be the next statistic. But I CAN’T find the thorough results that you say are so easy to find. If I could I would not be trying to learn to perform statistical calculations.
So I went out on a limb, as you are a fellow Trekkie and seem like a super nice guy, sharing your expertise with others, and thought you might be able to help me understand the statistics so I can help my parents make an informed choice. We are at a point that children and pregnant women are getting these vaccines. Unhealthy, elderly people in nursing homes (all the people excluded in the trials) are getting these vaccines. I simply ask the question…..do these vaccines provide more protection than NOT getting the vaccine? The ENTIRE POPULATION is being forced to get these vaccines. And you tell me “I’m sure you can find a journal article or documentation that shows the thorough results if you’re interested.” I can only ask…how are you NOT interested? This is the most important statistical question of our lifetime, and of your children’s and grandchildren’s lifetime. And I find no physician or statistician able or willing to answer these questions. Respectfully, Chris

May 15, 2021 at 11:00 pm

No worries. On my website, I’m just discussing the statistical nature of Moderna’s study. Of course, everyone is free to make their own determination and decide accordingly.

You’re obviously free to question the methods and analysis, but as a statistician, I’m satisfied that Moderna performed an appropriate clinical trial and followed that up with a rigorous and appropriate statistical analysis. In my opinion, they have demonstrated that their vaccine is safe and effective. The only caveat is that we don’t have long-term safety data because not enough time has gone by. However, most side effects for vaccines show up in the first 45 days. That timeframe occurred during the trial and all side effects were recorded.

However, I’m not going to get into a debate about whether anyone should get the vaccine or not. I run a statistics website and that’s the aspect I’m focusing on. There are other places to debate the merits of being vaccinated.

May 14, 2021 at 8:05 pm

Hi Jim, thanks for the reply. I have to admit the details of all the statistical methods you mention are over my head, but by scanning the document it appears you did not actually calculate the vaccine’s efficacy, just stated how the analysis should be done. I am referring to comments like “To analyze the COVID-19 vaccine data, statisticians will use a stratified Cox proportional hazard regression model to assess the magnitude of the difference between treatment and control groups using a one-sided 0.025 significance level”. And “The full data and analyses are currently unavailable, but we can evaluate their interim analysis report. Moderna (and Pfizer) are still assessing the data and will present their analyses to Federal agencies in December 2020.” I am looking at the December 2020 reports that both Pfizer and Moderna presented to the FDA, and I see no “stratified Cox proportional hazard regression model”, just the simplistic hazard ratio you mention in your paper. I don’t see how that shows the results are statistically significant and not chance. Also the PCR test does not confirm disease, just presence of virus (dead or alive) and virus presence doesn’t indicate disease. And the symptoms are symptoms of any viral or bacterial infection, or cancer. Just sort of surprised to see no statistical analysis in the December 2020 reports. Was hoping you had done the heavy lifting…lol

May 14, 2021 at 11:38 pm

Hi Christine,

You had asked if Chi-square would work for your data and my response was no, but here are two methods that would. No, I didn’t analyze the Moderna data myself. I don’t have access to their complete data that would allow me to replicate their results. However, in my post, I did calculate the effectiveness, which you can do using the numbers I had, but not the significance.
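
For readers who want to see the effectiveness arithmetic, here is a minimal sketch using the Pfizer counts from the question in this thread rather than Moderna's data. It assumes the common simplified definition of efficacy as one minus the ratio of the two attack rates and ignores follow-up time, so it is not the hazard-ratio analysis from the trial reports. The function name is hypothetical.

```python
def vaccine_efficacy(cases_vax, n_vax, cases_ctrl, n_ctrl):
    """Simplified efficacy: 1 - (attack rate, vaccinated) / (attack rate, control).

    Illustrative only; trial reports typically account for person-time at risk.
    """
    risk_vax = cases_vax / n_vax
    risk_ctrl = cases_ctrl / n_ctrl
    return 1 - risk_vax / risk_ctrl

# Counts quoted in the question: 8 of 17,411 vaccinated, 162 of 17,511 controls
efficacy = vaccine_efficacy(8, 17411, 162, 17511)
print(f"Estimated efficacy: {efficacy:.1%}")
```

This gives a point estimate only. As noted above, it says nothing about significance on its own.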

Based on the data you indicated you had, I’d recommend the two-sample proportions test that I illustrate in the flu vaccine post. That won’t replicate the more complex analyses but is doable with the data that you have.

The Cox proportional hazard regression model analyzes the hazard ratio. The hazard ratio is the outcome measure in this context. They’re tied together and it’s the regression analysis that indicates significance. I’d imagine you’d have to read a thorough report to get the nitty gritty details. I got the details of their analysis straight from Moderna.

I’m not sure what your point is with the PCR test. But, I’m just reporting how they did their analysis.

Moderna, Pfizer, and the others have done the “heavy lifting.” When I wrote the post about the COVID vaccination, it was before it was approved for emergency use. By this point, I’m sure you can find a journal article or documentation that shows the thorough results if you’re interested.

May 14, 2021 at 2:56 pm

Hi Jim, my parents are looking into getting the Pfizer vaccine, and I was wondering if I could use a chi square analysis to see if it’s statistically effective. From the EUA document, 17411 people got the Pfizer vaccine, and of those people – 8 got covid, and 17403 did not. Of the control group of 17511 that did not get the vaccine, 162 got covid, and 17349 did not. My calculations show this is not statistically significant, but wasn’t sure if I did my calculation correctly, or if I can even use a chi square for this data. Can you help? PS. As a Trekkie family, I love your analysis…but we all know it’s the new guy with a speaking part that gets axed…lol

May 14, 2021 at 3:28 pm

There are several ways you can analyze the effectiveness. I write about how they assessed the Moderna vaccine’s effectiveness, which uses a special type of regression analysis.

The other approach is to use a two-sample proportions test. I don’t write about that in the COVID context but I show how it works for flu vaccinations. The same ideas apply to COVID vaccinations. You’re comparing the proportion of infections in the control group to the proportion in the treatment group. Hence, a two-sample proportions test.

A chi-square analysis won’t get you where you want to go. It would tell you if there is an association, but it’s not going to tell you the effect size.
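
To make that concrete, here is a minimal sketch of the Pearson chi-square calculation on the 2×2 table from the question (8 and 17,403 in the vaccine group; 162 and 17,349 in the control group). The function name is hypothetical, no continuity correction is applied, and the p-value shortcut only holds for a 2×2 table (1 degree of freedom).

```python
import math

def chi_square_2x2(table):
    """Pearson chi-square statistic and p-value for a 2x2 table of counts.

    Illustrative helper: no Yates continuity correction, 1 degree of freedom.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if group and infection status were independent
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected

    # With 1 df, the chi-square upper tail equals erfc(sqrt(x / 2))
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value

# Rows: vaccine, control. Columns: got covid, did not get covid.
table = [[8, 17403], [162, 17349]]
chi2, p = chi_square_2x2(table)
print("chi-square:", chi2, "p-value:", p)
```

Under these assumptions the statistic lands far above the 3.84 critical value for a 0.05 test with 1 degree of freedom, so a "not significant" result from these counts most likely points to an arithmetic slip. Even so, the output is only a statistic and a p-value, with no effect size or CI.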

I’d read those two posts that I wrote. They’ll give you a good insight for possible ways to analyze the data. I also show how they calculate effectiveness for both the COVID and flu shots!

I hope that helps!

April 9, 2021 at 2:49 am

thank you so much for your response and advice! I will probably go for the logistic regression then 🙂

All the best for you!

April 10, 2021 at 12:39 am

You’re very welcome! Best of luck with your study! 🙂

April 7, 2021 at 4:18 am

thank you so much for your quick response! This actually helps me a lot and I also already thought about doing a binary logistic regression. However, my supervisor wanted me to use a chi-square test, as he thinks it is easier to perform and less work. So now I am struggling to decide, which option would be more feasible.

Coming back to the chi-square test – could I create a new variable which differentiates between the four experimental conditions and use this as a new ID? Or can I use the DV to weight the frequencies in the chi-square test? – I did that once in an analysis using a continuous DV as weight. Yet, I am not sure if or how that works with a binary variable. Do you have an idea what would work best in the case of a chi-square test?

Thank you so much!!

April 8, 2021 at 11:25 pm

You’re very welcome!

I don’t think either binary logistic regression or chi-square is more or less work than the other. However, chi-square won’t give you the answers you want. You can’t do interaction effects with chi-square. You won’t get nice odds ratios, which are a much more intuitive way to interpret the results than chi-square, at least in my opinion. With chi-square, you don’t get a p-value/significance for each variable, just the overall analysis. With logistic regression, you get p-values for each variable and the interaction term if you include it.

I think you can do chi-square analyses with more than one independent variable. You’d essentially have a three-dimensional table rather than a two-dimensional table. I’ve never done that myself so I don’t have much advice to offer you there. But, I strongly recommend using logistic regression. You’ll get results that are more useful.

April 6, 2021 at 10:59 am

thank you so much for this helpful post!

April 6, 2021 at 5:36 am

thank you for this very helpful post. Currently, I am working on my master’s thesis and I am struggling with identifying the right way to test my hypothesis as in my case I have three dummy variables (2 independent and 1 dependent).

The experiment was on the topic of advice taking. It was a 2×2 between-subjects design manipulating the source of advice to be a human (0) or an algorithm (1) and the task to be easy (0) or difficult (1). Then, I measured whether the participants followed (1) or did not follow (0) the advice. Now, I want to test if there is an interaction effect. In the easy task I expect participants to be more likely to follow the human advice, and in the difficult task I expect them to be more likely to follow the algorithmic advice.

I want to test this using a chi-square independence test, but I am not sure how to do that with three variables. Should I rather use the variable “Follow/Notfollow” as a weight or should I combine two of the variables so that I have a new variable with four categories, e.g. Easy.Human, Easy.Algorithm, Difficult.Human, Difficult.Algorithm or Human.Follow, Human.NotFollow, Algorithm.Follow, Algorithm.NotFollow

I am not sure, if this is scientifically correct. I would highly appreciate your help and your advice.

Thank you so much in advance! Best, Anni

April 7, 2021 at 1:58 am

I think using binary logistic regression would be your best bet. You can use your dummy DV with that type of model, and having two dummy IVs also works. You can also include an interaction term, which isn’t possible in chi-square tests. This model would tell you whether source of advice, difficulty of task, and their interaction relate to the probability of participants following the advice.
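
Here is a rough sketch of what that model estimates for a 2×2 design. With two dummy IVs and their interaction, the logistic model is saturated, so its coefficients can be read directly from the log-odds in each cell without an iterative fit. The counts below are made up purely for illustration (they are not from the study described above), and the coding follows the question: human = 0, algorithm = 1, easy = 0, difficult = 1.

```python
import math

# HYPOTHETICAL counts per condition: (followed advice, did not follow)
counts = {
    ("easy", "human"): (40, 10),
    ("easy", "algorithm"): (30, 20),
    ("difficult", "human"): (25, 25),
    ("difficult", "algorithm"): (38, 12),
}

def log_odds(followed, not_followed):
    """Log-odds of following the advice within one condition."""
    return math.log(followed / not_followed)

# Coefficients of logit(p) = b0 + b_source*S + b_task*T + b_inter*S*T
b0 = log_odds(*counts[("easy", "human")])                   # reference cell
b_source = log_odds(*counts[("easy", "algorithm")]) - b0    # algorithm vs human, easy task
b_task = log_odds(*counts[("difficult", "human")]) - b0     # difficult vs easy, human source
b_inter = (
    log_odds(*counts[("difficult", "algorithm")])
    - log_odds(*counts[("difficult", "human")])
    - b_source
)  # how much the source effect changes when the task is difficult

# Wald standard error of the interaction: sqrt of the summed reciprocal counts
se_inter = math.sqrt(sum(1 / c for cell in counts.values() for c in cell))
z_inter = b_inter / se_inter
p_inter = math.erfc(abs(z_inter) / math.sqrt(2))  # two-sided normal p-value

print("interaction (log odds ratio scale):", b_inter)
print("z:", z_inter, "p-value:", p_inter)
```

A regression routine in a statistics package should return the same estimates for this saturated model. The shortcut no longer applies once covariates are added or the interaction is dropped, at which point the model has to be fitted properly.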

March 29, 2021 at 12:43 pm