
Is Artificial Intelligence Dangerous?

- Categories: Artificial Intelligence

Published: Sep 16, 2023

Words: 623 | Page: 1 | 4 min read

The Promise of AI

- Medical Advancements: AI can assist in diagnosing diseases, analyzing medical data, and developing personalized treatment plans, potentially saving lives and improving healthcare outcomes.

- Autonomous Vehicles: Self-driving cars, powered by AI, have the potential to reduce accidents and make transportation more accessible and efficient.

- Environmental Conservation: AI can be used to monitor and address environmental issues, such as climate change, deforestation, and wildlife preservation.

- Efficiency and Automation: AI-driven automation can streamline processes in various industries, increasing productivity and reducing costs.

The Perceived Dangers of AI

- Job Displacement

- Bias and Discrimination

- Lack of Accountability

- Security Risks

Responsible AI Development

- Transparency and Accountability

- Fairness and Bias Mitigation

- Ethical Frameworks

- Cybersecurity Measures

This essay delves into the complexities surrounding artificial intelligence (AI), exploring both its transformative benefits and potential dangers. From enhancing healthcare and transportation to posing risks in job displacement and security, it critically assesses AI’s dual aspects. Emphasizing responsible development, it advocates for transparency, fairness, and robust cybersecurity measures. For a deeper understanding, students can consult AI websites for students, which offer further resources and expert guidance.

12 Risks and Dangers of Artificial Intelligence (AI)

As AI grows more sophisticated and widespread, the voices warning against the potential dangers of artificial intelligence grow louder.

“These things could get more intelligent than us and could decide to take over, and we need to worry now about how we prevent that happening,” said Geoffrey Hinton, known as the “Godfather of AI” for his foundational work on machine learning and neural network algorithms. In 2023, Hinton left his position at Google so that he could “talk about the dangers of AI,” noting a part of him even regrets his life’s work.

The renowned computer scientist isn’t alone in his concerns.

Tesla and SpaceX founder Elon Musk, along with over 1,000 other tech leaders, urged a pause on large AI experiments in a 2023 open letter, warning that the technology can “pose profound risks to society and humanity.”

Dangers of Artificial Intelligence

- Automation-spurred job loss

- Privacy violations

- Algorithmic bias caused by bad data

- Socioeconomic inequality

- Market volatility

- Weapons automatization

- Uncontrollable self-aware AI

Whether it’s the increasing automation of certain jobs, gender and racially biased algorithms or autonomous weapons that operate without human oversight (to name just a few), unease abounds on a number of fronts. And we’re still in the very early stages of what AI is really capable of.

12 Dangers of AI

Questions about who’s developing AI and for what purposes make it all the more essential to understand its potential downsides. Below we take a closer look at the possible dangers of artificial intelligence and explore how to manage its risks.

Is AI Dangerous?

The tech community has long debated the threats posed by artificial intelligence. Automation of jobs, the spread of fake news and a dangerous arms race of AI-powered weaponry have been mentioned as some of the biggest dangers posed by AI.

1. Lack of AI Transparency and Explainability

AI and deep learning models can be difficult to understand, even for those who work directly with the technology. This leads to a lack of transparency about how and why AI comes to its conclusions, leaving unexplained what data AI algorithms use or why they may make biased or unsafe decisions. These concerns have given rise to the use of explainable AI, but there’s still a long way to go before transparent AI systems become common practice.

2. Job Losses Due to AI Automation

AI-powered job automation is a pressing concern as the technology is adopted in industries like marketing, manufacturing and healthcare. By 2030, tasks that account for up to 30 percent of hours currently being worked in the U.S. economy could be automated, with Black and Hispanic employees left especially vulnerable to the change, according to McKinsey. Goldman Sachs even states 300 million full-time jobs could be lost to AI automation.

“The reason we have a low unemployment rate, which doesn’t actually capture people that aren’t looking for work, is largely that lower-wage service sector jobs have been pretty robustly created by this economy,” futurist Martin Ford told Built In. With AI on the rise, though, “I don’t think that’s going to continue.”

As AI robots become smarter and more dexterous, the same tasks will require fewer humans. And while AI is estimated to create 97 million new jobs by 2025, many employees won’t have the skills needed for these technical roles and could get left behind if companies don’t upskill their workforces.

“If you’re flipping burgers at McDonald’s and more automation comes in, is one of these new jobs going to be a good match for you?” Ford said. “Or is it likely that the new job requires lots of education or training or maybe even intrinsic talents — really strong interpersonal skills or creativity — that you might not have? Because those are the things that, at least so far, computers are not very good at.”

Even professions that require graduate degrees and additional post-college training aren’t immune to AI displacement.

As technology strategist Chris Messina has pointed out, fields like law and accounting are primed for an AI takeover. In fact, Messina said, some of them may well be decimated. AI already is having a significant impact on medicine. Law and accounting are next, Messina said, the former being poised for “a massive shakeup.”

“Think about the complexity of contracts, and really diving in and understanding what it takes to create a perfect deal structure,” he said regarding the legal field. “It’s a lot of attorneys reading through a lot of information — hundreds or thousands of pages of data and documents. It’s really easy to miss things. So AI that has the ability to comb through and comprehensively deliver the best possible contract for the outcome you’re trying to achieve is probably going to replace a lot of corporate attorneys.”

3. Social Manipulation Through AI Algorithms

Social manipulation also stands as a danger of artificial intelligence. This fear has become a reality as politicians rely on platforms to promote their viewpoints, with one example being Ferdinand Marcos, Jr., wielding a TikTok troll army to capture the votes of younger Filipinos during the Philippines’ 2022 election.

TikTok, which is just one example of a social media platform that relies on AI algorithms, fills a user’s feed with content related to previous media they’ve viewed on the platform. Criticism of the app targets this process and the algorithm’s failure to filter out harmful and inaccurate content, raising concerns over TikTok’s ability to protect its users from misleading information.

Online media and news have become even murkier in light of AI-generated images and videos, AI voice changers and deepfakes infiltrating political and social spheres. These technologies make it easy to create realistic photos, videos and audio clips, or to replace the image of one figure with another in an existing picture or video. As a result, bad actors have another avenue for sharing misinformation and war propaganda, creating a nightmare scenario where it can be nearly impossible to distinguish between credible and faulty news.

“No one knows what’s real and what’s not,” Ford said. “So it really leads to a situation where you literally cannot believe your own eyes and ears; you can’t rely on what, historically, we’ve considered to be the best possible evidence... That’s going to be a huge issue.”

4. Social Surveillance With AI Technology

In addition to AI’s more existential threat, Ford is focused on the way it will adversely affect privacy and security. A prime example is China’s use of facial recognition technology in offices, schools and other venues. Besides tracking a person’s movements, the Chinese government may be able to gather enough data to monitor a person’s activities, relationships and political views.

Another example is U.S. police departments embracing predictive policing algorithms to anticipate where crimes will occur. The problem is that these algorithms are influenced by arrest rates, which disproportionately impact Black communities. Police departments then double down on these communities, leading to over-policing and questions over whether self-proclaimed democracies can resist turning AI into an authoritarian weapon.
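The feedback loop described above can be made concrete with a toy simulation. This is a minimal sketch under stated assumptions, not a model of any real police department's software: the function name `simulate_patrols`, the greedy rule of sending every patrol to the district with the most recorded arrests, and all of the numbers are hypothetical. Both districts are given the same underlying crime rate, so any gap that opens up comes only from where patrols are sent.

```python
def simulate_patrols(initial_arrests, crime_rate, rounds, patrols_per_round=10):
    """Toy model of a predictive-policing feedback loop (hypothetical).

    initial_arrests: recorded arrests per district before the simulation.
    crime_rate: arrests produced per patrol in each district.
    Each round, every patrol goes to the district with the most recorded
    arrests, and the arrests made there are added to that district's record.
    """
    recorded = list(initial_arrests)
    for _ in range(rounds):
        # "Predict" the hot spot purely from past arrest records.
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        # Patrols only observe crime where they are sent.
        recorded[target] += patrols_per_round * crime_rate[target]
    return recorded


# Two districts with identical crime rates; district 0 starts with
# two more recorded arrests than district 1.
print(simulate_patrols([12, 10], [0.5, 0.5], rounds=5))  # [37.0, 10]
```

With these made-up inputs, a two-arrest head start grows into a much larger gap in the records, even though the districts are identical by construction.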

“Authoritarian regimes use or are going to use it,” Ford said. “The question is, How much does it invade Western countries, democracies, and what constraints do we put on it?”

5. Lack of Data Privacy Using AI Tools

If you’ve played around with an AI chatbot or tried out an AI face filter online, your data is being collected, but where is it going and how is it being used? AI systems often collect personal data to customize user experiences or to help train the AI models you’re using (especially if the AI tool is free). Data may not even be considered secure from other users when given to an AI system, as one bug incident that occurred with ChatGPT in 2023 “allowed some users to see titles from another active user’s chat history.” While there are laws present to protect personal information in some cases in the United States, there is no explicit federal law that protects citizens from data privacy harm caused by AI.

6. Biases Due to AI

Various forms of AI bias are detrimental too. Speaking to the New York Times, Princeton computer science professor Olga Russakovsky said AI bias goes well beyond gender and race. In addition to data and algorithmic bias (the latter of which can “amplify” the former), AI is developed by humans, and humans are inherently biased.

“A.I. researchers are primarily people who are male, who come from certain racial demographics, who grew up in high socioeconomic areas, primarily people without disabilities,” Russakovsky said. “We’re a fairly homogeneous population, so it’s a challenge to think broadly about world issues.”

The limited experiences of AI creators may explain why speech-recognition AI often fails to understand certain dialects and accents, or why companies fail to consider the consequences of a chatbot impersonating notorious figures in human history. Developers and businesses should exercise greater care to avoid recreating powerful biases and prejudices that put minority populations at risk.

7. Socioeconomic Inequality as a Result of AI

If companies refuse to acknowledge the inherent biases baked into AI algorithms, they may compromise their DEI initiatives through AI-powered recruiting. The idea that AI can measure the traits of a candidate through facial and voice analyses is still tainted by racial biases, reproducing the same discriminatory hiring practices businesses claim to be eliminating.

Widening socioeconomic inequality sparked by AI-driven job loss is another cause for concern, revealing the class biases of how AI is applied. Workers who perform more manual, repetitive tasks have experienced wage declines as high as 70 percent because of automation, with office and desk workers remaining largely untouched in AI’s early stages. However, the increase in generative AI use is already affecting office jobs, making for a wide range of roles that may be more vulnerable to wage or job loss than others.

Sweeping claims that AI has somehow overcome social boundaries or created more jobs fail to paint a complete picture of its effects. It’s crucial to account for differences based on race, class and other categories. Otherwise, discerning how AI and automation benefit certain individuals and groups at the expense of others becomes more difficult.

8. Weakening Ethics and Goodwill Because of AI

Along with technologists, journalists and political figures, even religious leaders are sounding the alarm on AI’s potential pitfalls. In a 2023 Vatican meeting and in his message for the 2024 World Day of Peace, Pope Francis called for nations to create and adopt a binding international treaty that regulates the development and use of AI.

Pope Francis warned against AI’s ability to be misused, and “create statements that at first glance appear plausible but are unfounded or betray biases.” He stressed how this could bolster campaigns of disinformation, distrust in communications media, interference in elections and more — ultimately increasing the risk of “fueling conflicts and hindering peace.”

The rapid rise of generative AI tools gives these concerns more substance. Many users have applied the technology to get out of writing assignments, threatening academic integrity and creativity. Plus, biased AI could be used to determine whether an individual is suitable for a job, mortgage, social assistance or political asylum, producing possible injustices and discrimination, noted Pope Francis.

“The unique human capacity for moral judgment and ethical decision-making is more than a complex collection of algorithms,” he said. “And that capacity cannot be reduced to programming a machine.”

9. Autonomous Weapons Powered By AI

As is too often the case, technological advancements have been harnessed for the purpose of warfare. When it comes to AI, some are keen to do something about it before it’s too late: In a 2016 open letter, over 30,000 individuals, including AI and robotics researchers, pushed back against the investment in AI-fueled autonomous weapons.

“The key question for humanity today is whether to start a global AI arms race or to prevent it from starting,” they wrote. “If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow.”

This prediction has come to fruition in the form of Lethal Autonomous Weapon Systems, which locate and destroy targets on their own while abiding by few regulations. Because of the proliferation of potent and complex weapons, some of the world’s most powerful nations have given in to anxieties and contributed to a tech cold war.

Many of these new weapons pose major risks to civilians on the ground, but the danger becomes amplified when autonomous weapons fall into the wrong hands. Hackers have mastered various types of cyber attacks, so it’s not hard to imagine a malicious actor infiltrating autonomous weapons and instigating absolute armageddon.

If political rivalries and warmongering tendencies are not kept in check, artificial intelligence could end up being applied with the worst intentions. Some fear that, no matter how many powerful figures point out the dangers of artificial intelligence, we’re going to keep pushing the envelope with it if there’s money to be made.

“The mentality is, ‘If we can do it, we should try it; let’s see what happens,’” Messina said. “‘And if we can make money off it, we’ll do a whole bunch of it.’ But that’s not unique to technology. That’s been happening forever.”

10. Financial Crises Brought About By AI Algorithms

The financial industry has become more receptive to AI technology’s involvement in everyday finance and trading processes. As a result, algorithmic trading could be responsible for our next major financial crisis in the markets.

While AI algorithms aren’t clouded by human judgment or emotions, they also don’t take into account contexts, the interconnectedness of markets and factors like human trust and fear. These algorithms then make thousands of trades at a blistering pace with the goal of selling a few seconds later for small profits. Selling off thousands of trades could scare investors into doing the same thing, leading to sudden crashes and extreme market volatility.

Instances like the 2010 Flash Crash and the Knight Capital Flash Crash serve as reminders of what could happen when trade-happy algorithms go berserk, regardless of whether rapid and massive trading is intentional.
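To illustrate how one wave of automated selling can trigger the next, here is a minimal sketch of a sell-off cascade. It is a toy model under stated assumptions, not a description of how any real trading system or the crashes named above worked: the function `simulate_selloff`, the stop-loss prices and the fixed price impact per sale are all hypothetical.

```python
def simulate_selloff(start_price, shock, stop_losses, impact_per_sale):
    """Toy cascade: each automated trader sells once the price falls to
    its stop-loss level, and every sale pushes the price down further.

    Returns the final price and the number of traders that sold.
    """
    price = start_price - shock
    # Traders with the highest stop-loss levels are triggered first.
    pending = sorted(stop_losses, reverse=True)
    sold = 0
    while pending and price <= pending[0]:
        pending.pop(0)            # this trader sells and leaves the market
        sold += 1
        price -= impact_per_sale  # the sale itself moves the price
    return price, sold


# A 2-point shock ends up as a 6-point drop because four stop-losses
# are triggered one after another.
print(simulate_selloff(100, 2, [99, 98, 97, 96, 90], 1))  # (94, 4)
```

In this sketch the cascade stops only when the remaining stop-loss levels sit well below the current price. When the levels are spaced widely apart, for example `[99, 90, 80]`, the same shock triggers a single sale.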

This isn’t to say that AI has nothing to offer to the finance world. In fact, AI algorithms can help investors make smarter and more informed decisions on the market. But finance organizations need to make sure they understand their AI algorithms and how those algorithms make decisions. Companies should consider whether AI raises or lowers their confidence before introducing the technology to avoid stoking fears among investors and creating financial chaos.

11. Loss of Human Influence

An overreliance on AI technology could result in the loss of human influence, and a decline in human functioning, in some parts of society. Using AI in healthcare could result in reduced human empathy and reasoning, for instance. And applying generative AI for creative endeavors could diminish human creativity and emotional expression. Interacting with AI systems too much could even cause reduced peer communication and social skills. So while AI can be very helpful for automating daily tasks, some question if it might hold back overall human intelligence, abilities and need for community.

12. Uncontrollable Self-Aware AI

There is also a worry that AI will progress in intelligence so rapidly that it will become sentient and act beyond humans’ control, possibly in a malicious manner. Alleged reports of this sentience have already surfaced, with one popular account coming from a former Google engineer who stated the AI chatbot LaMDA was sentient and speaking to him just as a person would. As AI’s next big milestones involve making systems with artificial general intelligence, and eventually artificial superintelligence, cries to completely stop these developments continue to rise.

How to Mitigate the Risks of AI

AI still has numerous benefits, like organizing health data and powering self-driving cars. To get the most out of this promising technology, though, some argue that plenty of regulation is necessary.

“There’s a serious danger that we’ll get [AI systems] smarter than us fairly soon and that these things might get bad motives and take control,” Hinton told NPR. “This isn’t just a science fiction problem. This is a serious problem that’s probably going to arrive fairly soon, and politicians need to be thinking about what to do about it now.”

Develop Legal Regulations

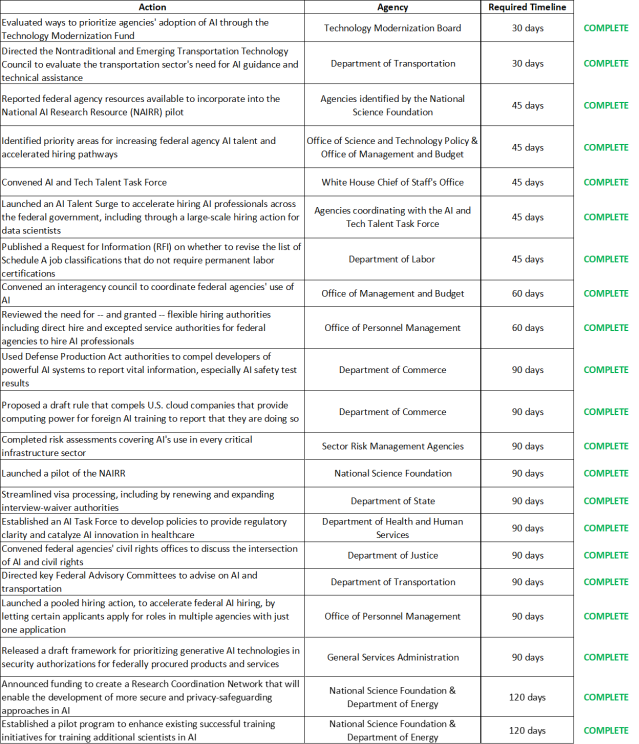

AI regulation has been a main focus for dozens of countries, and now the U.S. and European Union are creating more clear-cut measures to manage the rising sophistication of artificial intelligence. In fact, the White House Office of Science and Technology Policy (OSTP) published the AI Bill of Rights in 2022, a document outlining principles to help responsibly guide AI use and development. Additionally, President Joe Biden issued an executive order in 2023 requiring federal agencies to develop new rules and guidelines for AI safety and security.

Although legal regulations mean certain AI technologies could eventually be banned, that doesn’t prevent societies from exploring the field.

Ford argues that AI is essential for countries looking to innovate and keep up with the rest of the world.

“You regulate the way AI is used, but you don’t hold back progress in basic technology. I think that would be wrong-headed and potentially dangerous,” Ford said. “We decide where we want AI and where we don’t; where it’s acceptable and where it’s not. And different countries are going to make different choices.”

Establish Organizational AI Standards and Discussions

On a company level, there are many steps businesses can take when integrating AI into their operations. Organizations can develop processes for monitoring algorithms, compiling high-quality data and explaining the findings of AI algorithms. Leaders could even make AI a part of their company culture and routine business discussions, establishing standards to determine acceptable AI technologies.

Guide Tech With Humanities Perspectives

When it comes to society as a whole, though, there should be a greater push for tech to embrace the diverse perspectives of the humanities. Stanford University AI researchers Fei-Fei Li and John Etchemendy make this argument in a 2019 blog post that calls for national and global leadership in regulating artificial intelligence:

“The creators of AI must seek the insights, experiences and concerns of people across ethnicities, genders, cultures and socio-economic groups, as well as those from other fields, such as economics, law, medicine, philosophy, history, sociology, communications, human-computer-interaction, psychology, and Science and Technology Studies (STS).”

Balancing high-tech innovation with human-centered thinking is an ideal method for producing responsible AI technology and ensuring the future of AI remains hopeful for the next generation. The dangers of artificial intelligence should always be a topic of discussion, so leaders can figure out ways to wield the technology for noble purposes.

“I think we can talk about all these risks, and they’re very real,” Ford said. “But AI is also going to be the most important tool in our toolbox for solving the biggest challenges we face.”

Frequently Asked Questions

What is AI?

AI (artificial intelligence) describes a machine's ability to perform tasks and mimic intelligence at a similar level as humans.

Is AI dangerous?

AI has the potential to be dangerous, but these dangers may be mitigated by implementing legal regulations and by guiding AI development with human-centered thinking.

Can AI cause human extinction?

If AI algorithms are biased or used in a malicious manner — such as in the form of deliberate disinformation campaigns or autonomous lethal weapons — they could cause significant harm toward humans. Though as of right now, it is unknown whether AI is capable of causing human extinction.

What happens if AI becomes self-aware?

Self-aware AI has yet to be created, so it is not fully known what will happen if or when this development occurs.

Some suggest self-aware AI may become a helpful counterpart to humans in everyday living, while others suggest that it may act beyond human control and purposely harm humans.

Major new report explains the risks and rewards of artificial intelligence

AI has begun to permeate every aspect of our lives. Image: Unsplash/Hitesh Choudhary

Toby Walsh

Liz Sonenberg

- A new report has just been released, highlighting the changes in AI over the last 5 years, and predicted future trends.

- It was co-written by people across the world, with backgrounds in computer science, engineering, law, political science, policy, sociology and economics.

- In the last 5 years, AI has become an increasing part of our lives, revolutionizing a number of industries, but is still not free from risk.

A major new report on the state of artificial intelligence (AI) has just been released. Think of it as the AI equivalent of an Intergovernmental Panel on Climate Change report, in that it identifies where AI is at today, and the promise and perils in view.

From language generation and molecular medicine to disinformation and algorithmic bias, AI has begun to permeate every aspect of our lives.

The report argues that we are at an inflection point where researchers and governments must think and act carefully to contain the risks AI presents and make the most of its benefits.

A century-long study of AI

The report comes out of the AI100 project, which aims to study and anticipate the effects of AI rippling out through our lives over the course of the next 100 years.

AI100 produces a new report every five years: the first was published in 2016, and this is the second. As two points define a line, this second report lets us see the direction AI is taking us in.

One of us (Liz Sonenberg) is a member of the standing committee overseeing the AI100 project, and the other (Toby Walsh) was on the study panel that wrote this particular report. Members of the panel came from across the world, with backgrounds in computer science, engineering, law, political science, policy, sociology and economics.

The promises and perils of AI are becoming real

The report highlights the remarkable progress made in AI over the past five years. AI is leaving the laboratory and has entered our lives, having a “real-world impact on people, institutions, and culture”. Read the news on any given day and you’re likely to find multiple stories about some new advance in AI or some new use of AI.

For example, in natural language processing (NLP), computers can now analyse and even generate realistic human language. To demonstrate, we asked OpenAI’s GPT-3 system, one of the largest neural networks ever built, to summarise the AI100 report for you. It did a pretty good job, even if the summary confronts our sense of self by being written in the first person:

In the coming decade, I expect that AI will play an increasingly prominent role in the lives of people everywhere. AI-infused services will become more common, and AI will become increasingly embedded in the daily lives of people across the world.

I believe that this will bring with it great economic and societal benefits, but that it will also require us to address the many challenges to ensure that the benefits are broadly shared and that people are not marginalised by these new technologies.

A key insight of AI research is that it is easier to build things than to understand why they work. However, defining what success looks like for an AI application is not straightforward.

For example, the AI systems that are used in healthcare to analyse symptoms, recommend diagnoses, or choose treatments are often far better than anything that could be built by a human, but their success is hard to quantify.

As a second example of the recent and remarkable progress in AI, consider the latest breakthrough from Google’s DeepMind. AlphaFold is an AI program that provides a huge step forward in our ability to predict how proteins fold.

This will likely lead to major advances in life sciences and medicine, accelerating efforts to understand the building blocks of life and enabling quicker and more sophisticated drug discovery. Most of the planet now knows to their cost how the unique shape of the spike proteins in the SARS-CoV-2 virus is key to its ability to invade our cells, and also to the vaccines developed to combat its deadly progress.

The AI100 report argues that worries about super-intelligent machines and wide-scale job loss from automation are still premature, requiring AI that is far more capable than available today. The main concern the report raises is not malevolent machines of superior intelligence to humans, but incompetent machines of inferior intelligence.

Once again, it’s easy to find in the news real-life stories of risks and threats to our democratic discourse and mental health posed by AI-powered tools. For instance, Facebook uses machine learning to sort its news feed and give each of its 2 billion users a unique but often inflammatory view of the world.

The World Economic Forum was the first to draw the world’s attention to the Fourth Industrial Revolution, the current period of unprecedented change driven by rapid technological advances. Policies, norms and regulations have not been able to keep up with the pace of innovation, creating a growing need to fill this gap.

The Forum established the Centre for the Fourth Industrial Revolution Network in 2017 to ensure that new and emerging technologies will help—not harm—humanity in the future. Headquartered in San Francisco, the network launched centres in China, India and Japan in 2018 and is rapidly establishing locally-run Affiliate Centres in many countries around the world.

The global network is working closely with partners from government, business, academia and civil society to co-design and pilot agile frameworks for governing new and emerging technologies, including artificial intelligence (AI), autonomous vehicles, blockchain, data policy, digital trade, drones, internet of things (IoT), precision medicine and environmental innovations.

The time to act is now

It’s clear we’re at an inflection point: we need to think seriously and urgently about the downsides and risks the increasing application of AI is revealing. The ever-improving capabilities of AI are a double-edged sword. Harms may be intentional, like deepfake videos, or unintended, like algorithms that reinforce racial and other biases.

AI research has traditionally been undertaken by computer and cognitive scientists. But the challenges being raised by AI today are not just technical. All areas of human inquiry, and especially the social sciences, need to be included in a broad conversation about the future of the field. Minimising negative impacts on society and enhancing the positives requires consideration from across academia and with societal input.

Governments also have a crucial role to play in shaping the development and application of AI. Indeed, governments around the world have begun to consider and address the opportunities and challenges posed by AI. But they remain behind the curve.

A greater investment of time and resources is needed to meet the challenges posed by the rapidly evolving technologies of AI and associated fields. In addition to regulation, governments also need to educate. In an AI-enabled world, our citizens, from the youngest to the oldest, need to be literate in these new digital technologies.

At the end of the day, the success of AI research will be measured by how it has empowered all people, helping tackle the many wicked problems facing the planet, from the climate emergency to increasing inequality within and between countries.

AI will have failed if it harms or devalues the very people we are trying to help.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.

July 12, 2023

AI Is an Existential Threat—Just Not the Way You Think

Some fear that artificial intelligence will threaten humanity’s survival. But the existential risk is more philosophical than apocalyptic

By Nir Eisikovits & The Conversation US

AI isn’t likely to enslave humanity, but it could take over many aspects of our lives.

krishna dev/Alamy Stock Photo

The following essay is reprinted with permission from The Conversation, an online publication covering the latest research.

The rise of ChatGPT and similar artificial intelligence systems has been accompanied by a sharp increase in anxiety about AI. For the past few months, executives and AI safety researchers have been offering predictions, dubbed “P(doom),” about the probability that AI will bring about a large-scale catastrophe.

Worries peaked in May 2023 when the nonprofit research and advocacy organization Center for AI Safety released a one-sentence statement: “Mitigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.” The statement was signed by many key players in the field, including the leaders of OpenAI, Google and Anthropic, as well as two of the so-called “godfathers” of AI: Geoffrey Hinton and Yoshua Bengio.

You might ask how such existential fears are supposed to play out. One famous scenario is the “paper clip maximizer” thought experiment articulated by Oxford philosopher Nick Bostrom. The idea is that an AI system tasked with producing as many paper clips as possible might go to extraordinary lengths to find raw materials, like destroying factories and causing car accidents.

A less resource-intensive variation has an AI tasked with procuring a reservation to a popular restaurant shutting down cellular networks and traffic lights in order to prevent other patrons from getting a table.

Office supplies or dinner, the basic idea is the same: AI is fast becoming an alien intelligence, good at accomplishing goals but dangerous because it won’t necessarily align with the moral values of its creators. And, in its most extreme version, this argument morphs into explicit anxieties about AIs enslaving or destroying the human race.

Actual harm

In the past few years, my colleagues and I at UMass Boston’s Applied Ethics Center have been studying the impact of engagement with AI on people’s understanding of themselves, and I believe these catastrophic anxieties are overblown and misdirected.

Yes, AI’s ability to create convincing deep-fake video and audio is frightening, and it can be abused by people with bad intent. In fact, that is already happening: Russian operatives likely attempted to embarrass Kremlin critic Bill Browder by ensnaring him in a conversation with an avatar for former Ukrainian President Petro Poroshenko. Cybercriminals have been using AI voice cloning for a variety of crimes – from high-tech heists to ordinary scams.

AI decision-making systems that offer loan approval and hiring recommendations carry the risk of algorithmic bias, since the training data and decision models they run on reflect long-standing social prejudices.

These are big problems, and they require the attention of policymakers. But they have been around for a while, and they are hardly cataclysmic.

Not in the same league

The statement from the Center for AI Safety lumped AI in with pandemics and nuclear weapons as a major risk to civilization. There are problems with that comparison. COVID-19 resulted in almost 7 million deaths worldwide, brought on a massive and continuing mental health crisis and created economic challenges, including chronic supply chain shortages and runaway inflation.

Nuclear weapons probably killed more than 200,000 people in Hiroshima and Nagasaki in 1945, claimed many more lives from cancer in the years that followed, generated decades of profound anxiety during the Cold War and brought the world to the brink of annihilation during the Cuban Missile Crisis in 1962. They have also changed the calculations of national leaders on how to respond to international aggression, as currently playing out with Russia’s invasion of Ukraine.

AI is simply nowhere near gaining the ability to do this kind of damage. The paper clip scenario and others like it are science fiction. Existing AI applications execute specific tasks rather than making broad judgments. The technology is far from being able to decide on and then plan out the goals and subordinate goals necessary for shutting down traffic in order to get you a seat in a restaurant, or blowing up a car factory in order to satisfy your itch for paper clips.

Not only does the technology lack the complicated capacity for multilayer judgment that’s involved in these scenarios, it also does not have autonomous access to sufficient parts of our critical infrastructure to start causing that kind of damage.

What it means to be human

Actually, there is an existential danger inherent in using AI, but that risk is existential in the philosophical rather than apocalyptic sense. AI in its current form can alter the way people view themselves. It can degrade abilities and experiences that people consider essential to being human.

For example, humans are judgment-making creatures. People rationally weigh particulars and make daily judgment calls at work and during leisure time about whom to hire, who should get a loan, what to watch and so on. But more and more of these judgments are being automated and farmed out to algorithms. As that happens, the world won’t end. But people will gradually lose the capacity to make these judgments themselves. The fewer of them people make, the worse they are likely to become at making them.

Or consider the role of chance in people’s lives. Humans value serendipitous encounters: coming across a place, person or activity by accident, being drawn into it and retrospectively appreciating the role accident played in these meaningful finds. But the role of algorithmic recommendation engines is to reduce that kind of serendipity and replace it with planning and prediction.

Finally, consider ChatGPT’s writing capabilities. The technology is in the process of eliminating the role of writing assignments in higher education. If it does, educators will lose a key tool for teaching students how to think critically.

Not dead but diminished

So, no, AI won’t blow up the world. But the increasingly uncritical embrace of it, in a variety of narrow contexts, means the gradual erosion of some of humans’ most important skills. Algorithms are already undermining people’s capacity to make judgments, enjoy serendipitous encounters and hone critical thinking.

The human species will survive such losses. But our way of existing will be impoverished in the process. The fantastic anxieties around the coming AI cataclysm, singularity, Skynet, or however you might think of it, obscure these more subtle costs. Recall T.S. Eliot’s famous closing lines of “The Hollow Men”: “This is the way the world ends,” he wrote, “not with a bang but a whimper.”

This article was originally published on The Conversation.

MIT Technology Review

The true dangers of AI are closer than we think

Forget superintelligent AI: algorithms are already creating real harm. The good news: the fight back has begun.

By Karen Hao

As long as humans have built machines, we’ve feared the day they could destroy us. Stephen Hawking famously warned that AI could spell an end to civilization. But to many AI researchers, these conversations feel unmoored. It’s not that they don’t fear AI running amok—it’s that they see it already happening, just not in the ways most people would expect.

AI is now screening job candidates, diagnosing disease, and identifying criminal suspects. But instead of making these decisions more efficient or fair, it’s often perpetuating the biases of the humans on whose decisions it was trained.

William Isaac is a senior research scientist on the ethics and society team at DeepMind, an AI startup that Google acquired in 2014. He also co-chairs the Fairness, Accountability, and Transparency conference—the premier annual gathering of AI experts, social scientists, and lawyers working in this area. I asked him about the current and potential challenges facing AI development—as well as the solutions.

Q: Should we be worried about superintelligent AI?

A: I want to shift the question. The threats overlap, whether it’s predictive policing and risk assessment in the near term, or more scaled and advanced systems in the longer term. Many of these issues also have a basis in history. So potential risks and ways to approach them are not as abstract as we think.

There are three areas that I want to flag. Probably the most pressing one is this question about value alignment: how do you actually design a system that can understand and implement the various forms of preferences and values of a population? In the past few years we’ve seen attempts by policymakers, industry, and others to try to embed values into technical systems at scale—in areas like predictive policing, risk assessments, hiring, etc. It’s clear that they exhibit some form of bias that reflects society. The ideal system would balance out all the needs of many stakeholders and many people in the population. But how does society reconcile their own history with aspiration? We’re still struggling with the answers, and that question is going to get exponentially more complicated. Getting that problem right is not just something for the future, but for the here and now.

The second one would be achieving demonstrable social benefit. Up to this point there are still few pieces of empirical evidence that validate that AI technologies will achieve the broad-based social benefit that we aspire to.

Lastly, I think the biggest one that anyone who works in the space is concerned about is: what are the robust mechanisms of oversight and accountability?

Q: How do we overcome these risks and challenges?

A: Three areas would go a long way. The first is to build a collective muscle for responsible innovation and oversight. Make sure you’re thinking about where the forms of misalignment or bias or harm exist. Make sure you develop good processes for how you ensure that all groups are engaged in the process of technological design. Groups that have been historically marginalized are often not the ones that get their needs met. So how we design processes to actually do that is important.

The second one is accelerating the development of the sociotechnical tools to actually do this work. We don’t have a whole lot of tools.

The last one is providing more funding and training for researchers and practitioners—particularly researchers and practitioners of color—to conduct this work. Not just in machine learning, but also in STS [science, technology, and society] and the social sciences. We want to not just have a few individuals but a community of researchers to really understand the range of potential harms that AI systems pose, and how to successfully mitigate them.

Q: How far have AI researchers come in thinking about these challenges, and how far do they still have to go?

A: In 2016, I remember, the White House had just come out with a big data report, and there was a strong sense of optimism that we could use data and machine learning to solve some intractable social problems. Simultaneously, there were researchers in the academic community who had been flagging in a very abstract sense: “Hey, there are some potential harms that could be done through these systems.” But they largely had not interacted at all. They existed in unique silos.

Since then, we’ve just had a lot more research targeting this intersection between known flaws within machine-learning systems and their application to society. And once people began to see that interplay, they realized: “Okay, this is not just a hypothetical risk. It is a real threat.” So if you view the field in phases, phase one was very much highlighting and surfacing that these concerns are real. The second phase now is beginning to grapple with broader systemic questions.

Q: So are you optimistic about achieving broad-based beneficial AI?

A: I am. The past few years have given me a lot of hope. Look at facial recognition as an example. There was the great work by Joy Buolamwini, Timnit Gebru, and Deb Raji in surfacing intersectional disparities in accuracies across facial recognition systems [i.e., showing these systems were far less accurate on Black female faces than white male ones]. There’s the advocacy that happened in civil society to mount a rigorous defense of human rights against misapplication of facial recognition. And also the great work that policymakers, regulators, and community groups from the grassroots up were doing to communicate exactly what facial recognition systems were and what potential risks they posed, and to demand clarity on what the benefits to society would be. That’s a model of how we could imagine engaging with other advances in AI.

But the challenge with facial recognition is we had to adjudicate these ethical and values questions while we were publicly deploying the technology. In the future, I hope that some of these conversations happen before the potential harms emerge.

Q: What do you dream about when you dream about the future of AI?

A: It could be a great equalizer. Like if you had AI teachers or tutors that could be available to students and communities where access to education and resources is very limited, that’d be very empowering. And that’s a nontrivial thing to want from this technology. How do you know it’s empowering? How do you know it’s socially beneficial?

I went to graduate school in Michigan during the Flint water crisis. When the initial incidences of lead pipes emerged, the records they had for where the piping systems were located were on index cards at the bottom of an administrative building. The lack of access to technologies had put them at a significant disadvantage. It means the people who grew up in those communities, over 50% of whom are African-American, grew up in an environment where they don’t get basic services and resources.

The Case Against AI Everything, Everywhere, All at Once

I cringe at being called “Mother of the Cloud,” but having been part of the development and implementation of the internet and networking industry (as an entrepreneur, CTO of Cisco, and on the boards of Disney and FedEx), I am fortunate to have had a 360-degree view of the technologies that are at the foundation of our modern world.

I have never had such mixed feelings about technological innovation. In stark contrast to the early days of internet development, when many stakeholders had a say, discussions about AI and our future are being shaped by leaders who seem to be striving for absolute ideological power. The result is “Authoritarian Intelligence.” The hubris and determination of tech leaders to control society is threatening our individual, societal, and business autonomy.

What is happening is not just a battle for market control. A small number of tech titans are busy designing our collective future, presenting their societal vision, and specific beliefs about our humanity, as the only possible path. Hiding behind an illusion of natural market forces, they are harnessing their wealth and influence to shape not just productization and implementation of AI technology, but also the research.

Artificial Intelligence is not just chat bots, but a broad field of study. One implementation capturing today’s attention, machine learning, has expanded beyond predicting our behavior to generating content—called Generative AI. The awe of machines wielding the power of language is seductive, but Performative AI might be a more appropriate name, as it leans toward production and mimicry—and sometimes fakery—over deep creativity, accuracy, or empathy.

The very fact that the evolution of technology feels so inevitable is evidence of an act of manipulation, an authoritarian use of narrative brilliantly described by historian Timothy Snyder. He calls out the politics of inevitability: “... a sense that the future is just more of the present, ... that there are no alternatives, and therefore nothing really to be done.” There is no discussion of underlying values. Facts that don’t fit the narrative are disregarded.

Here in Silicon Valley, this top-down authoritarian technique is amplified by a bottom-up culture of inevitability. An orchestrated frenzy begins when the next big thing to fuel the Valley’s economic and innovation ecosystem is heralded by companies, investors, media, and influencers.

They surround us with language coopted from common values: democratization, creativity, open, safe. In behavioral psych classes, product designers are taught to eliminate friction, removing any resistance to our acting on impulse.

The promise of short-term efficiency, convenience, and productivity lures us. Any semblance of pushback is decried as ignorance, or a threat to global competition. No one wants to be called a Luddite. Tech leaders, seeking to look concerned about the public interest, call for limited, friendly regulation, and the process moves forward until the tech is fully enmeshed in our society.

We bought into this narrative before, when social media, smartphones and cloud computing came on the scene. We didn’t question whether the only way to build community, find like-minded people, or be heard, was through one enormous “town square,” rife with behavioral manipulation, pernicious algorithmic feeds, amplification of pre-existing bias, and the pursuit of likes and follows.

It’s now obvious that it was a path towards polarization, toxicity of conversation, and societal disruption. Big Tech was the main beneficiary as industries and institutions jumped on board, accelerating their own disruption, and civic leaders were focused on how to use these new tools to grow their brands and not on helping us understand the risks.

We are at the same juncture now with AI. Once again, a handful of competitive but ideologically aligned leaders are telling us that large-scale, general-purpose AI implementations are the only way forward. In doing so, they disregard the dangerous level of complexity and the undue level of control and financial return to be granted to them.

While they talk about safety and responsibility, large companies protect themselves at the expense of everyone else. With no checks on their power, they move from experimenting in the lab to experimenting on us, not questioning how much agency we want to give up or whether we believe a specific type of intelligence should be the only measure of human value.

The different types and levels of risks are overwhelming, and we need to focus on all of them: the long-term existential risks, and the existing ones. Disinformation, supercharged by deep fakes, data privacy issues, and biased decision making continue to erode trust, with few viable solutions. We do not yet fully understand risks to our society at large such as the level and pace of job loss, environmental impacts, and whether we want opaque systems making decisions for us.

Deeper risks question the very aspects of humanity. When we prioritize “intelligence” to the exclusion of cognition, might we devolve to become more like machines? On the current trajectory we may not even have the option to weigh in on who gets to decide what is in our best interest. Eliminating humanity is not the only way to wipe out our humanity.

Human well-being and dignity should be our North Star, with innovation in a supporting role. We can learn from the open systems environment of the 1970s and 80s. When we were first developing the infrastructure of the internet, power was distributed between large and small companies, vendors and customers, government and business. These checks and balances led to better decisions and less risk.

AI everything, everywhere, all at once, is not inevitable, if we use our powers to question the tools and the people shaping them. Private and public sector leaders can slow the frenzy through acts of friction: simply not giving in to the “Authoritarian Intelligence” emanating out of Silicon Valley, and our collective groupthink.

We can buy the time needed to develop impactful national and international policy that distributes power and protects human rights, and inspire independent funding and ethics guidelines for a vibrant research community that will fuel innovation.

With the right priorities and guardrails, AI can help advance science, cure diseases, build new industries, expand joy, and maintain human dignity and the differences that make us unique.

Artificial intelligence has psychological impacts our brains might not be ready for, expert warns

These days we can have a reasoned conversation with a humanoid robot, get fooled by a deep fake celebrity, and have our heart broken by a romantic chatbot.

While artificial intelligence (AI) promises to make life easier, developments like these can also mess with our minds, says Joel Pearson, a cognitive neuroscientist at the University of New South Wales.

We fear killer robots and out-of-control self-driving cars, but for Professor Pearson the psychological effects of AI are more significant, even if they're harder to picture in our mind's eye.

The technology's impact on everything from education to work and relationships is massively uncertain – something humans are not generally comfortable with, Professor Pearson tells RN's All in the Mind.

"Our brains have evolved to fear uncertainty."

What will be left for humans to do as AI improves? Will we feel like we have no purpose and meaning — and will we suffer the inevitable depression that comes with that?

There's already cause for concern about the impact of AI on our mental wellbeing, Professor Pearson says.

"AI is already affecting us and changing our mental health in ways that are really bad for us."

Humanoids and chatbots

One of the pitfalls of AI is our tendency to project human characteristics onto the non-human agents we interact with, Professor Pearson says.

So when ChatGPT communicates like a human we say it's "intelligent" – especially when its words are articulated by a natural-sounding voice from a robot in the shape of a human.

Shift this dynamic to AI companions on your phone and we see just how vulnerable humans can be.

You might have heard about a chatbot called Replika, which was marketed as "always on your side" … and "always ready to chat when you need an empathetic friend".

Subscribers paid for the chatbot to have "erotic role-play" features that included flirting and sexting, but when its maker toned down these elements, people who had fallen in love with their chatbot freaked out, Professor Pearson says.

The chatbot was no longer responding to their sexual advances.

"People were saying that their digital partner, their boyfriend or girlfriend in Replika was no longer themselves … and they were devastated by it."

The chatbots also brought out a darker side in some Replika clients.

"Mainly males were bragging … about how they could have this sort of abusive relationship – 'I had this Replika girl and she was like a slave. I would tell her, I'm going to switch her off and kill her … and she would beg me not to switch off'," Professor Pearson says.

It was reminiscent of what happens in the dystopian science fiction series Westworld, where people let out their urges on artificial humans, he says.

Professor Pearson says there's a lack of research on the implications of this aspect of human AI relationships.

"What does it do to us? … If I treat my AI like a slave and I'm rude to it and abusive, how does that then change how I relate to humans? Does that carry over?"

While an AI partner might appear to make an ideal companion, it's a poor model for human relationships.

"Part of being in a relationship with humans is that there are compromises … The other person will challenge you, you will grow, you will have to face things together.

"And if you don't have those challenges and people picking you up on things, you get whatever you want, whenever you want. It's an addictive thing that is probably not healthy."

The danger of deepfakes

Chatbots messing with our relationships is one thing, but deepfake images and videos can alter our very sense of what's real and what's fake.

"Not only can they look real, you can actually now do a real-time deepfake," Professor Pearson says.

And here too, AI is being weaponised against women.

"I think 96 per cent of deepfakes so far have been non-consensual pornography."

Who can forget the deepfake featuring Taylor Swift earlier this year?

One sexually explicit image of the pop star was viewed 47 million times before the account was suspended.

Once we are exposed to fake information, evidence suggests it can have a permanent impact, even if it is later revealed to be false, Professor Pearson says.

"You can't really forget about it. That information sticks with you."

And he says this is likely to be more the case with videos because they engage more senses and tug at our emotions.

While die-hard Swifties may forget about the deepfakes, others might be more influenced by them.

"People who know less of her will be a lot more vulnerable."

This was because they would have a less developed mental model about the pop star that was more susceptible to influence from deepfakes, he says.

"[The deepfakes are] going to be patching into our long-term memory in ways that [make us] confused whether they're real and not. And the catch is, even when we're told they're not real, those effects stick."

The disturbing impacts of AI on our relationships and our grasp on reality itself could present a particular risk to teenagers.

Think of a school student subjected to images of themselves run through "nudifying apps" that use AI to undress a fully clothed person.

"While their brain is still developing … it's going to do pretty nasty things to their mental health," Professor Pearson says.

These dangers are exacerbated by young people spending less time face-to-face with others, which is linked to a decline in empathy and emotional intelligence, he says.

Cutting through the tech noise

Professor Pearson argues AI is presenting society with unprecedented challenges and the technology should not be dismissed as just another "tool".

"You can't compare it to tools. The industrial revolution, the printing press, TVs, computers… This is radically different in ways that we don't fully understand."

He's calling for more research into the psychological impact of AI.

"I don't want to make people depressed and anxious about AI," Professor Pearson says.

"There's a huge number of positives, but I've been pushing the psychological part of that because I don't see anyone else talking about it."

In the face of these changes, Professor Pearson suggests focusing on our humanity.

"[Figure out] what are the core essentials of being human, and how do you want to create your own life in ways that might be independent from all this tech uncertainty."

"Is that going for a walk in nature, or is it just spending time with physical humans and loved ones?

"I think over the next decade we're all going to be faced with soul-searching journeys like that. Why not start thinking about it now?"

Professor Pearson says he's trying to apply these principles to his own life, without turning his back on technological advances.

"I'm trying to figure out how I can use AI to help me do more of the things I really enjoy."

"My hope … is that a lot of the things I've talked about won't be as catastrophic as I'm making out.

"But I think raising the alarm now can avoid that pain and suffering later on and that's what I want to do."

Listen to the full episode "Scarier than killer robots": why your mind isn't ready for AI and subscribe to RN's All in the Mind to explore other topics on the mind, brain and behaviour.


Essay Artificial Intelligence is Dangerous to Humanity

This essay is about the dangers inherent in the advancement of Artificial Intelligence (AI). It highlights the potential threats posed by AI, including the development of autonomous weaponry, algorithmic bias, and the looming prospect of superintelligent AI. The essay emphasizes the need for caution and ethical considerations in the deployment of AI technologies to mitigate these risks and safeguard humanity’s future.


In the grand tapestry of human progress, the threads of Artificial Intelligence (AI) weave a complex and often perilous pattern. While hailed as a beacon of innovation, AI harbors within its circuits a darker potential, one that threatens to cast a long shadow over humanity’s future. As we marvel at the capabilities of AI to revolutionize industries and streamline processes, we must also confront the inherent dangers it poses to our collective well-being.

At the forefront of these concerns is the specter of autonomous weaponry.

In the crucible of conflict, AI-controlled drones and weapons systems emerge as formidable adversaries, devoid of the moral compass that guides human decision-making. The allure of unmanned warfare, with its promises of precision and efficiency, masks the stark reality of a battlefield where the rules of engagement are dictated not by human conscience but by lines of code. The unchecked proliferation of autonomous weapons threatens to plunge us into a new era of warfare, one where the horrors of conflict are amplified by the cold logic of AI.

Yet, the dangers of AI extend beyond the battlefield and into the very fabric of our society. In the labyrinthine corridors of algorithmic decision-making, biases lurk, hidden beneath layers of data and computation. Despite our best intentions, AI systems have been shown to perpetuate and even exacerbate existing societal inequalities, amplifying the voices of the powerful while silencing the marginalized. From hiring algorithms that favor the privileged to predictive policing models that target minority communities, the insidious influence of bias threatens to erode the foundations of justice and equality.

Moreover, as we peer into the murky depths of the future, the emergence of superintelligent AI looms large on the horizon. In the crucible of innovation, we dance ever closer to the precipice of a technological singularity, where AI surpasses human intelligence and ushers in a new era of uncertainty. While some herald this moment as a triumph of human ingenuity, others warn of the existential risks it poses to our species. As we relinquish control to machines with intellects beyond our comprehension, we must grapple with the profound implications of a future where humanity plays second fiddle to its own creations.

In the final reckoning, the march of Artificial Intelligence presents us with a Faustian bargain: a promise of progress tempered by the specter of peril. As we navigate the uncertain waters of technological innovation, we must heed the lessons of history and proceed with caution. For in the tangled web of AI lies both the promise of a brighter future and the shadow of our own undoing.

Cite this page

Essay Artificial Intelligence is Dangerous to Humanity. (2024, Apr 14). Retrieved from https://papersowl.com/examples/essay-artificial-intelligence-is-dangerous-to-humanity/


Artificial Intelligence Essay

500+ words essay on artificial intelligence.

Artificial intelligence (AI) has come into our daily lives through mobile devices and the Internet. Governments and businesses are increasingly making use of AI tools and techniques to solve business problems and improve many business processes, especially online ones. Such developments bring about new realities to social life that may not have been experienced before. This essay on Artificial Intelligence will help students to know the various advantages of using AI and how it has made our lives easier and simpler. Also, in the end, we have described the future scope of AI and the harmful effects of using it. To get a good command of essay writing, students must practise CBSE Essays on different topics.

Artificial Intelligence is the science and engineering of making intelligent machines, especially intelligent computer programs. It is concerned with getting computers to do tasks that would normally require human intelligence. AI systems are basically software systems (or controllers for robots) that use techniques such as machine learning and deep learning to solve problems in particular domains without hard coding all possibilities (i.e. algorithmic steps) in software. Due to this, AI started showing promising solutions for industry and businesses as well as our daily lives.

Importance and Advantages of Artificial Intelligence

Advances in computing and digital technologies have a direct influence on our lives, businesses and social life. They have changed our daily routines, such as how we use mobile devices and take part in social media. AI systems are among the most influential of these digital technologies. With AI systems, businesses are able to handle large data sets and feed essential input to operations quickly. Moreover, businesses are able to adapt to constant changes and are becoming more flexible.

As Artificial Intelligence systems are introduced into devices, more business processes are becoming automated. A new paradigm emerges as a result of such intelligent automation, which now dictates not only how businesses operate but also who does the job. Many manufacturing sites can now operate fully automated with robots and without any human workers. Artificial Intelligence now brings unheard-of and unexpected innovations to the business world that many organizations will need to integrate to remain competitive and to get ahead of their competitors.

Artificial Intelligence shapes our lives and social interactions through technological advancement. Many AI applications are developed specifically to provide better services to individuals on mobile phones, electronic gadgets, social media platforms and so on. We are delegating our activities to intelligent applications, such as personal assistants and intelligent wearable devices. AI systems that operate household appliances help us at home with cooking or cleaning.

Future Scope of Artificial Intelligence

In the future, intelligent machines will replace or enhance human capabilities in many areas. Artificial intelligence is becoming a popular field in computer science because it extends what humans can do. Its applications are having a huge impact on many fields, helping to solve complex problems in areas such as education, engineering, business, medicine and weather forecasting. A single machine can do the work of many labourers. But Artificial Intelligence has another aspect: it can be dangerous for us. If we become completely dependent on machines, it can ruin our lives. We will not be able to do any work by ourselves and will become lazy. Another disadvantage is that a machine cannot offer human-like feeling. So machines should be used only where they are actually required.