45 survey questions to understand student engagement in online learning.

In our work with K-12 school districts during the COVID-19 pandemic, countless district leaders and school administrators have told us how challenging it's been to build student engagement outside of the traditional classroom.

Not only that, but the challenges associated with online learning may have the largest impact on students from marginalized communities. Research suggests that some groups of students experience more difficulty with academic performance and engagement when course content is delivered online vs. face-to-face.

As you look to improve the online learning experience for students, take a moment to understand how students, caregivers, and staff are currently experiencing virtual learning. Where are the areas for improvement? How supported do students feel in their online coursework? Do teachers feel equipped to support students through synchronous and asynchronous facilitation? How confident do families feel in supporting their children at home?

Below, we've compiled a bank of 45 questions to understand student engagement in online learning. Interested in running a student, family, or staff engagement survey? Click here to learn about Panorama's survey analytics platform for K-12 school districts.

45 Questions to Understand Student Engagement in Online Learning

For Students (Grades 3-5 and 6-12):

1. How excited are you about going to your classes?

2. How often do you get so focused on activities in your classes that you lose track of time?

3. In your classes, how eager are you to participate?

4. When you are not in school, how often do you talk about ideas from your classes?

5. Overall, how interested are you in your classes?

6. What are the most engaging activities that happen in this class?

7. Which aspects of class have you found least engaging?

8. If you were teaching class, what is the one thing you would do to make it more engaging for all students?

9. How do you know when you are feeling engaged in class?

10. What projects/assignments/activities do you find most engaging in this class?

11. What does this teacher do to make this class engaging?

12. How much effort are you putting into your classes right now?

13. How difficult or easy is it for you to try hard on your schoolwork right now?

14. How difficult or easy is it for you to stay focused on your schoolwork right now?

15. If you have missed in-person school recently, why did you miss school?

16. If you have missed online classes recently, why did you miss class?

17. How would you like to be learning right now?

18. How happy are you with the amount of time you spend speaking with your teacher?

19. How difficult or easy is it to use the distance learning technology (computer, tablet, video calls, learning applications, etc.)?

20. What do you like about school right now?

21. What do you not like about school right now?

22. When you have online schoolwork, how often do you have the technology (laptop, tablet, computer, etc.) you need?

23. How difficult or easy is it for you to connect to the internet to access your schoolwork?

24. What has been the hardest part about completing your schoolwork?

25. How happy are you with how much time you spend in specials or enrichment (art, music, PE, etc.)?

26. Are you getting all the help you need with your schoolwork right now?

27. How sure are you that you can do well in school right now?

28. Are there adults at your school you can go to for help if you need it right now?

29. If you are participating in distance learning, how often do you hear from your teachers individually?

For Families, Parents, and Caregivers:

30. How satisfied are you with the way learning is structured at your child’s school right now?

31. Do you think your child should spend less or more time learning in person at school right now?

32. How difficult or easy is it for your child to use the distance learning tools (video calls, learning applications, etc.)?

33. How confident are you in your ability to support your child's education during distance learning?

34. How confident are you that teachers can motivate students to learn in the current model?

35. What is working well with your child’s education that you would like to see continued?

36. What is challenging with your child’s education that you would like to see improved?

37. Does your child have their own tablet, laptop, or computer available for schoolwork when they need it?

38. What best describes your child's typical internet access?

39. Is there anything else you would like us to know about your family’s needs at this time?

For Teachers and Staff:

40. In the past week, how many of your students regularly participated in your virtual classes?

41. In the past week, how engaged have students been in your virtual classes?

42. In the past week, how engaged have students been in your in-person classes?

43. Is there anything else you would like to share about student engagement at this time?

44. What is working well with the current learning model that you would like to see continued?

45. What is challenging about the current learning model that you would like to see improved?

Elevate Student, Family, and Staff Voices This Year With Panorama

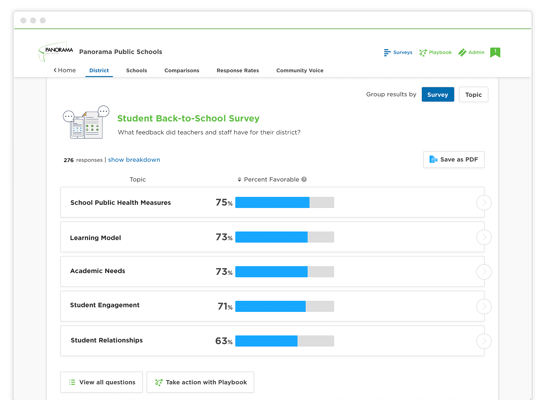

Schools and districts can use Panorama’s leading survey administration and analytics platform to quickly gather and take action on information from students, families, teachers, and staff. The questions are applicable to all types of K-12 school settings and grade levels, as well as to communities serving students from a range of socioeconomic backgrounds.

In the Panorama platform, educators can view and disaggregate results by topic, question, demographic group, grade level, school, and more to inform priority areas and action plans. Districts may use the data to improve teaching and learning models, build stronger academic and social-emotional support systems, improve stakeholder communication, and inform staff professional development.
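As a rough illustration of what disaggregating results by group can look like, here is a minimal sketch in plain Python. It is not Panorama's method or API: the sample responses, the 1-5 scale, the `percent_favorable` helper, and the choice of 4 or higher as a "favorable" answer are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical responses: (grade level, answer on an assumed 1-5 scale).
responses = [
    ("grade 6", 4), ("grade 6", 2), ("grade 6", 5),
    ("grade 7", 3), ("grade 7", 4),
]

def percent_favorable(rows, threshold=4):
    """Return {group: percentage of answers at or above the threshold}."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, score in rows:
        totals[group] += 1
        if score >= threshold:
            favorable[group] += 1
    return {g: round(100 * favorable[g] / totals[g], 1) for g in totals}

by_grade = percent_favorable(responses)
for group, pct in by_grade.items():
    print(f"{group}: {pct}% favorable")
```

The same grouping key could just as easily be a school, a demographic group, or a survey topic; the point is only that each group gets its own summary rather than one district-wide average.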

To learn more about Panorama's survey platform, get in touch with our team.

Top 6 Questions People Ask About Online Learning

Since the invention of the internet, we have witnessed a huge change in the accessibility and flexibility of higher education. Not only can students earn their degrees at a distance and on their own schedule, but they can also complete certifications and trade programs with more ease than ever before.

If you’re considering online classes as a means to achieving your goals, you likely have questions. Here are some of the most common ones, with answers!

What Is Online Learning?

So, just what is online learning? This term refers to education that takes place in a completely virtual environment using an internet connection and a computer or device to connect to the school. In the online "classroom," you can do all the same things that in-person students do, such as:

- Listening to lectures

- Answering questions from a professor

- Completing readings

- Turning in assignments

- Taking quizzes and tests

- Meeting as a group

Some schools, programs, or courses combine online learning with in-person learning experiences. This model is known as "hybrid education," wherein students participate online most of the time. However, when learning objectives call for hands-on experience (say, practicing skills for a health profession or laboratory experiments), they can head to campus.

That said, many programs allow their students to complete the entire curriculum virtually. Degrees such as a Bachelor of Science in Software Engineering, for example, may not call for in-person learning at all. You can always contact admissions or the specific department if you want to learn more about delivery format.

Why Online Learning Is Good for Students

Despite the widespread accessibility of remote education, some students remain skeptical about online classes. Are you really learning if there’s not a professor present at the front of a lecture hall? Can you really learn the skills you need without the in-person interaction between students and faculty?

Ease and Accessibility

While some people feel online education lacks the intimacy and immediacy of a "real" classroom, it offers an educational channel to students who might otherwise not have the time or resources to attend. Online access has made it possible for students to enroll and participate in online classes with greater ease, from nearly anywhere, in a way that fits their schedules.

Affordability

Online courses are usually more affordable as well. According to the Education Data Initiative, an online degree is $36,595 cheaper than an in-person degree when the costs of tuition and attendance are compared. The average cost of attending a private university is $129,800 for an in-person degree and only $60,593 for an online degree.

It’s also estimated that students who commute to college for in-person classes pay $1,360 per year in transportation costs that an online student wouldn’t have to pay. Add in factors such as cheaper meals at home and more time to work, and it’s not hard to see why many students opt for online learning.
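To make the arithmetic behind these figures concrete, here is a small sketch that works only from the numbers quoted above. The four-year program length is an assumption for illustration; the text gives the commuting cost per year but no duration. The $36,595 figure appears to come from a separate comparison and is not derived here.

```python
# Figures quoted in the text (attributed there to the Education Data Initiative).
PRIVATE_IN_PERSON = 129_800   # average cost, in-person degree at a private university
PRIVATE_ONLINE = 60_593       # average cost, online degree at a private university
COMMUTE_PER_YEAR = 1_360      # estimated yearly transportation cost for commuters

# Assumption: a four-year program; the text does not state a duration.
YEARS = 4

private_gap = PRIVATE_IN_PERSON - PRIVATE_ONLINE
commute_total = COMMUTE_PER_YEAR * YEARS

print(f"Private university gap: ${private_gap:,}")
print(f"Commuting over {YEARS} years: ${commute_total:,}")
```

Under that assumption, commuting alone adds a few thousand dollars on top of the gap in tuition and attendance costs at private universities.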

Top Questions About Online Learning

Despite the benefits, you likely still have some questions about online learning. Let’s take a look at six of the most common.

1. Are You Able to Earn Your Degree Completely Online? Yes, many (but not all) schools do offer this as an option. We’re not just talking about certificates or minors, either.

For instance, you can earn a Master of Science in Electrical and Computer Engineering from U of M Online. If you complete the entire program virtually, you will pay in-state tuition costs from anywhere in the United States – a major bonus. A good school should offer you a searchable course catalog to compare options and view which have a required on-campus component.

2. How Long Does It Take to Earn a Degree Online? Most online programs mirror their in-person counterparts in terms of how long it takes to earn the degree. From certificates and minors to bachelor’s or master’s degrees, you’re looking at roughly the same timeline for equivalent programs. Some programs offer part-time options for students who need to accommodate work and family responsibilities.

Some schools or programs may limit how quickly you can move through the material. However, given the freedom and flexibility of online learning, it’s possible you can complete more coursework in less time than you could on campus. Talk to your admissions officer or program coordinator about specifics.

When first researching your options, you can again turn to the searchable course catalog. On each degree page, you should find the recommended timeline clearly listed.

3. Is an Online Degree Viewed Differently Than a Traditional Degree? Among the most common and pressing questions for online learning is whether future employers view online degrees with skepticism. The answer is an emphatic "no." Most online programs appear on your transcript the same as on-campus programs would.

You may also wonder if an online program will impact your plans for a higher degree later. As long as your degree is from an accredited institution, it won’t harm your chances of acceptance.

4. What Are Some Benefits of Online Learning? When you choose to learn online, you can:

- Study more, due to the lack of commuting to, from, and around campus

- Potentially take more classes, again because of the time savings

- Get more immediate feedback from professors on assignments

- Leverage the online resources that come with your course portal

- Spend less money on your degree overall

- Continue working or caring for family while going to school

5. Do Instructors Offer Help and Support to Students? Instructors are required to give the same amount of time and energy to their online classes as they do to in-person groups. In fact, many professors are enthusiastic about virtual learning because it means they have more flexibility and don’t have to commute either.

6. Can Students Have Success and Excel in Online Learning? Lastly, can you learn new skills, attain knowledge, and become successful in online learning? Unequivocally, the answer is yes! Online degree programs still afford you tutoring and career resources as well as full access to academic resources such as the library.

Plus, you will have the ability to transfer credits either to or from the degree program, just as you would with an on-campus one. In other words, you will find yourself and your goals in no way hampered by taking the online approach.

Online Learning

In summary, online learning offers you a ton of freedom and savings. It allows you to complete your work anywhere, from the office to the living room to on the road. And you can rest assured that you’ll get the same level of professorial support as you would from an on-campus program, as well as a degree that’s worth just as much.

Learn More, Today

Ready to learn more? Reach out to U of M Online to ask questions or get information about specific programs today!

- Cost of Online Education vs. Traditional Education

- The top 5 questions people ask about online learning

- https://online.umn.edu/programs-search

- https://online.umn.edu/tuition-fees-and-financial-aid

- https://online.umn.edu/story/academic-tutoring-and-career-resources

- https://online.umn.edu/story/u-m-libraries

- https://online.umn.edu/transfer-credit

- https://online.umn.edu/

- Research article

- Open access

- Published: 02 December 2020

Integrating students’ perspectives about online learning: a hierarchy of factors

- Montgomery Van Wart 1 ,

- Anna Ni 1 ,

- Pamela Medina 1 ,

- Jesus Canelon 1 ,

- Melika Kordrostami 1 ,

- Jing Zhang 1 &

International Journal of Educational Technology in Higher Education, volume 17, Article number: 53 (2020)

This article reports on a large-scale (n = 987) exploratory factor analysis study that incorporates various concepts identified in the literature as critical success factors for online learning from the students’ perspective, and then determines their hierarchical significance. Seven factors (Basic Online Modality, Instructional Support, Teaching Presence, Cognitive Presence, Online Social Comfort, Online Interactive Modality, and Social Presence) were identified as significant and reliable. Regression analysis indicates that the minimal factors for enrollment in future classes, when students consider convenience and scheduling, were Basic Online Modality, Cognitive Presence, and Online Social Comfort. Students who accepted or embraced online courses on their own merits wanted a minimum of Basic Online Modality, Teaching Presence, Cognitive Presence, Online Social Comfort, and Social Presence. Students who preferred face-to-face classes and demanded a comparable experience valued Online Interactive Modality and Instructional Support more highly. Recommendations for online course design, policy, and future research are provided.

Introduction

While there are different perspectives of the learning process such as learning achievement and faculty perspectives, students’ perspectives are especially critical since they are ultimately the raison d’être of the educational endeavor (Chickering & Gamson, 1987). More pragmatically, students’ perspectives provide invaluable, first-hand insights into their experiences and expectations (Dawson et al., 2019). The student perspective is especially important when new teaching approaches are used and when new technologies are being introduced (Arthur, 2009; Crews & Butterfield, 2014; Van Wart, Ni, Ready, Shayo, & Court, 2020). With the renewed interest in “active” education in general (Arruabarrena, Sánchez, Blanco, et al., 2019; Kay, MacDonald, & DiGiuseppe, 2019; Nouri, 2016; Vlachopoulos & Makri, 2017) and the flipped classroom approach in particular (Flores, del-Arco, & Silva, 2016; Gong, Yang, & Cai, 2020; Lundin, et al., 2018; Maycock, 2019; McGivney-Burelle, 2013; O’Flaherty & Phillips, 2015; Tucker, 2012), along with extraordinary shifts in the technology, the student perspective on online education is profoundly important. Students’ perceptions of quality are shaped by their own sense of learning achievement, satisfaction with the support they receive, technical proficiency of the process, intellectual and emotional stimulation, comfort with the process, and sense of learning community. The factors that students perceive as quality online teaching, however, have not been as clear as they might be, for at least two reasons.

First, it is important to note that the overall online learning experience for students is also composed of non-teaching factors, which we briefly mention. Three such factors are (1) convenience, (2) learner characteristics and readiness, and (3) antecedent conditions that may foster teaching quality but are not directly responsible for it. (1) Convenience is an enormous non-quality factor for students (Artino, 2010) which has driven up online demand around the world (Fidalgo, Thormann, Kulyk, et al., 2020; Inside Higher Education and Gallup, 2019; Legon & Garrett, 2019; Ortagus, 2017). This is important since satisfaction with online classes is frequently somewhat lower than with face-to-face classes (Macon, 2011). However, the literature generally supports the relative equivalence of face-to-face and online modes regarding learning achievement criteria (Bernard et al., 2004; Nguyen, 2015; Ni, 2013; Sitzmann, Kraiger, Stewart, & Wisher, 2006; see Xu & Jaggars, 2014 for an alternate perspective). These contrasts are exemplified in a recent study of business students, in which online students using a flipped classroom approach outperformed their face-to-face peers, but ironically rated instructor performance lower (Harjoto, 2017). (2) Learner characteristics, such as self-regulation in an active learning model, comfort with technology, and age, among others, also affect the experience, shaping both receptiveness to and readiness for online instruction (Alqurashi, 2016; Cohen & Baruth, 2017; Kintu, Zhu, & Kagambe, 2017; Kuo, Walker, Schroder, & Belland, 2013; Ventura & Moscoloni, 2015). (3) Finally, numerous antecedent factors may lead to improved instruction but are not themselves directly perceived by students, such as instructor training (Brinkley-Etzkorn, 2018) and the sources of faculty motivation (e.g., incentives, recognition, social influence, and voluntariness) (Wingo, Ivankova, & Moss, 2017). Important as these factors are, mixing them with the perceptions of quality tends to obfuscate the quality factors directly perceived by students.

Second, while student perceptions of quality are used in innumerable studies, our overall understanding still needs to integrate them more holistically. Many studies use student perceptions of quality and overall effectiveness of individual tools and strategies in online contexts such as mobile devices (Drew & Mann, 2018), small groups (Choi, Land, & Turgeon, 2005), journals (Nair, Tay, & Koh, 2013), simulations (Vlachopoulos & Makri, 2017), video (Lange & Costley, 2020), etc. Such studies, however, cannot provide the overall context and comparative importance. Some studies have examined the overall learning experience of students with exploratory lists, but have mixed non-quality factors with quality of teaching factors, making it difficult to discern the instructor’s versus contextual roles in quality (e.g., Asoodar, Vaezi, & Izanloo, 2016; Bollinger & Martindale, 2004; Farrell & Brunton, 2020; Hong, 2002; Song, Singleton, Hill, & Koh, 2004; Sun, Tsai, Finger, Chen, & Yeh, 2008). The application of technology adoption studies also falls into this category by essentially aggregating all teaching quality in the single category of performance (Al-Gahtani, 2016; Artino, 2010). Some studies have used high-level teaching-oriented models, primarily the Community of Inquiry model (le Roux & Nagel, 2018), but empirical support has been mixed (Arbaugh et al., 2008), and its elegance (i.e., relying on only three factors) has not provided much insight to practitioners (Anderson, 2016; Cleveland-Innes & Campbell, 2012).

Research questions

Integration of studies and concepts explored continues to be fragmented and confusing despite the fact that the number of empirical studies related to student perceptions of quality factors has increased. It is important to have an empirical view of what students value in a single comprehensive study and, also, to know if there is a hierarchy of factors, ranging from students who are least to most critical of the online learning experience. This research study has two research questions.

The first research question is: What are the significant factors in creating a high-quality online learning experience from students’ perspectives? That is important to know because it should have a significant effect on the instructor’s design of online classes. The goal of this research question is to identify a more articulated and empirically supported set of factors capturing the full range of student expectations.

The second research question is: Is there a priority or hierarchy of factors related to students’ perceptions of online teaching quality that relate to their decisions to enroll in online classes? For example, is it possible to distinguish which factors are critical for enrollment decisions when students are primarily motivated by convenience and scheduling flexibility (minimum threshold)? Do these factors differ for students with a genuine acceptance of the general quality of online courses (a moderate threshold)? What are the factors that are important for the students who are the most critical of online course delivery (highest threshold)?

This article next reviews the literature on online education quality, focusing on the student perspective, and reviews seven factors derived from it. The research methods section discusses the study structure and methods. Demographic data related to the sample are next, followed by the results, discussion, and conclusion.

Literature review

Online education is much discussed (Prinsloo, 2016; Van Wart et al., 2019; Zawacki-Richter & Naidu, 2016), but its perception is substantially influenced by where you stand and what you value (Otter et al., 2013; Tanner, Noser, & Totaro, 2009). Accrediting bodies care about meeting technical standards, proof of effectiveness, and consistency (Grandzol & Grandzol, 2006). Institutions care about reputation, rigor, student satisfaction, and institutional efficiency (Jung, 2011). Faculty care about subject coverage, student participation, faculty satisfaction, and faculty workload (Horvitz, Beach, Anderson, & Xia, 2015; Mansbach & Austin, 2018). For their part, students care about learning achievement (Marks, Sibley, & Arbaugh, 2005; O’Neill & Sai, 2014; Shen, Cho, Tsai, & Marra, 2013), but also view online education as a function of their enjoyment of classes, instructor capability and responsiveness, and comfort in the learning environment (e.g., Asoodar et al., 2016; Sebastianelli, Swift, & Tamimi, 2015). It is this last perspective, of students, upon which we focus.

It is important to note that students do not sign up for online classes solely based on perceived quality. Perceptions of quality derive from notions of the capacity of online learning when ideal (relative to both learning achievement and satisfaction/enjoyment) and from perceptions about the likelihood and experience of classes living up to expectations. Students also sign up because of convenience and flexibility, and personal notions of suitability about learning. Convenience and flexibility are enormous drivers of online registration (Lee, Stringer, & Du, 2017; Mann & Henneberry, 2012). Even when students say they prefer face-to-face classes to online, many enroll in online classes and re-enroll in the future if the experience meets minimum expectations. This study examines the threshold expectations of students when they are considering taking online classes.

When discussing students’ perceptions of quality, there is little clarity about the actual range of concepts because no integrated empirical studies exist comparing major factors found throughout the literature. Rather, there are practitioner-generated lists of micro-competencies such as the Quality Matters consortium for higher education (Quality Matters, 2018), or broad frameworks encompassing many aspects of quality beyond teaching (Open and Distant Learning Quality Council, 2012). While checklists are useful for practitioners and accreditation processes, they do not provide robust, theoretical bases for scholarly development. Overarching frameworks are heuristically useful, but not for pragmatic purposes or theory building arenas. The most prominent theoretical framework used in online literature is the Community of Inquiry (CoI) model (Arbaugh et al., 2008; Garrison, Anderson, & Archer, 2003), which divides instruction into teaching, cognitive, and social presence. As with deductive theories generally, however, the supporting evidence is mixed (Rourke & Kanuka, 2009), especially regarding the importance of social presence (Annand, 2011; Armellini and De Stefani, 2016). Conceptually, the problem is not so much with the narrow articulation of cognitive or social presence; cognitive presence is how the instructor provides opportunities for students to interact with material in robust, thought-provoking ways, and social presence refers to building a community of learning that incorporates student-to-student interactions. However, teaching presence includes everything else the instructor does: structuring the course, providing lectures, explaining assignments, creating rehearsal opportunities, supplying tests, grading, answering questions, and so on. These challenges become even more prominent in the online context. While the lecture as a single medium is paramount in face-to-face classes, it fades as the primary vehicle in online classes with increased use of detailed syllabi, electronic announcements, recorded and synchronous lectures, 24/7 communications related to student questions, etc. Amassing the pedagogical and technological elements related to teaching under a single concept provides little insight.

In addition to the CoI model, numerous concepts are suggested in single-factor empirical studies when focusing on quality from a student’s perspective, with overlapping conceptualizations and nonstandardized naming conventions. Seven distinct factors are derived here from the literature of student perceptions of online quality: Instructional Support, Teaching Presence, Basic Online Modality, Social Presence, Online Social Comfort, Cognitive Presence, and Online Interactive Modality.

Instructional support

Instructional Support refers to students’ perceptions of techniques used by the instructor for input, rehearsal, feedback, and evaluation. Specifically, this entails providing detailed instructions, designed use of multimedia, and the balance between repetitive class features for ease of use and techniques to prevent boredom. Instructional Support is often included as an element of Teaching Presence, but is also labeled “structure” (Lee & Rha, 2009; So & Brush, 2008) and instructor facilitation (Eom, Wen, & Ashill, 2006). A prime example of the difference between face-to-face and online education is the extensive use of the “flipped classroom” (Maycock, 2019; Wang, Huang, & Schunn, 2019), in which students move to rehearsal activities faster and more frequently than in traditional classrooms, with less instructor lecture (Jung, 2011; Martin, Wang, & Sadaf, 2018). It has been consistently supported as an element of student perceptions of quality (Espasa & Meneses, 2010).

Teaching presence

Teaching Presence refers to students’ perceptions about the quality of communication in lectures, directions, and individual feedback including encouragement (Jaggars & Xu, 2016; Marks et al., 2005). Specifically, instructor communication is clear, focused, and encouraging, and instructor feedback is customized and timely. If Instructional Support is what an instructor does before the course begins and in carrying out those plans, then Teaching Presence is what the instructor does while the class is conducted and in response to specific circumstances. For example, a course could be well designed but poorly delivered because the instructor is distracted; or a course could be poorly designed but an instructor might make up for the deficit by spending time and energy in elaborate communications and ad hoc teaching techniques. It is especially important in student satisfaction (Sebastianelli et al., 2015; Young, 2006) and is also referred to as instructor presence (Asoodar et al., 2016), learner-instructor interaction (Marks et al., 2005), and staff support (Jung, 2011). As with Instructional Support, it has been consistently supported as an element of student perceptions of quality.

Basic online modality

Basic Online Modality refers to the competent use of basic online class tools: online grading, navigation methods, online grade book, and the announcements function. It is frequently clumped with instructional quality (Artino, 2010), service quality (Mohammadi, 2015), instructor expertise in e-teaching (Paechter, Maier, & Macher, 2010), and similar terms. As a narrowly defined concept, it is sometimes called technology (Asoodar et al., 2016; Bollinger & Martindale, 2004; Sun et al., 2008). The only empirical study that did not find Basic Online Modality significant, as technology, was Sun et al. (2008). Because Basic Online Modality is addressed with basic instructor training, some studies assert the importance of training (e.g., Asoodar et al., 2016).

Social presence

Social Presence refers to students’ perceptions of the quality of student-to-student interaction. Social Presence focuses on the quality of shared learning and collaboration among students, such as in threaded discussion responses (Garrison et al., 2003; Kehrwald, 2008). Much emphasized but challenged in the CoI literature (Rourke & Kanuka, 2009), it has mixed support in the online literature. While some studies found Social Presence or related concepts to be significant (e.g., Asoodar et al., 2016; Bollinger & Martindale, 2004; Eom et al., 2006; Richardson, Maeda, Lv, & Caskurlu, 2017), others found Social Presence insignificant (Joo, Lim, & Kim, 2011; So & Brush, 2008; Sun et al., 2008).

Online social comfort

Online Social Comfort refers to the instructor’s ability to provide an environment in which anxiety is low and students feel comfortable interacting even when expressing opposing viewpoints. While numerous studies have examined anxiety (e.g., Liaw & Huang, 2013; Otter et al., 2013; Sun et al., 2008), only one found anxiety insignificant (Asoodar et al., 2016); many others have not examined the concept.

Cognitive presence

Cognitive Presence refers to the engagement of students such that they perceive they are stimulated by the material and instructor to reflect deeply and critically, and seek to understand different perspectives (Garrison et al., 2003). The instructor provides instructional materials and facilitates an environment that piques interest, is reflective, and enhances inclusiveness of perspectives (Durabi, Arrastia, Nelson, Cornille, & Liang, 2011). Cognitive Presence includes enhancing the applicability of material for students’ potential or current careers. Cognitive Presence is supported as significant in many online studies (e.g., Artino, 2010; Asoodar et al., 2016; Joo et al., 2011; Marks et al., 2005; Sebastianelli et al., 2015; Sun et al., 2008). Further, while many instructors perceive that cognitive presence is diminished in online settings, neuroscientific studies indicate this need not be the case (Takamine, 2017). While numerous studies failed to examine Cognitive Presence, this review found no studies that lessened its significance for students.

Interactive online modality

Interactive Online Modality refers to the “high-end” usage of online functionality. That is, the instructor makes good use of interactive online class tools (video lectures, videoconferencing, and small group discussions). It is often included in concepts such as instructional quality (Artino, 2010; Asoodar et al., 2016; Mohammadi, 2015; Otter et al., 2013; Paechter et al., 2010) or engagement (Clayton, Blumberg, & Anthony, 2018). While individual methods have been investigated (e.g., Durabi et al., 2011), high-end engagement methods have not.

Other independent variables affecting perceptions of quality include age, undergraduate versus graduate status, gender, ethnicity/race, discipline, educational motivation of students, and previous online experience. While the effect of age has been found to be small or insignificant, more notable effects have been reported by level of study, with graduate students reporting higher “success” (Macon, 2011) and community college students having greater difficulty with online classes (Legon & Garrett, 2019; Xu & Jaggars, 2014). The effects of ethnicity and race have also been small or insignificant. Some situational variations and student preferences can be captured by paying attention to disciplinary differences (Arbaugh, 2005; Macon, 2011). Motivation levels of students have been reported to be significant in completion and achievement, with better students doing as well across face-to-face and online modes, and weaker students having greater completion and achievement challenges (Clayton et al., 2018; Lu & Lemonde, 2013).

Research methods

To examine the various quality factors, we apply a critical success factor methodology, initially introduced to business school research in the 1970s. In 1981, Rockhart and Bullen codified an approach embodying principles of critical success factors (CSFs) as a way to identify the information needs of executives, detailing steps for the collection and analysis of data to create a set of organizational CSFs (Rockhart & Bullen, 1981). CSFs describe the underlying or guiding principles which must be incorporated to ensure success.

Utilizing this methodology, CSFs in the context of this paper define key areas of instruction and design essential for an online class to be successful from a student’s perspective. Instructors implicitly know and consider these areas when setting up an online class and designing and directing activities and tasks important to achieving learning goals. CSFs make explicit those things good instructors may intuitively know and (should) do to enhance student learning. When made explicit, CSFs not only confirm the knowledge of successful instructors, but tap their intuition to guide and direct the accomplishment of quality instruction for entire programs. In addition, CSFs are linked with goals and objectives, helping generate a small number of truly important matters an instructor should focus attention on to achieve different thresholds of online success.

After a comprehensive literature review, an instrument was created to measure students’ perceptions about the importance of techniques and indicators leading to quality online classes. Items were designed to capture the major factors in the literature. The instrument was piloted during academic year 2017–18 with a 397-student sample, facilitating an exploratory factor analysis leading to important preliminary findings (reference withheld for review). Based on the pilot, survey items were added and refined to include seven groups of quality teaching factors and two groups of items related to students’ overall acceptance of online classes, as well as a variable on their future online class enrollment. Demographic information was gathered to determine its effects on students’ levels of acceptance of online classes based on age, year in program, major, distance from university, number of online classes taken, high school experience with online classes, and communication preferences.

This paper draws evidence from a sample of students enrolled in educational programs at Jack H. Brown College of Business and Public Administration (JHBC), California State University San Bernardino (CSUSB). The JHBC offers a wide range of online courses for undergraduate and graduate programs. To ensure comparable learning outcomes, online classes and face-to-face classes of a certain subject are similar in size—undergraduate classes are generally capped at 60 and graduate classes at 30, and often taught by the same instructors. Students sometimes have the option to choose between both face-to-face and online modes of learning.

A Qualtrics survey link was sent out by 11 instructors to students who were unlikely to be cross-enrolled in classes during the 2018–19 academic year. Approximately 2500 students were contacted, with some instructors providing class time to complete the anonymous survey. All students, whether they had taken an online class or not, were encouraged to respond. Nine hundred eighty-seven students responded, representing a 40% response rate. Although drawn from a single business school, it is a broad sample representing students from several disciplines (management, accounting and finance, marketing, information decision sciences, and public administration) as well as both graduate and undergraduate programs of study.

The sample is young, with 78% of students being under 30. The sample has almost no lower division students (i.e., freshman and sophomore), 73% upper division students (i.e., junior and senior), and 24% graduate students (master’s level). Only 17% reported having taken a hybrid or online class in high school. There was a wide range of exposure to university level online courses, with 47% reporting having taken 1 to 4 classes, and 21% reporting no online class experience. As the university is a Hispanic-serving institution, 54% of respondents self-identified as Latino, 18% as White, and 13% as Asian and Pacific Islander. The five largest majors were accounting & finance (25%), management (21%), master of public administration (16%), marketing (12%), and information decision sciences (10%). Seventy-four percent work full- or part-time. See Table 1 for demographic data.

Measures and procedure

To increase the reliability of evaluation scores, composite evaluation variables are formed after an exploratory factor analysis of individual evaluation items. A principal component method with Quartimin (oblique) rotation was applied to explore the factor construct of student perceptions of online teaching CSFs. Items whose coefficients for student perceptions of importance were greater than .30 were included, a commonly accepted threshold in factor analysis. A simple least-squares regression analysis was applied to test the significance levels of factors on students’ impression of online classes.
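As a rough illustration of the extraction step described above, the following Python sketch computes unrotated principal component loadings from an item correlation matrix and flags items that clear the .30 threshold. It is a minimal sketch under stated assumptions, not the procedure used in the study: the data are synthetic, the function name `pca_loadings` is hypothetical, and the Quartimin (oblique) rotation is omitted.

```python
import numpy as np

def pca_loadings(responses, n_factors):
    """Unrotated principal component loadings from the item correlation matrix.

    responses: 2-D array, rows = respondents, columns = survey items.
    Returns (loadings, share of total variance explained by the kept components).
    """
    corr = np.corrcoef(responses, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)          # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_factors]    # indices of the largest ones
    loadings = eigvecs[:, order] * np.sqrt(eigvals[order])
    explained = eigvals[order].sum() / eigvals.sum()
    return loadings, explained

# Synthetic data (assumption): two latent traits, two noisy items each.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
noise = 0.3 * rng.normal(size=(200, 4))
responses = np.column_stack([latent[:, 0], latent[:, 0],
                             latent[:, 1], latent[:, 1]]) + noise

loadings, explained = pca_loadings(responses, n_factors=2)
retained = np.abs(loadings).max(axis=1) > 0.30       # apply the .30 threshold
```

A rotation step would normally follow before interpreting which items belong to which factor; the unrotated loadings here only show the mechanics of the threshold.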

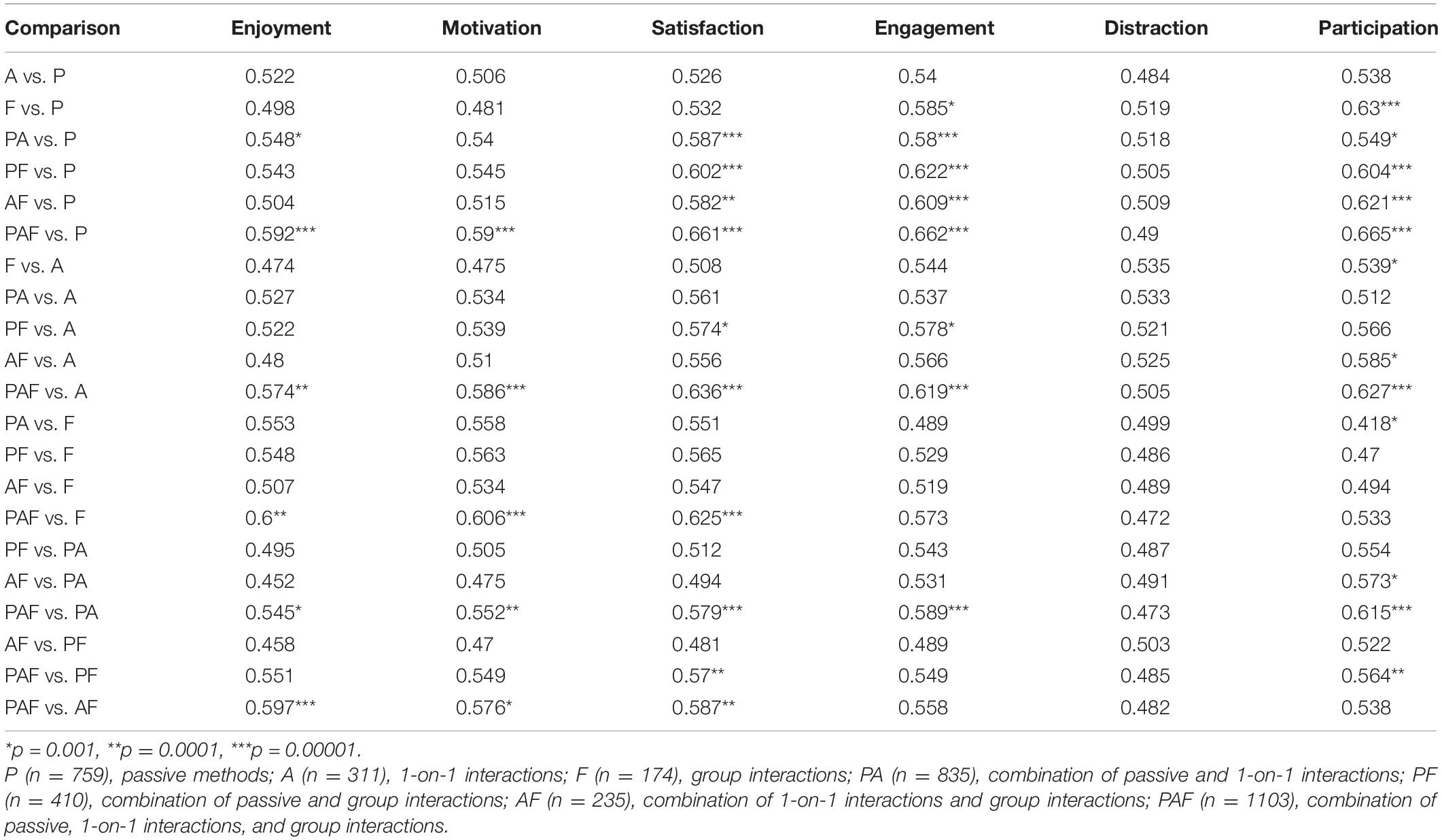

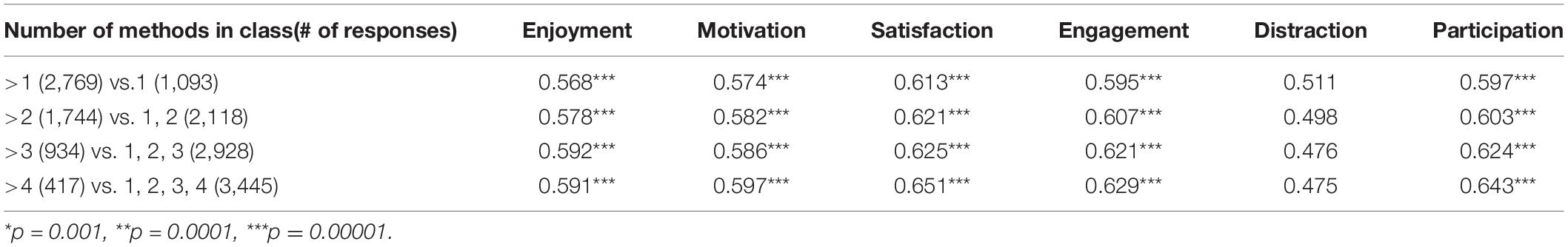

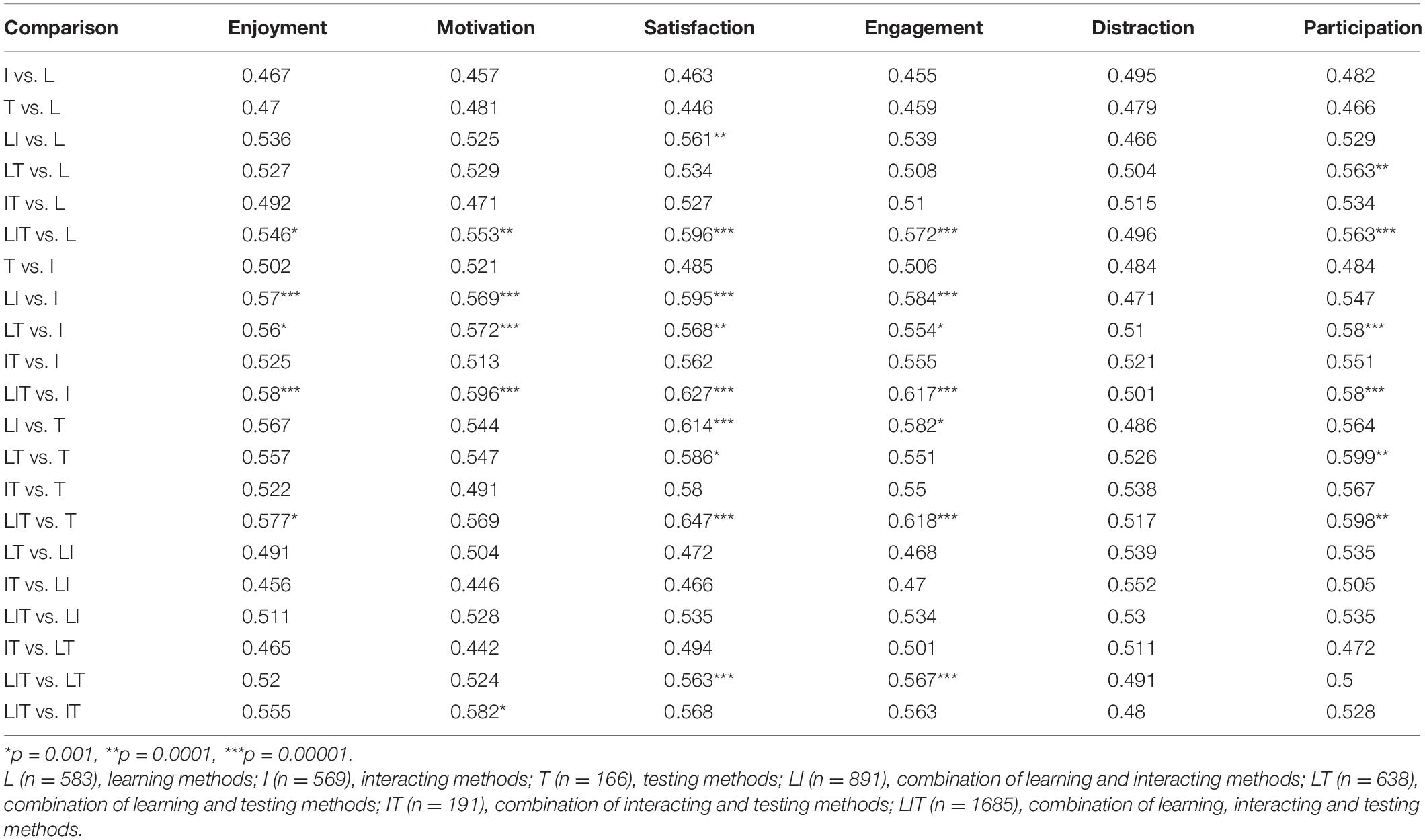

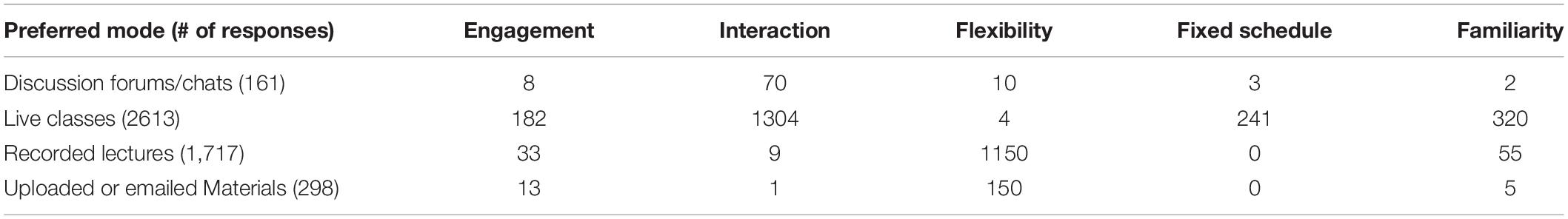

Exploratory factor constructs

Using a threshold loading of 0.3 for items, 37 items loaded on seven factors. All factors were logically consistent. The first factor, with eight items, was labeled Teaching Presence. Items included providing clear instructions, staying on task, clear deadlines, and customized feedback on strengths and weaknesses. Teaching Presence items all related to instructor involvement during the course as a director, monitor, and learning facilitator. The second factor, with seven items, aligned with Cognitive Presence. Items included stimulating curiosity, opportunities for reflection, helping students construct explanations posed in online courses, and the applicability of material. The third factor, with six items, aligned with Social Presence defined as providing student-to-student learning opportunities. Items included getting to know course participants for sense of belonging, forming impressions of other students, and interacting with others. The fourth factor, with six new items as well as two (“interaction with other students” and “a sense of community in the class”) shared with the third factor, was Instructional Support which related to the instructor’s roles in providing students a cohesive learning experience. They included providing sufficient rehearsal, structured feedback, techniques for communication, navigation guide, detailed syllabus, and coordinating student interaction and creating a sense of online community. This factor also included enthusiasm which students generally interpreted as a robustly designed course, rather than animation in a traditional lecture. The fifth factor was labeled Basic Online Modality and focused on the basic technological requirements for a functional online course. Three items included allowing students to make online submissions, use of online gradebooks, and online grading. 
A fourth item is the use of online quizzes, viewed by students as mechanical practice opportunities rather than small tests, and a fifth is navigation, a key component of Online Modality. The sixth factor, which loaded on four items, was labeled Online Social Comfort. Items here included comfort discussing ideas online, comfort disagreeing, developing a sense of collaboration via discussion, and considering online communication as an excellent medium for social interaction. The final factor was called Interactive Online Modality because it included items for “richer” communications or interactions, no matter whether one- or two-way. Items included videoconferencing, instructor-generated videos, and small group discussions. Taken together, these seven factors explained 67% of the variance, which is considered in the acceptable range in social science research for a robust model (Hair, Black, Babin, & Anderson, 2014). See Table 2 for the full list.

To test for factor reliability, Cronbach’s alpha was calculated for each variable. All produced values greater than 0.7, the standard threshold used for reliability, except for system trust, which was therefore dropped. To gauge students’ sense of factor importance, item means were averaged for each factor. Factor means (lower means indicating higher importance to students) ranged from 1.5 to 2.6 on a 5-point scale. Basic Online Modality was most important, followed by Instructional Support and Teaching Presence. Students deemed Cognitive Presence, Social Online Comfort, and Online Interactive Modality less important. The least important for this sample was Social Presence. Table 3 arrays the critical success factor means, standard deviations, and Cronbach’s alpha.
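For readers unfamiliar with the reliability statistic, the standard Cronbach's alpha formula can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `cronbach_alpha` and the response matrix are hypothetical and are not drawn from the study data.

```python
import numpy as np

def cronbach_alpha(items):
    """Cronbach's alpha for a respondents-by-items score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]                               # number of items in the scale
    item_variances = items.var(axis=0, ddof=1)       # sample variance of each item
    total_variance = items.sum(axis=1).var(ddof=1)   # variance of the summed scale
    return (k / (k - 1)) * (1 - item_variances.sum() / total_variance)

# Hypothetical 5-point responses: 5 respondents, 3 items of one factor.
scores = np.array([[1, 2, 1],
                   [2, 2, 2],
                   [3, 3, 4],
                   [4, 5, 4],
                   [5, 4, 5]])
alpha = cronbach_alpha(scores)
is_reliable = alpha > 0.7                            # threshold named in the text
```

Items that move together across respondents push alpha toward 1, while unrelated items pull it toward 0.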

To determine whether particular subgroups of respondents viewed factors differently, a series of ANOVAs was conducted using factor means as dependent variables. Six demographic variables were used as independent variables: graduate vs. undergraduate, age, work status, ethnicity, discipline, and past online experience. To determine strength of association of the independent variables to each of the seven CSFs, eta squared was calculated for each ANOVA. Eta squared indicates the proportion of variance in the dependent variable explained by the independent variable. Eta squared values greater than .01, .06, and .14 are conventionally interpreted as small, medium, and large effect sizes, respectively (Green & Salkind, 2003). Table 4 summarizes the eta squared values for the ANOVA tests, with eta squared values less than .01 omitted.
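The effect-size calculation described above can be sketched as follows for a one-way ANOVA, where eta squared is the between-group sum of squares divided by the total sum of squares. The function names and the example groups are hypothetical; the cutoffs are the conventional ones cited in the text.

```python
import numpy as np

def eta_squared(groups):
    """Eta squared for a one-way design: SS_between / SS_total."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    all_values = np.concatenate(groups)
    grand_mean = all_values.mean()
    ss_between = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_total = ((all_values - grand_mean) ** 2).sum()
    return ss_between / ss_total

def effect_label(eta2):
    """Conventional interpretation: > .01 small, > .06 medium, > .14 large."""
    if eta2 > 0.14:
        return "large"
    if eta2 > 0.06:
        return "medium"
    if eta2 > 0.01:
        return "small"
    return "negligible"

# Hypothetical factor means for two subgroups (e.g., graduate vs. undergraduate).
example = eta_squared([[1, 2, 3], [4, 5, 6]])
```

Under these cutoffs a value of .05 is labeled small, although it sits close to the medium threshold.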

While no significant differences in factor means occur among students in different disciplines in the College, all five other independent variables have some small effect on some or all CSFs. Graduate students tend to rate Online Interactive Modality, Instructional Support, Teaching Presence, and Cognitive Presence higher than undergraduates. Older students place more value on Online Interactive Modality. Full-time working students rate all factors, except Social Online Comfort, slightly higher than part-timers and non-working students. Latino and White students rate Basic Online Modality and Instructional Support higher; Asian and Pacific Islander students rate Social Presence higher. Students who have taken more online classes rate all factors higher.

In addition to factor scores, two variables are constructed to identify the resultant impressions labeled online experience. Both were logically consistent with a Cronbach’s α greater than 0.75. The first variable, with six items, labeled “online acceptance,” included items such as “I enjoy online learning,” “My overall impression of hybrid/online learning is very good,” and “the instructors of online/hybrid classes are generally responsive.” The second variable was labeled “face-to-face preference” and combines four items, including enjoying, learning, and communicating more in face-to-face classes, as well as perceiving greater fairness and equity. In addition to these two constructed variables, a one-item variable was also used subsequently in the regression analysis: “online enrollment.” That question asked: if hybrid/online classes are well taught and available, how much would online education make up your entire course selection going forward?

Regression results

As noted above, two constructed variables and one item were used as dependent variables for purposes of regression analysis. They were online acceptance, F2F preference, and the selection of online classes. In addition to seven quality-of-teaching factors identified by factor analysis, control variables included level of education (graduate versus undergraduate), age, ethnicity, work status, distance to university, and number of online/hybrid classes taken in the past. See Table 5.
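The general shape of such a regression can be sketched with ordinary least squares in NumPy. This is a minimal sketch, assuming synthetic noise-free data and hypothetical variable names; it estimates coefficients only and does not reproduce the significance tests or the control variables reported in the tables.

```python
import numpy as np

def ols_coefficients(predictors, outcome):
    """Least-squares coefficients, with an intercept in the first position."""
    X = np.column_stack([np.ones(len(outcome)), predictors])
    coefficients, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    return coefficients

# Synthetic example (assumption): two factor scores predicting one outcome.
rng = np.random.default_rng(1)
factor_scores = rng.normal(size=(50, 2))
online_acceptance = 1.0 + 2.0 * factor_scores[:, 0] - 0.5 * factor_scores[:, 1]

coef = ols_coefficients(factor_scores, online_acceptance)
```

With real survey data, each dependent variable (online acceptance, F2F preference, online enrollment) would be regressed in turn on the seven factors plus the controls.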

When the eta squared values from the ANOVAs were examined for control factors, only one was close to a medium effect. Graduate versus undergraduate status had a .05 effect (just below the .06 threshold for a medium effect) related to Online Interactive Modality, meaning graduate students were more sensitive to interactive modality than undergraduates. Multiple regression analyses of critical success factors and online impressions were conducted to compare under what conditions factors were significant. The only consistently significant control factor was number of online classes taken. The more classes students had taken online, the more inclined they were to take future classes. Level of program, age, ethnicity, and working status do not significantly affect students’ choice or overall acceptance of online classes.

The least restrictive condition was online enrollment (Table 6). That is, students might not feel online courses were ideal, but because of convenience and scheduling might enroll in them if minimum threshold expectations were met. When considering online enrollment, three factors were significant and positive (at the 0.1 level): Basic Online Modality, Cognitive Presence, and Online Social Comfort. These least-demanding students expected classes to have basic technological functionality, provide good opportunities for knowledge acquisition, and provide comfortable interaction in small groups. Students who demand good Instructional Support (e.g., rehearsal opportunities, standardized feedback, clear syllabus) are less likely to enroll.

Online acceptance was more restrictive (see Table 7). This variable captured the idea that students not only enrolled in online classes out of necessity, but with an appreciation of the positive attributes of online instruction, which balanced the negative aspects. When this standard was applied, students expected not only Basic Online Modality, Cognitive Presence, and Online Social Comfort, but also expected their instructors to be highly engaged virtually as the course progressed (Teaching Presence), and to create strong student-to-student dynamics (Social Presence). Students who rated Instructional Support higher are less accepting of online classes.

Another restrictive condition was catering to the needs of students who preferred face-to-face classes (see Table 8). That is, they preferred face-to-face classes even when online classes were well taught. Unlike students more accepting of, or more likely to enroll in, online classes, this group rates Instructional Support as critical to enrolling, rather than a negative factor when absent. Again unlike the other two groups, these students demand appropriate interactive mechanisms (Online Interactive Modality) to enable richer communication (e.g., videoconferencing). Student-to-student collaboration (Social Presence) was also significant. This group also rated Cognitive Presence and Online Social Comfort as significant, but only in their absence. That is, these students were most attached to direct interaction with the instructor and other students rather than specific teaching methods. Interestingly, Basic Online Modality and Teaching Presence were not significant. Our interpretation here is that this student group, most critical of online classes for their loss of physical interaction, is beyond being concerned with mechanical technical interaction and demands higher levels of interactivity and instructional sophistication.

Discussion and study limitations

Some past studies have used robust empirical methods to identify a single factor or a small number of factors related to quality from a student’s perspective, but have not sought to be relatively comprehensive. Others have used a longer series of itemized factors, but have used less robust methods, and have not tied those factors back to the literature. This study has used the literature to develop a relatively comprehensive list of items focused on quality teaching in a single rigorous protocol. That is, while a beta test had identified five coherent factors, substantial changes were made to the current survey that sharpened the focus on quality factors rather than antecedent factors and better articulated the array of factors often lumped under the mantle of “teaching presence.” In addition, the study has examined them based on threshold expectations: from minimal, such as when flexibility is the driving consideration, to modest, such as when students want a “good” online class, to high, when students demand an interactive virtual experience equivalent to face-to-face.

Exploratory factor analysis identified seven factors that were reliable, coherent, and significant under different conditions. When considering students’ overall sense of importance, they are, in order: Basic Online Modality, Instructional Support, Teaching Presence, Cognitive Presence, Social Online Comfort, Interactive Online Modality, and Social Presence. Students are most concerned with the basics of a course first, that is, technological and instructor competence. Next they want engagement and virtual comfort. Social Presence, while valued, is the least critical from this overall perspective.

The factor analysis is quite consistent with the range of factors identified in the literature, pointing to the fact that students can differentiate among different aspects of what have been clumped as larger concepts, such as teaching presence. Essentially, the instructor’s role in quality can be divided into her/his command of basic online functionality, good design, and good presence during the class. The instructor’s command of basic functionality is paramount. Because so much of online classes must be built in advance of the class, quality of the class design is rated more highly than the instructor’s role in facilitating the class. Taken as a whole, the instructor’s role in traditional teaching elements is primary, as we would expect it to be. Cognitive presence, especially as pertinence of the instructional material and its applicability to student interests, has always been found significant when studied, and was highly rated as well in a single factor. Finally, the degree to which students feel comfortable with the online environment and enjoy the learner-learner aspect has been less supported in empirical studies, was found significant here, but rated the lowest among the factors of quality to students.

Regression analysis paints a more nuanced picture, depending on student focus. It also helps explain some of the heterogeneity of previous studies, depending on what the dependent variables were. If convenience and scheduling are critical and students are less demanding, minimum requirements are Basic Online Modality, Cognitive Presence, and Online Social Comfort. That is, students expect an instructor who knows how to use an online platform, delivers useful information, and provides a comfortable learning environment. However, they do not expect to get poor design. They do not expect much in terms of the quality of teaching presence, learner-to-learner interaction, or interactive teaching.

When students are signing up for critical classes, or they have both F2F and online options, they have a higher standard. That is, they not only expect the factors for decisions about enrolling in noncritical classes, but they also expect good Teaching and Social Presence. Students who simply need a class may be willing to teach themselves a bit more, but students who want a good class expect a highly present instructor in terms of responsiveness and immediacy. “Good” classes must not only create a comfortable atmosphere, but in social science classes at least, must provide strong learner-to-learner interactions as well. At the time of the research, most students believed that you can have a good class without high interactivity via pre-recorded video and videoconference. That may, or may not, change over time as various video media become easier to use, more reliable, and more commonplace.

The most demanding students are those who prefer F2F classes because of learning style preferences, poor past experiences, or both. Such students (seem to) assume that a worthwhile online class has basic functionality and that the instructor provides a strong presence. They are also critical of the absence of Cognitive Presence and Online Social Comfort. They want strong Instructional Support and Social Presence. But in addition, and uniquely, they expect Online Interactive Modality, which provides the greatest possible verisimilitude to the traditional classroom. More than the other two groups, these students crave human interaction in the learning process, both with the instructor and other students.

These findings shed light on the possible ramifications of the COVID-19 aftermath. Many universities around the world jumped from relatively low levels of online instruction in the beginning of spring 2020 to nearly 100% by mandate by the end of the spring term. The question becomes, what will happen after the mandate is removed? Will demand resume pre-crisis levels, will it increase modestly, or will it skyrocket? Time will be the best judge, but the findings here would suggest that the ability/interest of instructors and institutions to “rise to the occasion” with quality teaching will have as much effect on demand as students becoming more acclimated to online learning. If in the rush to get classes online many students experience shoddy basic functional competence, poor instructional design, sporadic teaching presence, and poorly implemented cognitive and social aspects, they may be quite willing to return to the traditional classroom. If faculty and institutions supporting them are able to increase the quality of classes despite time pressures, then most students may be interested in more hybrid and fully online classes. If instructors are able to introduce high quality interactive teaching, nearly the entire student population will be interested in more online classes. Of course students will have a variety of experiences, but this analysis suggests that those instructors, departments, and institutions that put greater effort into the temporary adjustment (and who resist less), will be substantially more likely to have increases in demand beyond what the modest national trajectory has been for the last decade or so.

There are several study limitations. First, the study does not include a sample of non-respondents. Non-respondents may have a somewhat different profile. Second, the study draws from a single college and university. The profile derived here may vary significantly by type of student. Third, some survey statements may have led respondents to rate quality based upon experience rather than assess the general importance of online course elements. For example, “I felt comfortable participating in the course discussions” could be revised to “comfort in participating in course discussions.” The authors weighed differences among subgroups (e.g., among majors) as small and statistically insignificant. However, it is possible that differences between biology and marketing students would be significant, leading factors to be differently ordered. Emphasis and ordering might vary at a community college versus a research-oriented university (Gonzalez, 2009).

Availability of data and materials

We will make the data available.

Al-Gahtani, S. S. (2016). Empirical investigation of e-learning acceptance and assimilation: A structural equation model. Applied Comput Information , 12 , 27–50.

Google Scholar

Alqurashi, E. (2016). Self-efficacy in online learning environments: A literature review. Contemporary Issues Educ Res (CIER) , 9 (1), 45–52.

Anderson, T. (2016). A fourth presence for the Community of Inquiry model? Retrieved from https://virtualcanuck.ca/2016/01/04/a-fourth-presence-for-the-community-of-inquiry-model/ .

Annand, D. (2011). Social presence within the community of inquiry framework. The International Review of Research in Open and Distributed Learning , 12 (5), 40.

Arbaugh, J. B. (2005). How much does “subject matter” matter? A study of disciplinary effects in on-line MBA courses. Academy of Management Learning & Education , 4 (1), 57–73.

Arbaugh, J. B., Cleveland-Innes, M., Diaz, S. R., Garrison, D. R., Ice, P., Richardson, J. C., & Swan, K. P. (2008). Developing a community of inquiry instrument: Testing a measure of the Community of Inquiry framework using a multi-institutional sample. Internet and Higher Education , 11 , 133–136.

Armellini, A., & De Stefani, M. (2016). Social presence in the 21st century: An adjustment to the Community of Inquiry framework. British Journal of Educational Technology , 47 (6), 1202–1216.

Arruabarrena, R., Sánchez, A., Blanco, J. M., et al. (2019). Integration of good practices of active methodologies with the reuse of student-generated content. International Journal of Educational Technology in Higher Education , 16 , #10.

Arthur, L. (2009). From performativity to professionalism: Lecturers’ responses to student feedback. Teaching in Higher Education , 14 (4), 441–454.

Artino, A. R. (2010). Online or face-to-face learning? Exploring the personal factors that predict students’ choice of instructional format. Internet and Higher Education , 13 , 272–276.

Asoodar, M., Vaezi, S., & Izanloo, B. (2016). Framework to improve e-learner satisfaction and further strengthen e-learning implementation. Computers in Human Behavior , 63 , 704–716.

Bernard, R. M., et al. (2004). How does distance education compare with classroom instruction? A meta-analysis of the empirical literature. Review of Educational Research , 74 (3), 379–439.

Bollinger, D., & Martindale, T. (2004). Key factors for determining student satisfaction in online courses. Int J E-learning , 3 (1), 61–67.

Brinkley-Etzkorn, K. E. (2018). Learning to teach online: Measuring the influence of faculty development training on teaching effectiveness through a TPACK lens. The Internet and Higher Education , 38 , 28–35.

Chickering, A. W., & Gamson, Z. F. (1987). Seven principles for good practice in undergraduate education. AAHE Bulletin , 3 , 7.

Choi, I., Land, S. M., & Turgeon, A. J. (2005). Scaffolding peer-questioning strategies to facilitate metacognition during online small group discussion. Instructional Science , 33 , 483–511.

Clayton, K. E., Blumberg, F. C., & Anthony, J. A. (2018). Linkages between course status, perceived course value, and students’ preferences for traditional versus non-traditional learning environments. Computers & Education , 125 , 175–181.

Cleveland-Innes, M., & Campbell, P. (2012). Emotional presence, learning, and the online learning environment. The International Review of Research in Open and Distributed Learning , 13 (4), 269–292.

Cohen, A., & Baruth, O. (2017). Personality, learning, and satisfaction in fully online academic courses. Computers in Human Behavior , 72 , 1–12.

Crews, T., & Butterfield, J. (2014). Data for flipped classroom design: Using student feedback to identify the best components from online and face-to-face classes. Higher Education Studies , 4 (3), 38–47.

Dawson, P., Henderson, M., Mahoney, P., Phillips, M., Ryan, T., Boud, D., & Molloy, E. (2019). What makes for effective feedback: Staff and student perspectives. Assessment & Evaluation in Higher Education , 44 (1), 25–36.

Drew, C., & Mann, A. (2018). Unfitting, uncomfortable, unacademic: A sociological reading of an interactive mobile phone app in university lectures. International Journal of Educational Technology in Higher Education , 15 , #43.

Durabi, A., Arrastia, M., Nelson, D., Cornille, T., & Liang, X. (2011). Cognitive presence in asynchronous online learning: A comparison of four discussion strategies. Journal of Computer Assisted Learning , 27 (3), 216–227.

Eom, S. B., Wen, H. J., & Ashill, N. (2006). The determinants of students’ perceived learning outcomes and satisfaction in university online education: An empirical investigation. Decision Sciences Journal of Innovative Education , 4 (2), 215–235.

Espasa, A., & Meneses, J. (2010). Analysing feedback processes in an online teaching and learning environment: An exploratory study. Higher Education , 59 (3), 277–292.

Farrell, O., & Brunton, J. (2020). A balancing act: A window into online student engagement experiences. International Journal of Educational Technology in Higher Education , 17 , #25.

Fidalgo, P., Thormann, J., Kulyk, O., et al. (2020). Students’ perceptions on distance education: A multinational study. International Journal of Educational Technology in Higher Education , 17 , #18.

Flores, Ò., del-Arco, I., & Silva, P. (2016). The flipped classroom model at the university: Analysis based on professors’ and students’ assessment in the educational field. International Journal of Educational Technology in Higher Education , 13 , #21.

Garrison, D. R., Anderson, T., & Archer, W. (2003). A theory of critical inquiry in online distance education. Handbook of Distance Education , 1 , 113–127.

Gong, D., Yang, H. H., & Cai, J. (2020). Exploring the key influencing factors on college students’ computational thinking skills through flipped-classroom instruction. International Journal of Educational Technology in Higher Education , 17 , #19.

Gonzalez, C. (2009). Conceptions of, and approaches to, teaching online: A study of lecturers teaching postgraduate distance courses. Higher Education , 57 (3), 299–314.

Grandzol, J. R., & Grandzol, C. J. (2006). Best practices for online business education. International Review of Research in Open and Distance Learning , 7 (1), 1–18.

Green, S. B., & Salkind, N. J. (2003). Using SPSS: Analyzing and understanding data (3rd ed.). Upper Saddle River: Prentice Hall.

Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2014). Multivariate data analysis: Pearson new international edition . Essex: Pearson Education Limited.

Harjoto, M. A. (2017). Blended versus face-to-face: Evidence from a graduate corporate finance class. Journal of Education for Business , 92 (3), 129–137.

Hong, K.-S. (2002). Relationships between students’ instructional variables with satisfaction and learning from a web-based course. The Internet and Higher Education , 5 , 267–281.

Horvitz, B. S., Beach, A. L., Anderson, M. L., & Xia, J. (2015). Examination of faculty self-efficacy related to online teaching. Innovative Higher Education , 40 , 305–316.

Inside Higher Education and Gallup. (2019). The 2019 survey of faculty attitudes on technology. Author .

Jaggars, S. S., & Xu, D. (2016). How do online course design features influence student performance? Computers & Education , 95 , 270–284.

Joo, Y. J., Lim, K. Y., & Kim, E. K. (2011). Online university students’ satisfaction and persistence: Examining perceived level of presence, usefulness and ease of use as predictor in a structural model. Computers & Education , 57 (2), 1654–1664.

Jung, I. (2011). The dimensions of e-learning quality: From the learner’s perspective. Educational Technology Research and Development , 59 (4), 445–464.

Kay, R., MacDonald, T., & DiGiuseppe, M. (2019). A comparison of lecture-based, active, and flipped classroom teaching approaches in higher education. Journal of Computing in Higher Education , 31 , 449–471.

Kehrwald, B. (2008). Understanding social presence in text-based online learning environments. Distance Education , 29 (1), 89–106.

Kintu, M. J., Zhu, C., & Kagambe, E. (2017). Blended learning effectiveness: The relationship between student characteristics, design features and outcomes. International Journal of Educational Technology in Higher Education , 14 , #7.

Kuo, Y.-C., Walker, A. E., Schroder, K. E., & Belland, B. R. (2013). Interaction, internet self-efficacy, and self-regulated learning as predictors of student satisfaction in online education courses. The Internet and Higher Education , 20 , 35–50.

Lange, C., & Costley, J. (2020). Improving online video lectures: Learning challenges created by media. International Journal of Educational Technology in Higher Education , 17 , #16.

le Roux, I., & Nagel, L. (2018). Seeking the best blend for deep learning in a flipped classroom – Viewing student perceptions through the Community of Inquiry lens. International Journal of Educational Technology in Higher Education , 15 , #16.

Lee, H.-J., & Rha, I. (2009). Influence of structure and interaction on student achievement and satisfaction in web-based distance learning. Educational Technology & Society , 12 (4), 372–382.

Lee, Y., Stringer, D., & Du, J. (2017). What determines students’ preference of online to F2F class? Business Education Innovation Journal , 9 (2), 97–102.

Legon, R., & Garrett, R. (2019). CHLOE 3: Behind the numbers . Published online by Quality Matters and Eduventures. https://www.qualitymatters.org/sites/default/files/research-docs-pdfs/CHLOE-3-Report-2019-Behind-the-Numbers.pdf

Liaw, S.-S., & Huang, H.-M. (2013). Perceived satisfaction, perceived usefulness and interactive learning environments as predictors of self-regulation in e-learning environments. Computers & Education , 60 (1), 14–24.

Lu, F., & Lemonde, M. (2013). A comparison of online versus face-to-face teaching delivery in statistics instruction for undergraduate health science students. Advances in Health Sciences Education , 18 , 963–973.

Lundin, M., Bergviken Rensfeldt, A., Hillman, T., Lantz-Andersson, A., & Peterson, L. (2018). Higher education dominance and siloed knowledge: A systematic review of flipped classroom research. International Journal of Educational Technology in Higher Education , 15 (1).

Macon, D. K. (2011). Student satisfaction with online courses versus traditional courses: A meta-analysis . Dissertation: Northcentral University, CA.

Mann, J., & Henneberry, S. (2012). What characteristics of college students influence their decisions to select online courses? Online Journal of Distance Learning Administration , 15 (5), 1–14.

Mansbach, J., & Austin, A. E. (2018). Nuanced perspectives about online teaching: Mid-career senior faculty voices reflecting on academic work in the digital age. Innovative Higher Education , 43 (4), 257–272.

Marks, R. B., Sibley, S. D., & Arbaugh, J. B. (2005). A structural equation model of predictors for effective online learning. Journal of Management Education , 29 (4), 531–563.

Martin, F., Wang, C., & Sadaf, A. (2018). Student perception of facilitation strategies that enhance instructor presence, connectedness, engagement and learning in online courses. Internet and Higher Education , 37 , 52–65.

Maycock, K. W. (2019). Chalk and talk versus flipped learning: A case study. Journal of Computer Assisted Learning , 35 , 121–126.

McGivney-Burelle, J. (2013). Flipping Calculus. PRIMUS: Problems, Resources, and Issues in Mathematics Undergraduate Studies , 23 (5), 477–486.

Mohammadi, H. (2015). Investigating users’ perspectives on e-learning: An integration of TAM and IS success model. Computers in Human Behavior , 45 , 359–374.

Nair, S. S., Tay, L. Y., & Koh, J. H. L. (2013). Students’ motivation and teachers’ teaching practices towards the use of blogs for writing of online journals. Educational Media International , 50 (2), 108–119.

Nguyen, T. (2015). The effectiveness of online learning: Beyond no significant difference and future horizons. MERLOT Journal of Online Learning and Teaching , 11 (2), 309–319.

Ni, A. Y. (2013). Comparing the effectiveness of classroom and online learning: Teaching research methods. Journal of Public Affairs Education , 19 (2), 199–215.

Nouri, J. (2016). The flipped classroom: For active, effective and increased learning – Especially for low achievers. International Journal of Educational Technology in Higher Education , 13 , #33.

O’Neill, D. K., & Sai, T. H. (2014). Why not? Examining college students’ reasons for avoiding an online course. Higher Education , 68 (1), 1–14.

O'Flaherty, J., & Phillips, C. (2015). The use of flipped classrooms in higher education: A scoping review. The Internet and Higher Education , 25 , 85–95.

Open & Distance Learning Quality Council (2012). ODLQC standards . England: Author. https://www.odlqc.org.uk/odlqc-standards .

Ortagus, J. C. (2017). From the periphery to prominence: An examination of the changing profile of online students in American higher education. Internet and Higher Education , 32 , 47–57.

Otter, R. R., Seipel, S., Graef, T., Alexander, B., Boraiko, C., Gray, J., … Sadler, K. (2013). Comparing student and faculty perceptions of online and traditional courses. Internet and Higher Education , 19 , 27–35.

Paechter, M., Maier, B., & Macher, D. (2010). Online or face-to-face? Students’ experiences and preferences in e-learning. Internet and Higher Education , 13 , 292–329.

Prinsloo, P. (2016). (re)considering distance education: Exploring its relevance, sustainability and value contribution. Distance Education , 37 (2), 139–145.

Quality Matters (2018). Specific review standards from the QM Higher Education Rubric (6th ed.). MD: MarylandOnline.

Richardson, J. C., Maeda, Y., Lv, J., & Caskurlu, S. (2017). Social presence in relation to students’ satisfaction and learning in the online environment: A meta-analysis. Computers in Human Behavior , 71 , 402–417.

Rockart, J. F., & Bullen, C. V. (1981). A primer on critical success factors . Cambridge: Center for Information Systems Research, Massachusetts Institute of Technology.

Rourke, L., & Kanuka, H. (2009). Learning in Communities of Inquiry: A Review of the Literature. The Journal of Distance Education / Revue de l'Éducation à Distance , 23 (1), 19–48. Athabasca University Press. Retrieved August 2, 2020 from https://www.learntechlib.org/p/105542/ .

Sebastianelli, R., Swift, C., & Tamimi, N. (2015). Factors affecting perceived learning, satisfaction, and quality in the online MBA: A structural equation modeling approach. Journal of Education for Business , 90 (6), 296–305.

Shen, D., Cho, M.-H., Tsai, C.-L., & Marra, R. (2013). Unpacking online learning experiences: Online learning self-efficacy and learning satisfaction. Internet and Higher Education , 19 , 10–17.

Sitzmann, T., Kraiger, K., Stewart, D., & Wisher, R. (2006). The comparative effectiveness of web-based and classroom instruction: A meta-analysis. Personnel Psychology , 59 (3), 623–664.

So, H. J., & Brush, T. A. (2008). Student perceptions of collaborative learning, social presence and satisfaction in a blended learning environment: Relationships and critical factors. Computers & Education , 51 (1), 318–336.

Song, L., Singleton, E. S., Hill, J. R., & Koh, M. H. (2004). Improving online learning: Student perceptions of useful and challenging characteristics. The Internet and Higher Education , 7 (1), 59–70.

Sun, P. C., Tsai, R. J., Finger, G., Chen, Y. Y., & Yeh, D. (2008). What drives a successful e-learning? An empirical investigation of the critical factors influencing learner satisfaction. Computers & Education , 50 (4), 1183–1202.

Takamine, K. (2017). Michelle D. Miller: Minds online: Teaching effectively with technology. Higher Education , 73 , 789–791.

Tanner, J. R., Noser, T. C., & Totaro, M. W. (2009). Business faculty and undergraduate students’ perceptions of online learning: A comparative study. Journal of Information Systems Education , 20 (1), 29.

Tucker, B. (2012). The flipped classroom. Education Next , 12 (1), 82–83.

Van Wart, M., Ni, A., Ready, D., Shayo, C., & Court, J. (2020). Factors leading to online learner satisfaction. Business Education Innovation Journal , 12 (1), 15–24.

Van Wart, M., Ni, A., Rose, L., McWeeney, T., & Worrell, R. A. (2019). Literature review and model of online teaching effectiveness integrating concerns for learning achievement, student satisfaction, faculty satisfaction, and institutional results. Pan-Pacific Journal of Business Research , 10 (1), 1–22.

Ventura, A. C., & Moscoloni, N. (2015). Learning styles and disciplinary differences: A cross-sectional study of undergraduate students. International Journal of Learning and Teaching , 1 (2), 88–93.

Vlachopoulos, D., & Makri, A. (2017). The effect of games and simulations on higher education: A systematic literature review. International Journal of Educational Technology in Higher Education , 14 , #22.

Wang, Y., Huang, X., & Schunn, C. D. (2019). Redesigning flipped classrooms: A learning model and its effects on student perceptions. Higher Education , 78 , 711–728.

Wingo, N. P., Ivankova, N. V., & Moss, J. A. (2017). Faculty perceptions about teaching online: Exploring the literature using the technology acceptance model as an organizing framework. Online Learning , 21 (1), 15–35.

Xu, D., & Jaggars, S. S. (2014). Performance gaps between online and face-to-face courses: Differences across types of students and academic subject areas. Journal of Higher Education , 85 (5), 633–659.

Young, S. (2006). Student views of effective online teaching in higher education. American Journal of Distance Education , 20 (2), 65–77.

Zawacki-Richter, O., & Naidu, S. (2016). Mapping research trends from 35 years of publications in distance education. Distance Education , 37 (3), 245–269.

Acknowledgements

No external funding/ NA.

Author information

Authors and affiliations.

Development for the JHB College of Business and Public Administration, 5500 University Parkway, San Bernardino, California, 92407, USA

Montgomery Van Wart, Anna Ni, Pamela Medina, Jesus Canelon, Melika Kordrostami, Jing Zhang & Yu Liu

Contributions

Equal. The author(s) read and approved the final manuscript.

Corresponding author

Correspondence to Montgomery Van Wart .

Ethics declarations

Competing interests.

We have no competing interests.

Additional information

Publisher’s note.