- Open access

- Published: 01 May 2024

Novel applications of Convolutional Neural Networks in the age of Transformers

- Tansel Ersavas 1 ,

- Martin A. Smith 1 , 2 , 3 , 4 &

- John S. Mattick 1

Scientific Reports volume 14 , Article number: 10000 ( 2024 ) Cite this article

- Computational science

- Machine learning

Convolutional Neural Networks (CNNs) have been central to the Deep Learning revolution and played a key role in initiating the new age of Artificial Intelligence. However, in recent years newer architectures such as Transformers have dominated both research and practical applications. While CNNs still play critical roles in many of the newer developments such as Generative AI, they are far from being thoroughly understood and utilised to their full potential. Here we show that CNNs can recognise patterns in images with scattered pixels and can be used to analyse complex datasets by transforming them into pseudo images with minimal processing for any high dimensional dataset, representing a more general approach to the application of CNNs to datasets such as in molecular biology, text, and speech. We introduce a pipeline called DeepMapper, which allows analysis of very high dimensional datasets without intermediate filtering and dimension reduction, thus preserving the full texture of the data, enabling detection of small variations normally deemed ‘noise’. We demonstrate that DeepMapper can identify very small perturbations in large datasets with mostly random variables, and that it is superior in speed and on par in accuracy to prior work in processing large datasets with large numbers of features.

Introduction

Data volumes are increasing exponentially 1 , especially from highly complex systems whose non-linear interactions and relationships are not well understood, and which can display major or unexpected changes in response to small perturbations, known as the ‘Butterfly effect’ 2 .

In domains characterised by high-dimensional data, traditional statistical methods and Machine Learning (ML) techniques make heavy use of feature engineering that incorporates extensive filtering, selection of highly variable parameters, and dimension reduction techniques such as Principal Component Analysis (PCA) 3 . Most current tools filter out smaller changes in data, mostly considered artefacts or ‘noise’, which may contain information that is paramount to understanding the nature and behaviour of such highly complex systems 4 .

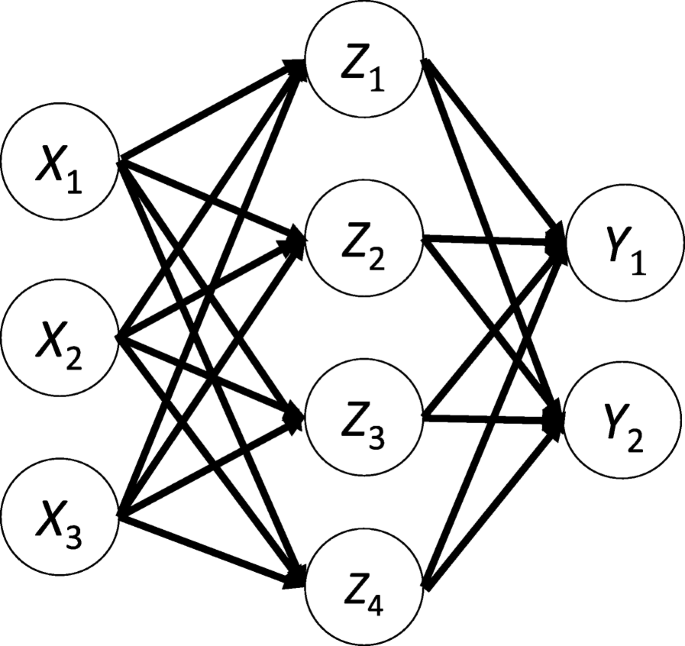

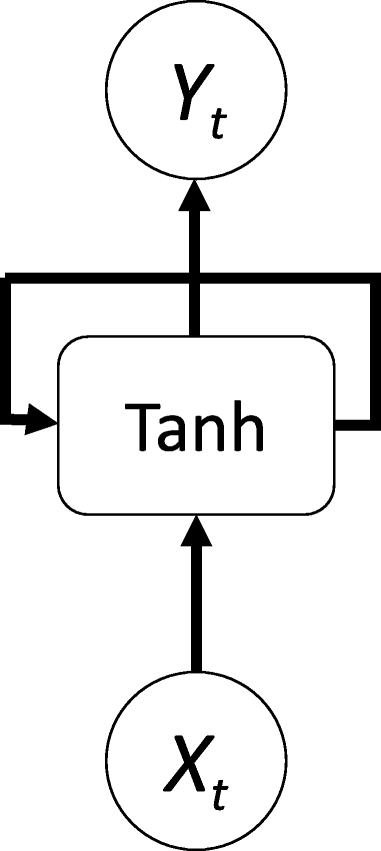

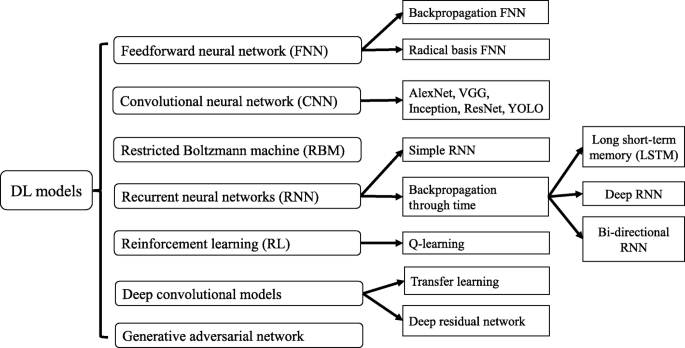

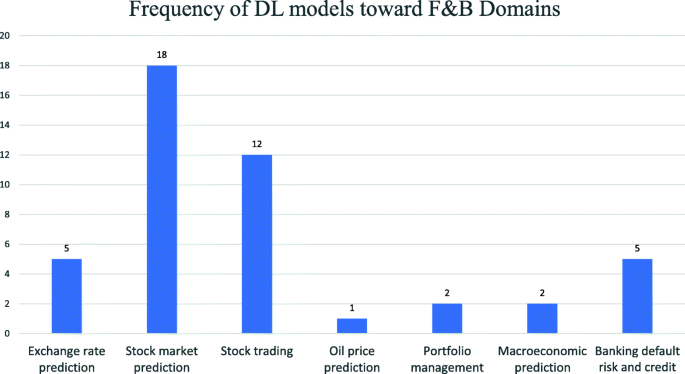

The emergence of Deep Learning (DL) offers a paradigm shift. DL algorithms, underpinned by adaptive learning mechanisms, can discern both linear and non-linear data intricacies, and open avenues to analyse data in ways that are not possible or practical with conventional techniques 5 , particularly in complex domains such as image and temporal sequence analysis, molecular biology, and astronomy 6 . DL models, such as Convolutional Neural Networks (CNNs) 7 , Recurrent Neural Networks (RNNs) 8 , Generative Networks 9 and Transformers 10 , have demonstrated exceptional performance in various domains, such as image and speech recognition, natural language processing, and game playing 6 . CNNs and LSTMs were found to be great tools to predict the behaviour of so-called ‘chaotic’ systems 11 . Modern DL systems often surpass human-level performance, and challenge humans even in creative endeavours.

CNNs utilise a unique architecture that comprises several layers, including convolutional layers, pooling layers, and fully connected layers, to process and transform the input data hierarchically 5 . CNNs have no knowledge of sequence, and therefore are generally not used in analysing time-series or similar data, which is traditionally attempted with Recurrent Neural Networks (RNNs) 12 and Long Short-Term Memory networks (LSTMs) 8 due to their ability to capture temporal patterns. Where CNNs have been employed for sequence or time-series analysis, 1-dimensional (1D) CNNs have been selected because of their vector-based 1D input structure 13 . However, attempts to analyse such data in 1D CNNs do not always give superior results 14 . In addition, GPU (Graphics Processing Unit) systems are not always optimised for processing 1D CNNs; therefore, even though 1D CNNs have fewer parameters than 2-dimensional (2D) CNNs, 2D CNNs can outperform 1D CNNs 15 .

Transformers, introduced by Vaswani et al. 10 , have recently come to prominence, particularly for tasks where data are in the form of time series or sequences, in domains ranging from language modelling to stock market prediction 16 . Transformers leverage self-attention, a key component that allows a model to weigh and focus on various parts of an input sequence when producing an output, enabling the capture of long-range dependencies in data. Unlike CNNs, which use local receptive fields, self-attention weighs the significance of various parts of the input data 17 .
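To make the contrast with local receptive fields concrete, the following is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification for illustration only: the learned query, key and value projections of a real Transformer are omitted (the raw token vectors are used for all three roles), and the function names are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        # Score this position against every position, near or far.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted mix of all value vectors.
        outputs.append([sum(wi * v[d] for wi, v in zip(w, values))
                        for d in range(len(values[0]))])
    return outputs, weights

# Assumption: no learned projections, so tokens act as Q, K and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Every row of `weights` spans the whole sequence, which is how distant positions can influence each other in a single step.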

Following success with sequence-based tasks, Transformers are being extended to image processing. Vision Transformers in object detection 18 , Detection Transformers 19 and lately Real-time Detection Transformers all claim superiority over CNNs 20 . However, their inference operations demand far more resources than those of CNNs, and they trail CNNs in flexibility. They also suffer from augmentation problems similar to those of CNNs. More recently, Retentive Networks have been offered as an alternative to Transformers 21 and may soon challenge the Transformer architecture.

CNNs can recognise dispersed patterns

Even though CNNs are widely used, there are some misconceptions, notably that CNNs are largely limited to image data, and require established spatial relationships between pixels in images, both of which are open to challenge. The latter is of particular importance when considering the potential of CNNs to analyse complex non-image datasets, whose data structures are arbitrary.

Moreover, while CNNs are universal function approximators 22 , they may not always generalise 23 , especially if they are trained on data that is insufficient to cover the solution space 24 . It is also known that they can spontaneously generalise even when supplied with a small number of samples during training after overfitting, called ‘grokking’ 25 , 26 . CNNs can generalise from scattered data if given enough samples, or if they grok, and this can be determined by observing changes to training versus testing accuracy and loss.

Non-image processing with CNNs

While CNNs have achieved remarkable success in computer vision applications, such as image classification and object detection 7 , 27 , they have also been employed in other domains to a lesser degree with impressive results, including: (1) natural language processing, text classification, sentiment analysis and named entity recognition, by treating text data as a one-dimensional image with characters represented as pixels 16 , 28 ; (2) audio processing, such as speech recognition, speaker identification and audio event detection, by applying convolutions over time frequency representations of audio signals 29 ; (3) time series analysis, such as financial market prediction, human activity recognition and medical signal analysis, using one-dimensional convolutions to capture local temporal patterns and learn features from time series data 30 ; and (4) biopolymer (e.g., DNA) sequencing, using 2D CNNs to accurately classify molecular barcodes in raw signals from Oxford Nanopore sequencers using a transformation to turn a 1D signal into 2D images—improving barcode identification recovery from 38 to over 85% 31 .

Indeed, CNNs are not perfect tools for image processing as they do not develop semantic understanding of images even though they can be trained to do semantic segmentation 32 . They cannot easily recognise negative images when trained with positive images 33 . CNNs are also sensitive to the orientation and scale of objects and must rely on augmentation of image datasets, often involving hundreds of variations of the same image 34 . There are no such changes in the perspective and orientation of data converted into flat 2D images.

In the realm of complex domains that generate huge amounts of data, augmentation is usually not required for non-image datasets, as the datasets will be rich enough. Moreover, introducing arbitrary augmentation does not always improve accuracy; indeed, introducing hand-tailored augmentation may hinder analysis 35 . If augmentation is required, it can be introduced in a data-oriented form, but even when using automated augmentation such as AutoAugment 35 or FasterAutoAugment 36 , many of the augmentations (such as shearing, translation, rotation, inversion, etc.) should not be used, and the result should be tested carefully, as augmentation may introduce artefacts.

A frequent problem with handling non-image datasets with many variables is noise. Many algorithms have been developed for noise elimination, most of which are domain specific. CNNs can be trained to use the whole input space with minimal filtering and no dimension reduction, and can find useful information in what might be ascribed as ‘noise’ 4 , 37 . Indeed, a key reason to retain ‘noise’ is to allow discovery of small perturbations that cannot be detected by other methods 11 .

Conversion of non-image data to artificial images for CNN processing

Transforming sequence data to images without resorting to dimension reduction or filtering offers a potent toolset for discerning complex patterns in time series and sequence data, which potentiates the two major advantages of CNNs compared to RNNs, LSTMs and Transformers. First, CNNs do not depend on past data to recognise current patterns, which increases sensitivity to detect patterns that appear in the beginning of time-series or sequence data. Second, 2D CNNs are better optimised for GPUs and highly parallelizable, and are consequently faster than other current architectures, which accelerates training and inference while significantly reducing resource and energy consumption in all phases, including image transformation, training, and inference.

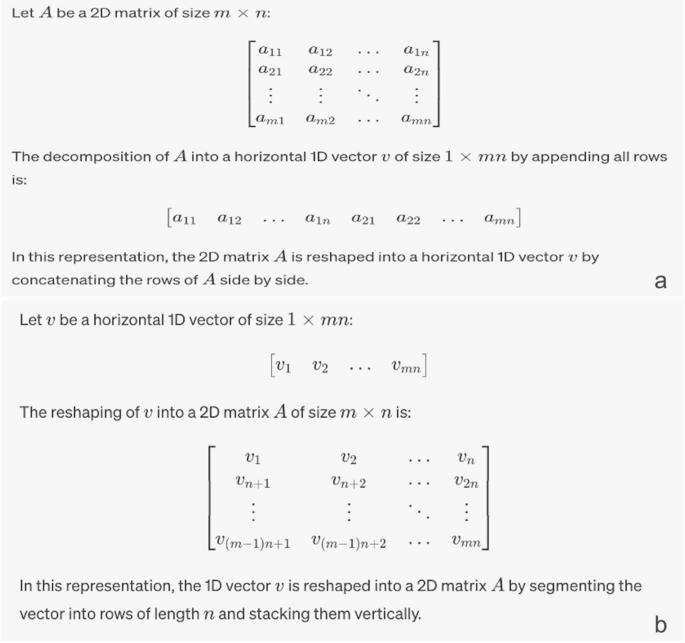

Image data such as MNIST represented in a matrix can be classified by basic deep networks such as Multi-layer Perceptrons (MLPs) by turning their matrix representation into vectors (Fig. 1 a). Using this approach, analysis of images becomes increasingly complex as the image size grows, increasing the number of input parameters of the MLP and the computational cost exponentially. On the other hand, 2D CNNs can handle the original matrix much faster than an MLP with equal or better accuracy and scale to much larger images.

Conversion of images to vectors and vice versa. ( a ) Basic operation of transformation of an image to a vector, forming a sequence representation of the numeric values of pixels. ( b ) Transforming a vector to a matrix, forming an image by encoding numerical values as pixels. During this operation, if the vector size cannot be mapped to m × n because the vector size is smaller than the nearest m × n, then it is padded with zeroes to the nearest m × n.

Just as a simple neural network analyses a 2D image by turning it into a vector, the reciprocal is also true: data in a vector can be converted to a 2D matrix (Fig. 1 b). Vectors converted to such matrices form arbitrary patterns that are incomprehensible to the human eye. A similar technique for such mapping has also been proposed by Kovalerchuk et al. using another algorithm called CPC-R 38 .
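The vector-to-matrix folding of Fig. 1 b can be sketched in a few lines of Python. This is an illustrative sketch rather than the DeepMapper source: the function names are hypothetical, and the choice of a near-square shape when no width is given is an assumption.

```python
import math

def fold_vector(values, n_cols=None):
    """Fold a 1D sequence into a row-major 2D matrix, zero-padding the tail."""
    size = len(values)
    if n_cols is None:
        # Assumption: use the smallest width w with w * w >= size.
        n_cols = math.isqrt(size - 1) + 1 if size > 0 else 1
    n_rows = math.ceil(size / n_cols) if size > 0 else 1
    padded = list(values) + [0.0] * (n_rows * n_cols - size)
    return [padded[r * n_cols:(r + 1) * n_cols] for r in range(n_rows)]

def unfold_matrix(matrix, original_size):
    """Inverse operation: flatten row by row and drop the padding."""
    flat = [v for row in matrix for v in row]
    return flat[:original_size]

# Five values do not fill a 2 x 3 grid, so one zero is appended.
image = fold_vector([0.1, 0.2, 0.3, 0.4, 0.5])
```

Because the mapping is a plain reshape, each pixel position corresponds to exactly one input variable, and `unfold_matrix` recovers the original vector.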

Attribution

An important aspect of any analysis is to be able to identify those variables that are most important and the degree to which they contribute to a given classification. Identifying these variables is particularly challenging in CNNs due to their complex hierarchical architecture, and many non-linear transformations 39 . To address this problem many ‘attribution methods’ have been developed to try to quantify the contribution of each variable (e.g., pixels in images) to the final output for deep neural networks and CNNs 40 .

Saliency maps serve as an intuitive attribution and visualisation tool for CNNs, spotlighting regions in input data that significantly influence the model's predictions 27 . By offering a heatmap representation, these maps illuminate key features that the model deems crucial, thus aiding in demystifying the model's decision-making process. For instance, when analysing an image of a cat, the saliency map would emphasise the cat's distinct features over the background. While their simplicity facilitates understanding even for those less acquainted with deep learning, saliency maps do face challenges, particularly their sensitivity to noise and occasional misalignment with human intuition 41 , 42 , 43 . Nonetheless, they remain a pivotal tool in enhancing model transparency and bridging the interpretability gap between ML models and human comprehension.

Several methods have been proposed for attribution, including Guided Backpropagation 44 , Layer-wise Relevance Propagation 45 , Gradient-weighted Class Activation Mapping 46 , Integrated Gradients 47 , DeepLIFT 48 , and SHAP (SHapley Additive exPlanations) 49 . Many of these methods were developed because it is challenging to identify important input features when there are different images with the same label (e.g., ‘bird’ with many species) presented at different scales, colours, and perspectives. In contrast, most non-image data does not have such variations, as each pixel corresponds to the same feature. For this reason, choosing attributions with minimal processing is sufficient to identify the salient input variables that have the maximal impact on classification.

Here we introduce a new analytical pipeline, DeepMapper, which applies a non-indexed or indexed mapping to the data, representing each data point with one pixel and enabling the classification or clustering of data using 2D CNNs. This simple direct mapping has been tried by others but has not been tested with datasets with sufficiently large amounts of data in various conditions. We use raw data with minimal filtering and no dimension reduction to preserve small perturbations in data that are normally removed, in order to assess their impact.

The pipeline includes conversion of data, separation to training and validation, assessment of training quality, attribution, and accumulation of results in a pipeline. The pipeline is run multiple times until a consensus is reached. The significant variables can then be identified using attribution and exported appropriately.

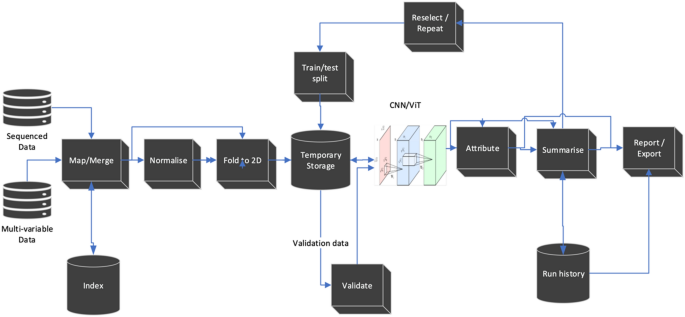

The DeepMapper architecture is shown in Fig. 2 . The complete algorithm of DeepMapper is detailed in the “Methods” section and the Python source code is supplied at GitHub 50 .

DeepMapper architecture. DeepMapper uses sequence or multi-variate data as input. The first step of DeepMapper is to merge and, if required, index input files to prepare them into matrix format. The data are normalised using log normalisation, then folded to a matrix. Folding is performed either directly with the natural order of the data or by using the index that is generated or supplied during the data import. After folding, the data are kept in temporary storage and separated into ‘train’ and ‘test’ sets using the scikit-learn train_test_split function. Training is done using either CNNs that are supplied by the PyTorch libraries, or a custom CNN supplied by the user ( ResNet18 is used by default). Intermediary results are run through attribution algorithms supplied by Captum 51 and saved to the run history log. The run is then repeated, shuffling the training, testing and validation data, until convergence is achieved or until a pre-determined number of iterations has been performed. Results are summarised in a report with exportable tables and graphics. Attribution is applied to true positives and true negatives, and these are translated back to features to be added to reports. Further details can be found directly in the accompanying code 50 .

DeepMapper is developed to implement an approach to process high-dimensional data without resorting to excessive filtering and dimension reduction techniques that eliminate smaller perturbations in data to be able to identify those differences that would otherwise be filtered out. The following algorithm is used to achieve this result:

1. Read and set up the running parameters.
2. Read the data into a tabulated form in the form of observations, features, and outcome (in the form of labels, or if self-supervised, the input itself).
3. If the input data include categorical features, convert these features to numbers and normalise them before feeding them to DeepMapper.
4. Identify features and labels.
5. Do only basic filtering that eliminates observations or features if all of their values are 0 or empty.
6. Normalise features.
7. Transform tabulated data to 2-dimensional matrices as illustrated in Fig. 1 b by applying a vector to matrix transformation.
8. If the analysis is supervised, then transform class labels to output matrices.
9. Begin iteration:
   - Separate the data into training and validation groups.
   - Train on the dataset for the required number of epochs, until reaching satisfactory testing accuracy and loss, or a pre-determined maximum number of iterations.
   - If satisfactory testing results are obtained, then:
     - Perform attributions by associating each result to contributing input pixels using Captum, a Python library for attributions 51 .
     - Accumulate attribution results by collecting the attribution results for each class.
10. If training is satisfactory:
    - Tabulate attribution results by averaging accumulated attributions.
    - Save the model.
11. Report results.
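The basic filtering and normalisation steps above can be sketched as follows. This is a hedged illustration, not the DeepMapper implementation: the function names are hypothetical, and the exact form of log normalisation (here `log(1 + x)` followed by scaling by the global maximum, for non-negative inputs) is an assumption.

```python
import math

def drop_empty_features(rows):
    """Remove columns whose values are all zero or missing (None)."""
    n_features = len(rows[0])
    keep = [j for j in range(n_features)
            if any(row[j] not in (0, None) for row in rows)]
    return [[row[j] for j in keep] for row in rows], keep

def log_normalise(rows):
    """Apply log(1 + x), then rescale so the largest value becomes 1."""
    # Assumption: inputs are non-negative; missing values are treated as 0.
    logged = [[math.log1p(v or 0.0) for v in row] for row in rows]
    peak = max(max(row) for row in logged)
    if peak == 0:
        return logged
    return [[v / peak for v in row] for row in logged]

# Columns 0 and 2 carry no information and are dropped; column 1 is kept.
table = [[0, 3.0, 0], [0, 1.0, None]]
filtered, kept = drop_empty_features(table)
normalised = log_normalise(filtered)
```

Keeping the list of retained column indices (`kept`) matters later, because attribution results expressed as pixel positions have to be translated back to the original feature names.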

The results of DeepMapper analysis can be used in 2 ways:

Supervised: DeepMapper produces a list of features that played a prominent role in the differentiation of classes.

Self-supervised: Highlights the most important features in differentiating observations from each other in a non-linear fashion. The output can be used as an alternative feature selection tool for dimension reduction.

In both modes, any hidden layer can be examined as latent space. A special bottleneck layer can be introduced to reduce dimensions for clustering purposes.

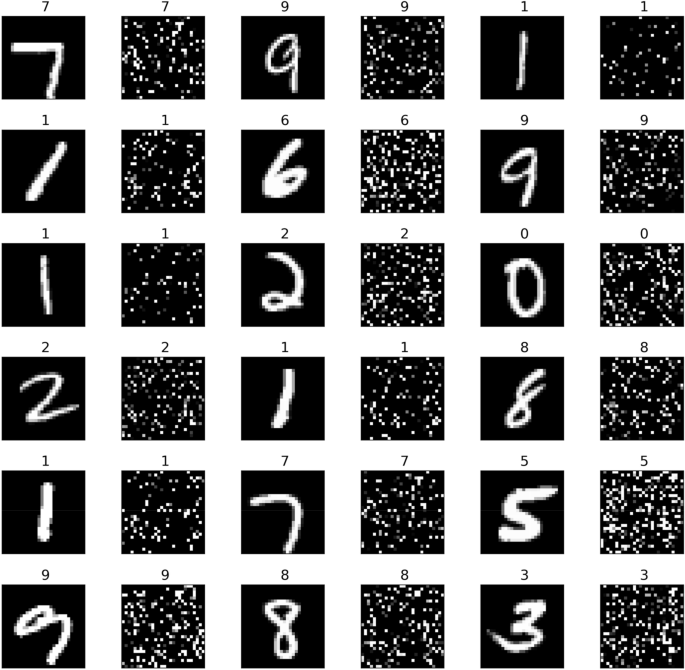

We present a simple example to demonstrate that CNNs can readily interpret data with a well dispersed pattern of pixels, using the MNIST dataset, which is widely used for hand-written image recognition and which humans as well as CNNs can easily recognise and classify based on the obvious spatial relationships between pixels (Fig. 3 ). This dataset is a more complicated problem than datasets such as the Gisette dataset 52 , which was developed to distinguish between the digits 4 and 9. It includes all digits and uses a full randomisation of pixels. It can be regenerated with the script supplied 50 , and changing the seed will generate different patterns.

A sample from MNIST dataset (left side of each image) and its shuffled counterpart (right side).

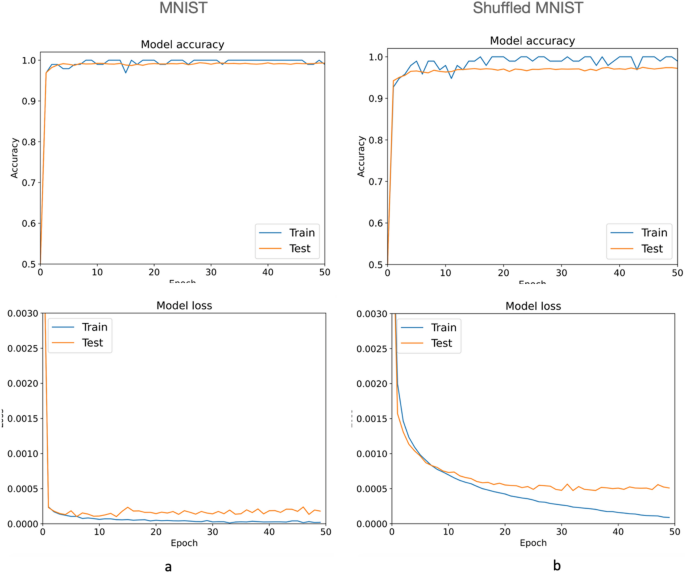

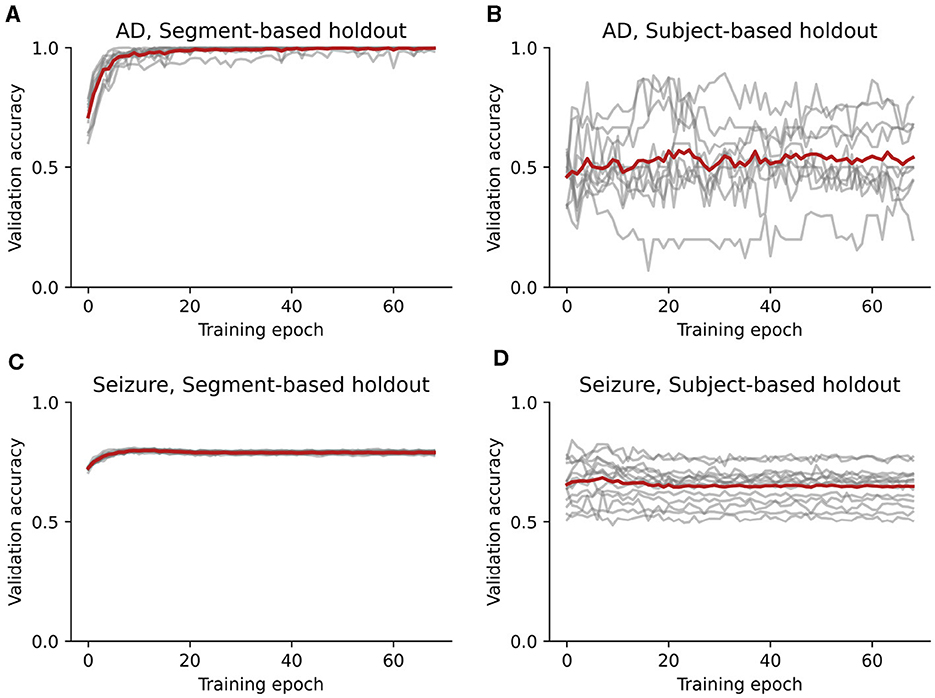

We randomly shuffled the data in Fig. 3 using the same seed 50 to obtain 60,000 training images such as those shown on the right side of each digit, and validated the results with a separate batch of 20,000 images (Fig. 3 ). Although the resulting images are no longer recognizable by eye, a CNN has no difficulty distinguishing and classifying each pattern with ~ 2% testing error compared to the reference data (Fig. 4 ). This result demonstrates that CNNs can accurately recognise global patterns in images without reliance on local relationships between neighbouring pixels. It also confirms the finding that shuffling images only marginally increases training loss 23 and extends it to testing loss (Fig. 4 ).
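The key property of this experiment is that one fixed permutation, derived from a seed, is applied identically to every image. A minimal sketch of that idea is shown below; it is not the authors' script, the function names and the seed value are hypothetical, and a synthetic list stands in for a flattened 28 × 28 MNIST digit.

```python
import random

def make_permutation(n_pixels, seed):
    """Return one fixed random ordering of pixel positions for a given seed."""
    order = list(range(n_pixels))
    random.Random(seed).shuffle(order)
    return order

def shuffle_image(flat_image, order):
    """Rearrange pixel positions; no pixel value is changed."""
    return [flat_image[i] for i in order]

# Assumption: seed 42 is arbitrary; any fixed seed gives a consistent mapping.
order = make_permutation(28 * 28, seed=42)
digit = [float(i % 7) for i in range(28 * 28)]  # stand-in for one flattened digit
scrambled = shuffle_image(digit, order)
```

Because the same `order` is reused for training and testing images, the spatial neighbourhood of each pixel is destroyed while its identity as an input variable is preserved.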

Results of training on the MNIST dataset ( a ) and the shuffled dataset ( b ) with the PyTorch model ResNet18 50 . The charts show that, although training continued for 50 epochs, about 15 epochs would be enough for the shuffled images ( b ), as further training starts to cause overfitting. The decrease in accuracy between normal and shuffled images is about 3%, and this difference cannot be improved by using more sophisticated CNNs with more layers, meaning that shuffling images causes a measurable loss of information, yet the shuffled images still hold patterns recognisable by CNNs.

Testing DeepMapper

Finding slight changes in very few variables in otherwise seemingly random datasets with large numbers of variables is like finding a needle in a haystack. Such differences in data are almost impossible to detect using traditional analysis tools because small variations are usually filtered out before analysis.

We devised a simple test case to determine if DeepMapper can detect one or more variables with small but distinct variations in otherwise randomly generated data. We generated a dataset with 10,000 data items with 18,225 numeric variables as an example of a high-dimensional dataset using PyTorch’s uniform random algorithms 53 . The algorithm sets 18,223 of these variables to random numbers in the range of 0–1, and two of the variables into two distinct groups as seen in Table 1 .

We call this type of dataset a ‘Needle in a haystack’ (NIHS) dataset, where very small amounts of data with small variance are hidden among a set of random variables that is order(s) of magnitude greater than the meaningful components. We provide a script that can generate this and similar datasets with the source code supplied 50 .
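A small-scale sketch of how such a dataset could be generated is given below. It is not the supplied script: the function name is hypothetical, Python's `random` module is used in place of PyTorch, and the value ranges assigned to the informative ("needle") columns are placeholders, since the values of Table 1 are not reproduced in this text.

```python
import random

def make_nihs(n_samples, n_features, needle_columns, seed=0):
    """Generate uniform random rows plus a binary class label per row.

    Only the columns in `needle_columns` depend on the label; the ranges
    used for them are placeholders, not the values from Table 1.
    """
    rng = random.Random(seed)
    rows, labels = [], []
    for _ in range(n_samples):
        label = rng.randint(0, 1)
        # The haystack: every variable is uniform noise in [0, 1).
        row = [rng.random() for _ in range(n_features)]
        for col in needle_columns:
            # Hypothetical ranges: class 0 in [0.0, 0.5), class 1 in [0.5, 1.0).
            row[col] = 0.5 * label + 0.5 * rng.random()
        rows.append(row)
        labels.append(label)
    return rows, labels

# Scaled down from 10,000 x 18,225 so the sketch runs quickly.
rows, labels = make_nihs(n_samples=200, n_features=400,
                         needle_columns=(0, 1), seed=1)
```

With the full dimensions described in the text, the two informative columns would make up roughly 0.01% of the variables.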

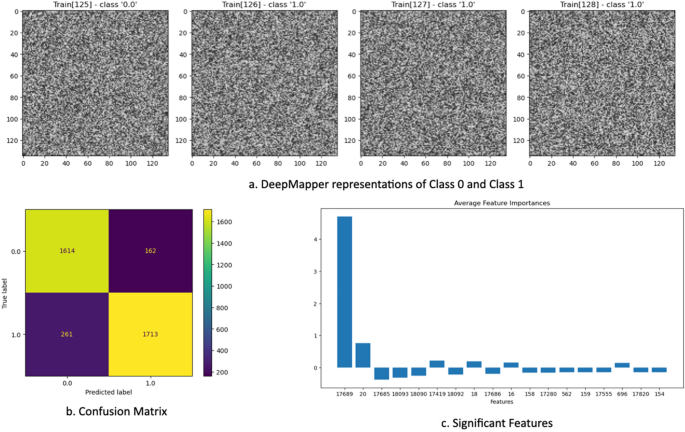

DeepMapper was able to accurately classify the two datasets (Fig. 5 ). Furthermore, using attribution, DeepMapper was also able to determine the two datapoints that have different variances in the two classes. Note that DeepMapper may not always find all the changes in the first attempt, as neural network initialisation of weights is a stochastic process. However, DeepMapper overcomes this matter via multiple iterations to establish acceptable training and testing accuracies as described in the Methods.

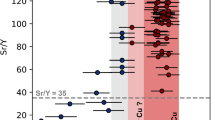

In this demonstration of analysis of high dimensional data with very small perturbations, DeepMapper can find these small variations in a few (in this example two) variables out of very large number of random variables (here 18,225). ( a ) DeepMapper representations of each record. ( b ) The result of the test run of the classification with unseen data (3750 elements). ( c ) The first and second variables in the graph are measurably higher than the other variables.

Comparison of DeepMapper with DeepInsight

DeepInsight 54 is the most general approach published to date for converting non-image data into image-like structures, with the claim that these processed structures allow CNNs to capture complex patterns and features in the data. DeepInsight offers an algorithm to create images that have similar features collated into a “well organised image form”, or by applying one of several dimensionality reduction algorithms (e.g., t-SNE, PCA or KPCA) 54 . However, these algorithms add computational complexity, potentially eliminate valuable information, limit the abilities of CNNs to find small perturbations, and make it more difficult to use attribution to determine most notable features impacting analysis as multiple features may overlap in the transformed image. In contrast DeepMapper uses a direct mapping mechanism where each feature corresponds to one pixel.

To identify important input variables, DeepInsight authors later developed DeepFeature 55 using an elaborate mechanism to associate image areas identified by attribution methods to the input variables. DeepMapper uses a simpler approach as each pixel corresponds to only one variable and can use any of the attribution methods to link results to its input space. While both DeepMapper and DeepInsight follow the general idea that non-image data can be processed with 2D CNNs, DeepMapper uses a much simpler and faster algorithm, while DeepInsight chooses a sophisticated set of algorithms to convert non-image data to images, dramatically increasing computational cost. The DeepInsight conversion process is not designed to utilise GPUs so cannot be accelerated by better hardware, and the obtained images may be larger than the number of data points, also impacting performance.

One of the biggest differences between DeepFeature and DeepMapper is that DeepFeature in many cases selects multiple features during attribution because DeepInsight pixels represent multiple values, whereas each DeepMapper pixel represents one input feature, therefore it can determine differentiating features with pinpoint accuracy at a resolution of 1 pixel per feature.
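The one-pixel-per-feature property means that translating attribution maps back to input variables is simple index arithmetic. The sketch below illustrates this under stated assumptions: the function names and feature names are hypothetical, attribution maps are taken as already computed (for example by an attribution library), and ranking by the mean absolute attribution is an assumed choice.

```python
def pixel_to_feature(row, col, n_cols, feature_names):
    """Map a pixel back to its feature name; padding pixels map to None."""
    index = row * n_cols + col
    return feature_names[index] if index < len(feature_names) else None

def top_features(attribution_maps, feature_names, k=2):
    """Average several attribution maps and return the k highest-scoring features."""
    n_rows, n_cols = len(attribution_maps[0]), len(attribution_maps[0][0])
    scores = []
    for r in range(n_rows):
        for c in range(n_cols):
            name = pixel_to_feature(r, c, n_cols, feature_names)
            if name is None:
                continue  # zero-padding pixel, not a real variable
            # Assumption: rank by mean absolute attribution across runs.
            mean = sum(abs(m[r][c]) for m in attribution_maps) / len(attribution_maps)
            scores.append((mean, name))
    scores.sort(reverse=True)
    return [name for _, name in scores[:k]]

# Five hypothetical features folded into a 2 x 3 image (last pixel is padding).
names = ["g0", "g1", "g2", "g3", "g4"]
maps = [[[0.9, 0.1, 0.0], [0.2, 0.8, 0.0]],
        [[0.7, 0.0, 0.1], [0.1, 0.6, 0.0]]]
ranked = top_features(maps, names)
```

No region-to-variable lookup is needed, because a pixel position never refers to more than one variable.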

The DeepInsight manuscript offers various examples of data to demonstrate its abilities. However, many of the examples use low dimensions (20–4000 features), while today’s complex datasets may regularly require tens of thousands to millions of features, such as in genome analysis in biology and radio-telescope analysis in astronomy. As such, several examples provided by DeepInsight have insufficient dimensions for a sophisticated mechanism such as DeepMapper, which should ideally have 10,000 or more dimensions as required by modern complex datasets. DeepInsight examples include a speech dataset from the TIMIT corpus with 39 dimensions, and the Relathe (text) dataset, which is derived from newsgroup documents, partitioned evenly across different newsgroups, and contains 1427 samples and 4322 dimensions. The ringnorm-DELVE dataset, which is an implementation of Leo Breiman’s ringnorm example, is a 20-dimensional, 2-class classification problem with 7400 samples 54 . Another example, Madelon, is an artificially generated dataset with 2600 samples and 500 dimensions, in which only 5 principal and 20 derived variables contain information. Instead, we used a much more complicated example than Madelon, an NIHS dataset 50 that we used to test DeepMapper in the first place. We attempted to run DeepInsight with NIHS data, but we could not get it to train properly and for this reason we cannot supply a comparison.

The most complex problem published by DeepInsight was the analysis of a public RNA sequencing gene expression dataset from TCGA ( https://cancergenome.nih.gov/ ) containing 6216 samples of 60,483 genes or dimensions, of which DeepInsight used 19,319. We selected this example as the second demonstration of application of DeepMapper to high dimensional data, as well as a benchmark for comparison with DeepInsight .

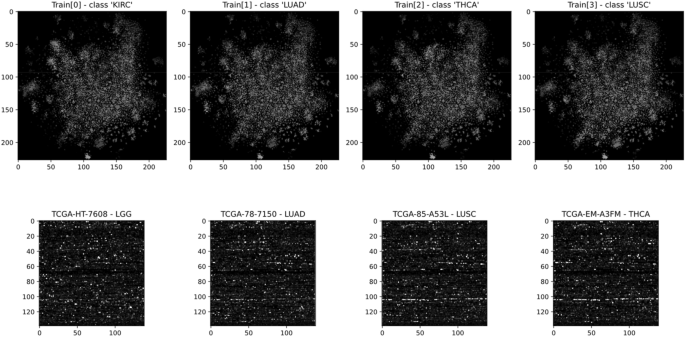

We generated the data using the R script offered by DeepInsight 54 and ran DeepMapper as well as DeepInsight using the generated dataset to compare accuracy and speed. In this test DeepMapper exhibited much improved processing speed with near identical accuracy (Table 2 , Fig. 6 ).

Analysis of TCGA data by DeepInsight vs DeepMapper: The image on the top was generated by DeepInsight using its default values and a t-SNE transformer supplied by DeepInsight . The image at the bottom was generated by DeepMapper. Image conversion and training speeds and the analysis results can be found in Table 2 .

CNNs are fundamentally sophisticated pattern matchers that can establish intricate mappings between input features and output representations 6 . They excel at transforming various inputs into outputs, including identifying classes or bounding boxes, through a series of operations involving convolution, pooling, and activation functions 7 , 56 .

Even though CNNs are in the centre of many of today’s revolutionary AI systems from self-driving cars to generative AI systems such as Dall-E-2 , MidJourney and Stable Diffusion , they are still not well understood nor efficiently utilised, and their usage beyond image analysis has been limited.

While CNNs used in image analysis are constrained historically and practically to a 224 × 224 matrix or a similar fixed-size input, this limitation arises for pre-trained models. When CNNs have not been pre-trained, one can select a much wider variety of sizes as the input shape, depending on the CNN architecture. Some CNNs, such as ResNet18, are more flexible in their input size because they are implemented with adaptive pooling layers 57 . This provides flexibility to choose optimal sizes for the task in hand for non-image applications, as most non-image applications will not use pre-trained CNNs.
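The reason adaptive pooling relaxes the input-size constraint is that it fixes the output size and lets the pooling windows stretch to fit whatever input arrives. The following pure-Python sketch illustrates the idea for average pooling; the function names are hypothetical, and the floor/ceiling rule for window edges is a common convention adopted here as an assumption rather than a description of any particular library's internals.

```python
def adaptive_avg_pool_1d(values, out_size):
    """Average `values` into `out_size` bins whose edges adapt to the input length."""
    n = len(values)
    pooled = []
    for i in range(out_size):
        start = (i * n) // out_size                      # floor of i * n / out_size
        end = ((i + 1) * n + out_size - 1) // out_size   # ceiling of (i + 1) * n / out_size
        window = values[start:end]
        pooled.append(sum(window) / len(window))
    return pooled

def adaptive_avg_pool_2d(matrix, out_rows, out_cols):
    """Pool along columns, then along rows, giving an out_rows x out_cols result."""
    row_pooled = [adaptive_avg_pool_1d(row, out_cols) for row in matrix]
    columns = list(zip(*row_pooled))
    col_pooled = [adaptive_avg_pool_1d(list(col), out_rows) for col in columns]
    return [list(r) for r in zip(*col_pooled)]

# Two differently sized inputs are reduced to the same 2 x 2 shape.
small = [[float(r * 4 + c + 1) for c in range(4)] for r in range(4)]
large = [[float(r + c) for c in range(9)] for r in range(6)]
pooled_small = adaptive_avg_pool_2d(small, 2, 2)
pooled_large = adaptive_avg_pool_2d(large, 2, 2)
```

Since the layers after the pooling step always see the same shape, the folded image can be sized to the number of features rather than to a fixed input size.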

Here we have demonstrated uses of CNNs that are outside the norm. There is a need for analysis of complex data with many thousands of features that are not primarily images. There is also a lack of tools that offer minimal conversion of non-image data to image-like formats that then can easily be processed with CNNs in classification and clustering tasks. As a lot of this data is coming from complex systems that have a lot of features, DeepMapper offers a way of investigating such data in ways that may not be possible with traditional approaches.

Although DeepMapper currently uses a CNN as its AI component, alternative analytic strategies can easily be substituted in lieu of the CNN with minimal changes, such as Vision Transformers 18 or RetNets 21 , which have great potential for this application. While Transformers and RetNets have input size limitations for inference in terms of the number of tokens, Vision Transformers can handle much larger inputs by dividing images into segments that incorporate multiple pixels 18 . This type of approach would be applicable to both Transformers and RetNets, and to future architectures. DeepMapper can leverage these newer architectures, and others, in the future 57 .

Data availability

DeepMapper is released as an open source tool on GitHub https://github.com/tansel/deepmapper . Data that is not available from GitHub because of size constraints can be requested from the authors.

Taylor, P. Volume of data/information created, captured, copied, and consumed worldwide from 2010 to 2020, with forecasts from 2021 to 2025. https://www.statista.com/statistics/871513/worldwide-data-created/ (2023).

Ghys, É. The butterfly effect. in The Proceedings of the 12th International Congress on Mathematical Education: Intellectual and attitudinal challenges, pp. 19–39 (Springer). (2015).

Jolliffe, I. T. Mathematical and statistical properties of sample principal components. Principal Component Analysis , pp. 29–61 (Springer). https://doi.org/10.1007/0-387-22440-8_3 (2002).

Landauer, R. The noise is the signal. Nature 392 , 658–659. https://doi.org/10.1038/33551 (1998).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press). http://www.deeplearningbook.org (2016).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521 , 436–444. https://doi.org/10.1038/nature14539 (2015).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Commun. ACM 60 , 84–90. https://doi.org/10.1145/3065386 (2017).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9 , 1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735 (1997).

Goodfellow, I. et al. Generative adversarial nets. Commun. ACM 63 , 139–144. https://doi.org/10.1145/3422622 (2020).

Vaswani, A. et al. Attention is all you need. NIPS'17: Proceedings of the 31st International Conference on Neural Information Processing Systems , pp. 6000–6010. https://doi.org/10.5555/3295222.3295349 (2017).

Barrio, R. et al. Deep learning for chaos detection. Chaos 33 , 073146. https://doi.org/10.1063/5.0143876 (2023).

Levin, E. A recurrent neural network: limitations and training. Neural Netw. 3 , 641–650. https://doi.org/10.1016/0893-6080(90)90054-O (1990).

LeCun, Y. & Bengio, Y. Convolutional networks for images, speech, and time series. in The handbook of brain theory and neural networks, pp. 255–258. https://doi.org/10.5555/303568.303704 (MIT Press, 1998).

Wu, Y., Yang, F., Liu, Y., Zha, X. & Yuan, S. A comparison of 1-D and 2-D deep convolutional neural networks in ECG classification. arXiv preprint arXiv:1810.07088 . https://doi.org/10.48550/arXiv.1810.07088 (2018).

Hu, J. et al. A multichannel 2D convolutional neural network model for task-evoked fMRI data classification. Comput. Intell. Neurosci. 2019 , 5065214. https://doi.org/10.1155/2019/5065214 (2019).

Zhang, S. et al. A deep learning framework for modeling structural features of RNA-binding protein targets. Nucleic Acids Res. 44 , e32. https://doi.org/10.1093/nar/gkv1025 (2016).

Maurício, J., Domingues, I. & Bernardino, J. Comparing vision transformers and convolutional neural networks for image classification: A literature review. Appl. Sci. 13 , 5521. https://doi.org/10.3390/app13095521 (2023).

Dosovitskiy, A. et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 . https://doi.org/10.48550/arXiv.2010.11929 (2020).

Carion, N. et al. End-to-end object detection with transformers. Computer Vision-ECCV 2020 (Springer), pp. 213–229. https://doi.org/10.1007/978-3-030-58452-8_13 (2020).

Lv, W. et al. DETRs beat YOLOs on real-time object detection. arXiv preprint arXiv:2304.08069 . https://doi.org/10.48550/arXiv.2304.08069 (2023).

Sun, Y. et al. Retentive network: A successor to transformer for large language models. arXiv preprint arXiv:2307.08621 . https://doi.org/10.48550/arXiv.2307.08621 (2023).

Zhou, D.-X. Universality of deep convolutional neural networks. Appl. Comput. Harmonic Anal. 48 , 787–794. https://doi.org/10.1016/j.acha.2019.06.004 (2020).

Zhang, C., Bengio, S., Hardt, M., Recht, B. & Vinyals, O. Understanding deep learning (still) requires rethinking generalization. Commun. ACM 64 , 107–115. https://doi.org/10.1145/3446776 (2021).

Ma, W., Papadakis, M., Tsakmalis, A., Cordy, M. & Traon, Y. L. Test selection for deep learning systems. ACM Trans. Softw. Eng. Methodol. 30 , 13. https://doi.org/10.1145/3417330 (2021).

Liu, Z., Michaud, E. J. & Tegmark, M. Omnigrok: grokking beyond algorithmic data. arXiv preprint arXiv:2210.01117 . https://doi.org/10.48550/arXiv.2210.01117 (2022).

Power, A., Burda, Y., Edwards, H., Babuschkin, I. & Misra, V. Grokking: generalization beyond overfitting on small algorithmic datasets. arXiv preprint arXiv:2201.02177 . https://doi.org/10.48550/arXiv.2201.02177 (2022).

Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034 . https://doi.org/10.48550/arXiv.1312.6034 (2013).

Kim, Y. Convolutional neural networks for sentence classification. arXiv preprint arXiv:1408.5882 . https://doi.org/10.48550/arXiv.1408.5882 (2014).

Abdel-Hamid, O. et al. Convolutional neural networks for speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 22 , 1533–1545. https://doi.org/10.1109/TASLP.2014.2339736 (2014).

Hatami, N., Gavet, Y. & Debayle, J. Classification of time-series images using deep convolutional neural networks. in Proceedings Tenth International Conference on Machine Vision (ICMV 2017) 10696 , 106960Y. https://doi.org/10.1117/12.2309486 (2018).

Smith, M. A. et al. Molecular barcoding of native RNAs using nanopore sequencing and deep learning. Genome Res. 30 , 1345–1353. https://doi.org/10.1101/gr.260836.120 (2020).

Emek Soylu, B. et al. Deep-Learning-based approaches for semantic segmentation of natural scene images: A review. Electronics 12 , 2730. https://doi.org/10.3390/electronics12122730 (2023).

Hosseini, H., Xiao, B., Jaiswal, M. & Poovendran, R. On the limitation of Convolutional Neural Networks in recognizing negative images. in 16th IEEE International Conference on Machine Learning and Applications, pp. 352–358. https://ieeexplore.ieee.org/document/8260656 (2017).

Montserrat, D. M., Lin, Q., Allebach, J. & Delp, E. J. Training object detection and recognition CNN models using data augmentation. Electron. Imaging 2017 , 27–36. https://doi.org/10.2352/ISSN.2470-1173.2017.10.IMAWM-163 (2017).

Cubuk, E. D., Zoph, B., Mane, D., Vasudevan, V. & Le, Q. V. Autoaugment: learning augmentation policies from data. arXiv preprint arXiv:1805.09501 . https://doi.org/10.48550/arXiv.1805.09501 (2018).

Hataya, R., Zdenek, J., Yoshizoe, K. & Nakayama, H. Faster AutoAugment: Learning augmentation strategies using backpropagation, in Computer Vision–ECCV 2020: 16th European Conference, Proceedings, Part XXV, pp. 1–16 (Springer). https://doi.org/10.1007/978-3-030-58595-2_1 (2020).

Xiao, K., Engstrom, L., Ilyas, A. & Madry, A. Noise or signal: the role of image backgrounds in object recognition. arXiv preprint arXiv:2006.09994 . https://doi.org/10.48550/arXiv.2006.09994 (2020).

Kovalerchuk, B., Kalla, D. C. & Agarwal, B., Deep learning image recognition for non-images, in Integrating artificial intelligence and visualization for visual knowledge discovery (eds. Kovalerchuk, B., et al. ) pp. 63–100 (Springer). https://doi.org/10.1007/978-3-030-93119-3_3 (2022).

Samek, W., Binder, A., Montavon, G., Lapuschkin, S. & Muller, K. R. Evaluating the visualization of what a deep neural network has learned. IEEE Trans. Neural Netw. Learn. Syst. 28 , 2660–2673. https://doi.org/10.1109/tnnls.2016.2599820 (2017).

Montavon, G., Samek, W. & Müller, K.-R. Methods for interpreting and understanding deep neural networks. Digital Signal Process. 73 , 1–15. https://doi.org/10.1016/j.dsp.2017.10.011 (2018).

De Cesarei, A., Cavicchi, S., Cristadoro, G. & Lippi, M. Do humans and deep convolutional neural networks use visual information similarly for the categorization of natural scenes?. Cognit. Sci. 45 , e13009. https://doi.org/10.1111/cogs.13009 (2021).

Kindermans, P.-J. et al. The (un) reliability of saliency methods, in Explainable AI: Interpreting, explaining and visualizing deep learning. Lecture Notes in Computer Science 11700 , pp. 267–280 (Springer). https://doi.org/10.1007/978-3-030-28954-6_14 (2019).

Zeiler, M. D. & Fergus, R. Visualizing and understanding convolutional networks. Computer Vision—ECCV 2014, pp. 818–833 (Fleet, D., Pajdla T., Schiele, B., & Tuytelaars, T., eds) (Springer). https://doi.org/10.1007/978-3-319-10590-1_53 (2014).

Springenberg, J. T., Dosovitskiy, A., Brox, T. & Riedmiller, M. Striving for simplicity: The all convolutional net. arXiv preprint arXiv:1412.6806 . https://doi.org/10.48550/arXiv.1412.6806 (2014).

Binder, A., Montavon, G., Lapuschkin, S., Müller, K.-R. & Samek, W. Layer-wise relevance propagation for neural networks with local renormalization layers, in Artificial Neural Networks and Machine Learning–ICANN 2016: Proceedings 25th International Conference on Artificial Neural Networks, pp. 63–71 (Springer). https://doi.org/10.1007/978-3-319-44781-0_8 (2016).

Selvaraju, R. R. et al. Grad-cam: visual explanations from deep networks via gradient-based localization. Proceedings of the 2017 IEEE international conference on computer vision, pp. 618–626. https://ieeexplore.ieee.org/document/8237336 (2017).

Sundararajan, M., Taly, A. & Yan, Q. Axiomatic attribution for deep networks. in Proceedings of the 34th International Conference on Machine Learning 70 , 3319–3328. https://doi.org/10.5555/3305890.3306024 (2017).

Shrikumar, A., Greenside, P. & Kundaje, A. Learning important features through propagating activation differences. in Proceedings of the 34th International Conference on Machine Learning 70 , 3145–3153. https://doi.org/10.5555/3305890.3306006 (2017).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. in Proceedings of the 31st International Conference on Neural Information Processing Systems, pp. 4768–4777. https://doi.org/10.5555/3295222.3295230 (2017).

Ersavas, T. Deepmapper. https://github.com/tansel/deepmapper (2023).

Kokhlikyan, N. et al. Captum: A unified and generic model interpretability library for pytorch. arXiv preprint arXiv:2009.07896 . https://doi.org/10.48550/arXiv.2009.07896 (2020).

Guyon, I., Gunn, S., Ben-Hur, A. & Dror, G. Gisette. UCI Machine Learning Repository . https://archive.ics.uci.edu/dataset/170/gisette (2008).

PyTorch, torch.rand. https://pytorch.org/docs/stable/generated/torch.rand.html (2023).

Sharma, A., Vans, E., Shigemizu, D., Boroevich, K. A. & Tsunoda, T. DeepInsight: A methodology to transform a non-image data to an image for convolution neural network architecture. Sci. Rep. 9 , 11399. https://doi.org/10.1038/s41598-019-47765-6 (2019).

Sharma, A., Lysenko, A., Boroevich, K. A., Vans, E. & Tsunoda, T. DeepFeature: feature selection in nonimage data using convolutional neural network. Brief. Bioinform. 22 , bbab297. https://doi.org/10.1093/bib/bbab297 (2021).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 . https://doi.org/10.48550/arXiv.1409.1556 (2014).

PyTorch, AdaptiveAvgPool2d. https://pytorch.org/docs/stable/generated/torch.nn.AdaptiveAvgPool2d.html (2023).

Acknowledgements

We thank Murat Karaorman, Mitchell Cummins, and Fatemeh Vafaee for helpful advice and comments on the manuscript. This research is supported by an Australian Government Research Training Program Scholarships RSAI8000 and RSAP1000 to T.E., a Fonds de Recherche du Quebec Santé Junior 1 Award 284217 to M.A.S., and UNSW SHARP Grant RG193211 to J.S.M.

Author information

Authors and affiliations.

School of Biotechnology and Biomolecular Sciences, UNSW Sydney, Sydney, NSW, 2052, Australia

Tansel Ersavas, Martin A. Smith & John S. Mattick

Department of Biochemistry and Molecular Medicine, Faculty of Medicine, Université de Montréal, Montréal, QC, H3C 3J7, Canada

Martin A. Smith

CHU Sainte-Justine Research Centre, Montreal, Canada

UNSW RNA Institute, UNSW Sydney, Australia

Contributions

T.E. developed the methods, implemented DeepMapper and produced the first draft of the paper. J.S.M. provided advice, structured the paper, and edited it for improved readability and clarity. M.A.S. provided advice and edited the paper.

Corresponding authors

Correspondence to Tansel Ersavas or John S. Mattick .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Ersavas, T., Smith, M.A. & Mattick, J.S. Novel applications of Convolutional Neural Networks in the age of Transformers. Sci Rep 14 , 10000 (2024). https://doi.org/10.1038/s41598-024-60709-z

Received : 16 January 2024

Accepted : 26 April 2024

Published : 01 May 2024

DOI : https://doi.org/10.1038/s41598-024-60709-z

- Open access

- Published: 16 January 2024

A review of graph neural networks: concepts, architectures, techniques, challenges, datasets, applications, and future directions

- Bharti Khemani 1 ,

- Shruti Patil 2 ,

- Ketan Kotecha 2 &

- Sudeep Tanwar 3

Journal of Big Data volume 11 , Article number: 18 ( 2024 ) Cite this article

Deep learning has seen significant growth recently and is now applied to a wide range of conventional use cases, including graphs. Graph data provides relational information between elements and is a standard data format for various machine learning and deep learning tasks. Models that can learn from such inputs are essential for working with graph data effectively. This paper identifies nodes and edges within specific applications, such as text, entities, and relations, to create graph structures. Different applications may require various graph neural network (GNN) models. GNNs facilitate the exchange of information between nodes in a graph, enabling them to understand dependencies within the nodes and edges. The paper delves into specific GNN models like graph convolution networks (GCNs), GraphSAGE, and graph attention networks (GATs), which are widely used in various applications today. It also discusses the message-passing mechanism employed by GNN models and examines the strengths and limitations of these models in different domains. Furthermore, the paper explores the diverse applications of GNNs, the datasets commonly used with them, and the Python libraries that support GNN models. It offers an extensive overview of the landscape of GNN research and its practical implementations.

Introduction

Graph Neural Networks (GNNs) have emerged as a transformative paradigm in machine learning and artificial intelligence. The ubiquitous presence of interconnected data in various domains, from social networks and biology to recommendation systems and cybersecurity, has fueled the rapid evolution of GNNs. These networks have displayed remarkable capabilities in modeling and understanding complex relationships, making them pivotal in solving real-world problems that traditional machine-learning models struggle to address. GNNs’ unique ability to capture intricate structural information inherent in graph-structured data is significant. This information often manifests as dependencies, connections, and contextual relationships essential for making informed predictions and decisions. Consequently, GNNs have been adopted and extended across various applications, redefining what is possible in machine learning.

In this comprehensive review, we embark on a journey through the multifaceted landscape of Graph Neural Networks, encompassing an array of critical aspects. Our study is motivated by the ever-increasing literature and diverse perspectives within the field. We aim to provide researchers, practitioners, and students with a holistic understanding of GNNs, serving as an invaluable resource to navigate the intricacies of this dynamic field. The scope of this review is extensive, covering fundamental concepts that underlie GNNs, various architectural designs, techniques for training and inference, prevalent challenges and limitations, the diversity of datasets utilized, and practical applications spanning a myriad of domains. Furthermore, we delve into the intriguing future directions that GNN research will likely explore, shedding light on the exciting possibilities.

In recent years, deep learning (DL) has been called the gold standard in machine learning (ML). It has also steadily evolved into the most widely used computational technique in ML, producing excellent results on various challenging cognitive tasks, sometimes even matching or outperforming human ability. One benefit of DL is its capacity to learn from enormous amounts of data [ 1 ]. GNN variations such as graph convolutional networks (GCNs), graph attention networks (GATs), and GraphSAGE have shown groundbreaking performance on various deep learning tasks in recent years [ 2 ].

A graph is a data structure that consists of nodes (also called vertices) and edges. Mathematically, it is defined as G = (V, E), where V denotes the set of nodes and E denotes the set of edges. Edges in a graph can be directed or undirected, depending on whether directional dependencies exist between nodes. A graph can represent various kinds of data, such as social networks, knowledge graphs, and protein–protein interaction networks. Graphs are non-Euclidean spaces, meaning that the distance between two nodes in a graph is not necessarily equal to the distance between their coordinates in a Euclidean space. This makes applying traditional neural networks to graph data difficult, as they are typically designed for Euclidean data.
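As a concrete illustration of the definition G = (V, E), the following minimal sketch stores a small graph as an adjacency mapping. The helper name `build_adjacency`, the node labels, and the edge list are assumptions made for this example and do not come from any particular GNN library.

```python
from typing import Dict, Hashable, Iterable, Set, Tuple

Edge = Tuple[Hashable, Hashable]


def build_adjacency(
    nodes: Iterable[Hashable],
    edges: Iterable[Edge],
    directed: bool = False,
) -> Dict[Hashable, Set[Hashable]]:
    """Map each node in V to the set of nodes it has an edge to."""
    adjacency: Dict[Hashable, Set[Hashable]] = {node: set() for node in nodes}
    for source, target in edges:
        adjacency[source].add(target)
        if not directed:
            # An undirected edge behaves like two directed edges.
            adjacency[target].add(source)
    return adjacency


V = ["A", "B", "C", "D"]                    # hypothetical node set
E = [("A", "B"), ("A", "C"), ("C", "D")]    # hypothetical edge set

undirected = build_adjacency(V, E)
directed = build_adjacency(V, E, directed=True)
print(undirected["C"])  # neighbours of C in the undirected graph
print(directed["C"])    # successors of C in the directed graph
```

Note that nodes carry no coordinates here: the only structure is which nodes are connected, which is the sense in which graph data is non-Euclidean.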

Graph neural networks (GNNs) are a type of deep learning model that can be used to learn from graph data. GNNs use a message-passing mechanism to aggregate information from neighboring nodes, allowing them to capture the complex relationships in graphs. GNNs are effective for various tasks, including node classification, link prediction, and clustering.

Organization of paper

The paper is organized as follows:

The primary focus of this research is to comprehensively examine Concepts, Architectures, Techniques, Challenges, Datasets, Applications, and Future Directions within the realm of Graph Neural Networks.

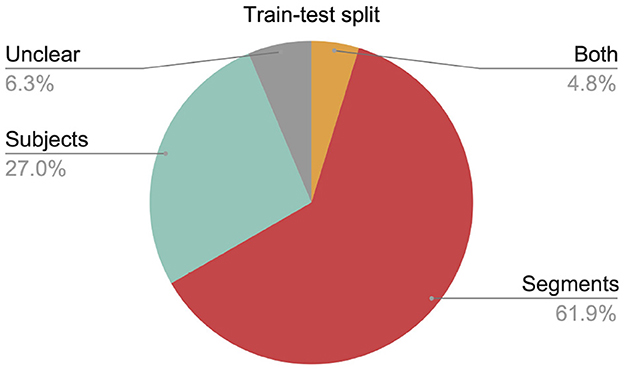

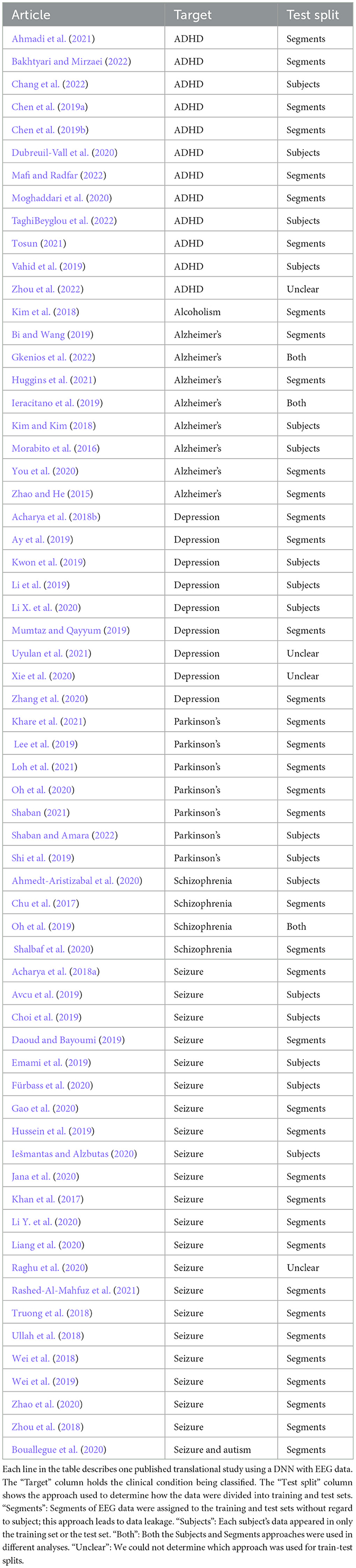

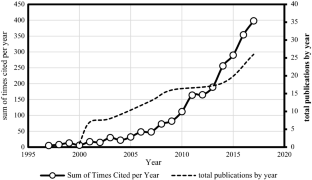

The paper delves into the Evolution and Motivation behind the development of Graph Neural Networks, including an analysis of the growth of publication counts over the years.

It provides an in-depth exploration of the Message Passing Mechanism used in Graph Neural Networks.

The study presents a concise summary of GNN learning styles and GNN models, complemented by an extensive literature review.

The paper thoroughly analyzes the Advantages and Limitations of GNN models when applied to various domains.

It offers a comprehensive overview of GNN applications, the datasets commonly used with GNNs, and the array of Python libraries that support GNN models.

In addition, the research identifies and addresses specific research gaps, outlining potential future directions in the field.

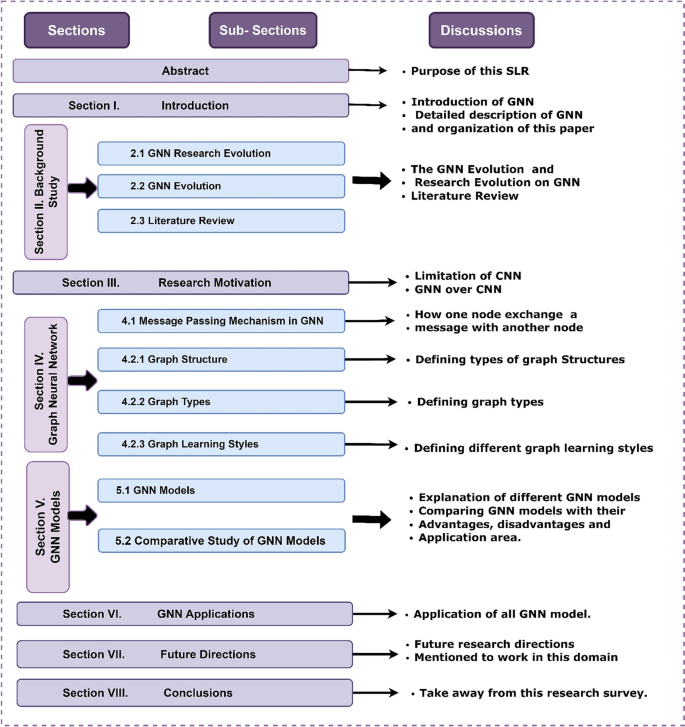

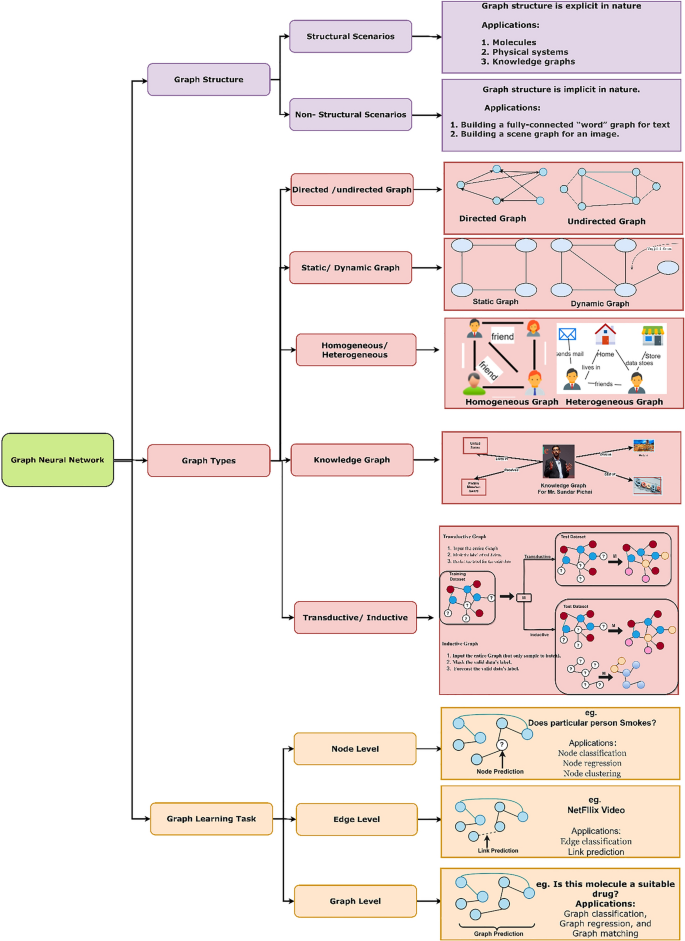

" Introduction " section describes the Introduction to GNN. " Background study " section provides background details in terms of the Evolution of GNN. " Research motivation " section describes the research motivation behind GNN. Section IV describes the GNN message-passing mechanism and the detailed description of GNN with its Structure, Learning Styles, and Types of tasks. " GNN Models and Comparative Analysis of GNN Models " section describes the GNN models with their literature review details and comparative study of different GNN models. " Graph Neural Network Applications " section describes the application of GNN. And finally, future direction and conclusions are defined in " Future Directions of Graph Neural Network " and " Conclusions " sections, respectively. Figure 1 gives the overall structure of the paper.

The overall structure of the paper

Background study

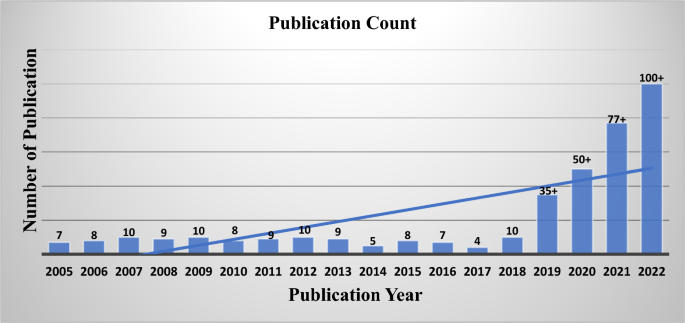

As shown in Fig. 2 below, research on GNNs started in 2005, and over the past 5 years the area has been studied in great detail. Graph neural networks are now used by researchers in many fields, such as NLP, computer vision, and healthcare.

Year-wise publication count of GNN (2005–2022)

Graph neural network research evolution

Graph neural networks (GNNs) were first proposed in 2005, but only recently have they begun to gain traction. GNNs were first introduced by Gori [2005] and Scarselli [2004, 2009]. A node is naturally defined by its attributes and by the nodes connected to it in the graph. A GNN aims to learn a state embedding \(h_v \in \mathbb{R}^{s}\) that encapsulates each node's neighborhood data. The state embedding \(h_v\), an s-dimensional vector for node v, can be used to produce an output \(O_v\), such as the distribution of the predicted node label [ 30 ]. Thomas Kipf and Max Welling introduced the graph convolutional network (GCN) in 2017. A GCN layer defines a first-order approximation of a localized spectral filter on graphs. GCNs can be thought of as convolutional neural networks that have been extended to handle graph-structured data.
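For reference, the layer-wise propagation rule commonly given for the GCN of Kipf and Welling can be written as follows. The symbols are not defined in this review and are introduced here as assumptions: \(A\) is the adjacency matrix, \(I_N\) the identity matrix, \(H^{(l)}\) the matrix of node representations at layer \(l\), \(W^{(l)}\) a trainable weight matrix, and \(\sigma\) a nonlinearity such as ReLU.

\[
H^{(l+1)} = \sigma\!\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right),
\qquad \tilde{A} = A + I_N,
\qquad \tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}
\]

Adding \(I_N\) gives every node a self-loop, so a node's own features are kept alongside those of its neighbors, and the symmetric normalization by \(\tilde{D}^{-1/2}\) keeps the scale of the representations comparable across nodes with different degrees.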

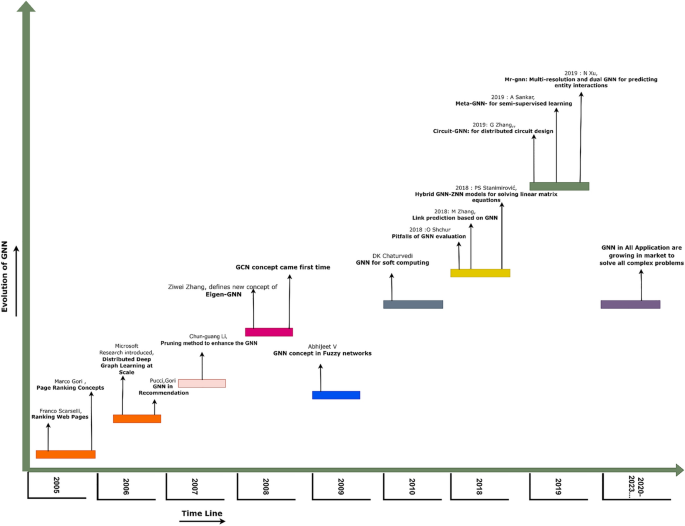

Graph neural network evolution

As shown in Fig. 3 below, research on graph neural networks (GNNs) began in 2005 and is still ongoing. GNNs can define a broader class of graphs that can be used for node-focused tasks, edge-focused tasks, graph-focused tasks, and many other applications. In 2005, Marco Gori introduced the concept of GNNs and defined recursive neural networks extended by GNNs [ 4 ]. Franco Scarselli also explained the concepts for ranking web pages with the help of GNNs in 2005 [ 5 ]. In 2006, Swapnil Gandhi and Anand Padmanabha Iyer of Microsoft Research introduced distributed deep graph learning at scale, which defines a deep graph neural network [ 6 ]. They explained new concepts such as GCN, GAT, etc. [ 1 ]. Pucci and Gori used GNN concepts in the recommendation system.

Graph Neural Network Evolution

In 2007, Chun Guang Li, Jun Guo, and Hong-gang Zhang used a semi-supervised learning concept with GNNs [ 7 ]. They proposed a pruning method to enhance the basic GNN to resolve the problem of choosing the neighborhood scale parameter. In 2008, Ziwei Zhang introduced a new concept of Eigen-GNN [ 8 ], which works well with several GNN models. In 2009, Abhijeet V introduced the GNN concept in fuzzy networks [ 9 ], proposing a granular reflex fuzzy min–max neural network for classification. In 2010, DK Chaturvedi explained the concept of GNN for soft computing techniques [ 10 ]. Also, in 2010, GNNs were widely used in many applications. In 2010, Tanzima Hashem discussed privacy-preserving group nearest neighbor queries [ 11 ]. The first initiative to use GNNs for knowledge graph embedding is R-GCN, which suggests a relation-specific transformation in the message-passing phases to deal with various relations.

Similarly, from 2011 to 2017, authors continued to survey new GNN concepts, and the number of such studies has increased steadily from 2018 onwards. Our paper shows that GNN models such as GCN, GAT, RGCN, and so on are helpful [ 12 ].

Literature review

Table 1 describes the literature survey on graph neural networks, including the application area, the dataset used, the model applied, and the performance evaluation. The literature covers the years 2018 to 2023.

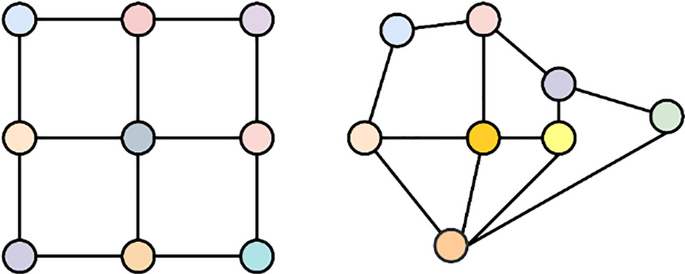

Research motivation

We employ grid data structures for normalization of image inputs, typically using an n*n-sized filter. The result is computed by applying an aggregation or maximum function. This process works effectively due to the inherent fixed structure of images. We position the grid over the image, move the filter across it, and derive the output vector as depicted on the left side of Fig. 4 . In contrast, this approach is unsuitable when working with graphs. Graphs lack a predefined structure for data storage, and there is no inherent knowledge of node-to-neighbor relationships, as illustrated on the right side of Fig. 4 . To overcome this limitation, we focus on graph convolution.
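The grid-based filtering described above can be sketched in a few lines. This is an illustration under stated assumptions rather than a CNN layer: the function name `slide_filter` is hypothetical, the window moves with stride 1 and no padding, and learned filter weights are omitted so that only the sliding and aggregation steps remain.

```python
from typing import Callable, List, Sequence

Grid = List[List[float]]


def slide_filter(
    image: Grid,
    n: int,
    reducer: Callable[[Sequence[float]], float],
) -> Grid:
    """Slide an n x n window over the image and reduce each window to one value."""
    rows, cols = len(image), len(image[0])
    output = []
    for top in range(rows - n + 1):
        out_row = []
        for left in range(cols - n + 1):
            # Every window has the same shape and a fixed ordering of cells.
            window = [image[top + i][left + j] for i in range(n) for j in range(n)]
            out_row.append(reducer(window))
        output.append(out_row)
    return output


image = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
    [7.0, 8.0, 9.0],
]
pooled_max = slide_filter(image, 2, max)  # maximum over each 2x2 window
pooled_sum = slide_filter(image, 2, sum)  # sum aggregation over each 2x2 window
print(pooled_max)
print(pooled_sum)
```

The loop relies on every window containing exactly n*n cells in a fixed order. A node in a graph can have any number of neighbors in no particular order, which is why this scheme does not carry over directly.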

CNN In Euclidean Space (Left), GNN In Euclidean Space (Right)

In the context of GCNs, convolutional operations are adapted to handle graphs’ irregular and non-grid-like structures. These operations typically involve aggregating information from neighboring nodes to update the features of a central node. CNNs are primarily used for grid-like data structures, such as images. They are well-suited for tasks where spatial relationships between neighboring elements are crucial, as in image processing. CNNs use convolutional layers to scan small local receptive fields and learn hierarchical representations. GNNs are designed for graph-structured data, where edges connect entities (nodes). Graphs can represent various relationships, such as social networks, citation networks, or molecular structures. GNNs perform operations that aggregate information from neighboring nodes to update the features of a central node. CNNs excel in processing grid-like data with spatial dependencies; GNNs are designed to handle graph-structured data with complex relationships and dependencies between entities.

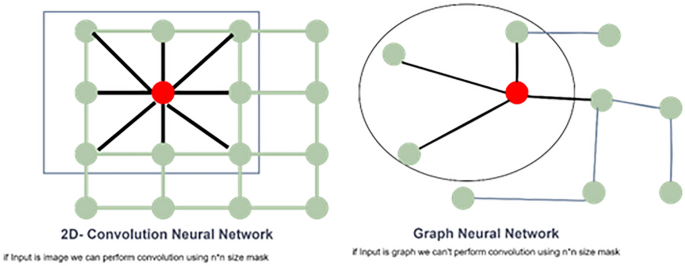

Limitation of CNN over GNN

Graph Neural Networks (GNNs) draw inspiration from Convolutional Neural Networks (CNNs). Before delving into the intricacies of GNNs, it is essential to understand why Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) may not suffice for effectively handling data structured as graphs. As illustrated in Fig. 5 , Convolutional Neural Networks (CNNs) are designed for data that exhibits a grid structure, such as images. Conversely, Recurrent Neural Networks (RNNs) are tailored to sequences, like text.

Convolution can be performed if the input is an image using an n*n mask (Left). Convolution can't be achieved if the input is a graph using an n*n mask. (Right)

Typically, we use arrays for storage when working with text data. Likewise, for image data, matrices are the preferred choice. However, as depicted in Fig. 5 , arrays and matrices fall short when dealing with graph data. In the case of graphs, we require a specialized technique known as Graph Convolution. This approach enables deep neural networks to handle graph-structured data directly, leading to a graph neural network.

Fig. 5 illustrates that we can employ masking techniques and apply filtering operations to transform the data into vector form when we have images. Conversely, traditional masking methods are not applicable when dealing with graph data as input, as shown in the right image.

Graph neural network

Graph Neural Networks, or GNNs, are a class of neural networks tailored for handling data organized in graph structures. Graphs are mathematical representations of nodes connected by edges, making them ideal for modeling relationships and dependencies in complex systems. GNNs have the inherent ability to learn and reason about graph-structured data, enabling diverse applications. In this section, we first explain the message-passing mechanism of GNNs (" Message passing mechanism in graph neural network " section), then describe the structure of graphs, graph types, and graph learning styles (" Description of GNN taxonomy " section).

Message passing mechanism in graph neural network

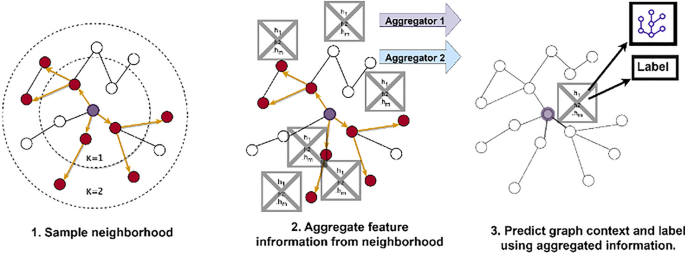

A GNN is an optimizable transformation on all graph properties (nodes, edges, and global context) that preserves graph symmetries (permutation invariances). Because a GNN does not alter the connectivity of the input graph, the output can be described using the same adjacency list and the same number of feature vectors as the input graph. However, the output graph has updated embeddings because the GNN has modified each node, edge, and global-context representation.
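A small sketch can make the permutation-invariance property concrete. It assumes sum aggregation, which the text above does not specify; the function name `sum_neighbors`, the node labels, and the feature values are hypothetical.

```python
from typing import Dict, List


def sum_neighbors(
    node: str,
    adjacency: Dict[str, List[str]],
    features: Dict[str, List[float]],
) -> List[float]:
    """Element-wise sum of the feature vectors of a node's neighbours."""
    total = [0.0] * len(features[node])
    for neighbor in adjacency[node]:
        for i, value in enumerate(features[neighbor]):
            total[i] += value
    return total


features = {
    "A": [1.0, 0.0],
    "B": [0.0, 2.0],
    "C": [3.0, 1.0],
    "D": [0.5, 0.5],
}
# The same neighbourhood of A, listed in two different orders.
adjacency = {"A": ["B", "C", "D"]}
reordered = {"A": ["D", "B", "C"]}

first = sum_neighbors("A", adjacency, features)
second = sum_neighbors("A", reordered, features)
print(first, second)
```

Both calls yield the same vector because the sum does not depend on the order in which neighbors are visited, and the adjacency structure itself is left untouched. Mean and max aggregators share this property; concatenating neighbor features in list order would not.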

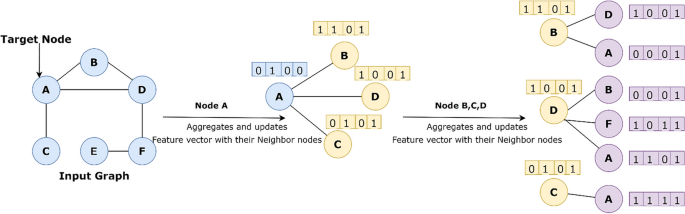

In Fig. 6 , circles are nodes, and empty boxes show aggregation of neighbor/adjacent nodes. The model aggregates messages from A's local graph neighbors (i.e., B, C, and D). In turn, the messages coming from neighbors are based on information aggregated from their respective neighborhoods, and so on. This visualization shows a two-layer version of a message-passing model. Notice that the computation graph of the GNN forms a tree structure by unfolding the neighborhood around the target node [ 17 ]. Graph neural networks (GNNs) are neural models that capture the dependence of graphs via message passing between the nodes of graphs [ 30 ].

How a single node aggregates messages from its adjacent neighbor nodes

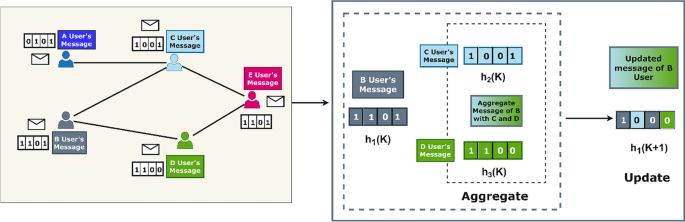

The message-passing mechanism of Graph Neural Networks is shown in Fig. 7 . Here, we take an input graph together with a set of node features \(X \in \mathbb{R}^{d \times |V|}\) and use this information to produce node embeddings \(z_u\). We will also review how the GNN framework can embed subgraphs and whole graphs.

Message passing mechanism in GNN

At each iteration, each node collects information from its local neighborhood, so as the iterations progress, each node embedding contains information from increasingly distant parts of the graph. After the first iteration (k = 1), each node embedding contains information from its 1-hop neighborhood, that is, the nodes that can be reached by a path of length 1 in the graph [ 31 ]. After the second iteration (k = 2), each node embedding contains information from its 2-hop neighborhood; in general, after k iterations, each node embedding contains information from its k-hop neighborhood. The “information” carried by these messages has two main parts: structural information about the graph (e.g., the degree of nodes) and feature-based information.
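The k-hop neighborhood referred to above can be computed directly with a breadth-first expansion. The sketch below is illustrative only; the function name `k_hop_neighborhood` and the five-node path graph are assumptions made for the example.

```python
from typing import Dict, List, Set


def k_hop_neighborhood(adjacency: Dict[str, List[str]], start: str, k: int) -> Set[str]:
    """Return every node reachable from `start` by a path of length <= k."""
    reached = {start}
    frontier = {start}
    for _ in range(k):
        # Expand by one hop, keeping only nodes not seen before.
        frontier = {nbr for node in frontier for nbr in adjacency[node]} - reached
        reached |= frontier
    return reached


# Hypothetical path graph: A - B - C - D - E
path_graph = {
    "A": ["B"],
    "B": ["A", "C"],
    "C": ["B", "D"],
    "D": ["C", "E"],
    "E": ["D"],
}
one_hop = k_hop_neighborhood(path_graph, "A", 1)
two_hop = k_hop_neighborhood(path_graph, "A", 2)
print(sorted(one_hop), sorted(two_hop))
```

In this example, node A needs four message-passing iterations before its embedding can depend on node E, since E lies four hops away.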

In the message-passing mechanism of a graph neural network, each node stores its message as a feature vector, and at each iteration that feature vector is updated with information from the node's neighbors [ 1 ]. For example, when the grey node is connected to the blue node, the features of both nodes are aggregated to form a new feature vector whose values include the new message.

In Equations 4.1 and 4.2, h denotes the message, u represents the node, and k indicates the iteration number. AGGREGATE and UPDATE are arbitrary differentiable functions (i.e., neural networks), and \({m}_{N(u)}\) is the “message” aggregated from u's graph neighborhood N(u). We use superscripts to distinguish the embeddings and functions at different message-passing iterations. At each iteration k of the GNN, the AGGREGATE function takes as input the set of embeddings of the nodes in u's graph neighborhood N(u) and generates a message \({m}_{N(u)}^{k}\) based on this aggregated neighborhood information. The UPDATE function then combines the message \({m}_{N(u)}^{k}\) with the previous message \({h}_{u}^{(k-1)}\) of node u to generate the updated message \({h}_{u}^{k}\) .
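Written out, one form of the two update equations that is consistent with the description above is:

\[
{m}_{N(u)}^{k} = \mathrm{AGGREGATE}^{(k)}\left(\left\{ {h}_{v}^{(k-1)} : v \in N(u) \right\}\right)
\]

\[
{h}_{u}^{k} = \mathrm{UPDATE}^{(k)}\left({h}_{u}^{(k-1)},\ {m}_{N(u)}^{k}\right)
\]

The following minimal sketch instantiates these two steps. The concrete choices are assumptions made for illustration: AGGREGATE is an element-wise sum, UPDATE is a plain average of the previous embedding and the message (in a real GNN both would be learned), and the three-node path graph with one-hot features is hypothetical.

```python
from typing import Dict, List

Vector = List[float]


def aggregate(neighbor_embeddings: List[Vector], dim: int) -> Vector:
    """AGGREGATE (assumed): element-wise sum of the neighbours' embeddings."""
    message = [0.0] * dim
    for embedding in neighbor_embeddings:
        for i, value in enumerate(embedding):
            message[i] += value
    return message


def update(previous: Vector, message: Vector) -> Vector:
    """UPDATE (assumed, not learned): average previous embedding and message."""
    return [0.5 * (p + m) for p, m in zip(previous, message)]


def message_passing_round(
    adjacency: Dict[str, List[str]],
    h: Dict[str, Vector],
) -> Dict[str, Vector]:
    """Compute h^k for every node from the embeddings h^(k-1)."""
    new_h = {}
    for u, neighbors in adjacency.items():
        m = aggregate([h[v] for v in neighbors], len(h[u]))
        new_h[u] = update(h[u], m)
    return new_h


# Hypothetical path graph A - B - C with one-hot initial features.
adjacency = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
h0 = {"A": [1.0, 0.0, 0.0], "B": [0.0, 1.0, 0.0], "C": [0.0, 0.0, 1.0]}

h1 = message_passing_round(adjacency, h0)
h2 = message_passing_round(adjacency, h1)
print(h1["A"], h2["A"])
```

After one round, the third component of A's embedding is still zero because C is two hops away; after the second round it becomes nonzero, showing information from the 2-hop neighborhood arriving at A.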

Description of GNN taxonomy

As Fig. 8 shows, we divide our GNN taxonomy into three parts [ 30 ].

Graph Neural Network Taxonomy

1. Graph Structures 2. Graph Types 3. Graph Learning Tasks

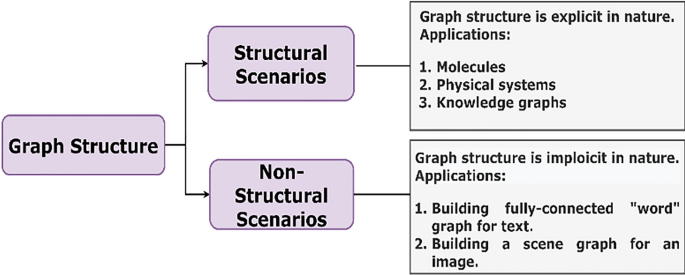

Graph structure

Graph structures typically fall into two scenarios, structural and non-structural, as shown in Fig. 9 . In structural scenarios, the graph structure is stated explicitly, as in applications involving molecular and physical systems, knowledge graphs, and similar objects.

Graph Structure

In non-structural scenarios, graphs are implicit. As a result, we must first construct the graph from the task at hand: for example, a fully connected word graph for text, or a scene graph for images.

Graph types

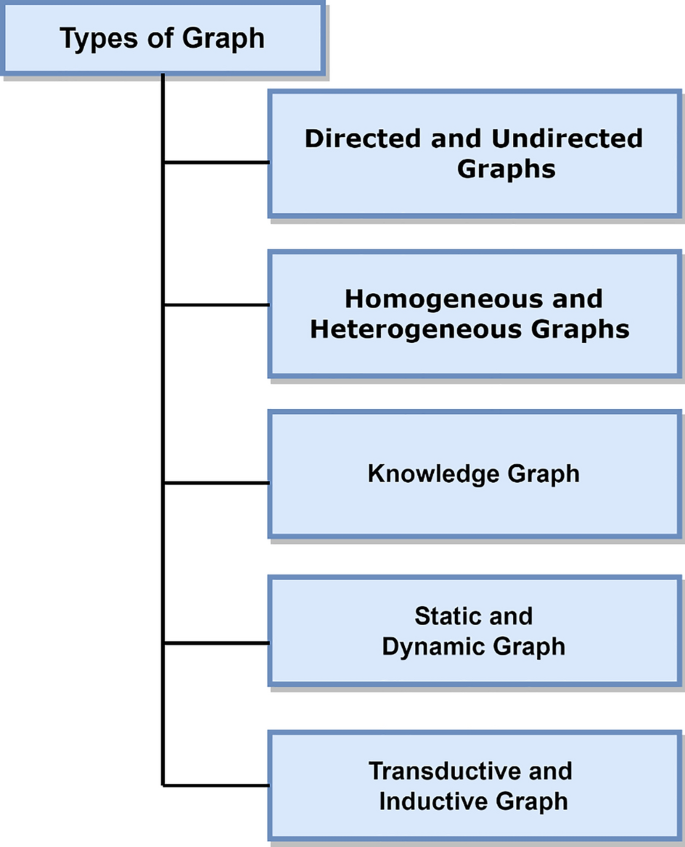

There may be more information about nodes and links in complex graph types. Graphs are typically divided into 5 categories, as shown in Fig. 10 .

Types of Graphs

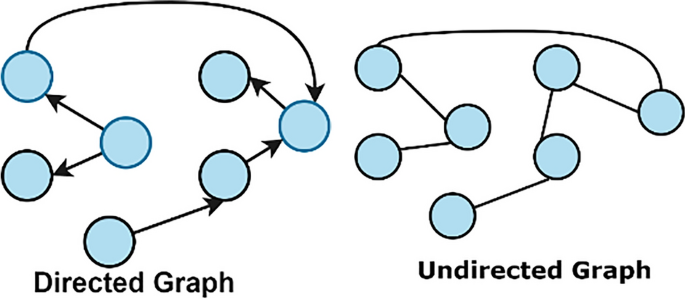

Directed/undirected graphs

A directed graph is characterized by edges with a specific direction, indicating the flow from one node to another. Conversely, in an undirected graph, the edges lack a designated direction, allowing nodes to interact bidirectionally. As illustrated in Fig. 11 (left side), the directed graph exhibits directed edges, while in Fig. 11 (right side), the undirected graph conspicuously lacks directional edges. In undirected graphs, it's important to note that each edge can be considered to comprise two directed edges, allowing for mutual interaction between connected nodes.
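The remark that an undirected edge behaves like two directed edges can be made concrete with adjacency matrices. The sketch below is illustrative only; the three-node edge list and the helper `adjacency` are hypothetical.

```python
import numpy as np

def adjacency(num_nodes, edges, directed):
    """Build a 0/1 adjacency matrix from a list of (source, target) pairs."""
    A = np.zeros((num_nodes, num_nodes), dtype=int)
    for src, dst in edges:
        A[src, dst] = 1
        if not directed:
            A[dst, src] = 1  # an undirected edge acts as two directed edges
    return A

edges = [(0, 1), (1, 2), (2, 0)]  # hypothetical example graph
A_directed = adjacency(3, edges, directed=True)
A_undirected = adjacency(3, edges, directed=False)

print(A_directed)
print(A_undirected)
```

The undirected matrix is symmetric, while the directed one in general is not.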

Directed/Undirected Graph

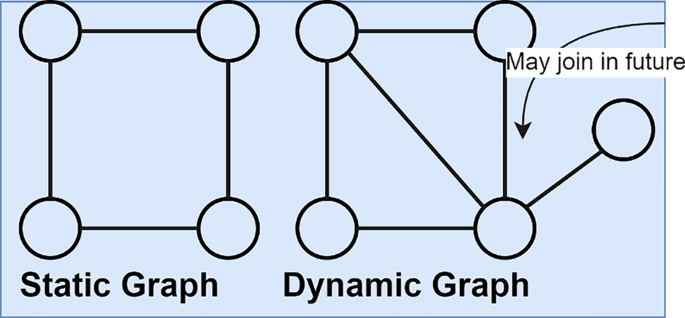

Static/dynamic graphs

The term “dynamic graph” refers to a graph whose properties or structure change with time. In dynamic graphs, shown in Fig. 12 , the temporal dimension must be accounted for appropriately. Dynamic graphs represent time-dependent events, such as the addition and removal of nodes and edges, typically presented as an ordered sequence or an asynchronous stream.

A noteworthy example of a dynamic graph can be observed in social networks like Twitter. In such networks, a new node is created each time a new user joins, and when a user follows another individual, a “follows” edge is established. Furthermore, when users update their profiles, the respective nodes are also modified, reflecting the evolving nature of the graph. The terms “static” and “dynamic” are also used in a different sense for the computation graphs of deep-learning libraries: TensorFlow 1.x, for instance, builds a static computation graph before execution, while PyTorch builds its computation graph dynamically at run time.
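A dynamic graph presented as an ordered sequence of events can be sketched as follows. The `DynamicGraph` class, the event names, and the users "alice" and "bob" are all hypothetical and chosen only to mirror the social-network example; this is not the interface of any graph library.

```python
from dataclasses import dataclass, field

@dataclass
class DynamicGraph:
    nodes: dict = field(default_factory=dict)  # node id -> attribute dict
    edges: set = field(default_factory=set)    # (source, target) pairs

    def apply(self, event):
        """Apply one time-ordered event to the current graph state."""
        kind = event[0]
        if kind == "add_node":          # a new user joins
            _, node, attrs = event
            self.nodes[node] = dict(attrs)
        elif kind == "update_node":     # a user edits their profile
            _, node, attrs = event
            self.nodes[node].update(attrs)
        elif kind == "add_edge":        # one user follows another
            _, src, dst = event
            self.edges.add((src, dst))
        elif kind == "remove_node":     # a user leaves; drop incident edges too
            _, node = event
            self.nodes.pop(node, None)
            self.edges = {(s, t) for s, t in self.edges if node not in (s, t)}
        else:
            raise ValueError(f"unknown event type: {kind}")

events = [
    ("add_node", "alice", {"bio": ""}),
    ("add_node", "bob", {"bio": ""}),
    ("add_edge", "alice", "bob"),
    ("update_node", "alice", {"bio": "graph enthusiast"}),
    ("remove_node", "bob"),
]

graph = DynamicGraph()
snapshots = []  # (number of nodes, number of edges) after each event
for event in events:
    graph.apply(event)
    snapshots.append((len(graph.nodes), len(graph.edges)))

print(snapshots)
```

Recording a snapshot after every event is one simple way to expose the temporal dimension; a static graph would correspond to a single snapshot.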

Static/Dynamic Graph

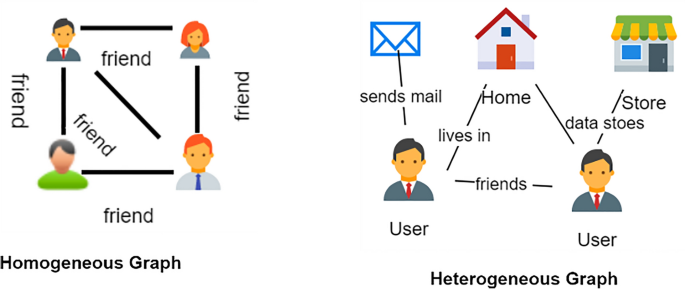

Homogeneous/heterogeneous graphs

Homogeneous graphs, shown in Fig. 13 (Left), have only one type of node and one type of edge. An example is an online social network in which all nodes represent people and all edges represent friendship.

Heterogeneous graphs, shown in Fig. 13 (Right), have two or more different kinds of nodes or edges. An example is an online social network with several kinds of edges, such as ‘friendship’ and ‘co-worker,’ between nodes of the ‘person’ type. The types of nodes and edges play critical roles in heterogeneous networks and require further consideration.
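One simple way to represent the distinction is to attach a type to every node and every edge. The sketch below assumes a tiny hypothetical social network (the names and the helpers `edges_by_type` and `is_homogeneous` are illustrative) and treats a graph as homogeneous only when it has a single node type and a single edge type.

```python
from collections import defaultdict

# Hypothetical social network: all nodes are people, edges carry a relation type.
node_type = {"ann": "person", "ben": "person", "cara": "person"}
typed_edges = [
    ("ann", "friendship", "ben"),
    ("ann", "co-worker", "cara"),
    ("ben", "co-worker", "cara"),
]

def edges_by_type(typed_edges):
    """Group (source, target) pairs under their edge type."""
    grouped = defaultdict(list)
    for src, relation, dst in typed_edges:
        grouped[relation].append((src, dst))
    return dict(grouped)

def is_homogeneous(node_type, typed_edges):
    """True when there is at most one node type and at most one edge type."""
    node_kinds = set(node_type.values())
    edge_kinds = {relation for _, relation, _ in typed_edges}
    return len(node_kinds) <= 1 and len(edge_kinds) <= 1

print(edges_by_type(typed_edges))
print(is_homogeneous(node_type, typed_edges))
```

Keeping only the ‘friendship’ edges would turn the same network into a homogeneous graph.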

Homogeneous (Left), Heterogeneous (Right) Graph

Knowledge graphs

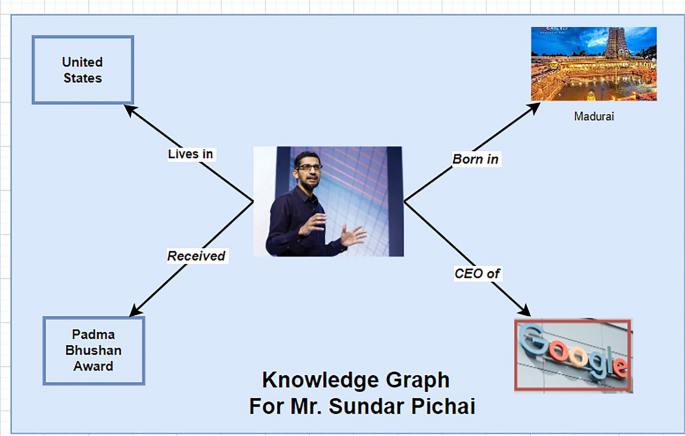

A Knowledge Graph (KG) is a network of entity nodes and relationship edges that can be represented as a set of triples of the form (h, r, t) or (s, r, o), with each triple representing a single relationship between two entities. The relationship between the head entity (h) and the tail entity (t) is denoted by r. From this perspective, a Knowledge Graph can be considered a heterogeneous graph. A Knowledge Graph depicts real-world objects and their relationships [ 32 ]. It can be used for many purposes, including information retrieval, knowledge-guided innovation, and question answering [ 30 ]. Entities are objects or things that exist in the real world, including people, organizations, places, music tracks, and movies. Similarly, each relation type describes a particular kind of relationship between entities. Fig. 14 shows the Knowledge Graph for Mr. Sundar Pichai.
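The triple representation can be sketched as a plain list of (h, r, t) tuples. The triples below are illustrative and are not taken from Fig. 14; the relation names and the helpers `tails` and `entities` are hypothetical.

```python
# Each triple is (head entity, relation, tail entity).
triples = [
    ("Sundar Pichai", "ceo_of", "Google"),
    ("Sundar Pichai", "born_in", "Madurai"),
    ("Google", "headquartered_in", "Mountain View"),
    ("Madurai", "located_in", "India"),
]

def tails(triples, head, relation):
    """Answer a simple query: which entities does `head` reach via `relation`?"""
    return [t for h, r, t in triples if h == head and r == relation]

def entities(triples):
    """Every entity that appears as a head or a tail becomes a node."""
    return {h for h, _, _ in triples} | {t for _, _, t in triples}

print(tails(triples, "Sundar Pichai", "ceo_of"))
print(sorted(entities(triples)))
```

Each distinct relation name plays the role of an edge type, which is why a knowledge graph can be read as a heterogeneous graph.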

Knowledge graph

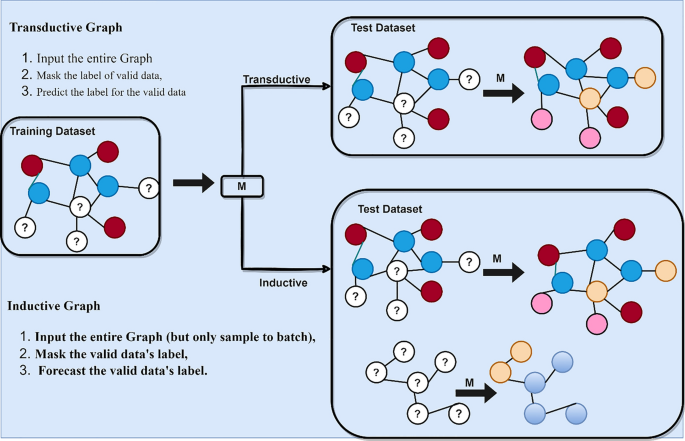

Transductive/inductive graphs

In a transductive scenario, shown in Fig. 15 (up), the entire graph is given as input, the labels of the validation data are hidden, and the model predicts those hidden labels. In an inductive scenario, shown in Fig. 15 (down), we also input the entire graph (but only a sample of it in each batch), mask the labels of the validation data, and predict those labels. In the transductive setting, the model must predict the labels of the given unlabeled nodes. In the inductive setting, the model can also infer labels for new unlabeled nodes drawn from the same distribution.

Transductive/Inductive Graphs

Transductive Graph:

In the transductive approach, the entire graph is provided as input.

This method involves concealing the labels of the validation data.

The primary objective is to predict the labels of the validation data.

Inductive Graph:

The inductive approach still uses the complete graph, but only a sample of it is considered within each batch.

A crucial step in this process is masking the labels of the validation data.

The key aim here is to predict the labels of the validation data.
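The difference between the two settings can be sketched with label masks. This is a simplified illustration under stated assumptions: the six-node path graph, the labels, and the held-out (validation) nodes are hypothetical, hidden labels are marked with -1, and the inductive view follows the common convention that held-out nodes and their edges are not visible during training, which goes slightly beyond the description above.

```python
import numpy as np

edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]  # hypothetical path graph
labels = np.array([0, 0, 1, 1, 0, 1])             # hypothetical node labels
held_out = {4, 5}                                 # validation nodes

def mask_labels(labels, held_out):
    """Hide the labels of held-out nodes by replacing them with -1."""
    visible = labels.copy()
    visible[list(held_out)] = -1
    return visible

def transductive_view(edges, labels, held_out):
    """Training sees the whole graph; only the held-out labels are hidden."""
    return list(edges), mask_labels(labels, held_out)

def inductive_view(edges, labels, held_out):
    """Training sees only the subgraph that excludes the held-out nodes."""
    train_edges = [(u, v) for u, v in edges
                   if u not in held_out and v not in held_out]
    return train_edges, mask_labels(labels, held_out)

t_edges, t_labels = transductive_view(edges, labels, held_out)
i_edges, i_labels = inductive_view(edges, labels, held_out)
print(len(t_edges), t_labels.tolist())
print(len(i_edges), i_labels.tolist())
```

In both views the prediction targets are the same held-out labels; what changes is how much of the graph the model is allowed to see while learning.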

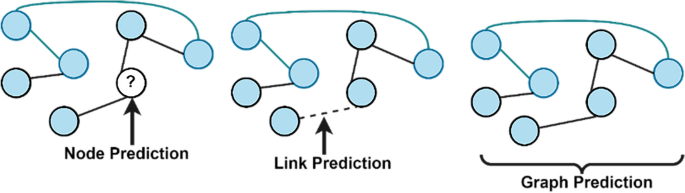

Graph learning tasks

We perform three tasks with graphs: node classification, link prediction, and graph classification, as shown in Fig. 16 .

Node Level Prediction (e.g., social network) (Left), Edge Level Prediction (e.g., Next YouTube Video?) (Middle), Graph Level Prediction (e.g., molecule) (Right)

Node-level task

Node-level tasks are primarily concerned with determining the identity or function of each node within a graph. The core objective of a node-level task is to predict specific properties associated with individual nodes. For example, a node-level task in social networks could involve predicting which social group a new member is likely to join based on their connections and the memberships of their friends. Node-level tasks are typically used to infer properties of unlabeled nodes, such as identifying whether a particular individual is a smoker.

Edge-level task (link prediction)

Edge-level tasks revolve around analyzing relationships between pairs of nodes in a graph. An illustrative application of an edge-level task is assessing the compatibility or likelihood of a connection between two entities, as seen in matchmaking or dating apps. Another instance of an edge-level task arises on platforms like Netflix, where the task involves predicting the next video to recommend based on viewing history and user preferences.

Graph-level

In graph-level tasks, the objective is to make predictions about a characteristic or property that encompasses the entire graph. For example, using a graph-based representation, one might aim to predict attributes like the olfactory quality of a molecule or its potential to bind with a disease-associated receptor. The essence of a graph-level task is to provide predictions that pertain to the graph as a whole. For instance, when assessing a newly synthesized chemical compound, a graph-level task might seek to determine whether the molecule has the potential to be an effective drug. A summary of all three learning tasks is shown in Fig. 17 .
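The three task levels differ mainly in how node embeddings are read out. The sketch below assumes that some GNN has already produced an embedding matrix H (here replaced by random stand-in values) and uses hypothetical, untrained read-out weights; the dot-product link score and mean pooling are common choices assumed for illustration, not methods prescribed by the text.

```python
import numpy as np

rng = np.random.default_rng(0)
H = rng.normal(size=(5, 8))        # stand-in embeddings: 5 nodes, 8 dimensions
W_node = rng.normal(size=(8, 3))   # hypothetical weights for 3 node classes
w_graph = rng.normal(size=8)       # hypothetical weights for one graph score

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def node_level(H, W):
    """Node-level: one predicted class per node."""
    return (H @ W).argmax(axis=1)

def edge_level(H, u, v):
    """Edge-level: probability-like score that a link exists between u and v."""
    return sigmoid(H[u] @ H[v])

def graph_level(H, w):
    """Graph-level: pool all node embeddings, then score the whole graph."""
    return sigmoid(H.mean(axis=0) @ w)

print(node_level(H, W_node))
print(edge_level(H, 0, 1))
print(graph_level(H, w_graph))
```

The same embeddings serve all three tasks; only the final read-out changes.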

Graph Learning Tasks Summary

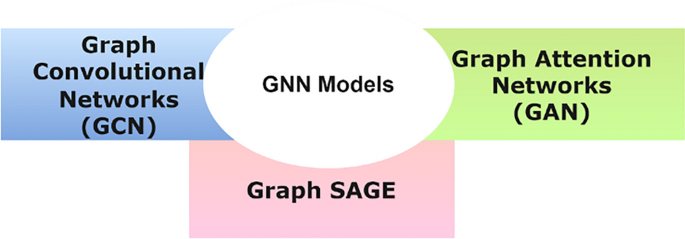

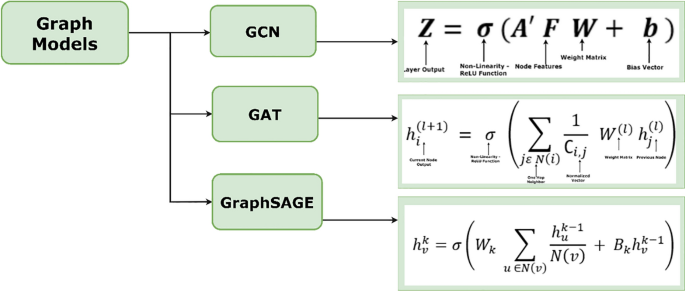

GNN models and comparative analysis of GNN models

Graph Neural Network (GNN) models are a category of neural networks designed to process data organized in graph structures. They have gained wide adoption across various domains, primarily because of their ability to capture intricate relationships and patterns within graph data. As illustrated in Fig. 18 , we outline three distinct GNN models. A comprehensive description of these models, specifically Graph Convolutional Networks (GCN), Graph Attention Networks (GAT/GAN), and GraphSAGE, can be found in reference [ 33 ]. In the “GNN models” section, we examine these models in detail; in the “Comparative Study of GNN Models” section, we analyze their theoretical and practical aspects.

Graph convolution neural network (GCN)

GCN is one of the basic graph neural network variants, developed in its widely used form by Thomas Kipf and Max Welling. The 'convolution' in GCNs is essentially the same process as in the convolution layers of Convolutional Neural Networks (CNNs). In a CNN, the input neurons are multiplied by weights called filters or kernels. The filters act as a sliding window across the image, allowing the CNN to learn information from nearby cells. Weight sharing means that the same filter is used within the same layer throughout the image; for example, when a CNN is used to distinguish photos of cats from non-cats, the same filter is applied across the whole image in a given layer to detect the cat's nose and ears [ 33 ]. Graph convolutions were first introduced in “Spectral Networks and Deep Locally Connected Networks on Graphs” [ 34 ].

GCNs, which learn features by analyzing neighboring nodes, behave similarly. The primary difference between CNNs and GNNs is that CNNs are made to operate on regular (Euclidean), ordered data. GNNs, on the other hand, are a generalized version of CNNs in which the number of connections per node varies and the nodes are unordered (irregular, non-Euclidean structured data). GCNs have been applied to many problems, for example, image classification [ 35 ], traffic forecasting [ 36 ], recommendation systems [ 17 ], scene graph generation [ 37 ], and visual question answering [ 38 ].

GCNs are particularly well-suited for tasks that involve data represented as graphs, such as social networks, citation networks, recommendation systems, and more. These networks are an extension of traditional CNNs, widely used for tasks involving grid-like data, such as images. The key idea behind GCNs is to perform convolution operations on the graph data. This enables them to capture and propagate information through the nodes in a graph by considering both a node’s features and those of its neighboring nodes. GCNs typically consist of several layers, each performing convolution and aggregation steps to refine the node representations in the graph. By applying these layers iteratively, GCNs can capture complex patterns and dependencies within the graph data.

Working of graph convolutional network

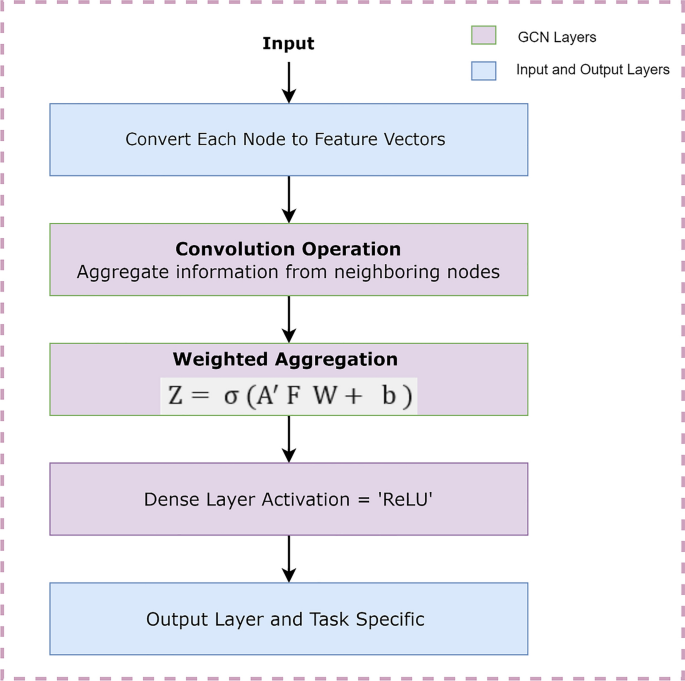

A Graph Convolutional Network (GCN) is a type of neural network architecture designed for processing and analyzing graph-structured data. GCNs work by aggregating and propagating information through the nodes in a graph. GCN works with the following steps shown in Fig. 19 :

Working of GCN

Initialization:

Each node in the graph is associated with a feature vector. Depending on the application, these feature vectors can represent various attributes or characteristics of the nodes. For example, in a social network, each node might represent a user, and the features could include user profile information.

Convolution Operation:

The core of a GCN is the convolution operation, which is adapted from convolutional neural networks (CNNs). It aims to aggregate information from neighboring nodes. This is done by taking a weighted sum of the feature vectors of neighboring nodes. The graph's adjacency matrix determines the weights. The resulting aggregated information is a new feature vector for each node.

Weighted Aggregation:

The graph's adjacency matrix, typically after normalization, provides the weights for the aggregation process. For a given node, the features of its neighboring nodes are scaled by the corresponding entries of the adjacency matrix, and the results are then summed. A precise mathematical description of this aggregation step is given in the “Equation of GCN” section.

Activation function and learning weights:

The aggregated features are multiplied by a weight matrix W and typically passed through an activation function (e.g., ReLU) to introduce non-linearity. The weight matrix W is learned during training. This learning process allows the GCN to adapt to the specific graph and task it is designed for.

Stacking Layers:

GCNs are often used in multiple layers. This allows the network to capture more complex relationships and higher-level features in the graph. The output of one GCN layer becomes the input for the next, and this process is repeated for a predefined number of layers.

Task-Specific Output:

The final output of the GCN can be used for various graph-based tasks, such as node classification, link prediction, or graph classification, depending on the specific application.
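The steps above can be sketched as a two-layer forward pass in NumPy. This is a minimal illustration under stated assumptions: the four-node path graph and one-hot features are hypothetical, the weights are random and untrained, and the normalization (self-loops followed by symmetric degree scaling) is the commonly used choice rather than something the text specifies.

```python
import numpy as np

def normalize_adjacency(A):
    """Add self-loops, then scale symmetrically: D^-1/2 (A + I) D^-1/2."""
    A_tilde = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))
    return A_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def relu(x):
    return np.maximum(x, 0.0)

def softmax(x):
    e = np.exp(x - x.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def gcn_layer(A_norm, F, W, b, activation):
    """Aggregate neighbor features, apply learned weights, then the activation."""
    return activation(A_norm @ F @ W + b)

# Initialization: hypothetical path graph 0 - 1 - 2 - 3, one feature vector per node.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
F = np.eye(4)

rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(4, 8)), np.zeros(8)  # untrained, for illustration only
W2, b2 = rng.normal(size=(8, 2)), np.zeros(2)

A_norm = normalize_adjacency(A)                 # weights for the aggregation
H1 = gcn_layer(A_norm, F, W1, b1, relu)         # convolution + activation
probs = gcn_layer(A_norm, H1, W2, b2, softmax)  # stacked layer, task-specific output
predicted = probs.argmax(axis=1)                # node classification into 2 classes

print(np.round(A_norm, 3))
print(predicted)
```

In practice W1, b1, W2, and b2 would be learned by gradient descent on labeled nodes; here they only show how data flows through the layers.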

Equation of GCN

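The Graph Convolutional Network (GCN) is based on a message-passing mechanism that can be described using mathematical equations. For a graph with N nodes, the core equation of a simple, first-order GCN layer can be expressed as \(Z=\sigma (A{\prime}FW+b)\) (5.1), where A' is the normalized graph adjacency matrix, F is the node feature matrix, W is the weight matrix, b is the bias vector, \(\sigma\) is an element-wise non-linear function such as ReLU, and Z is the layer's output matrix.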

Equation 5.1 depicts a GCN layer's design. The normalized graph adjacency matrix A' and the node feature matrix F serve as the layer's inputs. The bias vector b and the weight matrix W are trainable parameters of the layer.

When multiplied with the feature matrix F, the normalized adjacency matrix, which captures the graph structure, effectively smooths each node’s feature vector based on the feature vectors of its close graph neighbors. A’ is normalized so that each neighboring node’s contribution is scaled according to the graph's connectivity.

The layer definition is completed by applying an element-wise non-linear function, such as ReLU, to A'FW + b. The downstream node classification task requires deep neural architectures to learn a complicated hierarchy of node attributes. To this end, the layer's output matrix Z can be fed into another GCN layer or any other neural network layer.
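The smoothing effect of A' can be checked by hand on a two-node graph. The worked example below is a sketch under assumptions not stated in the text: A' is obtained by adding self-loops and applying symmetric degree normalization, and the feature values, W = 1, and b = 0 are chosen only to keep the arithmetic simple.

```latex
\[
A=\begin{pmatrix}0&1\\1&0\end{pmatrix},\quad
\tilde{A}=A+I=\begin{pmatrix}1&1\\1&1\end{pmatrix},\quad
\tilde{D}=\begin{pmatrix}2&0\\0&2\end{pmatrix},\quad
A'=\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}
  =\begin{pmatrix}\tfrac12&\tfrac12\\[2pt]\tfrac12&\tfrac12\end{pmatrix}
\]
\[
F=\begin{pmatrix}2\\4\end{pmatrix},\quad W=(1),\quad b=0
\;\Longrightarrow\;
A'FW+b=\begin{pmatrix}\tfrac12\cdot 2+\tfrac12\cdot 4\\[2pt]\tfrac12\cdot 2+\tfrac12\cdot 4\end{pmatrix}
      =\begin{pmatrix}3\\3\end{pmatrix},\qquad
Z=\mathrm{ReLU}\begin{pmatrix}3\\3\end{pmatrix}=\begin{pmatrix}3\\3\end{pmatrix}
\]
```

Both nodes end up with the average of the two original features, which is the smoothing behavior described above in its simplest form.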

A summary of the graph convolution neural network (GCN) is shown in Table 2 .

Graph attention network (GAT/GAN)

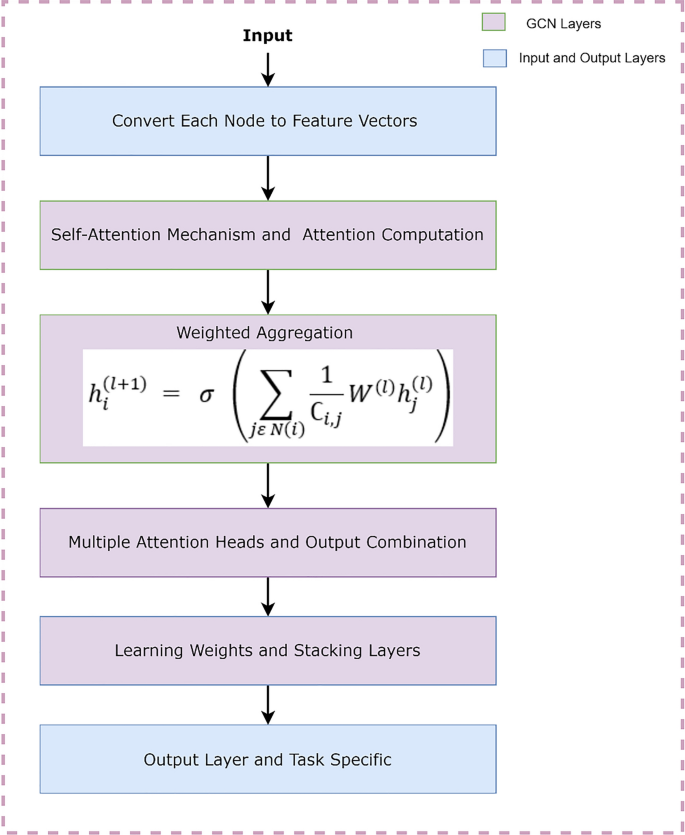

Graph Attention Network (GAT/GAN) is a neural network architecture that works with graph-structured data. It uses masked self-attentional layers to address the shortcomings of earlier methods that depended on graph convolutions or their approximations. By stacking layers in which nodes attend to the features of their neighborhoods, the model can (implicitly) assign different weights to different nodes in a neighborhood, without having to perform an expensive matrix operation (like inversion) or rely on prior knowledge of the graph's structure. GAT thereby addresses several significant limitations of spectral-based graph neural networks at once, making the model suitable for both inductive and transductive applications.

Working of GAT