
Data Analysis in Research: Types & Methods

Content Index

- What is data analysis in research?

- Why analyze data in research?

- Types of data in research

- Finding patterns in the qualitative data

- Methods used for data analysis in qualitative research

- Preparing data for analysis

- Methods used for data analysis in quantitative research

- Considerations in research data analysis

What is data analysis in research?

Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process researchers use to reduce data to a story and interpret it to derive insights. The process helps reduce a large chunk of data into smaller fragments that make sense.

Three essential things occur during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third is the analysis itself, which researchers carry out in both a top-down and a bottom-up fashion.


On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Why analyze data in research?

Researchers rely heavily on data because they have a story to tell or research problems to solve. It starts with a question, and data is nothing but an answer to that question. But what if there is no question to ask? It is still possible to explore data without a specific problem. This is called ‘data mining’, and it often reveals interesting patterns within the data that are worth exploring.

Regardless of the type of data researchers explore, their mission and their audience’s vision guide them to find the patterns that shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember that data analysis sometimes tells the most unforeseen yet exciting stories, ones that were not expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.


Types of data in research

Every kind of data describes something once a specific value has been assigned to it. For analysis, you need to organize these values, process them, and present them in a given context to make them useful. Data can take different forms; here are the primary data types.

- Qualitative data: When the data presented consists of words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze in research, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: answers to questions about age, rank, cost, length, weight, scores, and so on all come under this type of data. You can present such data in graphs and charts or apply statistical analysis methods to it. The Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: This is data presented in groups. An item included in categorical data cannot belong to more than one group. Example: a person responding to a survey about their lifestyle, marital status, smoking habit, or drinking habit provides categorical data. A chi-square test is a standard method used to analyze this data.


Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data, as qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is a complicated process. Hence it is typically used for exploratory research and data analysis.

Finding patterns in the qualitative data

Although there are several ways to find patterns in textual information, the word-based method is the most relied upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is largely manual: researchers usually read the available data and look for repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
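The word-based approach can also be sketched in code. The following is a minimal illustration in Python, not a procedure taken from this article; the sample responses and the short stop-word list are invented for the example.

```python
import re
from collections import Counter

# Hypothetical open-ended responses, invented for illustration only.
responses = [
    "Food prices keep rising and hunger is spreading",
    "We need clean water and food",
    "Hunger affects the children most",
]

# A tiny, assumed stop-word list; a real study would use a fuller one.
STOP_WORDS = {"and", "is", "the", "we", "keep", "most"}

def word_frequencies(texts):
    """Count how often each non-stop word appears across all texts."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts

freq = word_frequencies(responses)
# Show the two most frequent words with their counts.
print(freq.most_common(2))
```

Words that rise to the top of such a count are candidates for closer reading, not conclusions in themselves.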


The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example , researchers conducting research and data analysis for studying the concept of ‘diabetes’ amongst respondents might analyze the context of when and how the respondent has used or referred to the word ‘diabetes.’
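A keyword-in-context listing like the one described above can be approximated with a few lines of Python. This is only a sketch: the function name `keyword_in_context`, the window size, and the interview excerpt are all assumptions made for illustration.

```python
def keyword_in_context(text, keyword, window=3):
    """Return each occurrence of `keyword` with `window` words on either side."""
    words = text.split()
    hits = []
    for i, word in enumerate(words):
        # Strip surrounding punctuation before comparing, case-insensitively.
        if word.strip(".,;:!?'\"").lower() == keyword.lower():
            left = " ".join(words[max(0, i - window):i])
            right = " ".join(words[i + 1:i + 1 + window])
            hits.append((left, word, right))
    return hits

# Hypothetical interview excerpt, invented for illustration.
transcript = (
    "My mother was diagnosed with diabetes last year. "
    "Since then I worry that diabetes runs in our family."
)

contexts = keyword_in_context(transcript, "diabetes")
for left, word, right in contexts:
    print(f"... {left} [{word}] {right} ...")
```

Reading the left and right contexts side by side makes it easier to see whether respondents mention the keyword as a diagnosis, a fear, or something else.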

The scrutiny-based technique is another highly recommended text analysis method for identifying patterns in qualitative data. Compare and contrast is the most widely used method under this technique; it examines how specific pieces of text are similar to or different from each other.

For example: To find out the “importance of a resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method for analyzing polls with single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.


Methods used for data analysis in qualitative research

There are several techniques for analyzing data in qualitative research; here are some commonly used methods:

- Content Analysis: It is widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. When and where to use this method depends on the research questions.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, it focuses on the stories or opinions people share in order to find answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze interactions with people. However, this method considers the social context within which the communication between the researcher and respondent takes place. In addition, discourse analysis also takes the respondent's lifestyle and day-to-day environment into account when deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, grounded theory is the best resort for analyzing qualitative data. Grounded theory is applied to study data about a host of similar cases occurring in different settings. When researchers use this method, they might alter explanations or produce new ones until they arrive at a conclusion.


Data analysis in quantitative research

Preparing data for analysis

The first stage in research and data analysis is to prepare the data for analysis so that the nominal data can be converted into something meaningful. Data preparation consists of the phases below.

Phase I: Data Validation

Data validation is done to understand whether the collected data sample meets the pre-set standards or is a biased data sample. It is divided into four different stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey or, in an interview, that the interviewer has asked all the questions devised in the questionnaire.

Phase II: Data Editing

More often than not, an extensive research data sample comes loaded with errors. Respondents sometimes fill in some fields incorrectly or skip them accidentally. Data editing is the process in which researchers confirm that the provided data is free of such errors. They need to conduct the necessary basic checks and outlier checks to edit the raw data and make it ready for analysis.

Phase III: Data Coding

Out of all three, this is the most critical phase of data preparation. It involves grouping survey responses and assigning values to them. If a survey is completed with a sample size of 1,000, the researcher might create age brackets to distinguish the respondents based on their age. It then becomes easier to analyze small data buckets than to deal with the massive data pile.
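As a rough illustration of the coding step, the snippet below groups raw ages into brackets. The bracket boundaries, the `code_age` helper, and the sample ages are assumptions chosen for the example, not values from any particular survey.

```python
from collections import Counter

# Assumed bracket edges: (label, lowest age, highest age), both inclusive.
AGE_BRACKETS = [
    ("18-24", 18, 24),
    ("25-34", 25, 34),
    ("35-54", 35, 54),
    ("55+", 55, 200),
]

def code_age(age):
    """Map a raw age to its bracket label, or 'other' if none applies."""
    for label, low, high in AGE_BRACKETS:
        if low <= age <= high:
            return label
    return "other"

# Hypothetical respondent ages, invented for illustration.
ages = [19, 23, 31, 45, 52, 67, 28, 16]
coded = [code_age(a) for a in ages]

# Size of each data bucket after coding.
bucket_sizes = Counter(coded)
print(bucket_sizes)
```

Keeping an explicit "other" bucket makes it visible when a response falls outside the planned brackets, which can itself point back to a screening problem.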


Methods used for data analysis in quantitative research

After the data is prepared for analysis, researchers can use different research and data analysis methods to derive meaningful insights. Statistical analysis is the most favored approach for analyzing numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. Statistical methods are classified into two groups: ‘descriptive statistics’, used to describe data, and ‘inferential statistics’, which help in comparing the data.

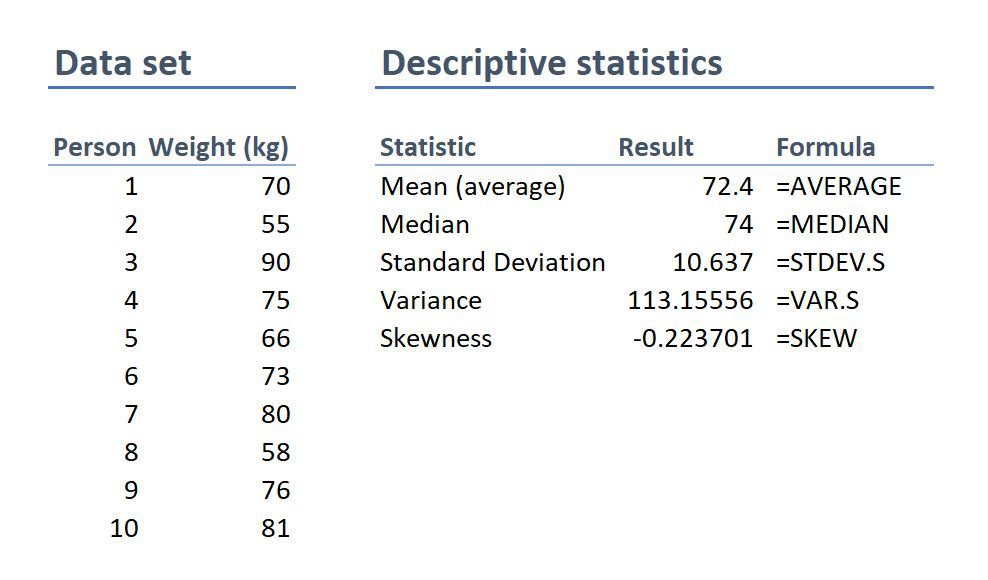

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in such a meaningful way that patterns in the data start making sense. Nevertheless, descriptive analysis does not support conclusions that go beyond the data at hand; any conclusions are still based on the hypotheses researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.
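A small sketch of these frequency measures, using Python's standard library; the list of answers is hypothetical.

```python
from collections import Counter

# Hypothetical answers to a single-choice survey question.
answers = ["yes", "no", "yes", "yes", "unsure", "no", "yes", "yes"]

counts = Counter(answers)   # count per distinct response
total = len(answers)

# Percent = count / total * 100 for each distinct response.
percents = {answer: 100 * n / total for answer, n in counts.items()}

for answer, n in counts.most_common():
    print(f"{answer:>7}: {n} ({percents[answer]:.1f}%)")
```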

Measures of Central Tendency

- Mean, Median, Mode

- The method is widely used to summarize a distribution by a single representative point.

- Researchers use this method when they want to showcase the most common or the average response.
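The three measures can be computed with Python's built-in `statistics` module. The scores below are made up for the example.

```python
import statistics

# Hypothetical test scores, invented for illustration.
scores = [72, 85, 85, 90, 64, 85, 78]

mean_score = statistics.mean(scores)      # arithmetic average
median_score = statistics.median(scores)  # middle value once sorted
mode_score = statistics.mode(scores)      # most frequent value

print(f"mean={mean_score:.2f}, median={median_score}, mode={mode_score}")
```

In this sample the mean sits below the median because one low score pulls the average down, which is one reason to report more than a single measure.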

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Range = the difference between the highest and lowest points.

- Variance and standard deviation are based on the difference between each observed score and the mean.

- It is used to identify the spread of scores by stating intervals.

- Researchers use this method to show how spread out the data is. It helps them identify how far the data is spread out and whether that spread directly affects the mean.
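The following sketch computes these dispersion measures with the standard `statistics` module. The data is hypothetical, and both the population and the sample versions of the variance are shown because which one applies depends on whether the scores are the whole population or a sample from it.

```python
import statistics

# Hypothetical scores, invented for illustration.
scores = [4, 8, 6, 5, 3, 10]

value_range = max(scores) - min(scores)

# Population variance divides the squared deviations by n;
# sample variance divides by n - 1.
population_variance = statistics.pvariance(scores)
sample_variance = statistics.variance(scores)

# Standard deviation is the square root of the variance.
population_std = statistics.pstdev(scores)

print(value_range, population_variance, sample_variance, population_std)
```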

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores, helping researchers identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average.
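A brief sketch of both position measures follows. Definitions of percentile rank vary; the helper below assumes the "percentage of scores at or below the value" definition, and the quartiles use the default method of `statistics.quantiles`. The scores are hypothetical.

```python
import statistics

def percentile_rank(scores, value):
    """Percentage of scores that are less than or equal to `value`."""
    at_or_below = sum(1 for s in scores if s <= value)
    return 100 * at_or_below / len(scores)

# Hypothetical exam scores, invented for illustration.
scores = [55, 60, 65, 70, 75, 80, 85, 90, 95]

rank_of_80 = percentile_rank(scores, 80)

# Three cut points that split the data into four equal-sized groups.
quartiles = statistics.quantiles(scores, n=4)

print(f"percentile rank of 80: {rank_of_80:.1f}")
print(f"quartiles: {quartiles}")
```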

In quantitative research, descriptive analysis often gives absolute numbers, but those numbers alone are never sufficient to demonstrate the rationale behind them. It is therefore necessary to think of the method of research and data analysis that best suits your survey questionnaire and the story researchers want to tell. For example, the mean is the best way to demonstrate students’ average scores in schools. It is better to rely on descriptive statistics when researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare the average voting in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after research and data analysis of a sample collected from that population. For example, you can ask around 100 audience members at a movie theater if they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.
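To make the movie theater example concrete, here is a minimal sketch of one common way to turn a sample proportion into a range for the population: a 95% confidence interval using the normal approximation. The figure of 85 positive answers out of 100 is an assumption for illustration, and other interval methods exist.

```python
import math

def proportion_confidence_interval(successes, n, z=1.96):
    """Normal-approximation confidence interval for a proportion.

    z=1.96 corresponds to a 95% confidence level.
    """
    p = successes / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return p - margin, p + margin

# Assumed survey result: 85 of 100 viewers say they like the movie.
low, high = proportion_confidence_interval(85, 100)
print(f"Estimated share who like the movie: {low:.1%} to {high:.1%}")
```

The interval narrows as the sample grows, which is why the sample size matters as much as the observed percentage.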

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: It’s about sampling research data to answer the survey research questions. For example, researchers might be interested in understanding whether a recently launched shade of lipstick is well received, or whether multivitamin capsules help children perform better at games.

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. They are often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental or quasi-experimental research but are interested in understanding the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose the provided data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation helps for seamless data analysis and research by showing the number of males and females in each age category.

- Regression analysis: To understand the strength of the relationship between variables, researchers commonly turn to regression analysis, which is also a type of predictive analysis. In this method, you have an essential factor called the dependent variable, and one or more independent variables. You undertake efforts to find out the impact of the independent variables on the dependent variable. The values of both independent and dependent variables are assumed to have been ascertained in an error-free, random manner.

- Frequency tables: A frequency table shows how often each value or category occurs in the data.

- Analysis of variance: This statistical procedure is used for testing the degree to which two or more groups vary or differ in an experiment. A considerable degree of variation between groups, relative to the variation within them, suggests that the research findings are significant. In many contexts, the terms ANOVA testing and variance analysis are used interchangeably.
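As an illustration of the cross-tabulation described in the list above, the sketch below counts respondents by age bracket and gender. The respondent records are hypothetical.

```python
from collections import Counter

# Hypothetical respondents as (age bracket, gender) pairs.
respondents = [
    ("18-34", "female"), ("18-34", "male"), ("35-54", "female"),
    ("18-34", "female"), ("55+", "male"), ("35-54", "male"),
    ("35-54", "female"), ("55+", "female"),
]

# Each (row, column) pair becomes one cell of the contingency table.
table = Counter(respondents)
rows = sorted({age for age, _ in respondents})
cols = sorted({gender for _, gender in respondents})

# Print the table with age brackets as rows and gender as columns.
print(f"{'age':<8}" + "".join(f"{c:>8}" for c in cols))
for r in rows:
    print(f"{r:<8}" + "".join(f"{table[(r, c)]:>8}" for c in cols))
```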

Considerations in research data analysis

- Researchers must have the necessary research skills to analyze and manipulate the data, and they should be trained to demonstrate a high standard of research practice. Ideally, researchers must possess more than a basic understanding of the rationale for selecting one statistical method over another to obtain better data insights.

- Usually, research and data analytics projects differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps design a survey questionnaire, select data collection methods , and choose samples.


- The primary aim of data research and analysis is to derive ultimate insights that are unbiased. Any mistake in collecting data, selecting an analysis method, or choosing an audience sample, or approaching any of these with a biased mind, will lead to a biased inference.

- No amount of sophistication in research data and analysis is enough to rectify poorly defined objective outcome measurements. Whether the design is at fault or the intentions are unclear, lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining , or developing graphical representation.

The sheer amount of data generated daily is daunting, especially now that data analysis has taken center stage. In 2018, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that enterprises willing to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to new market needs.



Data Analysis Techniques in Research – Methods, Tools & Examples

Varun Saharawat is a seasoned professional in the fields of SEO and content writing. With a profound knowledge of the intricate aspects of these disciplines, Varun has established himself as a valuable asset in the world of digital marketing and online content creation.

Data analysis techniques in research are essential because they allow researchers to derive meaningful insights from data sets to support their hypotheses or research objectives.

Data Analysis Techniques in Research : While various groups, institutions, and professionals may have diverse approaches to data analysis, a universal definition captures its essence. Data analysis involves refining, transforming, and interpreting raw data to derive actionable insights that guide informed decision-making for businesses.

A straightforward illustration of data analysis emerges when we make everyday decisions, basing our choices on past experiences or predictions of potential outcomes.


What is Data Analysis?

Data analysis is the systematic process of inspecting, cleaning, transforming, and interpreting data with the objective of discovering valuable insights and drawing meaningful conclusions. This process involves several steps:

- Inspecting : Initial examination of data to understand its structure, quality, and completeness.

- Cleaning : Removing errors, inconsistencies, or irrelevant information to ensure accurate analysis.

- Transforming : Converting data into a format suitable for analysis, such as normalization or aggregation.

- Interpreting : Analyzing the transformed data to identify patterns, trends, and relationships.
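The four steps above can be sketched end to end on a toy dataset. Everything here is illustrative: the rows, the `is_valid` helper, and the choice of min-max normalization are assumptions, and a real project would typically rely on a data-handling library.

```python
import statistics

# Hypothetical raw survey rows; None and "n/a" stand in for bad entries.
raw_rows = [
    {"id": 1, "hours": "5"},
    {"id": 2, "hours": "n/a"},
    {"id": 3, "hours": "12"},
    {"id": 4, "hours": None},
    {"id": 5, "hours": "7"},
]

def is_valid(value):
    """A value is usable only if it is a string of digits."""
    return isinstance(value, str) and value.isdigit()

# Inspect: how many rows lack a usable value?
missing = sum(1 for row in raw_rows if not is_valid(row["hours"]))

# Clean: drop rows whose value cannot be parsed.
clean_rows = [row for row in raw_rows if is_valid(row["hours"])]

# Transform: convert text to integers, then min-max normalize to 0..1.
hours = [int(row["hours"]) for row in clean_rows]
low, high = min(hours), max(hours)
normalized = [(h - low) / (high - low) for h in hours]

# Interpret: summarize the cleaned values.
average_hours = statistics.mean(hours)
print(f"{missing} unusable rows, average of the rest: {average_hours} hours")
```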

Types of Data Analysis Techniques in Research

Data analysis techniques in research are categorized into qualitative and quantitative methods, each with its specific approaches and tools. These techniques are instrumental in extracting meaningful insights, patterns, and relationships from data to support informed decision-making, validate hypotheses, and derive actionable recommendations. Below is an in-depth exploration of the various types of data analysis techniques commonly employed in research:

1) Qualitative Analysis:

Definition: Qualitative analysis focuses on understanding non-numerical data, such as opinions, concepts, or experiences, to derive insights into human behavior, attitudes, and perceptions.

- Content Analysis: Examines textual data, such as interview transcripts, articles, or open-ended survey responses, to identify themes, patterns, or trends.

- Narrative Analysis: Analyzes personal stories or narratives to understand individuals’ experiences, emotions, or perspectives.

- Ethnographic Studies: Involves observing and analyzing cultural practices, behaviors, and norms within specific communities or settings.

2) Quantitative Analysis:

Quantitative analysis emphasizes numerical data and employs statistical methods to explore relationships, patterns, and trends. It encompasses several approaches:

Descriptive Analysis:

- Frequency Distribution: Represents the number of occurrences of distinct values within a dataset.

- Central Tendency: Measures such as mean, median, and mode provide insights into the central values of a dataset.

- Dispersion: Techniques like variance and standard deviation indicate the spread or variability of data.

Diagnostic Analysis:

- Regression Analysis: Assesses the relationship between dependent and independent variables, enabling prediction and, under a suitable study design, insight into possible causal effects.

- ANOVA (Analysis of Variance): Examines differences between groups to identify significant variations or effects.

Predictive Analysis:

- Time Series Forecasting: Uses historical data points to predict future trends or outcomes.

- Machine Learning Algorithms: Techniques like decision trees, random forests, and neural networks predict outcomes based on patterns in data.

Prescriptive Analysis:

- Optimization Models: Utilizes linear programming, integer programming, or other optimization techniques to identify the best solutions or strategies.

- Simulation: Mimics real-world scenarios to evaluate various strategies or decisions and determine optimal outcomes.

Specific Techniques:

- Monte Carlo Simulation: Models probabilistic outcomes to assess risk and uncertainty.

- Factor Analysis: Reduces the dimensionality of data by identifying underlying factors or components.

- Cohort Analysis: Studies specific groups or cohorts over time to understand trends, behaviors, or patterns within these groups.

- Cluster Analysis: Classifies objects or individuals into homogeneous groups or clusters based on similarities or attributes.

- Sentiment Analysis: Uses natural language processing and machine learning techniques to determine sentiment, emotions, or opinions from textual data.
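Of the techniques listed above, Monte Carlo simulation is perhaps the easiest to demonstrate in a few lines. The sketch below estimates a probability by repeated random trials and compares it with the exact answer; the dice problem is a stand-in chosen because its true value (6 out of 36 outcomes) is easy to verify, and the function name and seed are assumptions.

```python
import random

def estimate_probability(trials, seed=0):
    """Estimate P(sum of two fair dice >= 10) by repeated random trials."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    hits = 0
    for _ in range(trials):
        if rng.randint(1, 6) + rng.randint(1, 6) >= 10:
            hits += 1
    return hits / trials

estimate = estimate_probability(100_000)
exact = 6 / 36  # six of the 36 equally likely outcomes sum to 10 or more

print(f"simulated: {estimate:.4f}, exact: {exact:.4f}")
```

In practice the same pattern is applied to problems with no closed-form answer, where the simulated estimate is the only one available.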


Data Analysis Techniques in Research Examples

To provide a clearer understanding of how data analysis techniques are applied in research, let’s consider a hypothetical research study focused on evaluating the impact of online learning platforms on students’ academic performance.

Research Objective:

Determine if students using online learning platforms achieve higher academic performance compared to those relying solely on traditional classroom instruction.

Data Collection:

- Quantitative Data: Academic scores (grades) of students using online platforms and those using traditional classroom methods.

- Qualitative Data: Feedback from students regarding their learning experiences, challenges faced, and preferences.

Data Analysis Techniques Applied:

1) Descriptive Analysis:

- Calculate the mean, median, and mode of academic scores for both groups.

- Create frequency distributions to represent the distribution of grades in each group.

2) Diagnostic Analysis:

- Conduct an Analysis of Variance (ANOVA) to determine if there’s a statistically significant difference in academic scores between the two groups.

- Perform Regression Analysis to assess the relationship between the time spent on online platforms and academic performance.

3) Predictive Analysis:

- Utilize Time Series Forecasting to predict future academic performance trends based on historical data.

- Implement Machine Learning algorithms to develop a predictive model that identifies factors contributing to academic success on online platforms.

4) Prescriptive Analysis:

- Apply Optimization Models to identify the optimal combination of online learning resources (e.g., video lectures, interactive quizzes) that maximize academic performance.

- Use Simulation Techniques to evaluate different scenarios, such as varying student engagement levels with online resources, to determine the most effective strategies for improving learning outcomes.

5) Specific Techniques:

- Conduct Factor Analysis on numerically coded feedback (for example, rating-scale items) to identify common factors influencing students’ perceptions and experiences with online learning.

- Perform Cluster Analysis to segment students based on their engagement levels, preferences, or academic outcomes, enabling targeted interventions or personalized learning strategies.

- Apply Sentiment Analysis on textual feedback to categorize students’ sentiments as positive, negative, or neutral regarding online learning experiences.

By applying a combination of qualitative and quantitative data analysis techniques, this research example aims to provide comprehensive insights into the effectiveness of online learning platforms.


Data Analysis Techniques in Quantitative Research

Quantitative research involves collecting numerical data to examine relationships, test hypotheses, and make predictions. Various data analysis techniques are employed to interpret and draw conclusions from quantitative data. Here are some key data analysis techniques commonly used in quantitative research:

1) Descriptive Statistics:

- Description: Descriptive statistics are used to summarize and describe the main aspects of a dataset, such as central tendency (mean, median, mode), variability (range, variance, standard deviation), and distribution (skewness, kurtosis).

- Applications: Summarizing data, identifying patterns, and providing initial insights into the dataset.

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. This technique includes hypothesis testing, confidence intervals, t-tests, chi-square tests, analysis of variance (ANOVA), regression analysis, and correlation analysis.

- Applications: Testing hypotheses, making predictions, and generalizing findings from a sample to a larger population.

3) Regression Analysis:

- Description: Regression analysis is a statistical technique used to model and examine the relationship between a dependent variable and one or more independent variables. Linear regression, multiple regression, logistic regression, and nonlinear regression are common types of regression analysis .

- Applications: Predicting outcomes, identifying relationships between variables, and understanding the impact of independent variables on the dependent variable.
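For the simplest case, linear regression with one independent variable, the least-squares line can be computed directly. This is a minimal sketch with made-up data (study hours against test scores); the `fit_line` helper is hypothetical, and multiple or logistic regression would normally be done with a statistics library.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by the variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: weekly study hours and resulting test scores.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

slope, intercept = fit_line(hours, scores)
predicted_for_6 = intercept + slope * 6

print(f"score = {intercept:.1f} + {slope:.1f} * hours")
print(f"predicted score for 6 hours: {predicted_for_6:.1f}")
```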

4) Correlation Analysis:

- Description: Correlation analysis is used to measure and assess the strength and direction of the relationship between two or more variables. The Pearson correlation coefficient, Spearman rank correlation coefficient, and Kendall’s tau are commonly used measures of correlation.

- Applications: Identifying associations between variables and assessing the degree and nature of the relationship.
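The Pearson coefficient mentioned above can be written out from its definition. The sketch below uses invented data; `pearson_r` is a hypothetical helper, and rank-based measures such as Spearman's are not covered here.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical paired observations.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

r = pearson_r(xs, ys)
print(f"r = {r:.3f}")  # positive: y tends to rise with x
```

The coefficient ranges from -1 (perfect negative linear relationship) through 0 (no linear relationship) to +1 (perfect positive linear relationship).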

5) Factor Analysis:

- Description: Factor analysis is a multivariate statistical technique used to identify and analyze underlying relationships or factors among a set of observed variables. It helps in reducing the dimensionality of data and identifying latent variables or constructs.

- Applications: Identifying underlying factors or constructs, simplifying data structures, and understanding the underlying relationships among variables.

6) Time Series Analysis:

- Description: Time series analysis involves analyzing data collected or recorded over a specific period at regular intervals to identify patterns, trends, and seasonality. Techniques such as moving averages, exponential smoothing, autoregressive integrated moving average (ARIMA), and Fourier analysis are used.

- Applications: Forecasting future trends, analyzing seasonal patterns, and understanding time-dependent relationships in data.
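Two of the smoothing techniques named above, moving averages and simple exponential smoothing, are short enough to sketch directly. The monthly figures, window size, and smoothing factor are assumptions for illustration; ARIMA and Fourier analysis need dedicated libraries and are not shown.

```python
def moving_average(series, window):
    """Average of each run of `window` consecutive values."""
    return [
        sum(series[i - window + 1:i + 1]) / window
        for i in range(window - 1, len(series))
    ]

def exponential_smoothing(series, alpha):
    """Simple exponential smoothing; higher alpha weights recent values more."""
    smoothed = [series[0]]  # start from the first observation
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed

# Hypothetical monthly sales figures.
monthly = [10, 12, 14, 13, 15, 18]

ma = moving_average(monthly, window=3)
es = exponential_smoothing(monthly, alpha=0.5)

print("moving average:", ma)
print("exponential smoothing:", es)
```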

7) ANOVA (Analysis of Variance):

- Description: Analysis of variance (ANOVA) is a statistical technique used to analyze and compare the means of two or more groups or treatments to determine if they are statistically different from each other. One-way ANOVA, two-way ANOVA, and MANOVA (Multivariate Analysis of Variance) are common types of ANOVA.

- Applications: Comparing group means, testing hypotheses, and determining the effects of categorical independent variables on a continuous dependent variable.
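The F statistic at the heart of one-way ANOVA compares variation between group means with variation within groups. The sketch below computes it from first principles on invented data. Turning F into a p-value requires the F distribution, which a statistics library provides (for instance, SciPy's `scipy.stats.f_oneway` performs the full test); that step is left out here.

```python
def one_way_anova_f(groups):
    """F statistic for a one-way ANOVA over a list of groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means)
    )
    # Variation of observations around their own group mean.
    ss_within = sum(
        (v - m) ** 2 for g, m in zip(groups, means) for v in g
    )

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

# Hypothetical scores under three treatments.
groups = [[4, 5, 6], [6, 7, 8], [9, 10, 11]]
f_stat = one_way_anova_f(groups)
print(f"F = {f_stat:.2f}")
```

A large F means the group means differ by more than the within-group scatter would suggest.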

8) Chi-Square Tests:

- Description: Chi-square tests are non-parametric statistical tests used to assess the association between categorical variables in a contingency table. The Chi-square test of independence, goodness-of-fit test, and test of homogeneity are common chi-square tests.

- Applications: Testing relationships between categorical variables, assessing goodness-of-fit, and evaluating independence.
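A chi-square test of independence compares the observed counts in a contingency table with the counts expected if the two variables were unrelated. The following sketch computes the statistic and degrees of freedom for a made-up 2x2 table; the function name is hypothetical, and the comparison against a critical value is done by hand rather than with a library.

```python
def chi_square_statistic(observed):
    """Chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)

    chi2 = 0.0
    for i, row in enumerate(observed):
        for j, obs in enumerate(row):
            # Expected count if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (obs - expected) ** 2 / expected

    dof = (len(observed) - 1) * (len(observed[0]) - 1)
    return chi2, dof

# Hypothetical table: rows = smoker / non-smoker, columns = exercises / does not.
observed = [
    [30, 20],
    [20, 30],
]

chi2, dof = chi_square_statistic(observed)
# 3.841 is the standard 5% critical value for 1 degree of freedom.
print(f"chi2 = {chi2:.2f}, dof = {dof}, significant at 5%: {chi2 > 3.841}")
```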

These quantitative data analysis techniques provide researchers with valuable tools and methods to analyze, interpret, and derive meaningful insights from numerical data. The selection of a specific technique often depends on the research objectives, the nature of the data, and the underlying assumptions of the statistical methods being used.


Data Analysis Methods

Data analysis methods refer to the techniques and procedures used to analyze, interpret, and draw conclusions from data. These methods are essential for transforming raw data into meaningful insights, facilitating decision-making processes, and driving strategies across various fields. Here are some common data analysis methods:

1) Descriptive Statistics:

- Description: Descriptive statistics summarize and organize data to provide a clear and concise overview of the dataset. Measures such as mean, median, mode, range, variance, and standard deviation are commonly used.

2) Inferential Statistics:

- Description: Inferential statistics involve making predictions or inferences about a population based on a sample of data. Techniques such as hypothesis testing, confidence intervals, and regression analysis are used.

3) Exploratory Data Analysis (EDA):

- Description: EDA techniques involve visually exploring and analyzing data to discover patterns, relationships, anomalies, and insights. Methods such as scatter plots, histograms, box plots, and correlation matrices are utilized.

- Applications: Identifying trends, patterns, outliers, and relationships within the dataset.

4) Predictive Analytics:

- Description: Predictive analytics use statistical algorithms and machine learning techniques to analyze historical data and make predictions about future events or outcomes. Techniques such as regression analysis, time series forecasting, and machine learning algorithms (e.g., decision trees, random forests, neural networks) are employed.

- Applications: Forecasting future trends, predicting outcomes, and identifying potential risks or opportunities.

5) Prescriptive Analytics:

- Description: Prescriptive analytics involve analyzing data to recommend actions or strategies that optimize specific objectives or outcomes. Optimization techniques, simulation models, and decision-making algorithms are utilized.

- Applications: Recommending optimal strategies, decision-making support, and resource allocation.

6) Qualitative Data Analysis:

- Description: Qualitative data analysis involves analyzing non-numerical data, such as text, images, videos, or audio, to identify themes, patterns, and insights. Methods such as content analysis, thematic analysis, and narrative analysis are used.

- Applications: Understanding human behavior, attitudes, perceptions, and experiences.

7) Big Data Analytics:

- Description: Big data analytics methods are designed to analyze large volumes of structured and unstructured data to extract valuable insights. Technologies such as Hadoop, Spark, and NoSQL databases are used to process and analyze big data.

- Applications: Analyzing large datasets, identifying trends, patterns, and insights from big data sources.

8) Text Analytics:

- Description: Text analytics methods involve analyzing textual data, such as customer reviews, social media posts, emails, and documents, to extract meaningful information and insights. Techniques such as sentiment analysis, text mining, and natural language processing (NLP) are used.

- Applications: Analyzing customer feedback, monitoring brand reputation, and extracting insights from textual data sources.

These data analysis methods are instrumental in transforming data into actionable insights, informing decision-making processes, and driving organizational success across various sectors, including business, healthcare, finance, marketing, and research. The selection of a specific method often depends on the nature of the data, the research objectives, and the analytical requirements of the project or organization.


Data Analysis Tools

Data analysis tools are essential instruments that facilitate the process of examining, cleaning, transforming, and modeling data to uncover useful information, make informed decisions, and drive strategies. Here are some prominent data analysis tools widely used across various industries:

1) Microsoft Excel:

- Description: A spreadsheet software that offers basic to advanced data analysis features, including pivot tables, data visualization tools, and statistical functions.

- Applications: Data cleaning, basic statistical analysis, visualization, and reporting.

2) R Programming Language:

- Description: An open-source programming language specifically designed for statistical computing and data visualization.

- Applications: Advanced statistical analysis, data manipulation, visualization, and machine learning.

3) Python (with Libraries like Pandas, NumPy, Matplotlib, and Seaborn):

- Description: A versatile programming language with libraries that support data manipulation, analysis, and visualization.

- Applications: Data cleaning, statistical analysis, machine learning, and data visualization.

4) SPSS (Statistical Package for the Social Sciences):

- Description: A comprehensive statistical software suite used for data analysis, data mining, and predictive analytics.

- Applications: Descriptive statistics, hypothesis testing, regression analysis, and advanced analytics.

5) SAS (Statistical Analysis System):

- Description: A software suite used for advanced analytics, multivariate analysis, and predictive modeling.

- Applications: Data management, statistical analysis, predictive modeling, and business intelligence.

6) Tableau:

- Description: A data visualization tool that allows users to create interactive and shareable dashboards and reports.

- Applications: Data visualization, business intelligence, and interactive dashboard creation.

7) Power BI:

- Description: A business analytics tool developed by Microsoft that provides interactive visualizations and business intelligence capabilities.

- Applications: Data visualization, business intelligence, reporting, and dashboard creation.

8) SQL (Structured Query Language) Databases (e.g., MySQL, PostgreSQL, Microsoft SQL Server):

- Description: Database management systems that support data storage, retrieval, and manipulation using SQL queries.

- Applications: Data retrieval, data cleaning, data transformation, and database management.

9) Apache Spark:

- Description: A fast and general-purpose distributed computing system designed for big data processing and analytics.

- Applications: Big data processing, machine learning, data streaming, and real-time analytics.

10) IBM SPSS Modeler:

- Description: A data mining software application used for building predictive models and conducting advanced analytics.

- Applications: Predictive modeling, data mining, statistical analysis, and decision optimization.

These tools serve various purposes and cater to different data analysis needs, from basic statistical analysis and data visualization to advanced analytics, machine learning, and big data processing. The choice of a specific tool often depends on the nature of the data, the complexity of the analysis, and the specific requirements of the project or organization.


Importance of Data Analysis in Research

The importance of data analysis in research cannot be overstated; it serves as the backbone of any scientific investigation or study. Here are several key reasons why data analysis is crucial in the research process:

- Data analysis helps ensure that the results obtained are valid and reliable. By systematically examining the data, researchers can identify any inconsistencies or anomalies that may affect the credibility of the findings.

- Effective data analysis provides researchers with the necessary information to make informed decisions. By interpreting the collected data, researchers can draw conclusions, make predictions, or formulate recommendations based on evidence rather than intuition or guesswork.

- Data analysis allows researchers to identify patterns, trends, and relationships within the data. This can lead to a deeper understanding of the research topic, enabling researchers to uncover insights that may not be immediately apparent.

- In empirical research, data analysis plays a critical role in testing hypotheses. Researchers collect data to either support or refute their hypotheses, and data analysis provides the tools and techniques to evaluate these hypotheses rigorously.

- Transparent and well-executed data analysis enhances the credibility of research findings. By clearly documenting the data analysis methods and procedures, researchers allow others to replicate the study, thereby contributing to the reproducibility of research findings.

- In fields such as business or healthcare, data analysis helps organizations allocate resources more efficiently. By analyzing data on consumer behavior, market trends, or patient outcomes, organizations can make strategic decisions about resource allocation, budgeting, and planning.

- In public policy and social sciences, data analysis is instrumental in developing and evaluating policies and interventions. By analyzing data on social, economic, or environmental factors, policymakers can assess the effectiveness of existing policies and inform the development of new ones.

- Data analysis allows for continuous improvement in research methods and practices. By analyzing past research projects, identifying areas for improvement, and implementing changes based on data-driven insights, researchers can refine their approaches and enhance the quality of future research endeavors.


Data Analysis Techniques in Research FAQs

What are the 5 techniques for data analysis?

The five techniques for data analysis are descriptive analysis, diagnostic analysis, predictive analysis, prescriptive analysis, and qualitative analysis.

What are techniques of data analysis in research?

Techniques of data analysis in research encompass both qualitative and quantitative methods. These techniques involve processes like summarizing raw data, investigating causes of events, forecasting future outcomes, offering recommendations based on predictions, and examining non-numerical data to understand concepts or experiences.

What are the 3 methods of data analysis?

The three primary methods of data analysis are qualitative analysis, quantitative analysis, and mixed-methods analysis.

What are the four types of data analysis techniques?

The four types of data analysis techniques are descriptive analysis, diagnostic analysis, predictive analysis, and prescriptive analysis.


The Beginner's Guide to Statistical Analysis | 5 Steps & Examples

Statistical analysis means investigating trends, patterns, and relationships using quantitative data. It is an important research tool used by scientists, governments, businesses, and other organizations.

To draw valid conclusions, statistical analysis requires careful planning from the very start of the research process. You need to specify your hypotheses and make decisions about your research design, sample size, and sampling procedure.

After collecting data from your sample, you can organize and summarize the data using descriptive statistics. Then, you can use inferential statistics to formally test hypotheses and make estimates about the population. Finally, you can interpret and generalize your findings.

This article is a practical introduction to statistical analysis for students and researchers. We’ll walk you through the steps using two research examples. The first investigates a potential cause-and-effect relationship, while the second investigates a potential correlation between variables.

Table of contents

- Step 1: Write your hypotheses and plan your research design
- Step 2: Collect data from a sample
- Step 3: Summarize your data with descriptive statistics
- Step 4: Test hypotheses or make estimates with inferential statistics
- Step 5: Interpret your results
- Other interesting articles

To collect valid data for statistical analysis, you first need to specify your hypotheses and plan out your research design.

Writing statistical hypotheses

The goal of research is often to investigate a relationship between variables within a population. You start with a prediction, and use statistical analysis to test that prediction.

A statistical hypothesis is a formal way of writing a prediction about a population. Every research prediction is rephrased into null and alternative hypotheses that can be tested using sample data.

While the null hypothesis always predicts no effect or no relationship between variables, the alternative hypothesis states your research prediction of an effect or relationship.

- Null hypothesis: A 5-minute meditation exercise will have no effect on math test scores in teenagers.

- Alternative hypothesis: A 5-minute meditation exercise will improve math test scores in teenagers.

- Null hypothesis: Parental income and GPA have no relationship with each other in college students.

- Alternative hypothesis: Parental income and GPA are positively correlated in college students.

Planning your research design

A research design is your overall strategy for data collection and analysis. It determines the statistical tests you can use to test your hypothesis later on.

First, decide whether your research will use a descriptive, correlational, or experimental design. Experiments directly influence variables, whereas descriptive and correlational studies only measure variables.

- In an experimental design, you can assess a cause-and-effect relationship (e.g., the effect of meditation on test scores) using statistical tests of comparison or regression.

- In a correlational design, you can explore relationships between variables (e.g., parental income and GPA) without any assumption of causality using correlation coefficients and significance tests.

- In a descriptive design, you can study the characteristics of a population or phenomenon (e.g., the prevalence of anxiety in U.S. college students) using statistical tests to draw inferences from sample data.

Your research design also concerns whether you’ll compare participants at the group level or individual level, or both.

- In a between-subjects design, you compare the group-level outcomes of participants who have been exposed to different treatments (e.g., those who performed a meditation exercise vs those who didn’t).

- In a within-subjects design, you compare repeated measures from participants who have participated in all treatments of a study (e.g., scores from before and after performing a meditation exercise).

- In a mixed (factorial) design, one variable is altered between subjects and another is altered within subjects (e.g., pretest and posttest scores from participants who either did or didn’t do a meditation exercise).

First, you’ll take baseline test scores from participants. Then, your participants will undergo a 5-minute meditation exercise. Finally, you’ll record participants’ scores from a second math test.

In this experiment, the independent variable is the 5-minute meditation exercise, and the dependent variable is the math test score from before and after the intervention.

Example: Correlational research design

In a correlational study, you test whether there is a relationship between parental income and GPA in graduating college students. To collect your data, you will ask participants to fill in a survey and self-report their parents’ incomes and their own GPA.

Measuring variables

When planning a research design, you should operationalize your variables and decide exactly how you will measure them.

For statistical analysis, it’s important to consider the level of measurement of your variables, which tells you what kind of data they contain:

- Categorical data represents groupings. These may be nominal (e.g., gender) or ordinal (e.g., level of language ability).

- Quantitative data represents amounts. These may be on an interval scale (e.g., test score) or a ratio scale (e.g., age).

Many variables can be measured at different levels of precision. For example, age data can be quantitative (8 years old) or categorical (young). If a variable is coded numerically (e.g., level of agreement from 1–5), it doesn’t automatically mean that it’s quantitative instead of categorical.

Identifying the measurement level is important for choosing appropriate statistics and hypothesis tests. For example, you can calculate a mean score with quantitative data, but not with categorical data.

In a research study, along with measures of your variables of interest, you’ll often collect data on relevant participant characteristics.

| Variable | Type of data |
|---|---|
| Age | Quantitative (ratio) |
| Gender | Categorical (nominal) |
| Race or ethnicity | Categorical (nominal) |
| Baseline test scores | Quantitative (interval) |
| Final test scores | Quantitative (interval) |
| Parental income | Quantitative (ratio) |
| GPA | Quantitative (interval) |


In most cases, it’s too difficult or expensive to collect data from every member of the population you’re interested in studying. Instead, you’ll collect data from a sample.

Statistical analysis allows you to apply your findings beyond your own sample as long as you use appropriate sampling procedures. You should aim for a sample that is representative of the population.

Sampling for statistical analysis

There are two main approaches to selecting a sample.

- Probability sampling: every member of the population has a chance of being selected for the study through random selection.

- Non-probability sampling: some members of the population are more likely than others to be selected for the study because of criteria such as convenience or voluntary self-selection.

In theory, for highly generalizable findings, you should use a probability sampling method. Random selection reduces several types of research bias, like sampling bias, and makes it more likely that data from your sample is typical of the population. Parametric tests can be used to make strong statistical inferences when data are collected using probability sampling.

But in practice, it’s rarely possible to gather the ideal sample. While non-probability samples are more likely to be at risk for biases like self-selection bias, they are much easier to recruit and collect data from. Non-parametric tests are more appropriate for non-probability samples, but they result in weaker inferences about the population.

If you want to use parametric tests for non-probability samples, you have to make the case that:

- your sample is representative of the population you’re generalizing your findings to.

- your sample lacks systematic bias.

Keep in mind that external validity means that you can only generalize your conclusions to others who share the characteristics of your sample. For instance, results from Western, Educated, Industrialized, Rich and Democratic samples (e.g., college students in the US) aren’t automatically applicable to all non-WEIRD populations.

If you apply parametric tests to data from non-probability samples, be sure to elaborate on the limitations of how far your results can be generalized in your discussion section.

Create an appropriate sampling procedure

Based on the resources available for your research, decide on how you’ll recruit participants.

- Will you have resources to advertise your study widely, including outside of your university setting?

- Will you have the means to recruit a diverse sample that represents a broad population?

- Do you have time to contact and follow up with members of hard-to-reach groups?

Your participants are self-selected by their schools. Although you’re using a non-probability sample, you aim for a diverse and representative sample.

Example: Sampling (correlational study)

Your main population of interest is male college students in the US. Using social media advertising, you recruit senior-year male college students from a smaller subpopulation: seven universities in the Boston area.

Calculate sufficient sample size

Before recruiting participants, decide on your sample size either by looking at other studies in your field or using statistics. A sample that’s too small may be unrepresentative of the population, while a sample that’s too large will be more costly than necessary.

There are many sample size calculators online. Different formulas are used depending on whether you have subgroups or how rigorous your study should be (e.g., in clinical research). As a rule of thumb, at least 30 units per subgroup are recommended.

To use these calculators, you have to understand and input these key components:

- Significance level (alpha): the risk of rejecting a true null hypothesis that you are willing to take, usually set at 5%.

- Statistical power: the probability of your study detecting an effect of a certain size if there is one, usually 80% or higher.

- Expected effect size: a standardized indication of how large the expected result of your study will be, usually based on other similar studies.

- Population standard deviation: an estimate of the population parameter based on a previous study or a pilot study of your own.
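To show how these components fit together, here is a minimal sketch of one common textbook approximation: the per-group sample size for a two-sided comparison of two group means, using normal quantiles. The function name and defaults are assumptions for illustration. Online calculators may use different formulas (for example, t-based corrections), so treat the result as a ballpark figure. The effect size here is standardized, meaning the expected raw difference divided by the population standard deviation.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-group comparison of means.

    effect_size is standardized: (expected mean difference) / (population SD).
    Uses the normal approximation n = 2 * ((z_alpha + z_power) / d) ** 2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = NormalDist().inv_cdf(power)          # quantile for desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# A medium standardized effect (0.5) at alpha = 5% and power = 80%.
n_medium = sample_size_per_group(0.5)  # 63 per group under this approximation
n_large = sample_size_per_group(0.8)   # 25 per group
```

Smaller expected effects, stricter significance levels, and higher power all push the required sample size up.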

Once you’ve collected all of your data, you can inspect them and calculate descriptive statistics that summarize them.

Inspect your data

There are various ways to inspect your data, including the following:

- Organizing data from each variable in frequency distribution tables.

- Displaying data from a key variable in a bar chart to view the distribution of responses.

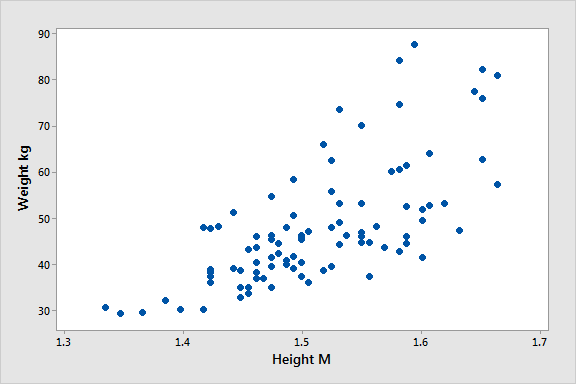

- Visualizing the relationship between two variables using a scatter plot.

By visualizing your data in tables and graphs, you can assess whether your data follow a skewed or normal distribution and whether there are any outliers or missing data.

A normal distribution means that your data are symmetrically distributed around a center where most values lie, with the values tapering off at the tail ends.

In contrast, a skewed distribution is asymmetric and has more values on one end than the other. The shape of the distribution is important to keep in mind because only some descriptive statistics should be used with skewed distributions.

Extreme outliers can also produce misleading statistics, so you may need a systematic approach to dealing with these values.
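One possible systematic approach is sketched below: build a frequency table, then flag values that fall more than 1.5 interquartile ranges outside the quartiles. The 1.5 × IQR rule is a common convention chosen here for illustration, not a method prescribed by this guide, and the `responses` data are made up.

```python
from collections import Counter
from statistics import quantiles

# Hypothetical responses for a single variable.
responses = [3, 4, 4, 5, 5, 5, 6, 6, 7, 20]

# Frequency distribution: how often each value occurs.
frequency_table = Counter(responses)

# Quartiles (Python's default "exclusive" method) and the IQR fences.
q1, _, q3 = quantiles(responses, n=4)
iqr = q3 - q1
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr

# Values outside the fences are candidates for closer inspection.
outliers = [x for x in responses if x < lower_fence or x > upper_fence]
# outliers is [20]
```

Flagged values are candidates for review rather than automatic removal. Whether to keep, transform, or exclude them depends on why they occurred.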

Calculate measures of central tendency

Measures of central tendency describe where most of the values in a data set lie. Three main measures of central tendency are often reported:

- Mode: the most popular response or value in the data set.

- Median: the value in the exact middle of the data set when ordered from low to high.

- Mean: the sum of all values divided by the number of values.

However, depending on the shape of the distribution and level of measurement, only one or two of these measures may be appropriate. For example, many demographic characteristics can only be described using the mode or proportions, while a variable like reaction time may not have a mode at all.
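The three measures can be computed with Python's standard `statistics` module. The `scores` below are invented, with one deliberately high value to show how the mean is pulled toward an extreme score while the median is not.

```python
from statistics import mean, median, multimode

# Hypothetical test scores; 98 is much higher than the rest.
scores = [60, 65, 65, 70, 72, 75, 98]

score_mode = multimode(scores)  # [65]; returns every value tied for most frequent
score_median = median(scores)   # 70, the middle value of the sorted data
score_mean = mean(scores)       # about 72.14, pulled upward by the 98
```

`multimode` returns a list, which handles data with several equally common values, or with no repeated values at all.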

Calculate measures of variability

Measures of variability tell you how spread out the values in a data set are. Four main measures of variability are often reported:

- Range: the highest value minus the lowest value of the data set.

- Interquartile range: the range of the middle half of the data set.

- Standard deviation: the average distance between each value in your data set and the mean.

- Variance: the square of the standard deviation.

Once again, the shape of the distribution and level of measurement should guide your choice of variability statistics. The interquartile range is the best measure for skewed distributions, while standard deviation and variance provide the best information for normal distributions.
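A short sketch of the four measures using the standard library follows. The `scores` are invented. Quartile conventions differ between tools, so the interquartile range here (Python's default "exclusive" method) may not match other software exactly. `stdev` and `variance` are the sample versions, which divide by n − 1.

```python
from statistics import quantiles, stdev, variance

# Hypothetical test scores.
scores = [60, 65, 65, 70, 72, 75, 98]

value_range = max(scores) - min(scores)  # 38

q1, _, q3 = quantiles(scores, n=4)       # quartiles, default "exclusive" method
iqr = q3 - q1                            # 10.0, the spread of the middle half

sd = stdev(scores)                       # sample standard deviation
var = variance(scores)                   # sample variance, equal to sd squared
```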

Using your table, you should check whether the units of the descriptive statistics are comparable for pretest and posttest scores. For example, are the variance levels similar across the groups? Are there any extreme values? If there are, you may need to identify and remove extreme outliers in your data set or transform your data before performing a statistical test.

| | Pretest scores | Posttest scores |
|---|---|---|
| Mean | 68.44 | 75.25 |
| Standard deviation | 9.43 | 9.88 |
| Variance | 88.96 | 97.96 |
| Range | 36.25 | 45.12 |
| Sample size (n) | 30 | 30 |

From this table, we can see that the mean score increased after the meditation exercise, and the variances of the two scores are comparable. Next, we can perform a statistical test to find out if this improvement in test scores is statistically significant in the population.

Example: Descriptive statistics (correlational study)

After collecting data from 653 students, you tabulate descriptive statistics for annual parental income and GPA.

It’s important to check whether you have a broad range of data points. If you don’t, your data may be skewed towards some groups more than others (e.g., high academic achievers), and only limited inferences can be made about a relationship.

| | Parental income (USD) | GPA |
|---|---|---|
| Mean | 62,100 | 3.12 |
| Standard deviation | 15,000 | 0.45 |
| Variance | 225,000,000 | 0.16 |
| Range | 8,000–378,000 | 2.64–4.00 |
| Sample size (n) | 653 | 653 |

A number that describes a sample is called a statistic, while a number describing a population is called a parameter. Using inferential statistics, you can make conclusions about population parameters based on sample statistics.

Researchers often use two main methods (simultaneously) to make inferences in statistics.

- Estimation: estimating population parameters based on sample statistics.

- Hypothesis testing: a formal process for testing research predictions about the population using samples.

You can make two types of estimates of population parameters from sample statistics:

- A point estimate: a value that represents your best guess of the exact parameter.

- An interval estimate: a range of values that represent your best guess of where the parameter lies.

If your aim is to infer and report population characteristics from sample data, it’s best to use both point and interval estimates in your paper.

You can consider a sample statistic a point estimate for the population parameter when you have a representative sample (e.g., in a wide public opinion poll, the proportion of a sample that supports the current government is taken as the population proportion of government supporters).

There’s always error involved in estimation, so you should also provide a confidence interval as an interval estimate to show the variability around a point estimate.

A confidence interval uses the standard error and the z score from the standard normal distribution to convey where you’d generally expect to find the population parameter most of the time.
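As a minimal sketch of that calculation, the function below builds a z-based confidence interval for a mean from a sample mean, sample standard deviation, and sample size. The function name is hypothetical. The example reuses the posttest mean and standard deviation from the descriptive statistics table and assumes a sample size of 30. With samples this small, a t-based interval is often preferred and would be slightly wider.

```python
from math import sqrt
from statistics import NormalDist


def z_confidence_interval(sample_mean, sample_sd, n, confidence=0.95):
    """Return (lower, upper) for the mean using the normal approximation."""
    standard_error = sample_sd / sqrt(n)
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * standard_error
    return sample_mean - margin, sample_mean + margin


# Posttest summary values; n = 30 is an assumption for this example.
lower, upper = z_confidence_interval(75.25, 9.88, 30)
# roughly (71.7, 78.8)
```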

Hypothesis testing

Using data from a sample, you can test hypotheses about relationships between variables in the population. Hypothesis testing starts with the assumption that the null hypothesis is true in the population, and you use statistical tests to assess whether the null hypothesis can be rejected or not.

Statistical tests determine where your sample data would lie on an expected distribution of sample data if the null hypothesis were true. These tests give two main outputs:

- A test statistic tells you how much your data differs from the null hypothesis of the test.

- A p value tells you the probability of obtaining results at least as extreme as yours if the null hypothesis is actually true in the population.

Statistical tests come in three main varieties:

- Comparison tests assess group differences in outcomes.

- Regression tests assess cause-and-effect relationships between variables.

- Correlation tests assess relationships between variables without assuming causation.

Your choice of statistical test depends on your research questions, research design, sampling method, and data characteristics.

Parametric tests

Parametric tests make powerful inferences about the population based on sample data. But to use them, some assumptions must be met, and only some types of variables can be used. If your data violate these assumptions, you can perform appropriate data transformations or use alternative non-parametric tests instead.

A regression models the extent to which changes in a predictor variable result in changes in outcome variable(s).

- A simple linear regression includes one predictor variable and one outcome variable.

- A multiple linear regression includes two or more predictor variables and one outcome variable.
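For the simple (one-predictor) case, the least-squares slope and intercept can be sketched in a few lines. The function name and the data are hypothetical, and the sketch stops at fitting the line. It does not test whether the slope is statistically significant.

```python
from statistics import mean


def simple_linear_regression(x, y):
    """Return (slope, intercept) of the least-squares line y = intercept + slope * x."""
    mean_x, mean_y = mean(x), mean(y)
    # Slope: co-variation of x and y divided by the variation in x.
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    # The fitted line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical predictor and outcome values.
hours = [1, 2, 3, 4, 5]
score = [2, 4, 5, 4, 5]
slope, intercept = simple_linear_regression(hours, score)
# slope is 0.6 and intercept is 2.2 (up to floating-point rounding)
```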

Comparison tests usually compare the means of groups. These may be the means of different groups within a sample (e.g., a treatment and control group), the means of one sample group taken at different times (e.g., pretest and posttest scores), or a sample mean and a population mean.

- A t test is for exactly 1 or 2 groups when the sample is small (30 or less).

- A z test is for exactly 1 or 2 groups when the sample is large.

- An ANOVA is for 3 or more groups.

The z and t tests have subtypes based on the number and types of samples and the hypotheses:

- If you have only one sample that you want to compare to a population mean, use a one-sample test.

- If you have paired measurements (within-subjects design), use a dependent (paired) samples test.

- If you have completely separate measurements from two unmatched groups (between-subjects design), use an independent (unpaired) samples test.

- If you expect a difference between groups in a specific direction, use a one-tailed test.

- If you don’t have any expectations for the direction of a difference between groups, use a two-tailed test.
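As an illustration of the dependent (paired) samples case, the sketch below computes the t statistic from the per-participant differences. The function name and the five pairs of scores are invented. Converting t into a p value requires the t distribution with n − 1 degrees of freedom, which is not part of Python's standard library, so that step is left out.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(before, after):
    """Return (t, degrees_of_freedom) for paired measurements."""
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    # Work on the within-participant differences.
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)
    return mean(diffs) / standard_error, n - 1


# Hypothetical pretest and posttest scores for five participants.
pretest = [60, 62, 70, 75, 80]
posttest = [64, 65, 72, 80, 81]
t_stat, df = paired_t_statistic(pretest, posttest)
# t_stat is about 4.24 with 4 degrees of freedom
```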

The only parametric correlation test is Pearson’s r. The correlation coefficient (r) tells you the strength of a linear relationship between two quantitative variables.

However, to test whether the correlation in the sample is strong enough to be important in the population, you also need to perform a significance test of the correlation coefficient, usually a t test, to obtain a p value. This test uses your sample size to calculate how much the correlation coefficient differs from zero in the population.
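The two steps can be sketched as follows: compute Pearson's r, then convert it to a t statistic with n − 2 degrees of freedom using t = r · sqrt((n − 2) / (1 − r²)). The function names and data are hypothetical, and the p value lookup is omitted because it needs the t distribution.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x, mean_y = mean(x), mean(y)
    co_variation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return co_variation / (spread_x * spread_y)


def correlation_t_statistic(r, n):
    """t statistic (n - 2 degrees of freedom) for testing whether r differs from 0."""
    return r * sqrt((n - 2) / (1 - r ** 2))


# Hypothetical paired observations.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)                       # about 0.77
t_stat = correlation_t_statistic(r, len(x))  # about 2.12
```

Because the sample size enters the formula, the same r yields a larger t statistic in a larger sample.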

You use a dependent-samples, one-tailed t test to assess whether the meditation exercise significantly improved math test scores. The test gives you:

- a t value (test statistic) of 3.00

- a p value of 0.0028

Although Pearson’s r is a test statistic, it doesn’t tell you anything about how significant the correlation is in the population. You also need to test whether this sample correlation coefficient is large enough to demonstrate a correlation in the population.

A t test can also determine how significantly a correlation coefficient differs from zero based on sample size. Since you expect a positive correlation between parental income and GPA, you use a one-sample, one-tailed t test. The t test gives you:

- a t value of 3.08

- a p value of 0.001


The final step of statistical analysis is interpreting your results.

Statistical significance

In hypothesis testing, statistical significance is the main criterion for forming conclusions. You compare your p value to a set significance level (usually 0.05) to decide whether your results are statistically significant or non-significant.

Statistically significant results are considered unlikely to have arisen solely due to chance. There is only a very low chance of such a result occurring if the null hypothesis is true in the population.
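The decision rule described here is simple enough to write down directly. The sketch below applies it to the two p values quoted in the examples (0.0028 and 0.001); the function name `decide` is hypothetical, and the strict "less than" comparison is an assumption, since some texts use "less than or equal to".

```python
ALPHA = 0.05  # significance level, set before looking at the data


def decide(p_value, alpha=ALPHA):
    """Compare a p value to the significance level and state the decision."""
    if p_value < alpha:
        return "reject the null hypothesis"
    return "fail to reject the null hypothesis"


for label, p in [("experimental study", 0.0028), ("correlational study", 0.001)]:
    print(f"{label}: p = {p} -> {decide(p)}")
```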

This means that you believe the meditation intervention, rather than random factors, directly caused the increase in test scores.

Example: Interpret your results (correlational study)

You compare your p value of 0.001 to your significance threshold of 0.05. With a p value under this threshold, you can reject the null hypothesis. This indicates a statistically significant correlation between parental income and GPA in male college students.

Note that correlation doesn’t always mean causation, because there are often many underlying factors contributing to a complex variable like GPA. Even if one variable is related to another, this may be because of a third variable influencing both of them, or indirect links between the two variables.

Effect size

A statistically significant result doesn’t necessarily mean that there are important real life applications or clinical outcomes for a finding.

In contrast, the effect size indicates the practical significance of your results. It’s important to report effect sizes along with your inferential statistics for a complete picture of your results. You should also report interval estimates of effect sizes if you’re writing an APA style paper.

With a Cohen’s d of 0.72, there’s medium to high practical significance to your finding that the meditation exercise improved test scores.

Example: Effect size (correlational study)

To determine the effect size of the correlation coefficient, you compare your Pearson’s r value to Cohen’s effect size criteria.
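Both effect size measures mentioned above can be sketched in a few lines. The version of Cohen's d below uses the pooled standard deviation of two groups, which is one common definition and not necessarily the one behind the 0.72 quoted above. The labels for r follow Cohen's conventional benchmarks (0.1 small, 0.3 medium, 0.5 large). The data and function names are hypothetical.

```python
import math
import statistics


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    n1, n2 = len(group_a), len(group_b)
    pooled_sd = math.sqrt(
        (
            (n1 - 1) * statistics.variance(group_a)
            + (n2 - 1) * statistics.variance(group_b)
        )
        / (n1 + n2 - 2)
    )
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd


def label_r(r):
    """Label a correlation using Cohen's conventional benchmarks."""
    size = abs(r)
    if size >= 0.5:
        return "large"
    if size >= 0.3:
        return "medium"
    if size >= 0.1:
        return "small"
    return "negligible"


# Made-up scores, for illustration only
treatment = [2, 4, 6]
control = [1, 3, 5]
print(f"d = {cohens_d(treatment, control):.2f}")
print(f"r = 0.35 is a {label_r(0.35)} effect")
```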

Decision errors

Type I and Type II errors are mistakes made in research conclusions. A Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s false.

You can aim to minimize the risk of these errors by selecting an optimal significance level and ensuring high power. However, there’s a trade-off between the two errors, so a fine balance is necessary.
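The trade-off can be made visible with a small calculation. The sketch below assumes a one-tailed, one-sample z test with a known standard deviation, a standardized effect size of 0.3 and a sample of 50; all three are arbitrary choices for illustration. It shows the Type II error risk (1 minus power) rising as the significance level, which is the Type I error risk, is tightened.

```python
import math
from statistics import NormalDist


def power_one_tailed_z(effect_size, n, alpha):
    """Power of a one-tailed, one-sample z test for a standardized effect size."""
    z_crit = NormalDist().inv_cdf(1 - alpha)
    return 1 - NormalDist().cdf(z_crit - effect_size * math.sqrt(n))


for alpha in (0.10, 0.05, 0.01):
    power = power_one_tailed_z(effect_size=0.3, n=50, alpha=alpha)
    print(f"alpha = {alpha:.2f}  power = {power:.3f}  Type II risk = {1 - power:.3f}")
```

Increasing the sample size is the usual way to raise power without loosening the significance level.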

Frequentist versus Bayesian statistics

Traditionally, frequentist statistics emphasizes null hypothesis significance testing and always starts with the assumption of a true null hypothesis.

However, Bayesian statistics has grown in popularity as an alternative approach in the last few decades. In this approach, you use previous research to continually update your hypotheses based on your expectations and observations.

The Bayes factor compares the relative strength of evidence for the null versus the alternative hypothesis, rather than leading to a decision about whether or not to reject the null hypothesis.
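As a toy illustration of a Bayes factor, consider binomial data (say, successes out of a number of trials). The sketch below compares a null hypothesis that the success rate is exactly 0.5 against an alternative that puts a uniform prior on the rate; under that prior the marginal likelihood of any outcome is 1 / (trials + 1). The scenario, the choice of prior and the function name are all assumptions made for the example.

```python
from math import comb


def bayes_factor_10(successes, trials):
    """BF10 for H1: rate ~ Uniform(0, 1) versus H0: rate = 0.5, given binomial data."""
    likelihood_h0 = comb(trials, successes) * 0.5 ** trials
    marginal_h1 = 1 / (trials + 1)  # binomial likelihood averaged over the uniform prior
    return marginal_h1 / likelihood_h0


print(f"5 of 10:  BF10 = {bayes_factor_10(5, 10):.2f}")
print(f"10 of 10: BF10 = {bayes_factor_10(10, 10):.2f}")
```

A value below 1 means the data favour the null hypothesis, and a value above 1 means they favour the alternative, so the result is a graded measure of evidence rather than a reject or fail-to-reject decision.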


Quantitative Data Analysis 101

The lingo, methods and techniques, explained simply.

By: Derek Jansen (MBA) and Kerryn Warren (PhD) | December 2020

Quantitative data analysis is one of those things that often strikes fear in students. It’s totally understandable – quantitative analysis is a complex topic, full of daunting lingo, like medians, modes, correlation and regression. Suddenly we’re all wishing we’d paid a little more attention in math class…

The good news is that while quantitative data analysis is a mammoth topic, gaining a working understanding of the basics isn’t that hard, even for those of us who avoid numbers and math. In this post, we’ll break quantitative analysis down into simple, bite-sized chunks so you can approach your research with confidence.

Overview: Quantitative Data Analysis 101

- What (exactly) is quantitative data analysis?

- When to use quantitative analysis

- How quantitative analysis works

- The two “branches” of quantitative analysis

- Descriptive statistics 101

- Inferential statistics 101

- How to choose the right quantitative methods

- Recap & summary

What is quantitative data analysis?

Despite being a mouthful, quantitative data analysis simply means analysing data that is numbers-based – or data that can be easily “converted” into numbers without losing any meaning.

For example, category-based variables like gender, ethnicity, or native language could all be “converted” into numbers without losing meaning – for example, English could equal 1, French 2, etc.
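Here's a minimal sketch of that kind of conversion. The survey responses are made up, and the codes are simply assigned in order of first appearance, so English becomes 1 and French becomes 2 as in the example above.

```python
# Made-up survey responses, for illustration only
responses = ["English", "French", "English", "Spanish", "French"]

# Assign each category a code in order of first appearance
codes = {}
for language in responses:
    codes.setdefault(language, len(codes) + 1)

encoded = [codes[language] for language in responses]

# The mapping is reversible, so no meaning is lost
labels = {code: language for language, code in codes.items()}
decoded = [labels[code] for code in encoded]

print(codes)
print(encoded)
print(decoded == responses)
```

Keep in mind that these numbers are just labels. Counting how often each code occurs makes sense, but averaging them doesn't.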

This contrasts with qualitative data analysis, where the focus is on words, phrases and expressions that can’t be reduced to numbers. If you’re interested in learning about qualitative analysis, check out our post and video here.

What is quantitative analysis used for?

Quantitative analysis is generally used for three purposes.

- Firstly, it’s used to measure differences between groups. For example, the popularity of different clothing colours or brands.

- Secondly, it’s used to assess relationships between variables. For example, the relationship between weather temperature and voter turnout.

- Thirdly, it’s used to test hypotheses in a scientifically rigorous way. For example, a hypothesis about the impact of a certain vaccine.

Again, this contrasts with qualitative analysis, which can be used to analyse people’s perceptions and feelings about an event or situation. In other words, things that can’t be reduced to numbers.

How does quantitative analysis work?

Well, since quantitative data analysis is all about analysing numbers, it’s no surprise that it involves statistics. Statistical analysis methods form the engine that powers quantitative analysis, and these methods can vary from pretty basic calculations (for example, averages and medians) to more sophisticated analyses (for example, correlations and regressions).

Sounds like gibberish? Don’t worry. We’ll break down all the technical mumbo jumbo in this post. Importantly, you don’t need to be a statistician or math whiz to pull off a good quantitative analysis.
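To show just how basic the "basic calculations" can be, here's a quick sketch of an average (mean) and a median using Python's built-in `statistics` module. The numbers are made up, with one deliberately extreme value.

```python
import statistics

# Made-up data with one extreme value, for illustration only
hours_online = [2, 3, 3, 4, 100]

average = statistics.mean(hours_online)
median = statistics.median(hours_online)

print(f"average = {average}, median = {median}")
```

The single extreme value pulls the average far above the typical response, while the median stays put. That's a small example of why it helps to know more than one way to summarise your numbers.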


As I mentioned, quantitative analysis is powered by statistical analysis methods. There are two main “branches” of statistical methods that are used – descriptive statistics and inferential statistics. In your research, you might only use descriptive statistics, or you might use a mix of both, depending on what you’re trying to figure out. In other words, depending on your research questions, aims and objectives. I’ll explain how to choose your methods later.

So, what are descriptive and inferential statistics?

Well, before I can explain that, we need to take a quick detour to explain some lingo. To understand the difference between these two branches of statistics, you need to understand two important words. These words are population and sample.

First up, population. In statistics, the population is the entire group of people (or animals or organisations or whatever) that you’re interested in researching. For example, if you were interested in researching Tesla owners in the US, then the population would be all Tesla owners in the US.

However, it’s extremely unlikely that you’re going to be able to interview or survey every single Tesla owner in the US. Realistically, you’ll likely only get access to a few hundred, or maybe a few thousand owners using an online survey. This smaller group of accessible people whose data you actually collect is called your sample.

So, to recap – the population is the entire group of people you’re interested in, and the sample is the subset of the population that you can actually get access to. In other words, the population is the full chocolate cake, whereas the sample is a slice of that cake.
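If it helps to see the cake and the slice in code, here's a small simulation. Everything in it is invented: the "population" is 100,000 randomly generated values standing in for something like annual kilometres driven per owner, and the sample is 500 of them picked at random.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable

# Invented population: one value per owner (e.g. annual kilometres driven)
population = [random.gauss(20_000, 5_000) for _ in range(100_000)]

# The sample: the 500 owners we actually managed to survey
sample = random.sample(population, 500)

print(f"population mean: {statistics.mean(population):,.0f}")
print(f"sample mean:     {statistics.mean(sample):,.0f}")
```

The two means come out close but not identical. In real research you only ever see the sample's number, while the population's number is the one you actually care about.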

So, why is this sample-population thing important?