Reliability vs. Validity in Research | Difference, Types and Examples

Published on July 3, 2019 by Fiona Middleton. Revised on June 22, 2023.

Reliability and validity are concepts used to evaluate the quality of research. They indicate how well a method, technique, or test measures something. Reliability is about the consistency of a measure, and validity is about the accuracy of a measure.

It’s important to consider reliability and validity when you are creating your research design, planning your methods, and writing up your results, especially in quantitative research. Failing to do so can lead to several types of research bias and seriously affect your work.

| | Reliability | Validity |
|---|---|---|
| What does it tell you? | The extent to which the results can be reproduced when the research is repeated under the same conditions. | The extent to which the results really measure what they are supposed to measure. |
| How is it assessed? | By checking the consistency of results across time, across different observers, and across parts of the test itself. | By checking how well the results correspond to established theories and other measures of the same concept. |
| How do they relate? | A reliable measurement is not always valid: the results might be reproducible, but they’re not necessarily correct. | A valid measurement is generally reliable: if a test produces accurate results, they should be reproducible. |

Table of contents

- Understanding reliability vs validity
- How are reliability and validity assessed
- How to ensure validity and reliability in your research
- Where to write about reliability and validity in a thesis
- Other interesting articles

Reliability and validity are closely related, but they mean different things. A measurement can be reliable without being valid. However, if a measurement is valid, it is usually also reliable.

What is reliability?

Reliability refers to how consistently a method measures something. If the same result can be consistently achieved by using the same methods under the same circumstances, the measurement is considered reliable.

What is validity?

Validity refers to how accurately a method measures what it is intended to measure. If research has high validity, that means it produces results that correspond to real properties, characteristics, and variations in the physical or social world.

High reliability is one indicator that a measurement is valid. If a method is not reliable, it probably isn’t valid.

For example, if a thermometer shows different temperatures each time you measure a sample, even though you have carefully controlled conditions to ensure the sample’s temperature stays the same, the thermometer is probably malfunctioning, and therefore its measurements are not valid.

However, reliability on its own is not enough to ensure validity. Even if a test is reliable, it may not accurately reflect the real situation.

Validity is harder to assess than reliability, but it is even more important. To obtain useful results, the methods you use to collect data must be valid: the research must be measuring what it claims to measure. This ensures that your discussion of the data and the conclusions you draw are also valid.

Reliability can be estimated by comparing different versions of the same measurement. Validity is harder to assess, but it can be estimated by comparing the results to other relevant data or theory. Methods of estimating reliability and validity are usually split up into different types.

Types of reliability

Different types of reliability can be estimated through various statistical methods.

| Type of reliability | What does it assess? | Example |
|---|---|---|
| Test-retest | The consistency of a measure across time: do you get the same results when you repeat the measurement? | A group of participants complete a questionnaire designed to measure personality traits. If they repeat the questionnaire days, weeks or months apart and give the same answers, this indicates high test-retest reliability. |
| Inter-rater | The consistency of a measure across raters or observers: do you get the same results when different people conduct the same measurement? | Based on an assessment criteria checklist, five examiners submit substantially different results for the same student project. This indicates that the assessment checklist has low inter-rater reliability (for example, because the criteria are too subjective). |
| Internal consistency | The consistency of the measurement itself: do you get the same results from different parts of a test that are designed to measure the same thing? | You design a questionnaire to measure self-esteem. If you randomly split the results into two halves, there should be a strong correlation between the two sets of results. If the two results are very different, this indicates low internal consistency. |
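As a rough illustration of the statistical methods mentioned above, test-retest reliability is often estimated with a correlation between two administrations of the same measure, and internal consistency with a split-half correlation. The sketch below is only one possible approach: the function names and the scores are hypothetical, the odd/even item split stands in for the random split described in the table, and the Spearman-Brown correction is a common adjustment that the article itself does not mention.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def split_half_reliability(item_scores):
    """Split-half estimate with the Spearman-Brown correction.

    item_scores holds one list of item scores per participant.
    Assumption: items are split into odd- and even-numbered halves
    rather than at random.
    """
    first_half = [sum(row[0::2]) for row in item_scores]
    second_half = [sum(row[1::2]) for row in item_scores]
    r = pearson_r(first_half, second_half)
    return 2 * r / (1 + r)


# Hypothetical questionnaire totals for five participants, measured twice.
time_1 = [10, 12, 15, 20, 22]
time_2 = [11, 12, 16, 19, 23]
test_retest = pearson_r(time_1, time_2)
print(f"test-retest correlation: {test_retest:.2f}")
```

A correlation close to 1 would suggest that participants are ranked similarly on both occasions; what counts as "high enough" depends on the field and the instrument.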

Types of validity

The validity of a measurement can be estimated based on three main types of evidence. Each type can be evaluated through expert judgement or statistical methods.

| Type of validity | What does it assess? | Example |
|---|---|---|
| Construct validity | The adherence of a measure to existing theory and knowledge of the concept being measured. | A self-esteem questionnaire could be assessed by measuring other traits known or assumed to be related to the concept of self-esteem (such as social skills). Strong correlation between the scores for self-esteem and associated traits would indicate high construct validity. |
| Content validity | The extent to which the measurement covers all aspects of the concept being measured. | A test that aims to measure a class of students’ level of Spanish contains reading, writing and speaking components, but no listening component. Experts agree that listening comprehension is an essential aspect of language ability, so the test lacks content validity for measuring the overall level of ability in Spanish. |
| Criterion validity | The extent to which the result of a measure corresponds to other valid measures of the same concept. | A survey is conducted to measure the political opinions of voters in a region. If the results accurately predict the later outcome of an election in that region, this indicates that the survey has high criterion validity. |
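The criterion validity example in the table can be made concrete by comparing survey estimates against the later election result. The following is a minimal sketch under stated assumptions: the party names and percentages are invented, and mean absolute error is just one simple way to summarize how closely a measure matches its criterion.

```python
def mean_absolute_error(predicted, observed):
    """Average absolute gap between predicted and observed values per key."""
    if predicted.keys() != observed.keys():
        raise ValueError("both mappings must cover the same categories")
    return sum(abs(predicted[k] - observed[k]) for k in predicted) / len(predicted)


# Hypothetical vote shares in percent: survey estimate vs. election outcome.
survey = {"Party A": 42.0, "Party B": 38.0, "Party C": 20.0}
election = {"Party A": 44.0, "Party B": 37.0, "Party C": 19.0}

error = mean_absolute_error(survey, election)
print(f"mean absolute error: {error:.2f} percentage points")
```

A small error relative to the spread between parties would count as evidence of criterion validity; a large one would suggest the survey is not measuring voting intention accurately.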

To assess the validity of a cause-and-effect relationship, you also need to consider internal validity (the design of the experiment) and external validity (the generalizability of the results).

The reliability and validity of your results depend on creating a strong research design, choosing appropriate methods and samples, and conducting the research carefully and consistently.

Ensuring validity

If you use scores or ratings to measure variations in something (such as psychological traits, levels of ability or physical properties), it’s important that your results reflect the real variations as accurately as possible. Validity should be considered in the very earliest stages of your research, when you decide how you will collect your data.

- Choose appropriate methods of measurement

Ensure that your method and measurement technique are high quality and targeted to measure exactly what you want to know. They should be thoroughly researched and based on existing knowledge.

For example, to collect data on a personality trait, you could use a standardized questionnaire that is considered reliable and valid. If you develop your own questionnaire, it should be based on established theory or findings of previous studies, and the questions should be carefully and precisely worded.

- Use appropriate sampling methods to select your subjects

To produce valid and generalizable results, clearly define the population you are researching (e.g., people from a specific age range, geographical location, or profession). Ensure that you have enough participants and that they are representative of the population. Failing to do so can lead to sampling bias and selection bias.

Ensuring reliability

Reliability should be considered throughout the data collection process. When you use a tool or technique to collect data, it’s important that the results are precise, stable, and reproducible.

- Apply your methods consistently

Plan your method carefully to make sure you carry out the same steps in the same way for each measurement. This is especially important if multiple researchers are involved.

For example, if you are conducting interviews or observations, clearly define how specific behaviors or responses will be counted, and make sure questions are phrased the same way each time. Failing to do so can lead to errors such as omitted variable bias or information bias.
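When several researchers code the same observations, one way to check whether the counting rules are being applied consistently is to compute an agreement statistic. The sketch below uses Cohen's kappa for two raters, which is a standard statistic but not one named in this article; the function name and the ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("need two non-empty rating lists of equal length")
    # Proportion of items on which the raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical label throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned by two observers to the same six responses.
observer_1 = ["on-task", "on-task", "off-task", "on-task", "off-task", "off-task"]
observer_2 = ["on-task", "on-task", "off-task", "off-task", "off-task", "off-task"]
print(f"kappa: {cohens_kappa(observer_1, observer_2):.2f}")
```

Values near 1 indicate strong agreement and values near 0 indicate agreement no better than chance, which may signal that the coding criteria need to be defined more precisely.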

- Standardize the conditions of your research

When you collect your data, keep the circumstances as consistent as possible to reduce the influence of external factors that might create variation in the results.

For example, in an experimental setup, make sure all participants are given the same information and tested under the same conditions, preferably in a properly randomized setting. Failing to do so can lead to a placebo effect, Hawthorne effect, or other demand characteristics. If participants can guess the aims or objectives of a study, they may attempt to act in more socially desirable ways.
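A randomized setting can be approximated in practice by shuffling participants before assigning them to conditions. This is a minimal sketch, assuming two equally sized groups; the function name, group labels, and seed are illustrative choices, not part of any prescribed procedure.

```python
import random


def assign_to_groups(participants, groups=("treatment", "control"), seed=None):
    """Shuffle participants, then deal them out to groups in turn."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, participant in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(participant)
    return assignment


# Hypothetical participant IDs 0 to 9 split into two groups of five.
result = assign_to_groups(range(10), seed=1)
for group, members in result.items():
    print(group, sorted(members))
```

Recording the seed alongside the data lets another researcher reproduce exactly the same allocation.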

It’s appropriate to discuss reliability and validity in various sections of your thesis, dissertation, or research paper. Showing that you have taken them into account in planning your research and interpreting the results makes your work more credible and trustworthy.

| Section | Discuss |
|---|---|
| Literature review | What have other researchers done to devise and improve methods that are reliable and valid? |
| Methodology | How did you plan your research to ensure reliability and validity of the measures used? This includes the chosen sample set and size, sample preparation, external conditions and measuring techniques. |
| Results | If you calculate reliability and validity, state these values alongside your main results. |
| Discussion | This is the moment to talk about how reliable and valid your results actually were. Were they consistent, and did they reflect true values? If not, why not? |
| Conclusion | If reliability and validity were a big problem for your findings, it might be helpful to mention this here. |

Cite this Scribbr article

Middleton, F. (2023, June 22). Reliability vs. Validity in Research | Difference, Types and Examples. Scribbr. Retrieved September 8, 2024, from https://www.scribbr.com/methodology/reliability-vs-validity/

Issues of validity and reliability in qualitative research

Volume 18, Issue 2

Helen Noble 1, Joanna Smith 2

1 School of Nursing and Midwifery, Queen's University Belfast, Belfast, UK

2 School of Human and Health Sciences, University of Huddersfield, Huddersfield, UK

Correspondence to Dr Helen Noble, School of Nursing and Midwifery, Queen's University Belfast, Medical Biology Centre, 97 Lisburn Rd, Belfast BT9 7BL, UK; helen.noble{at}qub.ac.uk

https://doi.org/10.1136/eb-2015-102054

Evaluating the quality of research is essential if findings are to be utilised in practice and incorporated into care delivery. In a previous article we explored ‘bias’ across research designs and outlined strategies to minimise bias. 1 The aim of this article is to further outline rigour, or the integrity with which a study is conducted, and ensure the credibility of findings in relation to qualitative research. Concepts such as reliability, validity and generalisability, typically associated with quantitative research, and alternative terminology will be compared in relation to their application to qualitative research. In addition, some of the strategies adopted by qualitative researchers to enhance the credibility of their research are outlined.

Are the terms reliability and validity relevant to ensuring credibility in qualitative research?

Although the tests and measures used to establish the validity and reliability of quantitative research cannot be applied to qualitative research, there are ongoing debates about whether terms such as validity, reliability and generalisability are appropriate to evaluate qualitative research. 2–4 In the broadest context these terms are applicable, with validity referring to the integrity and application of the methods undertaken and the precision with which the findings accurately reflect the data, while reliability describes consistency within the employed analytical procedures. 4 However, if qualitative methods are inherently different from quantitative methods in terms of philosophical positions and purpose, then alternative frameworks for establishing rigour are appropriate. 3 Lincoln and Guba 5 offer alternative criteria for demonstrating rigour within qualitative research, namely truth value, consistency, neutrality and applicability. Table 1 outlines the differences in terminology and criteria used to evaluate qualitative research.

Table 1 Terminology and criteria used to evaluate the credibility of research findings

What strategies can qualitative researchers adopt to ensure the credibility of the study findings?

Unlike quantitative researchers, who apply statistical methods for establishing validity and reliability of research findings, qualitative researchers aim to design and incorporate methodological strategies to ensure the ‘trustworthiness’ of the findings. Such strategies include:

- Accounting for personal biases which may have influenced findings; 6
- Acknowledging biases in sampling and ongoing critical reflection of methods to ensure sufficient depth and relevance of data collection and analysis; 3
- Meticulous record keeping, demonstrating a clear decision trail and ensuring interpretations of data are consistent and transparent; 3,4
- Establishing a comparison case/seeking out similarities and differences across accounts to ensure different perspectives are represented; 6,7
- Including rich and thick verbatim descriptions of participants’ accounts to support findings; 7
- Demonstrating clarity in terms of thought processes during data analysis and subsequent interpretations; 3
- Engaging with other researchers to reduce research bias; 3
- Respondent validation: includes inviting participants to comment on the interview transcript and whether the final themes and concepts created adequately reflect the phenomena being investigated; 4
- Data triangulation, 3,4 whereby different methods and perspectives help produce a more comprehensive set of findings. 8,9

Table 2 provides some specific examples of how some of these strategies were utilised to ensure rigour in a study that explored the impact of being a family carer to patients with stage 5 chronic kidney disease managed without dialysis. 10

Table 2 Strategies for enhancing the credibility of qualitative research

In summary, it is imperative that all qualitative researchers incorporate strategies to enhance the credibility of a study during research design and implementation. Although there is no universally accepted terminology and criteria used to evaluate qualitative research, we have briefly outlined some of the strategies that can enhance the credibility of study findings.

- Sandelowski M

- Lincoln YS ,

- Barrett M ,

- Mayan M , et al

- Greenhalgh T

- Lingard L ,

Competing interests None.

Rigor or Reliability and Validity in Qualitative Research: Perspectives, Strategies, Reconceptualization, and Recommendations

Cypress, Brigitte S. EdD, RN, CCRN

Brigitte S. Cypress, EdD, RN, CCRN , is an assistant professor of nursing, Lehman College and The Graduate Center, City University of New York.

The author has disclosed that she has no significant relationships with, or financial interest in, any commercial companies pertaining to this article.

Address correspondence and reprint requests to: Brigitte S. Cypress, EdD, RN, CCRN, Lehman College and The Graduate Center, City University of New York, PO Box 2205, Pocono Summit, PA 18346 ( [email protected] ).

Supplemental digital content is available for this article. Direct URL citations appear in the printed text and are provided in the HTML and PDF versions of this article on the journal’s Web site ( www.dccnjournal.com ).

Issues are still raised even now in the 21st century by the persistent concern with achieving rigor in qualitative research. There is also a continuing debate about the analogous terms reliability and validity in naturalistic inquiries as opposed to quantitative investigations. This article presents the concept of rigor in qualitative research using a phenomenological study as an exemplar to further illustrate the process. Elaborating on epistemological and theoretical conceptualizations by Lincoln and Guba, strategies congruent with qualitative perspective for ensuring validity to establish the credibility of the study are described. A synthesis of the historical development of validity criteria evident in the literature during the years is explored. Recommendations are made for use of the term rigor instead of trustworthiness and the reconceptualization and renewed use of the concept of reliability and validity in qualitative research, that strategies for ensuring rigor must be built into the qualitative research process rather than evaluated only after the inquiry, and that qualitative researchers and students alike must be proactive and take responsibility in ensuring the rigor of a research study. The insights garnered here will move novice researchers and doctoral students to a better conceptual grasp of the complexity of reliability and validity and its ramifications for qualitative inquiry.

Conducting a naturalistic inquiry in general is not an easy task. Qualitative studies are more complex in many ways than a traditional investigation. Quantitative research follows a structured, rigid, preset design with the methods all prescribed. In naturalistic inquiries, planning and implementation are simultaneous, and the research design can change or is emergent. Preliminary steps must be accomplished before the design is fully implemented, from making initial contact and gaining entry to the site to negotiating consent, building and maintaining trust, and identifying participants. The steps of a qualitative inquiry are also repeated multiple times during the process. As the design unfolds, the elements of this design are put into place, and the inquirer has minimal control and should be flexible. There is continuous reassessment and reiteration. Data collection is carried out using multiple techniques, and whatever the source may be, it is the researcher who is the sole instrument of the study and the primary mode of collecting the information. All the while during these processes, the qualitative inquirer must be concerned with rigor. 1 Appropriate activities must be conducted to ensure that rigor has been attended to in the research process rather than only adhering to set criteria for rigor after the completion of the study. 1-4

Reliability and validity are 2 key aspects of all research. Researchers assert that rigor of qualitative research equates to the concepts reliability and validity and all are necessary components of quality. 5,6 However, the precise definition of quality has created debates among naturalistic inquirers. Other scholars consider different criteria to describe rigor in qualitative research process. 7 The 2 concepts of reliability and validity have been operationalized eloquently in quantitative texts but at the same time were deemed not pertinent to qualitative inquiries in the 1990s. Meticulous attention to the reliability and validity of research studies is particularly vital in qualitative work, where the researcher's subjectivity can so readily cloud the interpretation of the data and where research findings are often questioned or viewed with skepticism by the scientific community (Brink, 1993).

This article will discuss the issue of rigor in relation to qualitative research and further illustrate the process using a phenomenological study as an exemplar based on Lincoln and Guba's 1 (1985) techniques. This approach will clarify and define some of these complex concepts. There are numerous articles about trustworthiness in the literature that are too complex, confusing, and full of jargon. Some of these published articles also discuss rigor vis-à-vis reliability and validity in a very complicated way. Rigor will be first defined followed by how “reliability and validity” should be applied to qualitative research methods during the inquiry (constructive) rather than only post hoc evaluation. Strategies to attain reliability and validity will be described including the criteria and techniques for ensuring its attainment in a study. This discussion will critically focus on the misuse or nonuse of the concept of reliability and validity in qualitative inquiries, reestablish its importance, and relate both to the concept of rigor. Reflecting on my own research experience, recommendations for the renewed use of the concept of reliability and validity in qualitative research will be presented.

RIGOR VERSUS TRUSTWORTHINESS

The rigor of qualitative research continues to be challenged even now in the 21st century, from the very idea that qualitative research alone is open to question to the terms rigor and trustworthiness themselves. It is critical to understand rigor in research. Rigor is simply defined as the quality or state of being very exact, careful, or with strict precision 8 or the quality of being thorough and accurate. 9 The term qualitative rigor itself is an oxymoron, considering that qualitative research is a journey of explanation and discovery that does not lend itself to stiff boundaries. 10

Rigor and truth are always of concern for qualitative research. 11 Rigor has also been used to express attributes related to the qualitative research process. 12,13 Per Morse et al 4 (2002), without rigor, research is worthless, becomes fiction, and loses its use. The authors further defined rigor as the strength of the research design and the appropriateness of the method to answer the questions. It is expected that qualitative studies be conducted with extreme rigor because of the potential of subjectivity that is inherent in this type of research. This is a more difficult task when dealing with narratives and people than numbers and statistics. 14 Davies and Dodd 13 (2002) refer rigor to the reliability and validity of research and that, inherent to the conception, the concept is a quantitative bias. Several researchers argued that reliability and validity pertain to quantitative research, which is unrelated or not pertinent to qualitative inquiry because it is aligned with the positivist view. 15 It is also suggested that a new way of looking at reliability and validity will ensure rigor in qualitative inquiry. 1,16 From Lincoln and Guba's crucial work in the 1980s, reliability and validity were replaced with the concept “trustworthiness.” Lincoln and Guba 1 (1985) were the first to address rigor in their model of trustworthiness of qualitative research. Trustworthiness is used as the central concept in their framework to appraise the rigor of a qualitative study.

Trustworthiness is described in different ways by researchers. Trustworthiness refers to quality, authenticity, and truthfulness of findings of qualitative research. It relates to the degree of trust, or confidence, readers have in results. 14 Yin 17 (1994) describes trustworthiness as a criterion to judge the quality of a research design. Trustworthiness addresses methods that can ensure one has carried out the research process correctly. 18 Manning 19 (1997) considered trustworthiness as parallel to the empiricist concepts of internal and external validity, reliability, and objectivity. Seale 20 (1999) asserted that trustworthiness of a research study is based on the concepts of reliability and validity. Guba 2 (1981), Guba and Lincoln 3 (1982), and Lincoln and Guba 1 (1985) refer to trustworthiness as something that evolved from 4 major concerns on which the set of criteria was based. Trustworthiness is a goal of the study and, at the same time, something to be judged during the study and after the research is conducted. The 4 major traditional criteria are summarized into 4 questions about truth value, applicability, consistency, and neutrality. From these, they proposed 4 analogous terms within the naturalistic paradigm to replace the rationalistic terms: credibility, transferability, dependability, and confirmability. 1 For each of these 4 naturalistic terms, there are research activities or steps that the inquirer should engage in to be able to safeguard or satisfy each of the previously mentioned criteria and thus attain trustworthiness (Supplemental Digital Content 1, https://links.lww.com/DCCN/A18 ). Guba and Lincoln 1 (1985) stated:

The criteria aid inquirers in monitoring themselves and in guiding activities in the field, as a way of determining whether or not various stages in the research are meeting standards for quality and rigor. Finally, the same criteria may be used to render ex-post facto judgments on the products of research, including reports, case studies, or proposed publications.

Standards and checklists were developed in the 1990s based on Lincoln and Guba's 1 (1985) established criteria, which were then discarded in favor of principles. 21 These standards and checklists consisted of long lists of strategies used by qualitative researchers, which were thought to cause harm because of the confusion on which strategies were appropriate for certain designs or what type of naturalistic inquiry is being evaluated. Thus, researchers interpreted missing data as faults and flaws. 21 Morse 21 (2012) further claimed that these standards became the qualitative researchers' “worst enemies” and such an approach was not appropriate. Guba and Lincoln 18 (1989) later proposed a set of guidelines for post hoc evaluation of a naturalistic inquiry to ensure trustworthiness based on the framework of naturalism and constructivism and beyond the conventional methodological ideas. The aspects of their criteria have been fundamental to development of standards used to evaluate the quality of qualitative inquiry. 4

THE RIGOR DEBATES: TRUSTWORTHINESS OR RELIABILITY AND VALIDITY?

A research endeavor, whether quantitative or qualitative, is always evaluated for its worth and merits by peers, experts, reviewers, and readers. Does this mean that a study is differentiated between “good” and “bad”? What determines a “good” from a “bad” inquiry? For a quantitative study, this would mean determining the reliability and validity, and for qualitative inquiries, this would mean determining rigor and trustworthiness. According to Golafshani 22 (2003), if the issues of reliability, validity, trustworthiness, and rigor are meant to differentiate “good” from “bad” research, then testing and increasing the reliability, validity, trustworthiness, and rigor will be important to the research in any paradigm. However, do reliability and validity in quantitative research equate totally to rigor and trustworthiness in qualitative research? There are many ways to assess the “goodness” of a naturalistic inquiry. Guba and Lincoln 18 (1989) asked, “‘What standards ought apply?’… goodness criteria like paradigms are rooted in certain assumptions. Thus, it is not appropriate to judge constructivist evaluations by positivistic criteria or standards or vice versa. To each its proper and appropriate set.”

Reliability and validity are analogues and are determined differently than in quantitative inquiry. 21 The nature and purpose of the quantitative and qualitative traditions are also so different that it is erroneous to apply the same criteria of worthiness or merit. 23,24 The qualitative researcher should not focus on quantitatively defined indicators of reliability and validity, but that does not mean that rigorous standards are not appropriate for evaluating findings. 11 Evaluation, like democracy, is a process that, to be at its best, depends on the application of enlightened and informed self-interest. 18 Agar 24 (1986), on the other hand, suggested that terms such as reliability and validity are comparative with the quantitative view and do not fit the details of qualitative research. A different language is needed to fit the qualitative view. From Leininger 25 (1985), Krefting 23 (1991) asserted that addressing reliability and validity in qualitative research is such a different process that quantitative labels should not be used. The incorrect application of the qualitative criteria of rigor to studies is as problematic as the application of inappropriate quantitative criteria. 23 Smith 26 (1989) argued that, for qualitative research, this means that the basis of truth or trustworthiness becomes a social agreement. He emphasizes that what is judged true or trustworthy is what we can agree, conditioned by time and place, is true or trustworthy. Validity standards in qualitative research are also even more challenging because of the necessity to incorporate rigor and subjectivity, as well as creativity, into the scientific process. 27 Furthermore, Leininger 25 (1985) claimed that the issue is not whether the data are reliable or valid but how the terms reliability and validity are defined. Aside from the debate whether reliability and validity criteria should be used similarly in qualitative inquiries, there is also an issue of not using the concepts at all in naturalistic studies.

Designing a naturalistic inquiry is very different from a traditional quantitative notion of design, and defining a “good” qualitative inquiry is controversial and has gone through many changes. 21 First is the confusion on the use of the terms “rigor” and “trustworthiness.” Morse 28 (2015) suggested that it is time to return to the terminology of mainstream social science and to use “rigor” rather than “trustworthiness.” Debates also continue about why some qualitative researchers do not use the concept of reliability and validity in their studies, referring to Lincoln and Guba's 1 (1985) criteria for trustworthiness, namely, transferability, dependability, confirmability, and credibility. Morse 28 (2015) further suggested replacing these criteria with reliability, validity, and generalizability. The importance and centrality of reliability and validity to qualitative inquiries have in some way been disregarded even in the current times. Researchers from the United Kingdom and Europe continue to do so but not so much in North America. 4 According to Morse 21 (2012), this gives the impression that these concepts are of no concern to qualitative research. Morse 29 (1999) stated, “Is the terminology worth making a fuzz about?”, when Lincoln and Guba 1 (1985) described trustworthiness and reliability and validity as analogs. Morse 29 (1999) further articulated that:

To state that reliability and validity are not pertinent to qualitative inquiry places qualitative research in the realm of being not reliable and not valid. Science is concerned with rigor, and by definition, good rigorous research must be reliable and valid. If qualitative research is unreliable and invalid, then it must not be science. If it is not science, then why should it be funded, published, implemented, or taken seriously?

RELIABILITY AND VALIDITY IN QUALITATIVE RESEARCH

Reliability and validity should be taken into consideration by qualitative inquirers while designing a study, analyzing results, and judging the quality of the study, 30 but for too long, the criteria used for evaluating rigor have been applied after the research is completed, a considerably wrong tactic. 4 Morse and colleagues 4 (2002) argued that, for reliability and validity to be actively attained, strategies for ensuring rigor must be built into the qualitative research process per se, not proclaimed only at the end of the inquiry. The authors suggest that focusing on strategies to establish rigor at the completion of the study (post hoc), rather than during the inquiry, exposes the investigators to the risk of missing serious threats to reliability and validity until it is too late to correct them. They further asserted that the interface between reliability and validity is important, especially for the direction of the analysis process and the development of the study itself.

Reliability

In the social sciences, the whole notion of reliability in and of itself is problematic. 31 The scientific aspect of reliability assumes that repeated measures of a phenomenon (with the same results) using objective methods establish the truth of the findings. 32-35 Merriam 36 (1995) stated that, “The more times the findings of a study can be replicated, the more stable or reliable the phenomenon is thought to be.” In other words, it is the idea of replicability, 22,34,37 repeatability, 21,22,26,30,31,36,38-40 and stability of results or observation. 25,39,41 The problem is that human behaviors and interactions are never static or the same. Measurements and observations can also be repeatedly wrong. Furthermore, researchers have argued that the concept of reliability is misleading and has no relevance in qualitative research because it relates to the notion of “measurement method,” as in quantitative studies. 40,42 Quantitative research is supported by the positivist or scientific paradigm that regards the world as made up of observable, measurable facts. Qualitative research, on the other hand, produces findings not arrived at by means of statistical procedures or other means of quantification. On the basis of the constructivist paradigm, it is a naturalistic inquiry that seeks to understand phenomena in context-specific settings in which the researcher does not attempt to manipulate the phenomenon of interest. 23 If reliability is used as a criterion in qualitative research, it would mean that the study is “not good.” A thorough description of the entire research process that allows for intersubjectivity is what indicates good quality when using qualitative methodology. Reliability is based on consistency and care in the application of research practices, which are reflected in the visibility of research practices, analysis, and conclusions and in an open account that remains mindful of the partiality and limits of the research findings. 13 Reliability and similar terms are presented in Supplemental Digital Content 2 (see Supplemental Digital Content 2, https://links.lww.com/DCCN/A19).

Validity

Validity is broadly defined as the state of being well grounded or justifiable, relevant, meaningful, logical, conforming to accepted principles, or the quality of being sound, just, and well founded. 8 The issues surrounding the use and nature of the term validity in qualitative research are many and controversial. It remains a highly debated topic in both social and educational research. 43 The traditional criteria for validity find their roots in a positivist tradition, and to an extent, positivism has been defined by a systematic theory of validity. 22 Validity is rooted in empirical conceptions such as universal laws, evidence, objectivity, truth, actuality, deduction, reason, fact, and mathematical data, to name only a few. Validity in research is concerned with the accuracy and truthfulness of scientific findings. 44 A valid study should demonstrate what actually exists and is accurate, and a valid instrument or measure should actually measure what it is supposed to measure. 5,22,29,31,42,45

Novice researchers can become easily perplexed in attempting to understand the notion of validity in qualitative inquiry. 44 The literature contains an array of terms similar to validity that authors treat as equivalent, such as authenticity, goodness, adequacy, trustworthiness, verisimilitude, credibility, and plausibility. 1,45-51 Validity is not a single, fixed, or universal concept but rather a contingent construct, inescapably grounded in the processes and intentions of particular research methodologies. 39 Some qualitative researchers have argued that the term validity is not applicable to qualitative research and have related it to terms such as quality, rigor, and trustworthiness. 1,13,22,38,42,52-54 I argue that the concepts of reliability and validity are overarching constructs that can be appropriately used in both quantitative and qualitative methodologies. To validate means to investigate, to question, and to theorize, which are all activities to ensure rigor in a qualitative inquiry. For Leininger 25 (1985), the term validity in a qualitative sense means gaining knowledge and understanding of the nature (ie, the meaning, attributes, and characteristics) of the phenomenon under study. A qualitative method seeks a certain quality that is typical of a phenomenon or that makes the phenomenon different from others.

Some naturalistic inquirers agree that assuring validity is a process whereby ideals are sought through attention to specified criteria, and appropriate techniques are used to address any threats to validity of a naturalistic inquiry. However, other researchers argue that procedures and techniques are not an assurance of validity and will not necessarily produce sound data or credible conclusions. 38,48,55 Thus, some argued that they should abandon the concept of validity and seek alternative criteria with which to judge their work. Criteria are the standards or rules to be upheld as ideals in qualitative research on which a judgment or decisions may be based, 4,56 whereas the techniques are the methods used to diminish identified validity threats. 56 Criteria, for some researchers, are used to test the quality of the research design, whereas for others, they are the goal of the study. There is also a trend to treat standards, goals, and criteria synonymously. I concur with Morse 29 (1999) that introducing parallel terminology and criteria marginalizes qualitative inquiry from mainstream science and scientific legitimacy. The development of alternative criteria compromises the issue of rigor. We must work toward a consensus on the criteria and terminology that are used in mainstream science and on how these are attained within the qualitative inquiry during the research process rather than at the end of the study. Despite all this, researchers have developed validity criteria and techniques over the years. A synthesis of validity criteria development is summarized in Supplemental Digital Content 3 (see Supplemental Digital Content 3, https://links.lww.com/DCCN/A20). The techniques for demonstrating validity are presented in Supplemental Digital Content 4 (see Supplemental Digital Content 4, https://links.lww.com/DCCN/A21).

Reliability and Validity as Means in Ensuring the Quality of Findings of a Phenomenological Study in Intensive Care Unit

Reliability and validity are 2 factors that any qualitative researcher should be concerned about while designing a study, analyzing results, and judging its quality. Just as the quantitative investigator must attend to the question of how external and internal validity, reliability, and objectivity will be provided for in the design, so must the naturalistic inquirer arrange for credibility, transferability, dependability, and confirmability. 1 Lincoln and Guba 1 (1985) clearly established these 4 criteria as benchmarks for quality based on the identification of 4 aspects of trustworthiness that are relevant to both quantitative and qualitative studies, which are truth value, applicability, consistency, and neutrality. Guba 2 (1981) stated, “It is to these concerns that the criteria must speak.”

Rigor of a naturalistic inquiry such as phenomenology may be operationalized using the criteria of credibility, transferability, dependability, and confirmability. This phenomenological study aimed to understand and illuminate the meaning of the phenomenon of the lived experiences of patients, their family members, and the nurses during critical illness in the intensive care unit (ICU). Following Lincoln and Guba 1 (1985), I first asked the question, “How can I persuade my audience that the research findings of my inquiry are worth paying attention to, and worth taking account of?” My answers to this question were based on the 4 criteria set forth by Lincoln and Guba 1 (1985).

Credibility, the accurate and truthful depiction of a participant's lived experience, was achieved in this study through prolonged engagement and persistent observation to learn the context in which the phenomenon is embedded and to minimize distortions that might creep into the data. To achieve this, I spent 6 months with nurses, patients, and their families in the ICU to become oriented to the situation and also to build trust and rapport with the participants. Peer debriefing was conducted through meetings and discussions with an expert qualitative researcher to allow for questions and critique of field journals and research activities. Triangulation was achieved by having 2 qualitative researchers cross-check the data and interpretations within and across each category of participants. Member checks were accomplished by constantly checking data and interpretations with the participants from whom data were solicited.

Transferability was enhanced by using a purposive sampling method and by providing a thick description and robust data with the widest possible range of information through the detailed and accurate descriptions of the patients, their family members, and the nurses' lived ICU experiences and by continuously returning to the texts. In this study, recruitment of participants and data collection continued until the data were saturated and complete and began to replicate. According to Morse et al 4 (2002), interviewing additional participants is for the purpose of increasing the scope, adequacy, and appropriateness of the data. I immersed myself in the phenomenon to know, describe, and understand it fully, comprehensively, and thoroughly. Special care was given to the collection, identification, and analysis of all data pertinent to the study. The audiotaped data were meticulously transcribed by a professional transcriber for future scrutiny. During the analysis phase, every attempt was made to document all aspects of the analysis. Analysis in qualitative research refers to the categorization and ordering of information in such a way as to make sense of the data and to writing a final report that is true and accurate. 36 Every effort was made to coordinate methodological and analytical materials. After I categorized and was able to make sense of the transcribed data, every effort was made to illuminate themes and descriptors as they emerged.

Lincoln and Guba 1 (1985) use “dependability” in qualitative research, which closely corresponds to the notion of “reliability” in quantitative research. Dependability was achieved by having 2 expert qualitative nursing researchers review the transcribed material to validate the themes and descriptors identified. To validate my findings related to the themes, a doctoral-prepared nursing colleague was asked to review some of the transcribed materials. Any new themes and descriptors illuminated by my colleague were acknowledged and considered. These were then compared with my own thematic analysis of all the participants' transcribed data. If a theme identified by the colleague did not appear in my own thematic analysis, both analysts agreed not to use that theme. It was my goal that both analysts agree on the findings related to themes and meanings within the transcribed material.

Confirmability was met by maintaining a reflexive journal during the research process to keep notes and document daily introspections that would be beneficial and pertinent during the study. An audit trail was also kept to examine the processes whereby data were collected and analyzed and interpretations were made. The audit trail took the form of documentation (the actual interview notes taken) and a running account of the process (my daily field journal). I maintained self-awareness of my role as the sole instrument of this study. After each interview, I retired to a private room to document additional perceptions and recollections from the interviews (Supplemental Digital Content 5, https://links.lww.com/DCCN/A22).

Through reflexivity and bracketing, I was always on guard against my own biases, assumptions, beliefs, and presuppositions that I might bring to the study but was also aware that complete reduction is not possible. Van Manen 44 (1990) stated that “if we simply try to forget or ignore what we already know, we may find that the presuppositions persistently creep back into our reflections.” During data collection and analysis, I made my orientation and preunderstanding of critical illness and critical care explicit but held them deliberately at bay and bracketed them. Aside from Lincoln and Guba's 1 (1985) 4 criteria for trustworthiness, a question arises as to the reliability of the researcher as the sole instrument of the study.

Reliability related to the researcher as the sole instrument who conducted the data collection and analysis is a limitation of any phenomenological study. The use of humans as instruments is not a new concept. Lincoln and Guba 1 (1985) articulated that humans uniquely qualify as the instrument of choice for naturalistic inquiry. Some of the giants of conventional inquiry have recognized that humans can provide data very nearly as reliable as that produced by “more” objective means. These are formidable characteristics, but they are meaningless if the human instrument is not also trustworthy. However, no human instrument is expected to be perfect. Humans have flaws, and errors could be committed. When Lincoln and Guba 1 (1985) asserted that qualitative methods come more easily to hand when the instrument is a human being, they meant that the human as instrument is inclined toward methods that are extensions of normal activities. They believe that the human will therefore tend toward interviewing, observing, mining available documents and records, taking account of nonverbal cues, and interpreting inadvertent unobtrusive measures, all of which are complex tasks. In addition, one would not expect an individual to function adequately as a human instrument without an extensive background of training and experience. This study has reliability in that I have acquired the knowledge and the required training for research at a doctoral level with the professional and expert guidance of a mentor. As Lincoln and Guba 1 (1985) said, “Performance can be improved…when that learning is guided by an experienced mentor, remarkable improvements in human instrumental performance can be achieved.” Whereas reliability in quantitative research depends on instrument construction, in qualitative research, the researcher is the instrument of the study. 31 Reliable research is credible research. The credibility of qualitative research depends on the ability and effort of the researcher. 22 We have established that a study can be reliable without being valid, but a study cannot be valid without being reliable.

Establishing validity is a major challenge when a qualitative research project is based on a single, cross-sectional, unstructured interview as the basis for data collection. How do I make judgments about the validity of the data? In qualitative research, the validity of the findings is related to the careful recording and continual verification of the data that the researcher undertakes during the investigative practice. If the validity or trustworthiness can be maximized or tested, then more credible and defensible results may lead to generalizability as the structure for both doing and documenting high-quality qualitative research. Therefore, the quality of research is related to the generalizability of the results and thereby to the testing and increasing of the validity or trustworthiness of the research.

One potential threat to validity that researchers need to consider is researcher bias. Researcher bias is frequently an issue because qualitative research is open and less structured than quantitative research. This is because qualitative research tends to be exploratory. Researcher bias tends to result from selective observation and selective recording of information and from allowing one's personal views and perspectives to affect how data are interpreted and how the research is conducted. Therefore, it is very important that the researchers are aware of their own perceptions and opinions because they may taint their research findings and conclusions. I brought all past experiences and knowledge into the study but learned to set aside my own strongly held perceptions, preconceptions, and opinions. I truly listened to the participants to learn their stories, experiences, and meanings.

The key strategy used to understand researcher bias is called reflexivity. Reflexivity means that the researchers actively engage in critical self-reflection about the potential biases and predispositions that they bring to the qualitative study. Through reflexivity, researchers become more self-aware and monitor and attempt to control their biases. Phenomenological researchers can recognize that their interpretation is correct because the reflective process awakens an inner moral impulse. 4,59 I did my best to be always on guard against my own biases, preconceptions, and assumptions that I might bring to this study. Bracketing was also applied.

Husserl 60 (1931) has made some key conceptual elaborations, which led him to assert that an attempt to hold a previous belief about the phenomena under study in suspension to perceive it more clearly is needed in phenomenological research. This technique is called bracketing. Bracketing is another strategy used to control bias. Husserl 60 (1931) explained further that phenomenological reduction is the process of defining the pure essence of a psychological phenomenon. Phenomenological reduction is a process whereby empirical subjectivity is suspended, so that pure consciousness may be defined in its essential and absolute “being.” This is accomplished by a method of bracketing empirical data away from consideration. Bracketing empirical data away from further investigation leaves pure consciousness, pure phenomena, and pure ego as the residue of phenomenological reduction. Husserl 60 (1931) uses the term epoche (Greek word for “a cessation”) to refer to this suspension of judgment regarding the true nature of reality. Bracketed judgment is an epoche or suspension of inquiry, which places in brackets whatever facts belong to essential “being.”

Bracketing was conducted to separate the assumptions and biases from the essences and therefore achieve an understanding of the phenomenon as experienced by the participants of the study. The collected and analyzed data were presented to the participants, and they were asked whether the narrative was accurate and a true reflection of their experience. My interpretation and descriptions of the narratives were presented to the participants to achieve credibility. They were given the opportunity to review the transcripts and modify them if they wished to do so. As I served as the sole instrument in obtaining data for this phenomenological study, my goal was that my perceptions would reflect the participants' ICU experiences and that the participants would be able to see their lived experience through the researcher's eyes. Because qualitative research designs are flexible and emergent in nature, there will always be study limitations.

Awareness of the limitations of a research study is crucial for researchers. The purpose of this study was to understand the ICU experiences of patients, their family members, and the nurses during critical illness. One limitation of this phenomenological study as a naturalistic inquiry was the inability of the researcher to fully design and provide specific ideas needed for the study. According to Lincoln and Guba 1 (1985), naturalistic studies are virtually impossible to design in any definitive way before the study is actually undertaken. The authors stated:

Designing a naturalistic study means something very different from the traditional notion of “design”—which as often as not meant the specification of a statistical design with its attendant field conditions and controls. Most of the requirements normally laid down for a design statement cannot be met by naturalists because the naturalistic inquiry is largely emergent.

Within the naturalistic paradigm, designs must be emergent rather than preordinate because (1) meaning is determined by context to such a great extent (for this particular study, the phenomenon and context were the experience of critical illness in the ICU); (2) the existence of multiple realities constrains the development of a design based on only 1 (the investigator's) construction; (3) what will be learned at a site is always dependent on the interaction between the investigator and the context, and the interaction is also not fully predictable; and (4) the nature of mutual shapings cannot be known until they are witnessed. These factors underscore the indeterminacy under which the naturalistic inquirer functions. The design must therefore be “played by ear”; it must unfold, cascade, and emerge. It does not follow, however, that, because not all of the elements of the design can be prespecified in a naturalistic inquiry, none of them can. Design in the naturalistic sense means planning for certain broad contingencies without, however, indicating exactly what will be conducted in relation to each. 1

Reliability and validity are fundamental concepts that should be continually operationalized to meet the conditions of a qualitative inquiry. Morse et al 4,29 (2002) articulated that “by refusing to acknowledge the centrality of reliability and validity in qualitative methods, qualitative methodologists have inadvertently fostered the default notion that qualitative research must therefore be unreliable and invalid, lacking in rigor, and unscientific.” Sparkes 59 (2001) asserted that Morse et al 4,26 (2002) are right in warning us that turning our backs on such fundamental concepts as validity could cost us dearly. This will in turn affect how we mentor novices, early career researchers, and doctoral students in their qualitative research works.

Reliability is inherently integrated and internally needed to attain validity. 1,26 I concur with the use of the term rigor rather than trustworthiness in naturalistic studies. I also agree that strategies for ensuring rigor must be built into the qualitative research process per se rather than evaluated only after the inquiry is conducted. Threats to reliability and validity cannot be actively addressed by using standards and criteria applied at the end of the study. Rigor must be upheld by the researcher during the investigation rather than by the external judges of the completed study. Whether a study is quantitative or qualitative, rigor is a desired goal that is met through the inclusion of different philosophical perspectives inherent in a qualitative inquiry and the strategies that are specific to each methodological approach, including the verification techniques to be observed during the research process. It also involves the researcher's creativity, sensitivity, flexibility, and skill in using the verification strategies that determine the reliability and validity of the evolving study.

Lincoln and Guba's 1 (1985) standards of validity demonstrate the necessity and convenience of overarching principles to all qualitative research, yet there is a need for a reconceptualization of criteria of validity in qualitative research. The development of validity criteria in qualitative research poses theoretical issues, not simply technical problems. 60 Whittemore et al 58 (2001) explored the historical development of validity criteria in qualitative research and synthesized the findings that reflect a contemporary reconceptualization of the debate and dialogue that have ensued in the literature during the years. The authors further presented primary (credibility, authenticity, criticality, and integrity) and secondary (explicitness, vividness, creativity, thoroughness, congruence, and sensitivity) validity criteria to be used in the evaluative process. 56 Before the work of Whittemore and colleagues, 58 Creswell and Miller 48 (2000) asserted that the constructivist lens and paradigm choice should guide validity evaluation and procedures from the perspective of the researcher (disconfirming evidence), the study participants (prolonged engagement in the field), and external reviewers/readers (thick, rich description). Morse et al 4 in 2002 presented 6 major evaluation criteria for validity and asserted that they are congruent and are appropriate within the philosophy of the qualitative tradition. These 6 criteria are credibility, confirmability, meaning in context, recurrent patterning, saturation, and transferability. Synthesis of validity criteria is presented in Supplemental Digital Content 3 (see Supplemental Digital Content 3, https://links.lww.com/DCCN/A20 ).

Common validity techniques in qualitative research refer to design consideration, data generation, analytic procedures, and presentation. 56 First is the design consideration. Developing a self-conscious design, the paradigm assumption, the purposeful choice of small sample of informants relevant to the study, and the use of inductive approach are some techniques to be considered. Purposive sampling enhances the transferability of the results. Interpretivist and constructivist inquiry follows an inductive approach that is flexible and emergent in design with some uncertainty and fluidity within the context of the phenomenon of interest 56,58 and not based on a set of determinate rules. 61 The researcher does not work with a priori theory; rather, these are expected to emerge from the inquiry. Data are analyzed inductively from specific, raw units of information to subsuming categories to define questions that can be followed up. 1 Qualitative studies also follow a naturalistic and constructivist paradigm. Creswell and Miller 48 (2000) suggest that the validity is affected by the researchers' perception of validity in the study and their choice of paradigm assumption. Determining fit of paradigm to focus is an essential aspect of a naturalistic inquiry. 1 Paradigms rest on sets of beliefs called axioms. 1 On the basis of the naturalistic axioms, the researcher should ask questions related to multiplicity or complex constructions of the phenomenon, the degree of investigator-phenomenon interaction and the indeterminacy it will introduce into the study, the degree of context dependence, whether values are likely to be crucial to the outcome, and the constraints that may be placed on the researcher by a variety of significant others. 1

Validity during data generation is evaluated through the researcher's ability to articulate data collection decisions, demonstrate prolonged engagement and persistent observation, provide verbatim transcription, and achieve data saturation. 56 Methods are means to collect evidence to support validity, and this refers to the data obtained by considering a context for a purpose. The human instrument operating in an indeterminate situation falls back on techniques such as interview, observation, unobtrusive measures, document and record analysis, and nonverbal cues. 1 Others, rejecting methods or technical procedures as an assurance of truth, remarked that the validity of a qualitative study lies in the skills and sensitivities of the researchers and in how they use themselves as knowers and inquirers. 57,62 The understanding of the phenomenon is valid if the participants are given the opportunity to speak freely according to their own knowledge structures and perceptions. Validity is therefore achieved when using the method of open-ended, unstructured interviews with strategically chosen participants. 42 We also know that a thorough description of the entire research process enabling unconditional intersubjectivity is what indicates good quality when using a qualitative method. This supports a clearer and better analysis of data.

Analytical procedures are vital in qualitative research. 56 Not very much can be said about data analysis in advance of a qualitative study. 1 Data analysis is not an inclusive phase that can be marked out as occurring at some singular time during the inquiry. 1 It begins from the very first data collection to facilitate the emergent design and grounding of theory. Validity in a study thus is represented by truthfulness of findings after a careful analysis. 56 Consequently, qualitative researchers seek to illuminate and extrapolate findings to similar situations. 22,63 The interpretations of any given social phenomenon may reflect, in part, the biases and prejudices the interpreters bring to the task and the criteria and logic they follow in completing it. 64 In any case, individuals will draw different conclusions from the debate surrounding validity and will make different judgments as a result. 50 There is a wide array of analytic techniques that the qualitative researcher can choose from based on the contextual factors that will help contribute to the decision as to which technique will optimally reflect specific criteria of validity. 65 Presentation of findings is accomplished by providing an audit trail and evidence that support interpretations, acknowledging the researcher's perspective, and providing thick descriptions. In 2002, Morse et al 4 set forth strategies for ensuring validity that include investigator responsiveness and verification through methodological coherence, theoretical sampling and sampling adequacy, an active analytic stance, and saturation. The authors further stated that “these strategies, when used appropriately, force the researcher to correct both the direction of the analysis and the development of the study as necessary, thus ensuring reliability and validity of the completed project” (p17).
Recently in 2015, Morse 28 presented that the strategies for ensuring validity in a qualitative study are prolonged engagement, persistent observation, thick and rich description, negative case analysis, peer review or debriefing, clarifying researcher's bias, member checking, external audits, and triangulation. These strategies can be upheld with the help of an expert mentor who can in turn guide and affect the reliability and validity of early career researchers and doctoral students' qualitative research works. Techniques for demonstrating validity are summarized in Supplemental Digital Content 4 (see Supplemental Digital Content 4, https://links.lww.com/DCCN/A21 ).

Qualitative researchers and students alike must be proactive and take responsibility for ensuring the rigor of a research study. Rigor often takes a back seat in the work of some researchers and doctoral students because of their novice abilities, lack of proper mentorship, and constraints on time and funding. Students should conduct projects that are smaller in scope, guided by an expert naturalistic inquirer, so that they produce work with depth and, at the same time, gain the grounding experience necessary to become excellent researchers. Attending to rigor throughout the research process will have important ramifications for qualitative inquiry. 4,26

Qualitative research is not intended to be scary or beyond the grasp of novices and doctoral students. Conducting a naturalistic inquiry is an experience of exploration, discovery, description, and understanding of a phenomenon that transcends one's own research journey. Attending to the rigor of qualitative research is a vital part of the investigative process that offers critique and thus further development of the science.


Phenomenology; Qualitative research; Reliability; Rigor; Validity

Supplemental Digital Content

- DCCN_2017_04_11_CYPRESS_DCCN-D-16-00060_SDC1.pdf; [PDF] (3 KB)

- DCCN_2017_04_11_CYPRESS_DCCN-D-16-00060_SDC2.pdf; [PDF] (4 KB)

- DCCN_2017_04_11_CYPRESS_DCCN-D-16-00060_SDC3.pdf; [PDF] (78 KB)

- DCCN_2017_04_11_CYPRESS_DCCN-D-16-00060_SDC4.pdf; [PDF] (70 KB)

- DCCN_2017_04_11_CYPRESS_DCCN-D-16-00060_SDC5.pdf; [PDF] (4 KB)


Criteria for Good Qualitative Research: A Comprehensive Review

- Regular Article

- Open access

- Published: 18 September 2021

- Volume 31 , pages 679–689, ( 2022 )


- Drishti Yadav ORCID: orcid.org/0000-0002-2974-0323 1


This review aims to synthesize a published set of evaluative criteria for good qualitative research. The aim is to shed light on existing standards for assessing the rigor of qualitative research encompassing a range of epistemological and ontological standpoints. Using a systematic search strategy, published journal articles that deliberate criteria for rigorous research were identified. Then, references of relevant articles were surveyed to find noteworthy, distinct, and well-defined pointers to good qualitative research. This review presents an investigative assessment of the pivotal features in qualitative research that can permit readers to pass judgment on its quality and to commend it as good research when objectively and adequately utilized. Overall, this review underlines the crux of qualitative research and accentuates the necessity to evaluate such research by the very tenets of its being. It also offers some prospects and recommendations to improve the quality of qualitative research. Based on the findings of this review, it is concluded that quality criteria are the product of socio-institutional procedures and existing paradigmatic conducts. Owing to the paradigmatic diversity of qualitative research, a single and specific set of quality criteria is neither feasible nor anticipated. Since qualitative research is not a cohesive discipline, researchers need to educate and familiarize themselves with applicable norms and decisive factors to evaluate qualitative research from within its theoretical and methodological framework of origin.


Introduction

“… It is important to regularly dialogue about what makes for good qualitative research” (Tracy, 2010 , p. 837)

What represents good qualitative research is highly debatable. Qualitative research contains numerous methods that are established on diverse philosophical perspectives. Bryman et al., ( 2008 , p. 262) suggest that “It is widely assumed that whereas quality criteria for quantitative research are well‐known and widely agreed, this is not the case for qualitative research.” Hence, the question “how to evaluate the quality of qualitative research” has been continuously debated. These debates on the assessment of qualitative research have taken place in many areas of science and technology. Examples include various areas of psychology: general psychology (Madill et al., 2000 ); counseling psychology (Morrow, 2005 ); and clinical psychology (Barker & Pistrang, 2005 ), and other disciplines of social sciences: social policy (Bryman et al., 2008 ); health research (Sparkes, 2001 ); business and management research (Johnson et al., 2006 ); information systems (Klein & Myers, 1999 ); and environmental studies (Reid & Gough, 2000 ). In the literature, these debates are driven by the view that the blanket application of criteria for good qualitative research developed around the positivist paradigm is improper. Such debates are based on the wide range of philosophical backgrounds within which qualitative research is conducted (e.g., Sandberg, 2000 ; Schwandt, 1996 ). The existence of methodological diversity led to the formulation of different sets of criteria applicable to qualitative research.

Among qualitative researchers, the dilemma of deciding which measures to use to assess the quality of research is not new, especially when the virtuous triad of objectivity, reliability, and validity (Spencer et al., 2004 ) is not adequate. Occasionally, the criteria of quantitative research are used to evaluate qualitative research (Cohen & Crabtree, 2008 ; Lather, 2004 ). Indeed, Howe ( 2004 ) claims that the prevailing paradigm in educational research is scientifically based experimental research. Hypotheses and conjectures about the preeminence of quantitative research can weaken the worth and usefulness of qualitative research by neglecting the importance of fit for purpose among the research paradigm, the epistemological stance of the researcher, and the choice of methodology. Researchers have been reprimanded concerning this in “paradigmatic controversies, contradictions, and emerging confluences” (Lincoln & Guba, 2000 ).

In general, qualitative research tends to come from a very different paradigmatic stance and intrinsically demands distinctive criteria for evaluating good research and the varieties of research contributions that can be made. This review attempts to present a series of evaluative criteria for qualitative researchers, arguing that their choice of criteria needs to be compatible with the unique nature of the research in question (its methodology, aims, and assumptions). This review aims to assist researchers in identifying some of the indispensable features or markers of high-quality qualitative research. In a nutshell, the purpose of this systematic literature review is to analyze the existing knowledge on high-quality qualitative research and to verify the existence of research studies dealing with the critical assessment of qualitative research based on the concept of diverse paradigmatic stances. In contrast to the existing reviews, this review also suggests some critical directions to follow to improve the quality of qualitative research in different epistemological and ontological perspectives. This review is also intended to provide guidelines for the acceleration of future developments and dialogues among qualitative researchers in the context of assessing qualitative research.

The rest of this review article is structured as follows: Sect. Methods describes the method followed for performing this review. Section Criteria for Evaluating Qualitative Studies provides a comprehensive description of the criteria for evaluating qualitative studies. This section is followed by a summary of the strategies to improve the quality of qualitative research in Sect. Improving Quality: Strategies . Section How to Assess the Quality of the Research Findings? provides details on how to assess the quality of the research findings. After that, some of the quality checklists (as tools to evaluate quality) are discussed in Sect. Quality Checklists: Tools for Assessing the Quality . Finally, the review ends with the concluding remarks presented in Sect. Conclusions, Future Directions and Outlook , which also presents some prospects in qualitative research for enhancing its quality and usefulness in the social and techno-scientific research community.

Methods

For this review, a comprehensive literature search was performed across several databases using generic search terms such as Qualitative Research , Criteria , etc . The following databases were chosen for the literature search based on the high number of results: IEEE Xplore, ScienceDirect, PubMed, Google Scholar, and Web of Science. The following keywords (and their combinations using Boolean connectives OR/AND) were adopted for the literature search: qualitative research, criteria, quality, assessment, and validity. The synonyms for these keywords were collected and arranged in a logical structure (see Table 1 ). All publications in journals and conference proceedings from 1950 to 2021 were considered for the search. Other articles extracted from the references of the papers identified in the electronic search were also included. A large number of publications on qualitative research were retrieved during the initial screening. Hence, to restrict the results to those with a main focus on criteria for good qualitative research, an inclusion criterion was utilized in the search string.

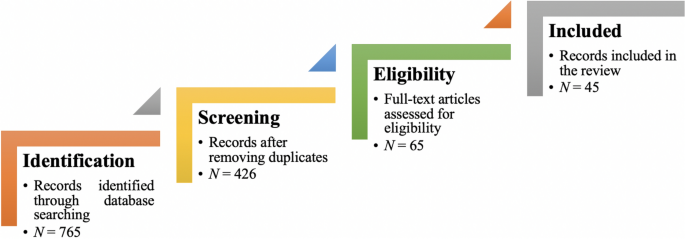
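To make the keyword combination concrete, here is a minimal Python sketch of how a Boolean search string could be assembled from keyword groups. The synonym lists, the name `build_query`, and the rule "OR within a group, AND between groups" are illustrative assumptions; the actual synonyms are those listed in Table 1, and the review does not state the exact string submitted to each database.

```python
from typing import Dict, List

# Hypothetical synonym groups for illustration only.
# The real synonyms are given in Table 1 of the review.
SYNONYM_GROUPS: Dict[str, List[str]] = {
    "qualitative research": ["qualitative research", "qualitative study"],
    "criteria": ["criteria", "standards"],
    "quality": ["quality", "rigor"],
}


def build_query(groups: Dict[str, List[str]]) -> str:
    """Join synonyms with OR inside a group and join groups with AND.

    This combination rule is an assumption, not the review's documented
    search string.
    """
    clauses = []
    for terms in groups.values():
        quoted = [f'"{term}"' for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


if __name__ == "__main__":
    print(build_query(SYNONYM_GROUPS))
```

Each database has its own query syntax (field tags, wildcard rules), so a string like this would normally be adapted per database before use.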

From the selected databases, the search retrieved a total of 765 publications. After the duplicate records were removed, the remaining 426 publications were screened for relevance, based on title and abstract, using the inclusion and exclusion criteria (see Table 2 ). Publications focusing on evaluation criteria for good qualitative research were included, whereas works that delivered theoretical concepts on qualitative research were excluded. Based on the screening and eligibility, 45 research articles were identified that offered explicit criteria for evaluating the quality of qualitative research and were found to be relevant to this review.
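The counts reported above imply two derived figures that the text does not state directly. The short Python sketch below computes them, assuming that only duplicates were removed between retrieval (765) and screening (426), and that every screened record not among the 45 included articles was excluded at the screening or eligibility stage. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewFlow:
    """Record counts at three stages of the review process (names are illustrative)."""

    retrieved: int  # records returned by the database search
    screened: int   # records left after duplicate removal
    included: int   # records kept after screening and eligibility checks

    def __post_init__(self) -> None:
        # Counts can only shrink from one stage to the next.
        if not (self.retrieved >= self.screened >= self.included >= 0):
            raise ValueError("stage counts must be non-increasing and non-negative")

    @property
    def duplicates_removed(self) -> int:
        # Assumes duplicate removal is the only step before screening.
        return self.retrieved - self.screened

    @property
    def excluded(self) -> int:
        # Records dropped during screening and eligibility combined.
        return self.screened - self.included


flow = ReviewFlow(retrieved=765, screened=426, included=45)

if __name__ == "__main__":
    print(flow.duplicates_removed, flow.excluded)
```

Under these assumptions, 339 records were removed as duplicates and 381 were excluded during screening and eligibility.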

Figure 1 illustrates the complete review process in the form of a PRISMA flow diagram. PRISMA, i.e., “preferred reporting items for systematic reviews and meta-analyses,” is employed in systematic reviews to improve the quality of reporting.

PRISMA flow diagram illustrating the search and inclusion process. N represents the number of records

Criteria for Evaluating Qualitative Studies

Fundamental criteria: general research quality.

Various researchers have put forward criteria for evaluating qualitative research, which are summarized in Table 3 . In addition, the criteria outlined in Table 4 present various approaches to evaluating and assessing the quality of qualitative work. The entries in Table 4 are based on Tracy’s “Eight big‐tent criteria for excellent qualitative research” (Tracy, 2010 ). Tracy argues that high-quality qualitative work should formulate criteria focusing on the worthiness, relevance, timeliness, significance, morality, and practicality of the research topic, and the ethical stance of the research itself. Researchers have also suggested a series of questions as guiding principles to assess the quality of a qualitative study (Mays & Pope, 2020 ). Nassaji ( 2020 ) argues that good qualitative research should be robust, well informed, and thoroughly documented.

Qualitative Research: Interpretive Paradigms