
Qualitative vs. Quantitative Research | Differences, Examples & Methods

Published on April 12, 2019 by Raimo Streefkerk . Revised on June 22, 2023.

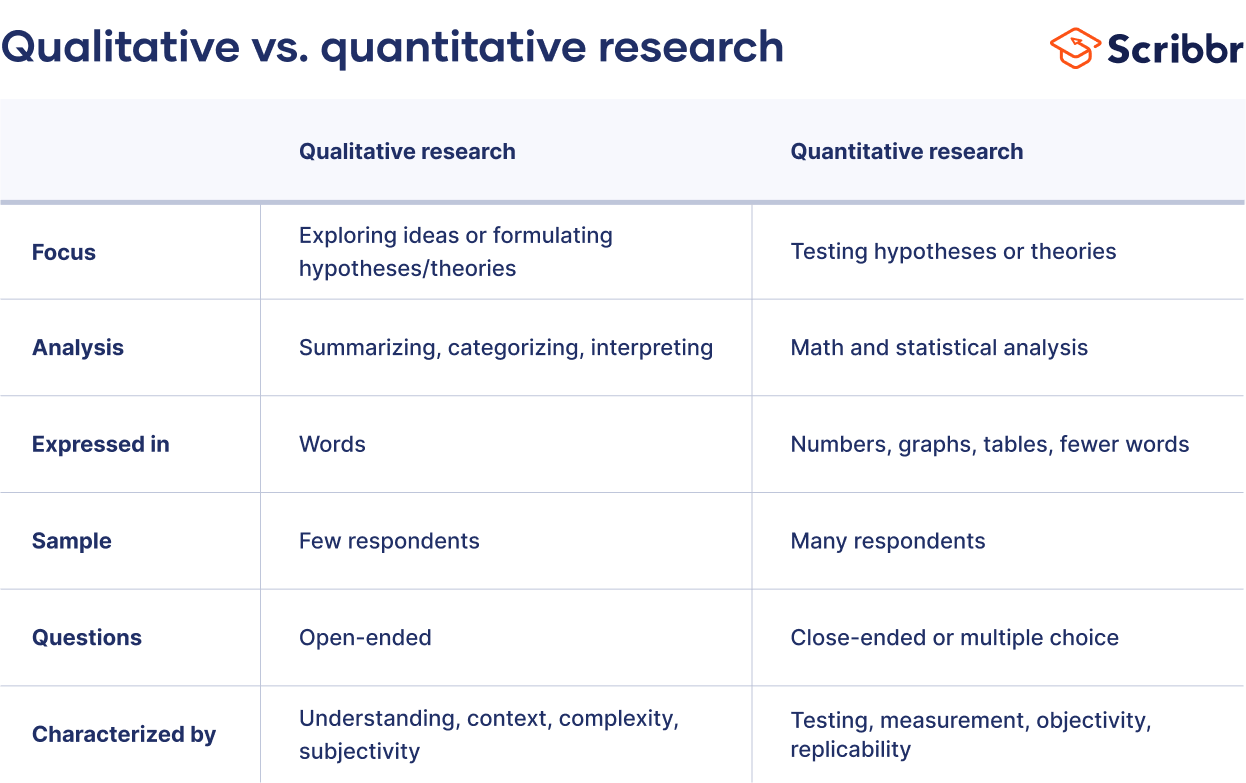

When collecting and analyzing data, quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings. Both are important for gaining different kinds of knowledge.

Common quantitative methods include experiments, observations recorded as numbers, and surveys with closed-ended questions.

Quantitative research is at risk for research biases including information bias, omitted variable bias, sampling bias, or selection bias.

Qualitative research

Qualitative research is expressed in words. It is used to understand concepts, thoughts or experiences. This type of research enables you to gather in-depth insights on topics that are not well understood.

Common qualitative methods include interviews with open-ended questions, observations described in words, and literature reviews that explore concepts and theories.


Quantitative and qualitative research use different research methods to collect and analyze data, and they allow you to answer different kinds of research questions.

Quantitative and qualitative data can be collected using various methods. It is important to use a data collection method that will help answer your research question(s).

Many data collection methods can be either qualitative or quantitative. For example, in surveys, observational studies or case studies, your data can be represented as numbers (e.g., using rating scales or counting frequencies) or as words (e.g., with open-ended questions or descriptions of what you observe).

However, some methods are more commonly used in one type or the other.

Quantitative data collection methods

- Surveys : List of closed or multiple choice questions that is distributed to a sample (online, in person, or over the phone).

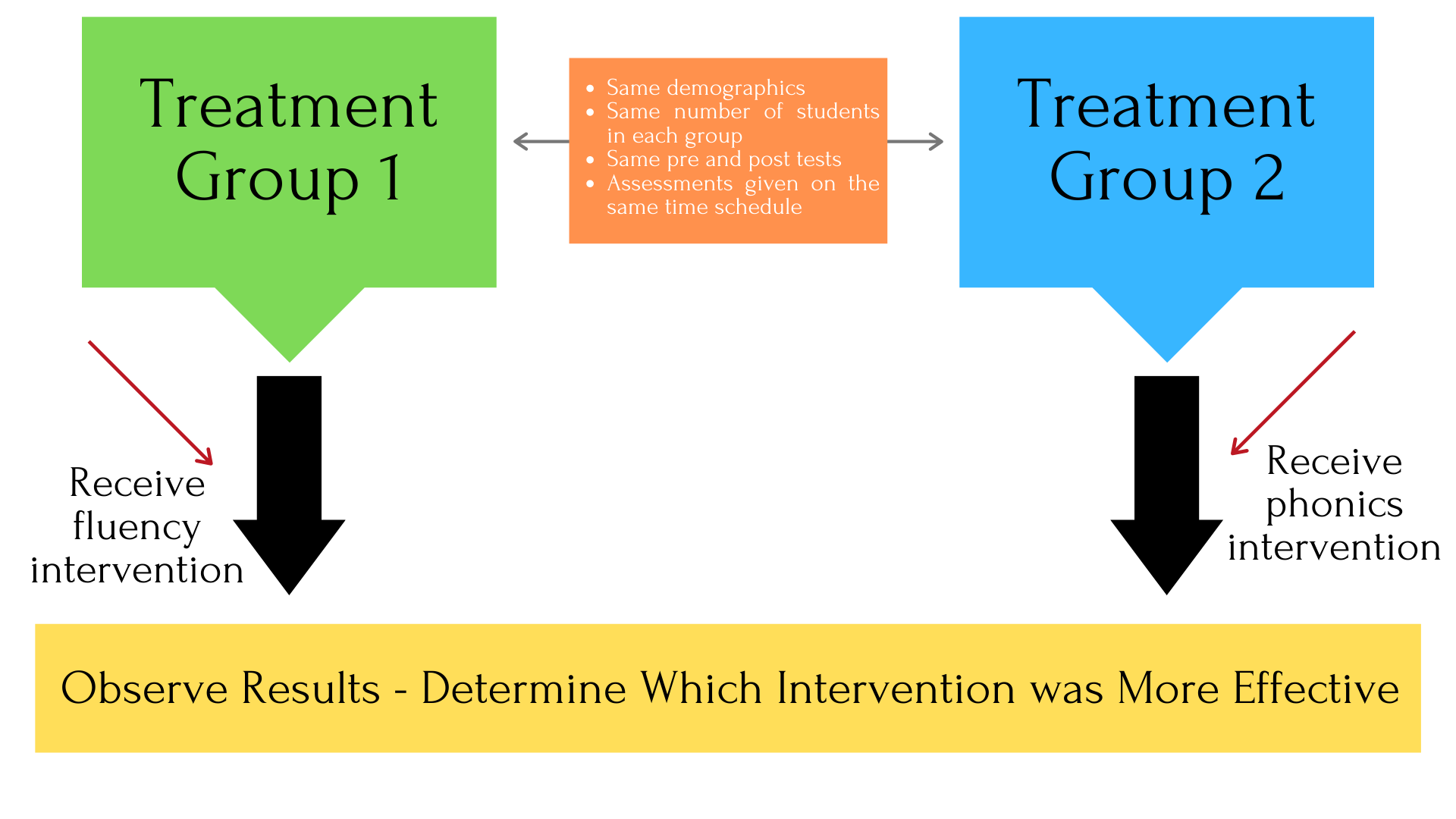

- Experiments : Situation in which different types of variables are controlled and manipulated to establish cause-and-effect relationships.

- Observations : Observing subjects in a natural environment where variables can’t be controlled.

Qualitative data collection methods

- Interviews: Asking open-ended questions verbally to respondents.

- Focus groups: Discussion among a group of people about a topic to gather opinions that can be used for further research.

- Ethnography: Participating in a community or organization for an extended period of time to closely observe culture and behavior.

- Literature review: Survey of published works by other authors.

A rule of thumb for deciding whether to use qualitative or quantitative data is:

- Use quantitative research if you want to confirm or test something (a theory or hypothesis)

- Use qualitative research if you want to understand something (concepts, thoughts, experiences)

For most research topics you can choose a qualitative, quantitative or mixed methods approach. Which type you choose depends on, among other things, whether you’re taking an inductive vs. deductive research approach; your research question(s); whether you’re doing experimental, correlational, or descriptive research; and practical considerations such as time, money, availability of data, and access to respondents.

Quantitative research approach

You survey 300 students at your university and ask them questions such as: “on a scale from 1-5, how satisfied are you with your professors?”

You can perform statistical analysis on the data and draw conclusions such as: “on average students rated their professors 4.4”.

Qualitative research approach

You conduct in-depth interviews with 15 students and ask them open-ended questions such as: “How satisfied are you with your studies?”, “What is the most positive aspect of your study program?” and “What can be done to improve the study program?”

Based on the answers you get you can ask follow-up questions to clarify things. You transcribe all interviews using transcription software and try to find commonalities and patterns.

Mixed methods approach

You conduct interviews to find out how satisfied students are with their studies. Through open-ended questions you learn things you never thought about before and gain new insights. Later, you use a survey to test these insights on a larger scale.

It’s also possible to start with a survey to find out the overall trends, followed by interviews to better understand the reasons behind the trends.

Qualitative or quantitative data by itself can’t prove or demonstrate anything, but has to be analyzed to show its meaning in relation to the research questions. The method of analysis differs for each type of data.

Analyzing quantitative data

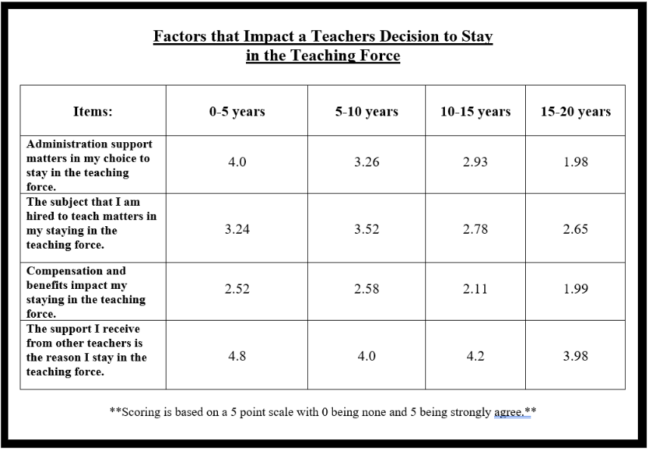

Quantitative data is based on numbers. Simple math or more advanced statistical analysis is used to discover commonalities or patterns in the data. The results are often reported in graphs and tables.

Applications such as Excel, SPSS, or R can be used to calculate things like:

- Average scores (means)

- The number of times a particular answer was given

- The correlation or causation between two or more variables

- The reliability and validity of the results

Analyzing qualitative data

Qualitative data is more difficult to analyze than quantitative data. It consists of text, images or videos instead of numbers.

Some common approaches to analyzing qualitative data include:

- Qualitative content analysis: Tracking the occurrence, position and meaning of words or phrases

- Thematic analysis: Closely examining the data to identify the main themes and patterns

- Discourse analysis: Studying how communication works in social contexts
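As a rough illustration of the mechanical part of content analysis (tracking the occurrence and position of words), the sketch below records where chosen terms appear in a set of responses. The interview excerpts, the terms of interest, and the helper name `term_positions` are all hypothetical; interpreting the meaning of each occurrence still requires a human reader.

```python
import re
from collections import defaultdict

# Hypothetical interview excerpts, invented for illustration only.
responses = [
    "The workload is heavy, but the professors are supportive.",
    "Supportive professors helped me manage the workload.",
]
# Hypothetical terms the researcher has decided to track.
terms_of_interest = {"workload", "supportive"}


def term_positions(texts, terms):
    """Map each term to (response index, word index) pairs where it occurs."""
    positions = defaultdict(list)
    for doc_index, text in enumerate(texts):
        # Lowercase and split into words so matching is case-insensitive.
        words = re.findall(r"[a-z']+", text.lower())
        for word_index, word in enumerate(words):
            if word in terms:
                positions[word].append((doc_index, word_index))
    return dict(positions)


positions = term_positions(responses, terms_of_interest)
counts = {term: len(hits) for term, hits in positions.items()}
print(positions)
print(counts)
```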


Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts and meanings, use qualitative methods.

- If you want to analyze a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how it is generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organizations.

There are various approaches to qualitative data analysis, but they all share five steps in common:

- Prepare and organize your data.

- Review and explore your data.

- Develop a data coding system.

- Assign codes to the data.

- Identify recurring themes.

The specifics of each step depend on the focus of the analysis. Some common approaches include textual analysis, thematic analysis, and discourse analysis.
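The last three steps (developing a coding system, assigning codes, and identifying recurring themes) can be sketched as follows. This is a deliberately simplified illustration: the codebook, keywords, and text segments are hypothetical, and keyword matching stands in for the careful reading a researcher would normally do when assigning codes.

```python
from collections import Counter

# Hypothetical coding system: each code is linked to a few indicator keywords.
codebook = {
    "workload": ["deadline", "workload", "stress"],
    "teaching_quality": ["professor", "lecture", "feedback"],
}

# Hypothetical prepared data: one text segment per interview excerpt.
segments = [
    "I felt constant stress before every deadline.",
    "The professor gave detailed feedback.",
    "Lectures were clear, but the workload was high.",
]


def assign_codes(segment, codebook):
    """Return the sorted codes whose keywords appear in the segment."""
    text = segment.lower()
    return sorted(
        code
        for code, keywords in codebook.items()
        if any(keyword in text for keyword in keywords)
    )


# Assign codes to every segment.
coded = [(segment, assign_codes(segment, codebook)) for segment in segments]

# Count how often each code occurs to surface recurring themes.
theme_counts = Counter(code for _, codes in coded for code in codes)
print(theme_counts.most_common())
```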

A research project is an academic, scientific, or professional undertaking to answer a research question. Research projects can take many forms, such as qualitative or quantitative, descriptive, longitudinal, experimental, or correlational. What kind of research approach you choose will depend on your topic.


Streefkerk, R. (2023, June 22). Qualitative vs. Quantitative Research | Differences, Examples & Methods. Scribbr. Retrieved April 12, 2024, from https://www.scribbr.com/methodology/qualitative-quantitative-research/


Quantitative and Qualitative Research


What is Quantitative Research?


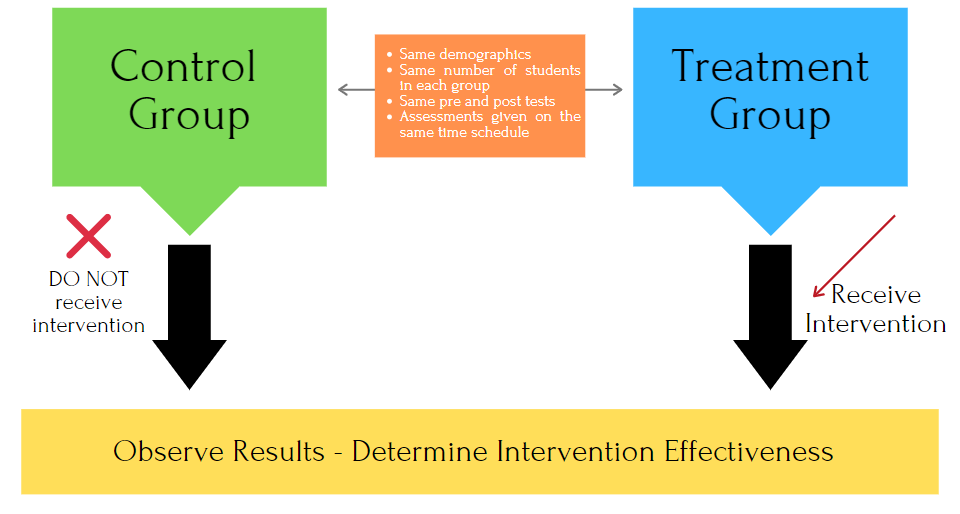

Quantitative methodology is the dominant research framework in the social sciences. It refers to a set of strategies, techniques and assumptions used to study psychological, social and economic processes through the exploration of numeric patterns. Quantitative research gathers a range of numeric data. Some of the numeric data is intrinsically quantitative (e.g. personal income), while in other cases the numeric structure is imposed (e.g. ‘On a scale from 1 to 10, how depressed did you feel last week?’). The collection of quantitative information allows researchers to conduct simple to extremely sophisticated statistical analyses that aggregate the data (e.g. averages, percentages), show relationships among the data (e.g. ‘Students with lower grade point averages tend to score lower on a depression scale’) or compare across aggregated data (e.g. the USA has a higher gross domestic product than Spain). Quantitative research includes methodologies such as questionnaires, structured observations or experiments and stands in contrast to qualitative research. Qualitative research involves the collection and analysis of narratives and/or open-ended observations through methodologies such as interviews, focus groups or ethnographies.

Coghlan, D., Brydon-Miller, M. (2014). The SAGE encyclopedia of action research (Vols. 1-2). London: SAGE Publications Ltd. doi: 10.4135/9781446294406

What is the purpose of quantitative research?

The purpose of quantitative research is to generate knowledge and create understanding about the social world. Quantitative research is used by social scientists, including communication researchers, to observe phenomena or occurrences affecting individuals. Social scientists are concerned with the study of people. Quantitative research is a way to learn about a particular group of people, known as a sample population. Using scientific inquiry, quantitative research relies on data that are observed or measured to examine questions about the sample population.

Allen, M. (2017). The SAGE encyclopedia of communication research methods (Vols. 1-4). Thousand Oaks, CA: SAGE Publications, Inc. doi: 10.4135/9781483381411

How do I know if the study is a quantitative design? What type of quantitative study is it?

Quantitative Research Designs: Descriptive non-experimental, Quasi-experimental or Experimental?

Studies do not always explicitly state what kind of research design is being used. You will need to know how to decipher which design type is used. The following video will help you determine the quantitative design type.


- Last Updated: Dec 8, 2023 10:05 PM

- URL: https://libguides.uta.edu/quantitative_and_qualitative_research

University of Texas Arlington Libraries 702 Planetarium Place · Arlington, TX 76019 · 817-272-3000


- Oncology Nursing Forum

- Number 4 / July 2014

Measurements in Quantitative Research: How to Select and Report on Research Instruments

Teresa L. Hagan

Measures exist to numerically represent degrees of attributes. Quantitative research is based on measurement and is conducted in a systematic, controlled manner. These measures enable researchers to perform statistical tests, analyze differences between groups, and determine the effectiveness of treatments. If something is not measurable, it cannot be tested.


Affiliation.

- 1 Department of Acute and Tertiary Care in the School of Nursing, University of Pittsburgh in Pennsylvania.

- PMID: 24969252

- DOI: 10.1188/14.ONF.431-433


Keywords: measurements; quantitative research; reliability; validity.

- Clinical Nursing Research / methods*

- Clinical Nursing Research / standards

- Fatigue / nursing*

- Neoplasms / nursing*

- Oncology Nursing*

- Quality of Life*

- Reproducibility of Results


Research Methods

Quantitative Research Methods


Quantitative research methods involve the collection of numerical data and the use of statistical analysis to draw conclusions. This method is suitable for research questions that aim to measure the relationship between variables, test hypotheses, and make predictions. Here are some tips for choosing quantitative research methods:

Identify the research question: Determine whether your research question is best answered by collecting numerical data. Quantitative research is ideal for research questions that can be quantified, such as questions that ask how much, how many, or how often.

Choose the appropriate data collection methods: Select data collection methods that allow you to collect numerical data, such as surveys, experiments, or observational studies. Surveys involve asking participants to respond to a set of standardized questions, while experiments involve manipulating variables to determine their effect on an outcome. Observational studies involve observing and recording behaviors or events in a natural setting.

When choosing a data collection method, it's important to consider the feasibility, reliability, and validity of the method. Feasibility refers to whether the method is practical and achievable within the available resources, while reliability refers to the consistency of the results over time and across different observers or settings. Validity refers to whether the method accurately measures what it's intended to measure.

Determine the sample size: Decide how many participants are needed to produce statistically reliable results. The sample size is the number of participants in the study. In general, the larger the sample, the more precise and reliable the results are likely to be. However, a larger sample also requires more time and resources, so choose a sample size that fits both the research question and the available resources.
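One common way to put a number on this trade-off, when the goal is to estimate a proportion from a survey, is the standard margin-of-error formula n = z² · p(1 − p) / e². The guide does not prescribe this formula; the sketch below is an illustrative assumption, and the function and parameter names are hypothetical. It assumes a simple random sample from a large population.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, confidence=0.95,
                               expected_proportion=0.5):
    """Minimum sample size to estimate a proportion within a margin of error.

    Uses n = z^2 * p * (1 - p) / e^2. An expected proportion of 0.5 is the
    most conservative choice because it maximizes p * (1 - p).
    """
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = expected_proportion
    n = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    return math.ceil(n)


# Sample size for a 5 percentage point margin of error at 95% confidence.
n_required = sample_size_for_proportion(0.05)
print(n_required)
```

Studies that compare groups or test hypotheses usually rely on a power analysis instead, which additionally requires an expected effect size.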

Choose the statistical test: Select statistical analysis techniques that match the type of data you have collected and the research question. Common techniques include descriptive statistics, correlation analysis, regression analysis, and t-tests. Descriptive statistics summarize the data using measures such as mean, standard deviation, and frequency. Correlation analysis examines the relationship between two or more variables. Regression analysis examines the relationship between one dependent variable and one or more independent variables. T-tests compare the means of two groups.

When selecting a statistical analysis technique, it's important to consider the assumptions of the technique and whether they are appropriate for the data being analyzed. It's also important to consider the level of statistical significance required to draw meaningful conclusions.
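To make the t-test concrete, the sketch below computes the test statistic for comparing the means of two independent groups. It uses Welch's version of the t-test, which does not assume equal variances; that choice, the sample data, and the function name `welch_t` are assumptions made for illustration rather than anything specified in this guide.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and approximate degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error contributed by each group (sample variance / n).
    se_a = variance(sample_a) / n_a
    se_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical satisfaction ratings from two groups of respondents.
group_a = [4, 5, 4, 5, 4]
group_b = [3, 3, 4, 2, 3]

t_stat, dof = welch_t(group_a, group_b)
print(f"t = {t_stat:.2f}, df = {dof:.2f}")
```

Turning the statistic into a p-value requires the t distribution, which the Python standard library does not provide; a statistics package or a t table is needed for that step. The test also assumes independent observations and roughly normal data within each group, which is the kind of assumption the paragraph above asks you to check.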

Strengths and Limitations

Strengths and limitations of quantitative research methods:

Strengths:

- The use of statistical analysis allows for the identification of patterns and relationships between variables.

- Provides a structured and standardized approach to data collection, allowing for replication of studies and comparisons across studies.

- It can produce reliable and valid results which are generalizable to larger populations.

- Allows for hypothesis testing, making it suitable for research questions about cause-and-effect relationships.

- It can produce numerical data, making it easy to summarize and communicate results.

Limitations:

- It may oversimplify complex phenomena by reducing them to numerical data.

- It may not capture the context and subjective experiences of individuals.

- It may not allow for the exploration of new ideas or unexpected findings.

- It may be influenced by researcher bias or the use of inappropriate statistical techniques.

- It may not account for variables that are difficult to measure or control.


- Seton Hall University

- 400 South Orange Avenue

- South Orange, NJ 07079

- (973) 761-9000


- Frontiers in Research Metrics and Analytics


Quality and Quantity in Research Assessment: Examining the Merits of Metrics


About this Research Topic


It is widely acknowledged that in the current academic landscape, publishing is the primary measure for assessing a researcher’s value. This is manifested by associating individuals' academic performance with different types of metrics, typically the number of publications or citations (quantity), rather than with the content of their works (quality). Concerns have been raised that this approach for evaluating research is causing significant ambiguity in the way science is done and how scientists' perceived performance is evaluated. For example, bibliometric indicators, such as the h-index or journal impact factor (JIF) in scientific assessments, are currently widespread. This causes multiple issues because these methods usually overlook the age or career stage of a researcher, the field size, publication, and citation cultures in different areas, any potential co-authorship, etc. Although the number of publications and citations, the h-index, the JIF, and so forth may indeed be relevant and should be considered as an indicator of visibility and popularity, they are certainly not indications of intellectual value or scientific quality by themselves.

To interrogate the conundrum between quantity and quality in research evaluation, some researchers dwell on rigorous and complementary indicators of a scientist's performance by critically analyzing a plethora of scientometric data. Others have argued that the scientific performance of an individual or group must be evaluated by peer-review processes based on their impact in their respective fields or the originality, strength, reproducibility, and relevance of their publications. Nevertheless, scientific project reviews, grant funding decisions, and university career advancement steps are often based on decisive input from non-experts who can readily use bibliometric indices. As a consequence, the newer and more robust tools or methods that consider the normalization of bibliometric indicators by the field and other influential parameters are encouraged to be shared and embraced by the research community, universities, and funding agencies. In addition, it is vital to investigate newly developed indicators or proposed quantitative methods for quality analysis and find out whether high quantity also implies high quality/significance/reputation. The role of peer review or in-depth studies in highlighting the quality based on the originality, strength, reproducibility, and relevance of the publications is additionally essential when investigating the merits of metrics in research assessment.

Keywords: conduct of research, metrics, bibliometrics, research assessment ethics, research evaluation, research quality, Responsible Research Metrics, Responsible Use of Metrics Policy

Important Note: All contributions to this Research Topic must be within the scope of the section and journal to which they are submitted, as defined in their mission statements. Frontiers reserves the right to guide an out-of-scope manuscript to a more suitable section or journal at any stage of peer review.



Measuring Research Quality and Impact - Research Guide


Measuring Research Quality and Impact

The activity of measuring and describing the quality and impact of academic research is increasingly important in Australia and around the world. Applications for grant funding or career advancement may require an indication of both the quantity of your research output and of the quality of your research.

Research impact measurement may be calculated using researcher specific metrics such as the h-index, or by quantitative methods such as citation counts or journal impact factors. This type of measurement is also referred to as bibliometrics.

This guide provides information on a range of bibliometrics including citation metrics, alternative metrics, researcher impact, journal quality and impact, book quality and impact, and university rankings.

Key Terms and Definitions

Altmetrics - Altmetrics (alternative metrics) are qualitative data that are complementary to traditional, citation-based metrics (bibliometrics), including citations in public policy documents, discussions on research blogs, mainstream media coverage, bookmarks on reference managers, and mentions on social media.

Author identifiers - Author identifiers are unique identifiers that distinguish individual authors from other researchers and unambiguously associate an author with their work.

Bibliometrics - Bibliometrics is the quantitative analysis of traditional academic literature, such as books, book chapters, conference papers or journal articles, to determine quality and impact.

Cited reference search - A cited reference search allows you to use appropriate library resources and citation indexes to search for works that cite a particular publication.

Citation index - A citation index is an index of citations between publications, allowing the user to easily establish which later documents cite which earlier documents.

Citation report - A citation report is a compilation of the bibliographic details for all of the publications a researcher has authored, along with the number of times those publications have been cited and any relevant author metrics.

Citation - A reference to or quotation from a publication or author, especially in a scholarly work.

CiteScore - CiteScore is a measure of an academic journal's impact and quality that analyses the number of citations received by a journal in one year to publications published in the three previous years, divided by the number of publications indexed in Scopus published in those same three years.

CNCI - The Category Normalized Citation Impact (CNCI) of a document is calculated by dividing an actual citation count by an expected citation rate for documents with the same document type, year of publication, and subject area, therefore creating an unbiased indicator of impact irrespective of age, subject focus, or document type.

FWCI - The field-weighted citation impact (FWCI) is an author metric which compares the total citations actually received by a researcher's publications to the average number of citations received by all other similar publications from the same research field.

h-index - The h-index is an author metric that attempts to measure both the productivity and citation impact of the publications of an author. A researcher with an index of h has published h papers, each of which has been cited in other papers at least h times.

Impact metrics - Impact metrics are quantitative analyses of the impact of research output, using a comprehensive set of measurement tools. Impact metrics include traditional citation metrics, altmetrics, measures of researcher impact, measures of publication quality and impact, and institutional benchmarking and ranking.

Journal rankings - Journal rankings are used to evaluate an academic journal's impact and quality. Journal rankings measure the place of a journal within its research field, the relative difficulty of being published in that journal, and the prestige associated with it.

Measures of esteem - Measures of esteem are additional factors which may provide evidence of research quality, including awards and prizes, membership of professional or academic organisations, research fellowships, patents or other commercial output, international collaborations, and successfully completed research grants or projects.

SJR - SCImago Journal Rank (SJR) is a measure of an academic journal's impact and quality that analyses both the number of citations received by a journal and the prestige of the journals in which these citations occur.

SNIP - Source Normalized Impact per Paper (SNIP) is a measure of an academic journal's impact and quality that analyses contextual citation impact by weighting citations based on the total number of citations in a subject field.
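Two of the definitions above translate directly into short calculations. The sketch below follows the h-index and CNCI definitions as worded in this guide; the function names and the example citation counts are hypothetical, and real values depend on the citation database used to obtain the counts and the expected citation rates.

```python
def h_index(citation_counts):
    """Largest h such that h publications each have at least h citations."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, citations in enumerate(ranked, start=1):
        if citations >= rank:
            h = rank
        else:
            break
    return h


def cnci(actual_citations, expected_citations):
    """Category Normalized Citation Impact of a single document.

    expected_citations is the expected citation rate for documents of the
    same type, publication year, and subject area; it must be supplied by
    the citation database and is not computed here.
    """
    return actual_citations / expected_citations


# Hypothetical citation counts for one researcher's five publications.
researcher_citations = [10, 8, 5, 4, 3]
print(h_index(researcher_citations))

# Hypothetical document cited 12 times where 8 citations were expected.
print(cnci(12, 8.0))
```

A CNCI above 1 indicates that a document has been cited more often than expected for comparable documents, and a value below 1 indicates fewer citations than expected.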

Frontiers in Research Metrics and Analytics (DOAJ) - publishes rigorously peer-reviewed research on the development, applications, and evaluation of scholarly metrics, including bibliometric, scientometric, informetric, and altmetric studies.

Metrics Toolkit (RMIT) - a resource for researchers and evaluators that provides guidance for demonstrating and evaluating claims of research impact.

Research Metrics Quick Reference (Elsevier) - a consolidated quick reference to some key research impact metrics.

Using Bibliometrics: A Guide to Evaluating Research Performance with Citation Data 2015 (Thomson Reuters) - A guide to evaluating research performance for researchers, universities and libraries.

- Meaningful Metrics : A 21st Century Librarian's Guide to Bibliometrics, Altmetrics, and Research Impact by Robin Chin Roemer & Rachel Borchardt ISBN: 9780838987568 Publication Date: 2015

- The Research Impact Handbook by Mark S. Reed Call Number: 300.72 REE 2018 ISBN: 9780993548246 Publication Date: 2018 2nd edition

Research Support

Researchers at Murdoch University are supported by the Library and the Research and Innovation Office.

Ask our Librarians for advice on measuring research quality and impact.


- Last Updated: Feb 28, 2024 3:25 PM

- URL: https://libguides.murdoch.edu.au/measure_research


Indicators of research quality, quantity, openness, and responsibility in institutional review, promotion, and tenure policies across seven countries

Handling Editor: Ludo Waltman

- Funder(s): H2020 Science with and for Society

- Award Id(s): 824612


Nancy Pontika, Thomas Klebel, Antonia Correia, Hannah Metzler, Petr Knoth, Tony Ross-Hellauer; Indicators of research quality, quantity, openness, and responsibility in institutional review, promotion, and tenure policies across seven countries. Quantitative Science Studies 2022; 3 (4): 888–911. doi: https://doi.org/10.1162/qss_a_00224


The need to reform research assessment processes related to career advancement at research institutions has become increasingly recognized in recent years, especially to better foster open and responsible research practices. Current assessment criteria are believed to focus too heavily on inappropriate criteria related to productivity and quantity as opposed to quality, collaborative open research practices, and the socioeconomic impact of research. Evidence of the extent of these issues is urgently needed to inform actions for reform, however. We analyze current practices as revealed by documentation on institutional review, promotion, and tenure (RPT) processes in seven countries (Austria, Brazil, Germany, India, Portugal, the United Kingdom and the United States). Through systematic coding and analysis of 143 RPT policy documents from 107 institutions for the prevalence of 17 criteria (including those related to qualitative or quantitative assessment of research, service to the institution or profession, and open and responsible research practices), we compare assessment practices across a range of international institutions to significantly broaden this evidence base. Although the prevalence of indicators varies considerably between countries, overall we find that currently open and responsible research practices are minimally rewarded and problematic practices of quantification continue to dominate.

https://publons.com/publon/10.1162/qss_a_00224

The need to reform research assessment processes related to career advancement at research institutions has become increasingly recognized in recent years, especially to better foster open and responsible research practices 1 . In particular, it is claimed that current practices focus too much on quantitative measures over qualitative measures ( Colavizza, Hrynaszkiewicz et al., 2020 ; Malsch & Tessier, 2015 ). Misuse of quantitative research metrics, including the Journal Impact Factor, is among the most pressing issues for equitable research assessment in general, and for assessment that aims to foster open and responsible research in particular. Therefore, recent years have seen a focus on attempts to understand how principles and practices of openness and responsibility are currently valued in the reward and incentive structures of research-performing organizations, especially by direct examination of organizations’ review, promotion, and tenure 2 (RPT) policies. Such studies have heretofore focused on specific contexts, however. Work led by Erin McKiernan and Juan Pablo Alperin examined policies in place across a range of types of institutions in the United States and Canada ( Alperin, Muñoz Nieves et al., 2019 ; Alperin, Schimanski et al., 2020 ; McKiernan, Bourne et al., 2016 ; Niles, Schimanski et al., 2020 ). Rice, Raffoul et al. (2020) studied criteria used across a range of countries, but only within biomedical sciences faculties. Hence, further work is needed to describe types of criteria in place across a range of institutional types internationally.

This paper aims to fill this gap. Our primary research question can be formulated as “What quantitative and qualitative criteria for review, promotion, and tenure are in use across research institutions in a purposive sample of seven countries internationally?” Sub-questions include “How prevalent are criteria related to open and responsible research in these contexts?,” “How prevalent are potentially problematic practices (e.g., use of publication quantity or journal impact factors)?,” and “What trends can be observed across this sample?”

To answer these questions, we investigate the prevalence of qualitative and quantitative indicators in RPT policies across seven countries: Austria, Brazil, Germany, India, Portugal, the United Kingdom and the United States. This involved manually collecting 143 RPT policy documents from 107 institutions. These documents were then systematically coded for the inclusion of language related to 17 elements 3 (including those related to qualitative or quantitative assessment of research, service to the institution or profession, and open and responsible research practices) using a predefined data-charting form. Directly comparing the indicators and criteria in place at such a range of international institutions hence aims to broaden the evidence base of the range of practices currently in place 4 .

2.1. Research Assessment and Researcher Motivations

Institutional policies regarding RPT typically focus on three broad areas: research, teaching, and service (both to the profession and the institution). The relative importance of each varies across institutions and has also changed over time ( Gardner & Veliz, 2014 ; Youn & Price, 2009 ). In the European context, a recent survey of researchers investigated indicators widely used at EU institutions for review, promotion, and tenure. The most common factors used in research assessment were (according to survey respondents): number of publications (68%), patents and securing funds (35%), teaching activities (34%), collaboration with other researchers (32%), collaboration with industry (26%), participation in scientific conferences (31%), supervision of young researchers (25%), awards (23%), and contribution to institutional visibility (17%) ( European Commission, Directorate General for Research and Innovation, 2017 ).

When it comes to the assessment of research contributions, reflecting the common idiom “publish or perish,” publication in peer-reviewed venues remains central. Primary publication types vary across disciplines. Although journal articles dominate in Science, Technology, Engineering, and Mathematics (STEM) subjects, monographs or edited collections have greater importance in the Humanities and Social Sciences ( Adler, Ewing, & Taylor, 2009 ; Alperin et al., 2020 ). Within Computer Science, meanwhile, publication in conference proceedings is the most important factor ( McGill & Settle, 2011 ). However, irrespective of which type of publication is favored, institutions tend to position productivity (often quantified via metrics) as a defining feature in RPT policies ( Gardner & Veliz, 2014 ). The ways in which this emphasis on productivity and quantification influences academics’ focus and shapes behaviors, often in detrimental ways, is worth expanding upon to understand how current trends in RPT policies may be limiting the uptake of open and responsible research.

Institutional committees tasked with determining whether research contributions are sufficient for promotion, review, or tenure face something of a dilemma. Although the quantity of publications is comparatively easy to assess, measuring their quality is a more difficult challenge. Ideally, committees would be able to read each of the contributions themselves to make their own firsthand judgments on the matter. However, the mass of material created, together with increased research specialization that drastically reduces the number of experts able to make such quality judgments, means that proxy indicators for quality are usually sought. Here, two factors are particularly popular: publication venue and citation counts.

In perceptions of the prestige of academic journals, the Journal Impact Factor has assumed a particularly pernicious role. Created by Eugene Garfield of the Institute for Scientific Information, the Journal Impact Factor calculates an average of citations per article within the last 2 years to provide a metric of the relative use of academic literature at the journal level. Originally created to assist library decisions regarding journal subscriptions, the Journal Impact Factor soon came to be used as a proxy for relative journal importance by research assessors and researchers themselves ( Adler et al., 2009 ; Walker, Sykes et al., 2010 ). Various criticisms have been levelled at the Journal Impact Factor, most prominently that relatively few outlier publications with many citations skew distributions such that most publications in that journal fall far below the mean. Additional criticisms include that differences in citation practices between (and even within) fields make the Journal Impact Factor a poor tool for comparison, that it is susceptible to gaming by questionable editorial practices, and suffers a lack of transparency and reproducibility ( Fleck, 2013 ). Nonetheless, its use as a proxy for research quality in research assessment became commonplace ( Gardner & Veliz, 2014 ; McKiernan, Schimanski et al., 2019 ). McKiernan et al. (2019) studied RPT documents and found that 40% of North American research-intensive institutions mentioned the Journal Impact Factor or closely related terms. Accordingly, researchers commonly list a journal’s impact factor as a key factor they take into account when deciding where to publish ( Niles et al., 2020 ).
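The skew criticism can be made concrete with a small sketch. The citation counts below are invented for illustration only, and `impact_factor` is a hypothetical helper that implements the simplified "average citations per article" reading given above, not the full official calculation.

```python
from statistics import mean, median

def impact_factor(citations_per_article):
    """Simplified journal-level average: mean citations per article.

    Assumption: each entry is the number of citations one article from the
    two-year window received. This is an illustrative simplification.
    """
    return mean(citations_per_article)

# Hypothetical journal: 18 modestly cited articles and 2 highly cited outliers.
citations = [0, 0, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3, 4, 4, 4, 5, 5, 120, 150]

jif = impact_factor(citations)
typical = median(citations)
share_below_mean = sum(c < jif for c in citations) / len(citations)

print(f"mean (JIF-like) = {jif}, median = {typical}, "
      f"share of articles below the mean = {share_below_mean:.0%}")
```

In this toy distribution the two outliers pull the mean well above the median, so the journal-level average says little about a typical article in the journal.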

Citation counts at the article level are also often used as a proxy for research quality within RPT processes ( Adler et al., 2009 ; Brown, 2014 ). Indeed, Alperin et al. (2019) found that such indicators were mentioned by the vast majority of institutions. However, citations have been widely criticized for being too narrow a measure of research quality ( Curry, 2018 ; Hicks, Wouters et al., 2015 ; Wilsdon, Allen et al., 2015 ). The application of particularistic standards is especially perilous for early-career researchers who have yet to build their profile. By using citation metrics to evaluate research contributions, initial positive feedback leads to the self-reinforcement loop known as the Matthew Effect ( Wang, 2014 ). Moreover, indicators such as the h -index are highly reactive ( Fleck, 2013 ) and therefore risk reifying monopolization of resources (prestige, recognition, money) in the hands of a select elite. The h -index was designed as a measurement tool to showcase the consistency of the cited researchers but creates a disadvantage for early-career researchers and neglects the diversity of citation rates across scientific disciplines and subdisciplines ( Costas & Bordons, 2007 ).
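For readers unfamiliar with the h-index, a minimal sketch of its standard definition (the largest h such that h publications each have at least h citations) illustrates why it disadvantages researchers with short publication lists. The two citation records are hypothetical.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h

# Hypothetical records: an established researcher with many papers and an
# early-career researcher with few, but highly cited, papers.
established = [45, 30, 22, 15, 12, 9, 8, 6, 3, 1]
early_career = [60, 40, 5]

print(h_index(established), h_index(early_career))
```

Because the index can never exceed the number of papers, the early-career record is capped at 3 regardless of how often its first two papers are cited.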

2.2. Research Assessment and Open and Reproducible Research

Multiple initiatives in the last decade have sought to raise the alarm on the overuse of quantitative indicators and highlight the need to consider a broader range of practices (beyond publications). For instance, the San Francisco Declaration on Research Assessment (DORA) specifically criticized use of the Journal Impact Factor in research assessment 5 . The 10 principles of the Leiden Manifesto for Research Metrics ( Hicks et al., 2015 ) sought to reorient the use of metrics by critiquing their “misplaced concreteness and false precision,” arguing that quantitative evaluation should be used as a support for “qualitative, expert assessment,” with strict commitments to transparency.

Such critiques of overquantification have developed alongside movements to foster open and reproducible research. These two trends meet where advocates of Open Science or Responsible Research & Innovation (RRI) identify concern among researchers that uptake of open and responsible research practices will negatively impact their career progress ( Adler et al., 2009 ; Migheli & Ramello, 2014 ; Peekhaus & Proferes, 2015 ; Rodriguez, 2014 ; Wilsdon et al., 2015 ).

As a result, recent research has investigated if and how criteria relating to open and responsible research practices are rewarded in RPT policies. In particular, the influential “Promotion, Review, and Tenure” project headed by Erin McKiernan and Juan Pablo Alperin has examined these issues in depth by studying a corpus of RPT policy documents from 129 universities in the United States and Canada. This project found that aspects related to open and reproducible research were rare or undervalued. Alperin et al. (2019) found, for example, that only 6% of RPT policies mentioned “Open Access,” often in a negative way. Public engagement, although mentioned in a large number of policies, was nonetheless undervalued by associating it with service, rather than research work. Meanwhile, 40% of policies from research-intensive institutions mentioned the Journal Impact Factor in some way, with the overwhelming majority of those (87%) supporting its use in at least one RPT document and none heavily criticizing it ( McKiernan et al., 2019 ).

A similar study by Rice et al. (2020) studied the presence of “traditional” (e.g., publication quantity) and “nontraditional” (e.g., data-sharing) criteria used for promotion and tenure in biomedical sciences faculties. In that context, the authors found that mentions of practices associated with open research were very rare (data-sharing in just 1%, with Open Access publishing, registering research, and adherence to reporting guidelines mentioned in none). Most prevalent were traditional criteria including peer reviewed publications (95%), grant funding (67%), national or international reputation (48%), authorship order (37%), Journal Impact Factor (28%), and citations (26%).

Although general trends, including prevalence of (sometimes problematic) quantitative measures and lack of recognition for open and responsible research practices, can be observed across these two groups of work, nonetheless there are important nuances we should take into account. In the biomedical context, Rice et al. (2020) saw “notable differences” in the availability of guideline documents across continents and “subtle differences in the use of specific criteria” across countries. In the United States/Canada context, meanwhile, differences across types of institutions were observed—with, for instance, “research-intensive” institutions being more likely to encourage use of the Journal Impact Factor ( McKiernan et al., 2019 ). These differences, across institutional types and national boundaries, require further investigation.

This current study complements and extends this work. Such work is crucially important, especially as reform of rewards and recognition processes is now a policy priority, particularly in Europe. Vanguard institutions such as Utrecht University in the Netherlands are already implementing such reforms ( Woolston, 2021 ). The Paris Call on Research Assessment, announced at the Paris Open Science European Conference (organized by the French Presidency of the Council of the European Union) in February 2022, calls for evaluating the “full range of research outputs in all their diversity and evaluating them on their intrinsic merits and impact” ( Paris Call on Research Assessment, 2022 ). The Paris Call also sought the formation of a “coalition of the willing” to build consensus and momentum across institutions. The European Commission is currently building such a coalition ( Research and Innovation, 2022 ). This paper further contributes to the evidence base to inform such reform.

We assembled and qualitatively analyzed RPT policy documents from academic institutions in seven countries (Austria, Brazil, Germany, India, Portugal, the United Kingdom, and the United States).

3.1. Sampling

In selecting countries, we used purposive sampling, considering candidate countries whose primary language was covered by the research team (i.e., English, German, or Portuguese). Although automated translations can go a long way in basic understanding, the task at hand required knowledge of the policy landscape of the studied countries, as well as the ability for precise reading of source materials. We first identified four target countries, based on our European focus and our team’s familiarity with the language (English, German, Portuguese) and policy landscapes of specific countries. In addition, the United States was included as a representative of a leading research country and to allow comparisons with previous research ( Alperin et al., 2019 ). Furthermore, we included India and Brazil as examples of large “low- and middle-income countries” based on gross national income per capita as published by the World Bank 6 . They play a growing role in research, and broaden our scope to include Asia and South America. We acknowledge that our sample of countries cannot be considered random or representative of the situation globally. However, given the current lack of knowledge of RPT criteria in place across national contexts, we nonetheless believe that our sample adds richly to current knowledge.

To include representative numbers of institutions of perceived high and low prestige, we used the Times Higher Education World University Rankings (WUR) 2020 to select institutions. Institutions from each selected country were sorted based on their relative WUR performances in the categories “Research” and “Citations.” We then divided each category into three equally sized subcategories, defining them as “High-,” “Medium-,” and “Low-” performing institutions. Next, we calculated the median of each subcategory and selected the institutions that were closest to the median as representatives of this category. We included both the “Research” and “Citations” fields from the WUR, as both of these are research-related indicators. Duplicate entries of the same institution appearing in both categories were replaced by the next available institution in the “Citations” category. Our sampling procedure resulted in a sample of 107 institutions across seven countries ( Table 1 ).
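The tercile-and-median selection described above can be sketched as follows. This is only an illustration for a single ranking category: the institution names and scores are invented, the function names are hypothetical, and the handling of duplicates across the “Research” and “Citations” categories is not reproduced.

```python
from statistics import median

def split_into_terciles(ranked):
    """Split a list sorted from best to worst into three roughly equal groups."""
    n = len(ranked)
    cut1, cut2 = n // 3, 2 * n // 3
    return {"High": ranked[:cut1], "Medium": ranked[cut1:cut2], "Low": ranked[cut2:]}

def closest_to_median(group, k):
    """Return the k (name, score) pairs whose score is nearest the group median."""
    mid = median(score for _, score in group)
    return sorted(group, key=lambda item: abs(item[1] - mid))[:k]

def sample_institutions(scores, k=1):
    """Pick k representative institutions per performance tercile."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return {
        label: [name for name, _ in closest_to_median(group, k)]
        for label, group in split_into_terciles(ranked).items()
    }

# Hypothetical "Research" scores for nine institutions in one country.
scores = {"A": 90, "B": 85, "C": 80, "D": 60, "E": 55, "F": 50, "G": 30, "H": 20, "I": 10}
selected = sample_institutions(scores, k=1)
print(selected)
```

Selecting around the median of each tercile, rather than at its extremes, is what makes the chosen institutions representatives of “high,” “medium,” and “low” performance within the ranking.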

Table 1. Number of institutions per country: the total number of institutions sampled relative to the number of institutions listed per country in WUR

Although selection of institutions based on university rankings is an often-used strategy (e.g., Rice et al. (2020) use the Leiden Ranking in a similar approach), it is not without flaws. First, university rankings have been criticized for their reliance on biased and unreliable reputational survey data ( Waltman, Calero-Medina et al., 2012 ) and issues of gaming and selective reporting ( Gadd, 2021 ). Second, rankings such as the WUR only include the most prominent institutions and leave out many institutions based on partly arbitrary criteria (e.g., how many yearly publications they need to be included). The reported groups of “high,” “medium,” and “low”-ranked universities are only relative to the set of institutions included in the ranking, and not academia as a whole. We see our use of the WUR as a pragmatic approach to reproducibly sampling universities. This does not negate their deficiencies for guiding prospective students to choose institutions or informing policy decisions.

3.2. Data Collection

Policy documents were collected using a shared search protocol. First, we used Google to search for the institution name along with various combinations of keywords. Table 2 shows the keywords used to identify policies in the three languages: English, German, and Portuguese.

Table 2. Search key terms in English, German, and Portuguese that were used to retrieve related RPT policies

We did not collect advertisements for job descriptions even though these could include some insightful requirements applicable to the RPT policies.

We included RPT policies only and not other policies such as Ethics, Diversity, and OA, where similar concepts could appear.

Policies could apply to any post-PhD researcher career stage. The collected policies evaluated various research-related positions. For example, in the United Kingdom, some institutions have separate policies for associate professors, full professors, and readers. In the United States, there are separate policies for tenured and nontenured staff. In Austria, there are policies for habilitation (qualification for teaching, needed for promotion to professor) and qualification agreements for tenure track (associate professors), but no promotion to full professor exists. In India, we could often not find specific policies, but rather the evaluation forms that researchers use to apply for promotion. In these cases, we therefore analyze the evaluation forms instead.

Some institutions have separate policies for all researcher categories (i.e., separate policies for lecturers, assistant professors, associate professors, professors, and so on), but others have a uniform policy covering all positions. Hence, the number of institutions is smaller than the total number of policies collected ( Table 1 ). Where more than one policy was identified for an institution, we assessed the indicators separately for each policy and counted an indicator as “fulfilled” when it appeared in at least one policy.
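The “at least one policy” rule amounts to a logical OR over the policies of an institution. A minimal sketch, with invented institutions and indicator names and hypothetical function names, might look like this:

```python
def aggregate_by_institution(policies):
    """Collapse policy-level codes (0/1) to institution level.

    An indicator counts as fulfilled (1) for an institution if it is coded 1
    in at least one of that institution's policies.
    """
    aggregated = {}
    for institution, codes in policies:
        merged = aggregated.setdefault(institution, {})
        for indicator, present in codes.items():
            merged[indicator] = max(merged.get(indicator, 0), present)
    return aggregated

def prevalence(aggregated, indicator):
    """Share of institutions for which the indicator is fulfilled."""
    hits = sum(codes.get(indicator, 0) for codes in aggregated.values())
    return hits / len(aggregated)

# Hypothetical data: institution X has two policies, institution Y has one.
policies = [
    ("X", {"service": 1, "journal_metrics": 0}),
    ("X", {"service": 0, "journal_metrics": 1}),
    ("Y", {"service": 1, "journal_metrics": 0}),
]
by_institution = aggregate_by_institution(policies)
print(by_institution, prevalence(by_institution, "journal_metrics"))
```

Aggregating this way means prevalence figures are expressed per institution, so institutions with many separate policies do not weigh more heavily than those with a single uniform policy.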

We were sometimes unable to obtain policy documents for target institutions. Specifically, where access was restricted to members of the institution only, data collectors emailed the institution’s human resources department to ask for a copy of the policy. If no response was received within 10 days, data collectors recorded this information and sampled the next institution from the list for that country and stratum until a sufficient number of policies was obtained. Table 3 shows the institutions that did not have a public policy per country.

Table 3. Total number of institutions we checked with no policy

An initial round of document collection occurred during the period November 2019 to March 2020. The sample was then further extended between March and April 2021. As some institutions had several distinct policies relating to different career stages, 143 total RPT policy documents were collected for analysis from the 107 institutions.

3.3. Data Charting

Data were extracted from the policy documents using a standardized data-charting form. The form was devised in multiple rounds of iteration. Key indicators for inclusion were identified from various sources, including the MoRRI indicators ( MoRRI, 2018 ) and a group of studies performed in the North American context by Alperin et al. (2019) , as well as from the surveyed literature. We collected and examined 17 different indicators ( Table 4 ), including “traditional” assessment indicators relating to quantification and quality of publications, and a set of “alternative” indicators relating to open and responsible research, and related issues such as gender equality and Citizen Science. In addition, information was gathered on the date policies came into effect, the academic positions (e.g., tenure track, professor, lecturer, senior lecturer) or types of processes (e.g., promotion, review, tenure) they governed.

Table 4. Overview of data-charting form main elements (“Are the following mentioned as being taken into account in the promotion/evaluation procedures as stated in the policy?”)

Five coders were involved, all with competence in English, three in German, and one in Portuguese. Policies were assigned based on language competences. They coded the presence (1) or absence (0) of each indicator in each policy, and copied the sentence mentioning the indicator and the ones before and after. Each document was coded by one individual. To assess intercoder reliability, an independent coder (TRH) performed a reviewer audit of a random sample of 10% of the total number of institutions. Comparing this second round of review to the first responses revealed a high intercoder reliability of 96.78%.
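The text does not state how the reliability figure was computed. One common and simple reading is raw percent agreement between the first coding and the audit; the sketch below assumes that reading, and the codes shown are invented.

```python
def percent_agreement(first_coder, second_coder):
    """Percentage of coding decisions on which two coders agree.

    Assumption: both lists hold 0/1 codes for the same indicator-policy
    pairs in the same order. Chance agreement is not corrected for.
    """
    if len(first_coder) != len(second_coder):
        raise ValueError("both coders must rate the same items")
    if not first_coder:
        raise ValueError("no items to compare")
    matches = sum(a == b for a, b in zip(first_coder, second_coder))
    return 100 * matches / len(first_coder)

# Hypothetical codes for ten indicator-policy pairs.
original = [1, 0, 0, 1, 1, 0, 0, 0, 1, 0]
audit = [1, 0, 0, 1, 0, 0, 0, 0, 1, 0]
agreement = percent_agreement(original, audit)
print(f"{agreement:.2f}% agreement")
```

Percent agreement is easy to interpret but can look high when most codes are 0, as is likely with rarely mentioned indicators; chance-corrected statistics such as Cohen's kappa address this.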

Before carrying out the analysis, several steps were taken to ensure data integrity and consistency. Data were originally collected via spreadsheets and subsequently collated using R to avoid copy-paste errors. We checked that every indicator was present for each policy; that in cases where an indicator had been found (coded as 1) a text excerpt was present; and that in cases where no indicator had been found (coded as 0) no text excerpt was present. The inconsistencies found were checked and resolved by TK.
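The three consistency checks can be expressed as a short validation routine. The authors carried out their checks in R; the Python sketch below is only an illustration, and the record layout, indicator names, and function name are assumptions.

```python
def find_inconsistencies(records, expected_indicators):
    """Return (policy_id, indicator, problem) tuples for every failed check.

    Assumed layout: records maps a policy id to {indicator: (code, excerpt)},
    where code is 0 or 1 and excerpt is a string or None.
    """
    problems = []
    for policy_id, codes in records.items():
        # Check 1: every indicator must be coded for every policy.
        for indicator in sorted(expected_indicators - set(codes)):
            problems.append((policy_id, indicator, "indicator missing"))
        for indicator, (code, excerpt) in codes.items():
            has_excerpt = bool(excerpt and excerpt.strip())
            if code not in (0, 1):
                problems.append((policy_id, indicator, "invalid code"))
            elif code == 1 and not has_excerpt:
                # Check 2: a found indicator needs a supporting excerpt.
                problems.append((policy_id, indicator, "coded 1 but no excerpt"))
            elif code == 0 and has_excerpt:
                # Check 3: an absent indicator must not carry an excerpt.
                problems.append((policy_id, indicator, "coded 0 but excerpt present"))
    return problems

# Hypothetical records with one clean policy and two problematic ones.
records = {
    "p1": {"service": (1, "Candidates organise conferences."), "patents": (0, "")},
    "p2": {"service": (1, ""), "patents": (0, "stray text")},
    "p3": {"service": (0, None)},
}
issues = find_inconsistencies(records, {"service", "patents"})
for issue in issues:
    print(issue)
```

Flagged records would then be inspected and resolved by hand, as the text describes, rather than corrected automatically.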

To facilitate the review of our results and the reuse of the data, we translated all non-English excerpts to English in a two-step procedure. First, we used DeepL 9 to obtain an initial translation of the excerpt. A native speaker then checked the translation, revising to ensure that meaning, context, and use of special terms mirrored the original. The validated translation was then recorded alongside the original text for subsequent analysis.

3.4. Data Analysis

All data analysis was conducted using R ( R Core Team, 2021 ), with the aid of many packages from the tidyverse ( Wickham, Averick et al., 2019 ), including ggplot2 for visualizations ( Wickham, 2016 ). Computational reproducibility of the analysis is ensured through the use of the drake package ( Landau, 2018 ). The analyses presented in this paper are all exploratory and have not been preregistered. To enable the comparison of the indicators’ presence against each other, we rely on Multiple Correspondence Analysis (MCA) ( Greenacre & Nenadic, 2018 ; Nenadic & Greenacre, 2007 ). Correspondence analysis and its extension MCA are similar to principal component analysis (PCA) in mapping the relationships between variables to a high-dimensional Euclidean space. The goal of the method is then “to redefine the dimensions of the space so that the principal dimensions capture the most variance possible, allowing for lower-dimensional descriptions of the data” ( Blasius & Greenacre, 2006 , p. 5). The obtained dimensions can therefore be inspected for their alignment to specific variables, enabling conclusions about the main trends found in the data. MCA thus offers a visual representation of contingency tables and is well suited for the categorical data collected in this study. Furthermore, MCA allows us to investigate the relationship between indicators and countries jointly. Several considerations apply when analyzing data via MCA.

First, we apply MCA in a strictly exploratory fashion. Inspecting its visual output facilitates interpretation of the relationship between indicators and how they relate to countries, but we do not conduct any testing of hypotheses. Second, the graphical solution offered by MCA maximizes deviations from the average, allowing for statements of the prevalence of indicators relative to one another. For statements about absolute frequencies of indicators across countries we rely on MCA’s numerical output (see Supporting information ), as well as cell frequencies found in the corresponding contingency tables. All supporting data and required code are available via Zenodo ( Pontika, Klebel et al., 2022b ).
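For orientation, the core computation behind correspondence analysis of an indicator matrix can be written compactly. The notation below is an assumption introduced here for illustration (it does not appear in the original), and the exact MCA variant used in the analysis, for example one based on the Burt matrix or on adjusted inertias, may differ from this textbook form.

```latex
% Assumed notation: Z is the indicator matrix (rows = institutions,
% columns = categories such as "indicator present" / "indicator absent").
\begin{align}
  P &= \frac{Z}{\mathbf{1}^{\top} Z \mathbf{1}}, &
  r &= P\mathbf{1}, &
  c &= P^{\top}\mathbf{1}, \\
  S &= D_r^{-1/2}\,\bigl(P - r c^{\top}\bigr)\,D_c^{-1/2}, &
  S &= U \Sigma V^{\top}, \\
  F &= D_r^{-1/2} U \Sigma, &
  G &= D_c^{-1/2} V \Sigma, \\
  \text{share of inertia on dimension } k &= \frac{\sigma_k^{2}}{\sum_j \sigma_j^{2}}.
\end{align}
```

Here $D_r$ and $D_c$ are diagonal matrices of the row and column masses $r$ and $c$, $F$ and $G$ hold the principal coordinates of institutions and categories, and $\sigma_k$ are the singular values. Because $S$ is built from deviations $P - r c^{\top}$ from the average profile, the origin of the resulting map corresponds to the sample average, which is why positions in the plot are read as deviations from it.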

Assessing the prevalence of traditional and alternative (especially open/responsible research-related) criteria across policies of 107 institutions, we find substantial differences in their prevalence ( Figure 1 ). While 72% of institutions mention “service to the profession,” no institution mentions data sharing or Open Access publishing. Overall, traditional indicators, related to the profession or to scientific publications, are much more common than indicators related to open and responsible research.

Figure 1. Overall prevalence of indicators across all institutions/countries. In cases where an institution had more than one policy, we aggregated the policies. An institution was counted as mentioning a given indicator if at least one of the policies mentioned it.

In terms of more traditional indicators, by far the most common indicator mentioned in the policies was service to the profession, which includes activities such as organizing conferences or mentoring PhDs (72%). Extending the concept of professional service, almost half of the policies also mention peer review & editorial activities (47%). A second important aspect among the sampled policies is that of scientific publications, with frequent mentions of the number of publications, or publication quality. Although a call to rate quality over quantity is not uncommon in the policies, problematic practices were still worryingly prevalent. For example, journal metrics such as the Journal Impact Factor were mentioned in at least a quarter of the policies, while sheer productivity, as measured by quantity of publications, was present in around a fifth of cases.

Indicators relating to open and responsible research were very rare. We discovered no mentions of data sharing or Open Access publishing. Creation of software was quite well represented (13% of cases), due to its prevalence in policies in Brazil, where it is mentioned at 75% of institutions ( Figure 3 ; see Section 4.2 ). Mentions of RRI elements were more encouraging, as the RRI-related aspects of interactions with industry (37%), engagement with the public (35%), and engagement with policy makers (22%) were relatively well represented. However, issues relating to gender were mentioned only in 6–9% of cases.

4.1. Relationship Between Indicators

Institutions rely on a distinct combination of indicators and criteria to assess researchers. To investigate how these indicators are related (i.e., which aspects are commonly mentioned in tandem), we rely on MCA. This method relates criteria against each other and allows us to investigate deviations from the average, as well as which indicators commonly appear together. To further substantiate these findings, we provide bivariate correlations between all indicators in the Supporting information ( Figure S2 ). Note that these analyses are exploratory and based on a data set of moderate size.

The first pattern apparent from analyzing the variables jointly is that the studied indicators tend to be cumulative ( Figure 2 ). In broad terms, there is a basic divide between institutions that mention many criteria and those that mention few to none (see also Table 5 on how this relates to countries). Investigating relationships further, the first dimension (horizontal axis) draws heavily from engagement beyond academia: with industry, the public, and policy makers. Institutions commonly mention them together, with bivariate correlations of about .5 between the three indicators (see Figure S2 ). The same institutions also mention contributions to review & editorial activities, service to the profession, and publication quality more often than the average institution. On the other end of the spectrum (right-hand side) are institutions that mention engagement beyond academia and service to the profession less frequently than the average.

Figure 2. Relationship between indicators for review, promotion, and tenure. The figure is a graphical representation of the relationships between indicators when considering their multivariate relationships. The figure’s origin (0, 0) represents the sample average. “++” means that an indicator is present, “−−” that it is not present. Engagement is abbreviated with “E.” The horizontal axis (Dimension 1) accounts for 66.7% of variation in the data. This dimension mainly contrasts institutions that mention instances of engagement (with the public, industry, or policy makers) and citizen science, as well as service to the profession, with institutions that do neither. The vertical axis (Dimension 2) accounts for 8.4% of variation in the data. This dimension mainly contrasts institutions that value publication quality and rely on citations with institutions that value patents and journal metrics, as well as software, on the other end of the spectrum (bottom). Citizen science is found near the bottom of the axis but does not contribute strongly to this dimension. The indicators “Data sharing” and “Open Access publishing” are not included in the model, as both were not found in any of the policies. Furthermore, variables relating to gender were not included, because they relate to the composition of review panels rather than research assessment criteria per se.

Table 5. Number and percentage of indicators discovered per country

Although the first distinction (between institutions mentioning many indicators and engagement beyond academia in particular) is strongest, the second dimension (vertical) provides additional insight on the interrelatedness of the indicators. The divergence along this dimension revolves around institutions that mention publication quality and reliance on citations on one side, with institutions mentioning software, patents, and journal metrics, as well as citizen science, on the other side. It is noteworthy that publication quality and citations (often seen as a proxy indicator for publication quality), are mentioned jointly at an above average rate. Mentions of publication quality, on the other hand, are unrelated to mentions of journal metrics (such as the Journal Impact Factor, r = −0.01, 95% basic bootstrap CI [−0.21, 0.17]), which are considered a much more problematic indicator of research quality. Citations and journal metrics are represented on opposite sides of the vertical spectrum, despite the criteria being weakly correlated ( r = .22, [0.02, 0.44]). This is driven by the fact that journal metrics are moderately related to patents ( r = .32, [0.14, 0.52]), but unrelated to publication quality. It should be noted that the concepts of “journal metrics” and “publication quality” might overlap in how they are applied in practice. While we coded text phrases such as “High quality scholarly outputs with significant authorship contributions” as pertaining to publication quality, this might in practice be assessed via journal metrics (e.g., Journal Impact Factor or ranking quartiles).
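The correlations above are reported with basic bootstrap confidence intervals. A minimal sketch of that technique for two binary indicators follows; the data are invented, the function names are hypothetical, and details such as the number of resamples and the quantile rule are assumptions rather than the authors' settings.

```python
import random
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation; returns None if either variable is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    return sxy / (sxx * syy) ** 0.5

def basic_bootstrap_ci(x, y, n_resamples=2000, alpha=0.05, seed=1):
    """Basic bootstrap CI: (2*r - upper quantile, 2*r - lower quantile)."""
    estimate = pearson_r(x, y)
    if estimate is None:
        raise ValueError("correlation undefined for constant input")
    rng = random.Random(seed)
    n = len(x)
    replicates = []
    while len(replicates) < n_resamples:
        # Resample institutions (pairs) with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        r = pearson_r([x[i] for i in idx], [y[i] for i in idx])
        if r is not None:  # skip degenerate resamples
            replicates.append(r)
    replicates.sort()
    # Crude empirical quantiles taken by index.
    lower_q = replicates[int((alpha / 2) * (n_resamples - 1))]
    upper_q = replicates[int((1 - alpha / 2) * (n_resamples - 1))]
    return estimate, (2 * estimate - upper_q, 2 * estimate - lower_q)

# Hypothetical 0/1 codes for two indicators across 20 institutions.
journal_metrics = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0]
patents = [1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0]

estimate, (low, high) = basic_bootstrap_ci(journal_metrics, patents)
print(f"r = {estimate:.2f}, 95% basic bootstrap CI [{low:.2f}, {high:.2f}]")
```

Unlike the percentile method, the basic bootstrap reflects the resampled quantiles around the point estimate, so its limits are not guaranteed to stay within the range from −1 to 1 in small samples.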

4.2. Country Comparison

When comparing countries, we find differences in terms of the overall prevalence of indicators, but also their relative importance. The absolute number of indicators per country varies because we sampled more institutions for larger countries than for smaller ones. Importantly, however, the relative number of indicators also varies considerably ( Table 5 ). Although just under a third of the analyzed indicators were identified in policies in Austria, Brazil, Germany, Portugal and the United Kingdom, the figures were 18% for the United States and 16% for India. The lower numbers in the United States and India may reflect the nature of the documents examined in those cases. We only examined institution-wide policies and in the United States it may be the case that detailed criteria are more often contained at departmental or faculty-level policies; in India (as stated) assessment forms were also analyzed, as few institutions had official policy documents (see Table 3 ).

Austria: A very high share of sampled institutions mention the number of publications (67%), and half also mention journal metrics. Service to the profession, while the most common concept across countries, is mentioned in only 50% of institutions in Austria. A major distinction between institutions from German-speaking countries (i.e., Austria and Germany) and all other countries is that the former frequently mention concepts of gender: four out of six Austrian universities mention gender equality, a criterion not found in any country outside this group.

Brazil: All Brazilian institutions mention service to the profession, and three out of four mention patents, review & editorial activities, and software, while mentions of software are uncommon in other countries. Similar to India and Austria, journal metrics are mentioned quite frequently (42%). Sampled policies from Brazil are similar to policies from the United Kingdom in frequently mentioning service and engagement beyond academia but diametrically opposed in also frequently mentioning patents and software, both of which are very rare in the United Kingdom. Finally, both country profiles are relatively far from the sample average, indicating configurations that are less common among other countries.

Germany: Policies from German universities are very similar to their Austrian counterparts, which suggests similarities based on shared cultural and academic traditions and influences. For example, policies from both Austria and Germany commonly mention gender equality. However, the concepts of patenting and review & editorial activities appear considerably more frequently in German policies than in Austrian ones, and the fewest mentions of service to the profession across the sample are also found in Germany.

India: Contrary to all other countries, we find no evidence of policies referring to review & editorial activities as a criterion for promotion, and very few cases that refer to the number of publications that a given researcher has produced. On the other hand, mentions of journal metrics were very common in the policies sampled from Indian institutions (67%, n = 8), while less common among the other countries.

Portugal: All sampled universities mention engagement with the public, which is a strong exception in the sample. Furthermore, many institutional policies mention service to the profession, engagement with industry, patents, as well as the number of publications. Indicators that are less common across the sample, such as citizen science, software, and citations, as well as engagement with policy makers, are not found at all in Portugal.

United Kingdom: All sampled universities mention service to the profession, and four-fifths mention publication quality. Equally, institutions from the UK mention all three dimensions of engagement beyond academia (industry, public, policy makers) considerably more frequently than the average of the sample. Finally, policies mention patents and the number of publications considerably less frequently than institutions from other countries.

United States: In line with the overall finding of a low prevalence of indicators across universities from the United States (Table 5), all indicators were found at a slightly lower rate than in other countries. Given that the sample deliberately included more institutions from the United States, institutional policies from the United States are quite close to the average across the whole sample (Figure 4). The biggest deviation from the sample average is found for engagement with industry, which is mentioned less frequently in the United States than in any other country.

Prevalence of indicators per country. Percentages are rounded to full integers. The number of cases (universities) per country is presented in Table 5. Low cell frequencies and empty cells prohibit the use of common chi-square metrics for contingency tables.

Relationship between indicators with superimposed countries. The relationships between criteria displayed in this figure are the same as in Figure 3. To allow for an investigation of which criteria are more common in a given country than in the rest of the sample, we project country profiles into this space. These “supplementary variables” do not have an influence on the layout of the indicators. The countries’ positions are to be interpreted as projections onto the respective axes by examining their distance to indicators that are central to the respective dimension (see Section 3.1 and Figure 3 for the interpretation of the axes).

Overall, we find a low uptake of alternative evaluation criteria covering open and responsible research. However, there is substantial variation between countries (Figure 5). Summarizing eight core criteria (“Citizen science,” “Data,” “Engagement with industry,” “Engagement with policy makers,” “Engagement with the public,” “Gender equality,” “Open Access,” “Software”), we find the highest uptake of alternative criteria in Brazil, Portugal, and the United Kingdom, with 1.9, 1.8, and 1.8 alternative criteria per university on average. Uptake is lower in Austria and Germany, and particularly low in the United States (0.7 criteria on average) and India (0.3).

Uptake of alternative indicators. Here we display how frequently alternative indicators are found in the policies. We consider the following eight indicators: “Citizen science,” “Data,” “Engagement with industry,” “Engagement with policy makers,” “Engagement with the public,” “Gender equality,” “Open Access,” and “Software.” Dots represent the mean across all universities of a given country, with bootstrapped confidence intervals (95%).
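The uptake measure described above (mean number of alternative criteria per university, with a bootstrapped 95% interval) can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the analysis code: the type of bootstrap interval is not specified in the text, so a simple percentile interval is assumed here, and the country labels and policy contents in the example are hypothetical placeholders.

```python
import random
from statistics import mean

# The eight alternative criteria named in the text.
ALTERNATIVE = {
    "Citizen science", "Data", "Engagement with industry",
    "Engagement with policy makers", "Engagement with the public",
    "Gender equality", "Open Access", "Software",
}


def alternative_count(mentioned):
    """Number of alternative criteria among the indicators one university mentions."""
    return len(ALTERNATIVE & set(mentioned))


def mean_with_bootstrap_ci(counts, n_boot=2000, alpha=0.05, seed=0):
    """Return (mean, lower, upper) using a percentile bootstrap (an assumption)."""
    rng = random.Random(seed)
    n = len(counts)
    # Resample universities with replacement and record the mean of each resample.
    reps = sorted(mean(rng.choices(counts, k=n)) for _ in range(n_boot))
    lower = reps[int((alpha / 2) * (n_boot - 1))]
    upper = reps[int((1 - alpha / 2) * (n_boot - 1))]
    return mean(counts), lower, upper


# Hypothetical coded policies: one list of mentioned indicators per university.
policies = {
    "Country A": [
        ["Software", "Citations", "Engagement with the public"],
        ["Service to the profession", "Open Access"],
        ["Patents", "Engagement with industry", "Data", "Software"],
    ],
    "Country B": [
        ["Journal metrics"],
        ["Number of publications", "Gender equality"],
        ["Service to the profession"],
    ],
}

for country, universities in policies.items():
    counts = [alternative_count(u) for u in universities]
    print(country, mean_with_bootstrap_ci(counts))
```

With only a handful of universities per country, as in several of the sampled countries, intervals of this kind will be wide, which is worth keeping in mind when comparing the dots in the figure.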

4.3. Comparison with Citation Ranking

Previous research has found no evidence of an association between universities’ ranking positions and the prevalence of traditional or alternative criteria when controlling for geographic region (Rice et al., 2020). Here we conduct a similar analysis, examining differences in the citation ranking and its relationship to the set of criteria, while controlling for country. Removing the influence of countries is meaningful in this context, because an institution’s location and its citation ranking are clearly linked (Figure S4).

After controlling for country, we find only small differences in the prevalence of indicators with respect to an institution’s ranking (Figure 6). Institutions with a low as well as with a medium citation ranking are very close to the sample average on both dimensions. Both are characterized by slightly above-average mentions of the dimension of engagement beyond academia, as well as the dimension of service. Highly ranked institutions are characterized by slightly lower than average mentions of service, review & editorial activities, and engagement, but slightly higher mentions of publication quality, citations, and journal metrics (see also Figure S5).

Relationship between indicators with superimposed citation ranking groups. The relationships between criteria displayed in this figure are the same as in Figure 3. To allow for an investigation of which criteria are more common in a given ranking group than in the rest of the sample, we project the respective profiles into this space. These “supplementary variables” do not have an influence on the layout of the indicators. Ranking categories are calculated within-country to control for the influence of country on an institution’s citation ranking. The ranking positions are to be interpreted as projections onto the respective axes, by examining their distance to indicators which are central to the respective dimension (see Section 3.1 and Figure 3 for the interpretation of the axes).
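To make the idea of within-country ranking categories concrete, the sketch below assigns each institution to a low, medium, or high group relative to the other sampled institutions in the same country. The exact grouping rule is not given in the text; splitting each country into three roughly equal groups by citation score, with a higher score meaning a better ranking, is an assumption made here for illustration, as are the function name and the example data.

```python
from collections import defaultdict


def within_country_groups(institutions, labels=("low", "medium", "high")):
    """Map institution name -> ranking group, computed separately per country.

    `institutions` is an iterable of (name, country, citation_score) tuples,
    where a higher score is assumed to mean a better citation ranking.
    """
    by_country = defaultdict(list)
    for name, country, score in institutions:
        by_country[country].append((score, name))

    groups = {}
    k = len(labels)
    for members in by_country.values():
        members.sort()  # ascending: lowest citation score first
        n = len(members)
        for rank, (_, name) in enumerate(members):
            # Split the country's institutions into k roughly equal groups.
            groups[name] = labels[min(rank * k // n, k - 1)]
    return groups


# Hypothetical scores: country Y scores far higher than country X overall.
sample = [
    ("a", "X", 1.0), ("b", "X", 2.0), ("c", "X", 3.0),
    ("d", "Y", 100.0), ("e", "Y", 200.0), ("f", "Y", 300.0),
]
print(within_country_groups(sample))
```

In this toy example, institution "d" falls into the low group even though its score exceeds every score in country X, which is the sense in which grouping within country removes the country-level differences in citation ranking.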

4.4. “Numbers Help”? Journal Metrics, Publication Quantities, and Publication Quality

We next look further into the ways in which two problematic practices (use of journal-level metrics and numbers of publications as indicators of quality and productivity respectively) are expressed in policies, as well as how policies discuss publication quality.

More than a quarter of the policies we examined mention the Journal Impact Factor or some other measure of journal/venue prestige as an assumed proxy for the quality of research published there. The share was highest in India (67%) and Austria (50%). In the latter, unambiguous use of the Journal Impact Factor was found: “The evaluation is based on the journal rankings according to the impact factors from the unchanged ranking lists of the Institute of Scientific Information (ISI)” (Medical University of Vienna, AT_4a). Policies in Brazil (42%) also relied heavily on journal-level metrics, specifically the “QUALIS-CAPES classification,” the official Brazilian system of journal classification (Pinto, Matias, & Moreiro González, 2016). Use of such metrics was least visible in the United Kingdom, where the 14% of policies that mention them also tend to be more circumspect in their language (e.g., “Excellence might be evidenced […] (in part) by proxies such as journal impact factors” (Teesside University, GB_6)).

Numbers of publications as a criterion are present in around one in five policies, invoked in various ways. This criterion is especially common in Austria (67%; e.g., “The list of publications of a habilitation candidate must include at least 16 scientific publications in international relevant journals with peer review procedures, which have been published in the last 12 years,” Medical University of Innsbruck, AT_3). Such quantification is sometimes used in the context of strict formulas that also use journal-level metrics (as at the aforementioned Medical University of Vienna (AT_4a): “The basic requirement for a habilitation is 14 points, with 1 point for a standard paper and 2 points for a top paper”). In the United States, quantity of publications is mentioned in 17% of cases, but usually emphasized as just one factor amongst others (e.g., “Quantity can be a consideration but quality must be the primary one” (University of Missouri-St Louis, USA_25)). In the striking words of one US institution, however, “numbers help” [emphasis ours] when reporting “the total number of peer-review articles or other creative and research outputs” (University of Nevada, Las Vegas, USA_31). In Germany, only 25% of institutions focused on publication numbers, and these often emphasized that the intention was “not to set a fixed minimum number, but rather an approximate guideline” (TU Dortmund, DE_12). In Austria and Germany we also found that, as a matter of course, many institutions ask for full publication lists as part of their criteria. We did not code these as explicitly supporting publication quantity as an indicator for assessment. In practice, however, we might assume that the length of publication lists may be used as an unofficial factor in decisions.

As with journal metrics, UK policies only very rarely mention publication numbers as a factor (just 4%). This is in stark contrast to the number of UK policies mentioning publication quality as an important criterion (79%). Here, the influence of initiatives such as DORA and the United Kingdom’s Forum for Responsible Metrics, and the way these have translated into the UK national assessment exercise, the Research Excellence Framework (REF), is clearly visible. We found, for example, exhortations to produce “high quality” work “that is judged through peer review as being internationally excellent or better in terms of originality, significance and rigour” (University of Sheffield, GB_9a). As we discuss below, this language is highly similar to that of the REF itself, suggesting that institutions have adapted their assessment policies to REF criteria.

The need to reform reward and recognition structures for researchers to mitigate effects of overquantification and incentivize uptake of open and responsible research practices is well understood. Our results show just how far there is to go.