Azure Event Hub vs. Azure Service Bus: A Comparison

Within the Azure ecosystem, two services stand out for messaging and event-driven architectures: Azure Event Hub and Azure Service Bus. Both deliver messages reliably, but they have different features and serve different use cases. In this article, we examine the fundamental differences between them, their key components, and the scenarios each one suits.


What Is Azure Event Hub?

Azure Event Hub is a fully managed event streaming platform for ingesting, retaining and analyzing large volumes of data from applications, devices and Internet of Things (IoT) endpoints. It is built for high-throughput scenarios, which makes it well suited to real-time event processing and big data streaming.

Event Hub follows a publish/subscribe (pub/sub) model: producers publish events to the hub, and multiple consumers can process those events concurrently. Partitions and consumer groups let it scale out and spread load across consumers.

Key Components Of Event Hub

When producing events for Azure Event Hub, keep the size limit in mind. On the Standard tier, a single event or a batch of events can be at most 1 MB (the Basic tier allows 256 KB), and the service rejects anything larger.
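The size limit matters most when you batch events. The sketch below greedily packs serialized events into batches that stay under a byte budget and rejects any single event that is too large on its own. It is a simplification under stated assumptions: it counts only payload bytes, although real batches also carry per-event metadata and protocol overhead. The 1 MB budget (`MAX_BATCH_BYTES`) should also be checked against your tier. The official SDKs do this bookkeeping for you. For example, in the Python `azure-eventhub` package, `EventDataBatch.add` raises `ValueError` when an event no longer fits.

```python
from typing import Iterable, List

MAX_BATCH_BYTES = 1024 * 1024  # assumed 1 MB limit (Standard tier); check your tier


def pack_batches(payloads: Iterable[bytes], max_bytes: int = MAX_BATCH_BYTES) -> List[List[bytes]]:
    """Greedily group payloads into batches whose total size stays within max_bytes.

    Only payload bytes are counted here; real batches also include per-event overhead.
    """
    batches: List[List[bytes]] = []
    current: List[bytes] = []
    current_size = 0
    for payload in payloads:
        size = len(payload)
        if size > max_bytes:
            # A single event above the limit would be rejected by the service.
            raise ValueError(f"event of {size} bytes exceeds the {max_bytes}-byte limit")
        if current and current_size + size > max_bytes:
            # The event does not fit: close the current batch and start a new one.
            batches.append(current)
            current, current_size = [], 0
        current.append(payload)
        current_size += size
    if current:
        batches.append(current)
    return batches


# Three 400 KB events: two fit in the first batch, the third starts a second batch.
events = [b"x" * 400 * 1024 for _ in range(3)]
print([len(batch) for batch in pack_batches(events)])  # [2, 1]
```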

Consumer Groups

Consumer groups let multiple applications or services read the same Event Hub at the same time. Each consumer group is an independent view of the stream. Its readers track their own position (offset) in each partition, so different applications can move through the event data at their own pace.

Azure Event Hub splits the event stream into multiple partitions, and each partition is an ordered sequence of events. Separate consumer instances can read different partitions concurrently, so partitioning increases throughput and lets event processing scale out.

Publishers do not need to know how an event hub is partitioned. Instead, they can specify a partition key. Events with the same key are consistently assigned to the same partition, so related events stay together and keep their relative order (ordering is guaranteed only within a partition).
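To see why a partition key keeps related events together, consider the sketch below. It maps each key to a partition with a stable hash, so every event that carries the same key lands on the same partition and keeps its send order. Event Hubs uses its own internal hashing, which is not the SHA-256 stand-in used here. The `partition_for` name and the four-partition count are illustrative assumptions. With the Python `azure-eventhub` SDK, you would instead pass `partition_key` to `EventHubProducerClient.create_batch` and let the service pick the partition.

```python
import hashlib
from collections import defaultdict

PARTITION_COUNT = 4  # illustrative; the real count is fixed when the event hub is created


def partition_for(key: str, partition_count: int = PARTITION_COUNT) -> int:
    """Map a partition key to a partition index with a stable hash (SHA-256 stand-in)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


# Events for the same device always land on the same partition, in send order.
events = [("device-1", "t=20"), ("device-2", "t=21"), ("device-1", "t=22")]
partitions = defaultdict(list)
for key, body in events:
    partitions[partition_for(key)].append((key, body))

p = partition_for("device-1")
print([body for k, body in partitions[p] if k == "device-1"])  # ['t=20', 't=22']
```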

Checkpoints In Storage

Checkpointing gives Azure Event Hub fault tolerance and resumable processing. A consumer periodically records (checkpoints) its current offset within a partition to durable storage, typically Azure Blob Storage. If the consumer restarts or fails, processing resumes from the last checkpoint instead of from the start of the stream. Events received after that checkpoint may be delivered again, so handlers should tolerate duplicates.
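The sketch below models what a checkpoint store records: the last processed offset for each (consumer group, partition) pair. Two consumer groups read the same partition at different speeds, and a restarted reader resumes right after its last checkpoint. The in-memory dictionary stands in for a durable store. The class and function names are hypothetical and are not the SDK's API. In the Python SDK, the real equivalent is a `BlobCheckpointStore` (package `azure-eventhub-checkpointstoreblob`) together with `update_checkpoint` calls on the partition context.

```python
from typing import Dict, List, Tuple

# One partition's event log; an offset here is simply the list index.
partition_log: List[str] = [f"event-{i}" for i in range(6)]


class InMemoryCheckpointStore:
    """Hypothetical stand-in for a durable checkpoint store (e.g., a blob container)."""

    def __init__(self) -> None:
        self._checkpoints: Dict[Tuple[str, int], int] = {}

    def save(self, consumer_group: str, partition_id: int, offset: int) -> None:
        self._checkpoints[(consumer_group, partition_id)] = offset

    def load(self, consumer_group: str, partition_id: int) -> int:
        # -1 means "no checkpoint yet": start from the beginning of the stream.
        return self._checkpoints.get((consumer_group, partition_id), -1)


def read(store: InMemoryCheckpointStore, group: str, partition_id: int, max_events: int) -> List[str]:
    """Read up to max_events after the group's last checkpoint, checkpointing each event."""
    start = store.load(group, partition_id) + 1
    processed = []
    for offset in range(start, min(start + max_events, len(partition_log))):
        processed.append(partition_log[offset])
        store.save(group, partition_id, offset)
    return processed


store = InMemoryCheckpointStore()
print(read(store, "analytics", 0, 4))   # ['event-0', 'event-1', 'event-2', 'event-3']
print(read(store, "archiver", 0, 2))    # ['event-0', 'event-1']
# A restarted "analytics" reader resumes after its last checkpoint:
print(read(store, "analytics", 0, 10))  # ['event-4', 'event-5']
```

This sketch checkpoints after every event for clarity. Real consumers usually checkpoint less often to reduce storage writes, and accept that a few events may be reprocessed after a failure.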

What Is Azure Service Bus?

Azure Service Bus is a message broker for communication between loosely coupled applications and components. It supports two core messaging models. In the queue model, each message is received and processed by a single consumer. In the topic/subscription model, each subscription receives its own copy of a message, so multiple subscribers can process it. Its reliable message delivery makes Azure Service Bus a good fit for a wide range of enterprise messaging and application integration scenarios.

Key Components Of Service Bus

Azure Service Bus Queues provide reliable point-to-point messaging and store messages durably. Receivers generally get messages in the order they were enqueued (first in, first out), but strict FIFO ordering is guaranteed only when sessions are enabled, as described below.

Azure Service Bus Topics implement the publish/subscribe pattern. Messages are sent to a topic and delivered to each of its subscriptions. Each subscription can define filter rules so that it receives only the messages matching its criteria.
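As a rough in-memory model of topic fan-out, the sketch below gives each subscription a filter predicate over message properties. Every message published to the topic is copied to each subscription whose filter matches. Real Service Bus subscriptions express this with SQL or correlation filter rules that the broker evaluates. The Python predicates, the `Topic` class and the property names here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, object]  # simplified: message properties and body in one dict


@dataclass
class Topic:
    """Hypothetical in-memory topic that fans messages out to matching subscriptions."""
    filters: Dict[str, Callable[[Message], bool]] = field(default_factory=dict)
    inboxes: Dict[str, List[Message]] = field(default_factory=dict)

    def subscribe(self, name: str, rule: Callable[[Message], bool]) -> None:
        self.filters[name] = rule
        self.inboxes[name] = []

    def publish(self, message: Message) -> None:
        # Each subscription whose rule matches receives its own copy of the message.
        for name, rule in self.filters.items():
            if rule(message):
                self.inboxes[name].append(dict(message))


orders = Topic()
orders.subscribe("all-orders", lambda m: True)
orders.subscribe("large-orders", lambda m: m["amount"] >= 1000)
orders.subscribe("eu-orders", lambda m: m["region"] == "EU")

orders.publish({"id": 1, "amount": 250, "region": "EU"})
orders.publish({"id": 2, "amount": 5000, "region": "US"})

print({name: [m["id"] for m in box] for name, box in orders.inboxes.items()})
# {'all-orders': [1, 2], 'large-orders': [2], 'eu-orders': [1]}
```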

Configuration For Parallelism And Throttling

Azure Service Bus clients expose settings that control parallel message processing, so that consuming applications are not overwhelmed. MaxConcurrentCalls limits how many messages a processor handles at the same time. PrefetchCount sets how many messages the client fetches ahead of time into a local buffer. Tuning the two trades throughput against the load placed on the consumer.
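The effect of these two settings can be illustrated without a broker. In the sketch below, a semaphore caps how many handlers run at once, which is the role MaxConcurrentCalls plays. A bounded local queue stands in for prefetched messages, which is the role PrefetchCount plays. In the .NET `Azure.Messaging.ServiceBus` library, both are properties of `ServiceBusProcessorOptions`. The constants and the `process` function are illustrative assumptions, and this is not the Service Bus SDK.

```python
import asyncio
from typing import Dict, List

MAX_CONCURRENT_CALLS = 3  # illustrative: handlers allowed to run at the same time
PREFETCH_COUNT = 5        # illustrative: messages buffered locally ahead of processing


async def process(messages: List[int]) -> Dict[str, int]:
    slots = asyncio.Semaphore(MAX_CONCURRENT_CALLS)
    buffer: asyncio.Queue = asyncio.Queue(maxsize=PREFETCH_COUNT)
    stats = {"in_flight": 0, "peak": 0, "done": 0}

    async def fetch() -> None:
        # Stand-in for the receiver: put() waits while the local buffer is full.
        for m in messages:
            await buffer.put(m)
        await buffer.put(None)  # sentinel: nothing left to receive

    async def handle(message: int) -> None:
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        try:
            await asyncio.sleep(0.01)  # simulated message handler work
            stats["done"] += 1
        finally:
            stats["in_flight"] -= 1
            slots.release()  # free the slot for the next message

    fetcher = asyncio.create_task(fetch())
    handlers = []
    while True:
        await slots.acquire()  # wait until fewer than MAX_CONCURRENT_CALLS handlers run
        message = await buffer.get()
        if message is None:
            slots.release()
            break
        handlers.append(asyncio.create_task(handle(message)))
    await asyncio.gather(fetcher, *handlers)
    return stats


stats = asyncio.run(process(list(range(20))))
print(stats["done"], stats["peak"] <= MAX_CONCURRENT_CALLS)  # 20 True
```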

Session-Enabled Entity

In Azure Service Bus, enabling sessions on a queue or subscription guarantees first-in, first-out (FIFO) ordering for related messages. A sender assigns a message to a session by setting its SessionId property. When a receiving client accepts a session, it holds an exclusive lock on all messages in that queue or subscription that share the same SessionId, until it releases the session or the lock expires.

For example, consider a decoupled employee management application in which employees and companies are separate services. The company service sends messages to update employee details, and the employee service processes them.

Now suppose a user updates an employee's position several times in quick succession, or several users edit the same employee's details within a short time. The updates must be applied in the order they were sent, so that later changes are not overwritten by earlier ones. Using the employee ID as the SessionId ensures that all messages for the same employee are processed sequentially, which preserves data integrity and the order of updates.
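The sketch below models the employee example. Each message carries the employee ID as its session ID, a receiver works through one session at a time, and updates for that employee are applied strictly in send order. Different employees' sessions could be handled by different receivers. The in-memory grouping and the function names are hypothetical and only imitate the broker's behaviour. In the Python `azure-servicebus` package, the sending side corresponds to setting `session_id` on a `ServiceBusMessage`.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (session_id, update) pairs in the order the company service sent them.
sent: List[Tuple[str, Dict[str, str]]] = [
    ("emp-42", {"position": "Engineer"}),
    ("emp-7", {"position": "Analyst"}),
    ("emp-42", {"position": "Senior Engineer"}),
    ("emp-42", {"position": "Lead Engineer"}),
]


def group_by_session(messages: List[Tuple[str, Dict[str, str]]]) -> Dict[str, List[Dict[str, str]]]:
    """Hypothetical broker view: one FIFO list of messages per session ID."""
    sessions: Dict[str, List[Dict[str, str]]] = defaultdict(list)
    for session_id, body in messages:
        sessions[session_id].append(body)
    return sessions


def apply_session(employees: Dict[str, Dict[str, str]], session_id: str,
                  updates: List[Dict[str, str]]) -> None:
    # While one receiver holds the session lock, no other receiver sees these messages,
    # so one employee's updates are applied one after another in send order.
    record = employees.setdefault(session_id, {})
    for update in updates:
        record.update(update)


employees: Dict[str, Dict[str, str]] = {}
for session_id, updates in group_by_session(sent).items():
    apply_session(employees, session_id, updates)

print(employees["emp-42"]["position"])  # Lead Engineer
```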

Key Differences Between Azure Event Hub And Service Bus

This table summarizes the main differences between Azure Event Hub and Azure Service Bus and their distinguishing attributes, to help you choose the messaging service that best fits your business needs.

Business Case For Azure Event Hub

Suppose you run an online retail business and want to improve your customers' shopping experience. With Azure Event Hub, you can collect real-time customer behavior data such as clicks, searches and purchases. Its high throughput lets you process that stream and derive insights from it as the data arrives.

Business Case For Azure Service Bus

For financial institutions, detecting fraudulent transactions is essential to protect customers and the institution's reputation. Azure Service Bus supports this by providing reliable, real-time communication among the components of a fraud detection system. Service Bus queues can buffer incoming transaction data and distribute it to the services that analyze and investigate it.

Azure Event Hub vs Azure Service Bus – What To Choose?

Event Hub is also useful for moving data between stores. For example, when preparing NoSQL data from relational SQL data, such as loading Cosmos DB from MS-SQL, you can send one message per SQL row to Event Hub. A receiver then processes those messages and populates Cosmos DB.
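As a rough illustration of the row-per-message approach, the sketch below reads rows from an in-memory SQLite table, which stands in for MS-SQL. It turns each row into a JSON document and pairs it with a partition key derived from the primary key, so later changes to the same row would stay in order. The table, the columns and the `row_to_event` helper are hypothetical. The actual send to Event Hub and the write to Cosmos DB are deliberately left out.

```python
import json
import sqlite3
from typing import Dict, List

conn = sqlite3.connect(":memory:")  # stand-in for the MS-SQL source
conn.row_factory = sqlite3.Row      # lets us access columns by name
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO customers (id, name, city) VALUES (?, ?, ?)",
    [(1, "Ada", "London"), (2, "Linus", "Helsinki")],
)


def row_to_event(row: sqlite3.Row) -> Dict[str, str]:
    """Turn one relational row into an event: a partition key plus a JSON document body."""
    document = {key: row[key] for key in row.keys()}
    document["id"] = str(document["id"])  # Cosmos DB expects the "id" property to be a string
    return {"partition_key": document["id"], "body": json.dumps(document)}


events: List[Dict[str, str]] = [
    row_to_event(r) for r in conn.execute("SELECT id, name, city FROM customers ORDER BY id")
]
print(events[0]["body"])  # {"id": "1", "name": "Ada", "city": "London"}
```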

However, if your goal is to use messaging to decouple applications, as described earlier, Azure Service Bus is the better option. It provides the components and flexibility that this architecture needs.

Choose between Azure Event Hub and Azure Service Bus only after evaluating factors such as data volume, throughput requirements, messaging patterns and the need for message ordering. A careful assessment of your specific use case will show which service best meets your business requirements.


And you would be right. It has been around for years and has proven itself as the “big boy on the block”.

But let’s take a look at the newcomer. It is being sold as a carefree Kafka competitor, which even surpasses Kafka (according to Microsoft). So let’s have an objective look at “the good, the bad and the ugly” of this solution and if what they are selling is really as good as they claim.

What is Azure Event Hubs?

Well, if you want the boring sales pitch, have a look at Microsoft's product page. Read it? Good, now let's recap. Basically, it is a direct competitor of Kafka, but offered as a PaaS solution. On top of that, you get a support contract to help you with all your worries and bugs.

Sounds great, right? After working with this solution for a couple of months, I have found there is a dark side to this story. But first, let's start on a positive note.

Vocabulary 101

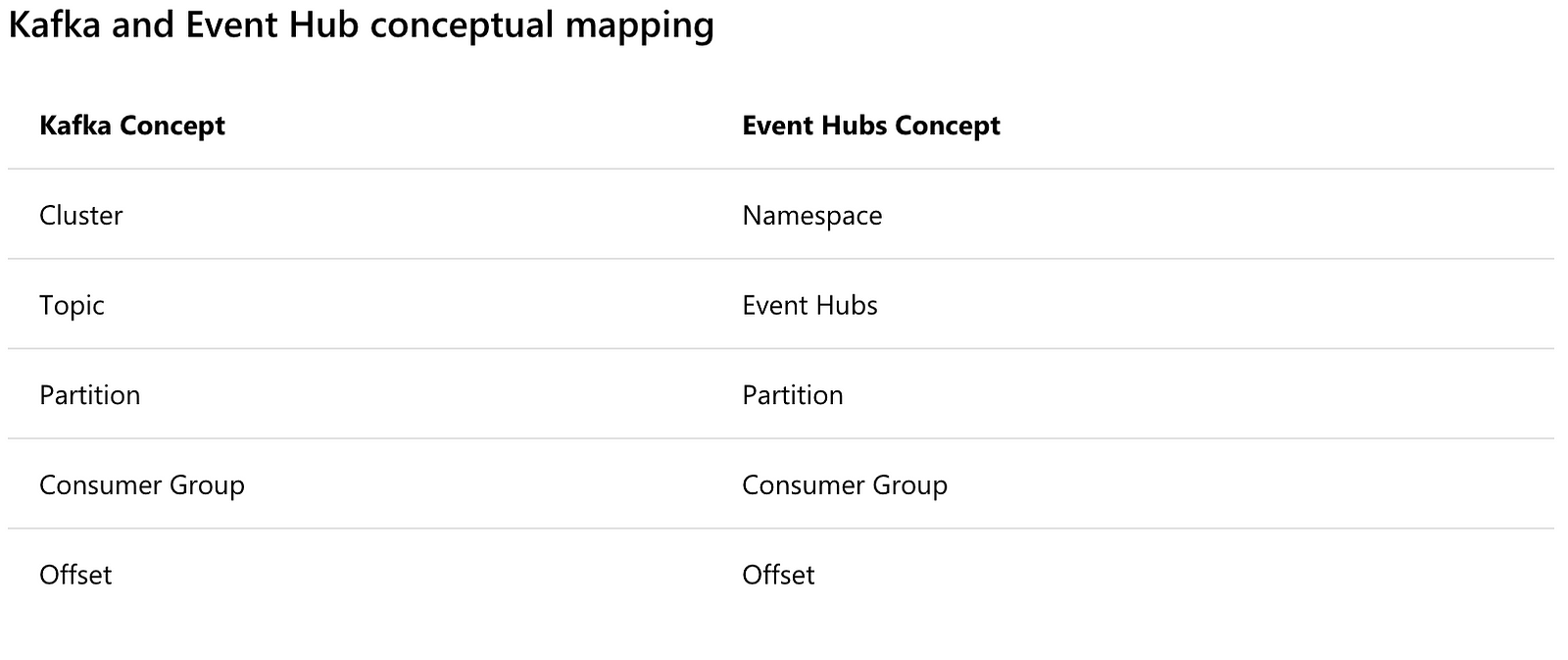

Before we start praising this product, it is good to know the nomenclature used within the platform and how the terms relate to Kafka.
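For reference, Microsoft's documentation for the Kafka endpoint maps the concepts as follows. A Kafka cluster corresponds to an Event Hubs namespace and a Kafka topic to an event hub, while partitions, consumer groups and offsets keep their names. The dictionary and the `to_event_hubs_term` helper are only an illustrative lookup.

```python
# Kafka concept -> Event Hubs concept, per Microsoft's Kafka-compatibility documentation.
KAFKA_TO_EVENT_HUBS = {
    "cluster": "namespace",
    "topic": "event hub",
    "partition": "partition",
    "consumer group": "consumer group",
    "offset": "offset",
}


def to_event_hubs_term(kafka_term: str) -> str:
    """Translate a Kafka term case-insensitively; unknown terms raise KeyError."""
    return KAFKA_TO_EVENT_HUBS[kafka_term.strip().lower()]


print(to_event_hubs_term("Topic"))  # event hub
```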

Without further stalling let us dive into the good points of Event Hubs.

Yes, it is a fully managed PaaS solution. Setting it up from the portal takes only a couple of clicks, and you get some great features:

- You can enable auto-inflate, which automatically scales up the number of throughput units as your load grows

- You can set up geo disaster recovery

- You can create a Kafka-enabled cluster (more on this later)

- You can choose how many throughput units you start with
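Auto-inflate works in throughput units (TUs). On the Standard tier, one TU is documented as allowing up to 1 MB/s or 1,000 events per second of ingress, whichever limit is hit first, and up to 2 MB/s of egress. A rough sizing calculation therefore looks like the sketch below. Treat the per-TU figures as assumptions to verify against current documentation. The function name is illustrative.

```python
import math

# Assumed per-TU limits (Standard tier); verify against current documentation.
INGRESS_MB_PER_TU = 1.0
INGRESS_EVENTS_PER_TU = 1000
EGRESS_MB_PER_TU = 2.0


def throughput_units_needed(ingress_mb_s: float, ingress_events_s: float, egress_mb_s: float) -> int:
    """Smallest TU count that satisfies every ingress and egress limit at once (minimum 1)."""
    return max(
        1,
        math.ceil(ingress_mb_s / INGRESS_MB_PER_TU),
        math.ceil(ingress_events_s / INGRESS_EVENTS_PER_TU),
        math.ceil(egress_mb_s / EGRESS_MB_PER_TU),
    )


# 3.5 MB/s in, 2,500 events/s, read by two consumer groups (7 MB/s out):
print(throughput_units_needed(3.5, 2500, 7.0))  # 4
```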

Once you have created the cluster it is available through an FQDN. I must say I was impressed with what came out of the box.

On a side note, you can also create the cluster with Terraform or ARM templates.

Replication

In Kafka, replication is controlled by several parameters (server-wide or per topic), and it is something you simply cannot forget to configure. The main reasons are performance and availability: if a partition has only a single replica and its broker crashes, that data is offline.

In Event Hubs, this is handled for you in the background. Event Hubs partitions are replicated three times, but at the time of writing you cannot change this figure.

Security is another plus, especially for those of you who have spent time setting it up on Kafka. There is no messy Kerberos and there are no certificates to manage: SSL is enabled out of the box and fully integrated with Azure AD. You choose which users have control over the cluster, while reading and writing are governed by shared access policies. The cluster administrator defines these policies. During setup, a default policy (RootManageSharedAccessKey) is always created, and it grants full control over the entire namespace.

The administrators can create these policies and distribute the keys to the right people in the organisation. If you think a key is compromised, you can easily delete it and generate a new one.
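The keys behind these access policies are used to sign Shared Access Signature (SAS) tokens. The sketch below follows the token format in Microsoft's SAS documentation for Event Hubs and Service Bus. The signature is an HMAC-SHA256, keyed with the policy key, over the URL-encoded resource URI and an expiry timestamp. The namespace, hub, policy name and key are placeholders. The optional `now` parameter exists only to make the example deterministic.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(resource_uri: str, policy_name: str, policy_key: str,
                       ttl_seconds: int = 3600, now: Optional[int] = None) -> str:
    """Build 'SharedAccessSignature sr=<uri>&sig=<signature>&se=<expiry>&skn=<policy>'."""
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    expiry = str((int(time.time()) if now is None else now) + ttl_seconds)
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    # The policy key string itself (as UTF-8 bytes) is the HMAC-SHA256 key.
    digest = hmac.new(policy_key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return f"SharedAccessSignature sr={encoded_uri}&sig={signature}&se={expiry}&skn={policy_name}"


token = generate_sas_token(
    "https://my-namespace.servicebus.windows.net/my-hub",  # placeholder namespace and hub
    "send-only-policy",                                    # placeholder policy name
    "placeholder-key",                                     # never hard-code real keys
    ttl_seconds=600,
    now=1_700_000_000,
)
print(token.split("&se=")[1])  # 1700000600&skn=send-only-policy
```

Because each token expires, you can hand out short-lived, send-only tokens instead of the policy key itself.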

For anyone who has to argue with architects and cyber security teams, this is definitely a big plus.

Kafka enabled

This one needs some explanation. Until recently, Event Hubs was not compatible with Kafka clients, but in May 2018 Microsoft added this feature in preview. It means that your existing Kafka consumers and producers now also work with Event Hubs. There are a couple of things you need to consider:

- So far only Kafka clients 1.X are supported

- You have to explicitly enable this feature when creating the cluster; you cannot enable it afterwards.

While this is a great feature, it would be nice to see it enabled by default.
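Pointing an existing Kafka client at Event Hubs is mostly a configuration change. The sketch below builds a configuration dictionary using the key style of librdkafka-based clients such as `confluent-kafka`. It sets the namespace's fully qualified name on port 9093, SASL over TLS with the PLAIN mechanism, the literal username `$ConnectionString`, and the namespace connection string as the password. The namespace and connection string are placeholders, and no client library is imported. Check the key names against your own client's documentation.

```python
from typing import Dict


def event_hubs_kafka_config(namespace: str, connection_string: str, group_id: str) -> Dict[str, str]:
    """Kafka client settings for an Event Hubs namespace (librdkafka-style keys)."""
    return {
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",        # the Kafka endpoint requires TLS
        "sasl.mechanisms": "PLAIN",
        "sasl.username": "$ConnectionString",   # literal value, not a variable
        "sasl.password": connection_string,     # namespace-level connection string
        "group.id": group_id,                   # corresponds to an Event Hubs consumer group
    }


config = event_hubs_kafka_config(
    "my-namespace",  # placeholder
    "Endpoint=sb://my-namespace.servicebus.windows.net/;SharedAccessKeyName=<policy>;SharedAccessKey=<key>",
    "analytics",
)
print(config["bootstrap.servers"])  # my-namespace.servicebus.windows.net:9093
```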

Capture mode

Instead of having a service read your data and write it to storage, you can use the capture feature integrated into Event Hubs. When you create an event hub, you can choose where to offload the data. At the time of writing there are two options: Azure Blob Storage or Azure Data Lake Store. Files are created at an interval you specify, either by size (in MB) or by time.

Note that this feature only works with clusters created on the Standard tier, not on the Basic tier.

The bad and the ugly

Unfortunately, it is not all sunshine in Microsoft land. Below is an overview of the issues I encountered (some of them solved at the time of writing), ranging from “shrug” to “screen-smashing rage”.

We said capture mode was a plus, but it also has a drawback: the directory structure follows a fixed format and cannot be changed. So if you want all your files in one daily directory, forget it. Since the structure already follows a pattern, it would be more useful to let end users decide what it looks like.

Quotas, quotas everywhere… Azure seems fixated on locking everything down with quotas. Among others, you will run into the following limitations:

- Messages can’t be bigger than 256 KB

- Number of machines in a cluster is maximum 10

- Number of Event Hubs is limited to 10 per namespace

The list goes on; more information is available in the Event Hubs quotas documentation. The support page does not make clear which quotas can be extended, and in the worst case you lose a couple of days getting certain quotas increased.

Kafka-enabled flag in Terraform

While an ARM template lets us automate cluster deployment, I was also looking into automated deployment with Terraform.

Unfortunately, the Kafka-enabled option wasn't available there, which led to the following tickets:

- https://github.com/Azure/azure-sdk-for-go/issues/2099

- https://github.com/terraform-providers/terraform-provider-azurerm/issues/1417

Once both tickets are resolved, we can use Terraform to create a Kafka-enabled cluster.

Serialisation bug

According to Microsoft, this one was fixed in the week of 30 July. The problem occurred when producing messages through HTTP requests. The messages were posted successfully to Event Hubs, but we were unable to read them with the Kafka client libraries, while the native Event Hubs libraries read them fine.

While this was more of a nuisance than a blocking issue, I still believe it shows poor compatibility testing by Microsoft.

Spark performance

When we tried to write data into Event Hubs from Spark using Microsoft's native Spark connector, writes never exceeded 3 KB/s, which was slow and frustrating. Since 2 July 2018 this has been fixed and throughput is better, though still not ideal. As an alternative, I suggest using the Kafka libraries, which seem to achieve good throughput (for the dataset I tested, up to 1.3 MB/s).

Retention period

The retention period per event hub is at most 7 days. I have heard it will be extended to 30 days in the future, but I believe the choice should be left to the customer.

An Event Hubs namespace listens only on port 9093 for Kafka clients, and on port 443 if you produce messages through an HTTP client. While this has some advantages for Microsoft, it also has disadvantages for customers. Other technologies let you change the port, so why hard-code it?

Exactly-once semantics

This feature was introduced in Kafka 0.11 and has been described at length by Confluent. While it is complex to implement, it gives end users much more flexibility when joining streams and building real-time applications, so hopefully Event Hubs will get it soon.

Kafka connect and Kafka streams

This one is easy: neither is supported. There are plans to support Kafka Streams, but there is no mention of Kafka Connect. These two frameworks helped make Kafka as popular as it is today, so I am unsure why Microsoft did not support them from day one.

While it is obvious that Microsoft is trying to challenge the dominance of Apache Kafka (or Confluent) they dropped the ball on this one. The platform is riddled with bugs and a lot of features are still missing.

In 2015 Microsoft published an article comparing Event Hubs and Kafka, yet most of its information is inaccurate, outdated or twisted.

Time to update or remove it, Microsoft; this is just shooting yourself in the foot. To give a couple of examples:

- Throttling: Microsoft states that throttling messages is a good idea and that Kafka does not support it. Yet Kafka has quotas to throttle network bandwidth and client request rates. The claim that Kafka can simply be abused is untrue: with SSL and authentication enabled, clients must be authorized, and quotas can be applied per user or client.

- Security: they state there is no security, yet Kafka supports SSL and Kerberos.

If you know both technologies, you will find more inaccurate statements.

So should we toss it? Maybe not. It brings a couple of features to the table that are better or easier than with Kafka, but for me maturity is the biggest issue.

If you rely on heavy-duty streaming and complex operations on those streams, I would still go with Apache Kafka. It might be a bit more complex to set up, but you get so much more in return.

As always, I hope you enjoyed reading. Next time I will try to give a technical explanation of how to set up the platform and how to write some code against it.

I wrote this article from a personal point of view while trying to be as objective as possible. These thoughts are not necessarily those of my firm.

Source: Azure Event Hubs: The good, the bad and the ugly


Azure Sentinel with Event Hubs – Part 2

- 6 April 2022

In our introductory blog post on Azure Sentinel and Event Hubs, we explained the basic functionality of Event Hubs and how they can help address data ingestion challenges in large organizations. In this post, we take a deep dive into what happens between the Event Hubs and Sentinel, and give a more detailed view of how logs can be processed and filtered before being ingested into Sentinel. Such filtering can be required to limit the quantity of logs ingested into Sentinel and to reduce costs.

We discuss two possible methods for processing logs: Azure Functions and Stream Analytics. These are not the only options, however. Technically, any system or process that can act as an Event Hub consumer can be used for this purpose.

Filtering Event Hubs logs

Azure Event Hubs are useful for gathering large volumes of logs and distributing them among different consumers, but they are limited when logs need further processing or filtering. If the end user of the logs (e.g. Sentinel) needs only a subset of the logs received, another service has to filter them, acting as a middleman between the Event Hub and Sentinel. This is where Azure Functions and/or Azure Stream Analytics come into play.

Azure Functions

Azure Functions are serverless functions that run on continually updated infrastructure managed by Microsoft. They can be used for any task that can be scripted, including sending emails or notifications, starting backups and processing events.

Azure Functions are an inexpensive option that can be deployed rapidly. Functions can be written natively in C#, Java, PowerShell, Python or JavaScript, which makes them a simple yet powerful tool to process and filter logs before ingesting them into Sentinel.

An Azure Function deployed between an Event Hub and Sentinel would typically be composed of the following:

- An Event Hub trigger that starts the function for each event or each batch of events received,

- A code section going through the logs to filter and/or enrich them,

- A code section sending the logs to Sentinel and its underlying Log Analytics workspace using the Azure Monitor HTTP Data Collector API.
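The sending step is the least obvious part of that list. The HTTP Data Collector API authenticates each POST with a `SharedKey` authorization header. The signature is an HMAC-SHA256, keyed with the base64-decoded workspace key, over the HTTP method, content length, content type, `x-ms-date` value and resource path. The sketch below builds the request URL, headers and body following Microsoft's documented format, without sending anything. The workspace ID, key and `Log-Type` name are placeholders. Microsoft has announced the retirement of this API in favour of the Logs Ingestion API, so check which one your workspace should use.

```python
import base64
import hashlib
import hmac
import json
from datetime import datetime, timezone
from typing import Dict, List, Tuple


def build_data_collector_request(workspace_id: str, shared_key_b64: str, log_type: str,
                                 records: List[dict], now: datetime) -> Tuple[str, Dict[str, str], bytes]:
    """Return (url, headers, body) for a POST to the HTTP Data Collector API."""
    body = json.dumps(records).encode("utf-8")
    rfc1123_date = now.strftime("%a, %d %b %Y %H:%M:%S GMT")
    string_to_sign = f"POST\n{len(body)}\napplication/json\nx-ms-date:{rfc1123_date}\n/api/logs"
    key = base64.b64decode(shared_key_b64)  # the workspace key is base64-encoded
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    url = f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01"
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"SharedKey {workspace_id}:{signature}",
        "Log-Type": log_type,  # becomes a custom table name with a _CL suffix
        "x-ms-date": rfc1123_date,
    }
    return url, headers, body


url, headers, body = build_data_collector_request(
    "00000000-0000-0000-0000-000000000000",        # placeholder workspace ID
    base64.b64encode(b"placeholder-key").decode(),  # placeholder shared key
    "FilteredAksAudit",                             # hypothetical custom log name
    [{"verb": "delete", "user": "alice"}],
    datetime(2022, 4, 6, 12, 0, tzinfo=timezone.utc),
)
print(headers["x-ms-date"])  # Wed, 06 Apr 2022 12:00:00 GMT
```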

This can be a powerful solution when ingesting logs such as AKS (Azure Kubernetes Service) or SQL audit logs. These can generate a large volume of events, and some of those events are not needed for security monitoring.
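The filtering step itself can be as simple as a predicate over each record. The sketch below keeps Kubernetes audit entries that change state, such as `create`, `patch` or `delete`. It drops read-only calls (`get`, `list`, `watch`), which tend to make up much of the volume, and tags each kept record with the reason it was kept. The record shape and the choice of verbs to drop are assumptions for illustration only; agree on your own criteria with your security team.

```python
from typing import Dict, Iterable, List

# Assumed policy: read-only Kubernetes API verbs are noise for this workspace.
READ_ONLY_VERBS = {"get", "list", "watch"}


def filter_audit_records(records: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Drop read-only audit records; enrich the rest with the reason they were kept."""
    kept = []
    for record in records:
        verb = record.get("verb", "").lower()
        if verb in READ_ONLY_VERBS:
            continue  # dropped: high volume, low security value (by assumption)
        enriched = dict(record)
        enriched["filter_reason"] = f"verb '{verb or 'unknown'}' is not read-only"
        kept.append(enriched)
    return kept


batch = [
    {"verb": "get", "objectRef": "pods"},
    {"verb": "delete", "objectRef": "secrets"},
    {"verb": "watch", "objectRef": "configmaps"},
    {"verb": "patch", "objectRef": "deployments"},
]
print([r["objectRef"] for r in filter_audit_records(batch)])  # ['secrets', 'deployments']
```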

Stream Analytics

Azure Stream Analytics is a real-time event-processing and analytics engine that can be directly connected to an Event Hub. The service makes it easy to ingest, process, and analyze streaming data from Azure Event Hubs, enabling powerful insights to drive real-time actions.

Stream Analytics does not, however, support outputting logs directly to a Log Analytics workspace (and therefore to Sentinel). It can still be an alternative to Azure Functions in some cases, such as storing logs in a storage account for long-term retention, or creating dashboards based on the logs processed by the Event Hub. The service may also suit teams familiar with database queries better, as Stream Analytics queries are based on SQL (and can be extended with JavaScript or C# user-defined functions).

Summary

The versatility of Azure Event Hubs also lets users ingest logs from a wide range of sources, which makes it attractive for legacy systems. Event Hubs also supports Apache Kafka, simplifying integration with non-Azure systems that can use this protocol (e.g. an ELK stack).

With the flexibility and capabilities provided by other services such as Azure Functions or Stream Analytics, logs ingested by the Event Hub can easily be enriched and/or filtered to ensure each consumer gets access to the logs it requires without being overloaded.

Although Event Hubs connect over the public network by default, they can be paired with Azure Virtual Networks to keep the data flow private. Going one step further, they can be combined with ExpressRoute to securely ingest data from a locked-down on-premises source.

John Elliot Bannon McCullough


Azure Event Hubs Archive is now in public preview, providing efficient micro-batch processing

By Microsoft Azure

Posted on September 14, 2016

Azure Event Hubs is a real-time, highly scalable, and fully managed data-stream ingestion service that can ingress millions of events per second and stream them through multiple applications. This lets you process and analyze massive amounts of data produced by your connected devices and applications.

Included in the many key scenarios for Event Hubs are long-term data archival and downstream micro-batch processing. Customers typically use compute or other homegrown solutions for archival or to prepare for batch processing tasks. These custom solutions involve significant overhead with regards to creating, scheduling and managing batch jobs. Why not have something out-of-the-box that solves this problem? Well, look no further – there’s now a great new feature called Event Hubs Archive!

Event Hubs Archive addresses these important requirements by archiving the data directly from Event Hubs to Azure storage as blobs. ‘Archive’ will manage all the compute and downstream processing required to pull data into Azure blob storage. This reduces your total cost of ownership, setup overhead, and management of custom jobs to do the same task, and lets you focus on your apps!

Benefits of Event Hub Archive

Simple setup

Extremely straightforward to configure your Event Hubs to take advantage of this feature.

Reduced total cost of ownership

Since Event Hubs handles all the management, there is minimal overhead involved in setting up your custom job processing mechanisms and tracking them.

Cohesive with your Azure Storage

By just choosing your Azure Storage account, Archive pulls the data from Event Hubs to your containers.

Near-real-time batch analytics

Archive data is available within minutes of ingress into Event Hubs. This enables most common scenarios of near-real time analytics without having to construct separate data pipelines.

A peek inside the Event Hubs Archive

Event Hubs Archive can be enabled in one of the following ways:

With just a click on the new Azure portal on an Event Hub in your namespace

Azure Resource Manager templates

Once the Archive is enabled for the Event Hub, you need to define the time and size windows for archiving.

The time window allows you to set the frequency with which the archival to Azure Blobs will happen. The frequency range is configurable from 60 – 900 seconds (1 – 15 minutes), both inclusive, with a granularity of 1 second. The default setting is 300 seconds (5 minutes).

The size window defines the amount of data built up in your Event Hub before an archival operation. The size range is configurable between 10MB – 500MB (10485760 – 524288000 bytes), both inclusive, at byte level granularity.

The archive operation starts as soon as either the time window or the size window is exceeded, whichever comes first. After setting the time and size windows, the next step is to configure the destination: the storage account of your choice.
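The trigger rule can be written as a small function. An archive operation fires when either the time window or the size window is reached, whichever happens first. The sketch uses the default 300-second time window and an illustrative 100 MB size window, and it validates both against the ranges quoted above. The function and constant names are hypothetical, and the service's exact boundary behaviour (reached versus strictly exceeded) is an assumption.

```python
MIN_TIME_S, MAX_TIME_S = 60, 900                  # time window range from the post
MIN_SIZE_B, MAX_SIZE_B = 10_485_760, 524_288_000  # size window range (10 MB to 500 MB)


def validate_windows(time_window_s: int, size_window_b: int) -> None:
    if not MIN_TIME_S <= time_window_s <= MAX_TIME_S:
        raise ValueError("time window must be between 60 and 900 seconds")
    if not MIN_SIZE_B <= size_window_b <= MAX_SIZE_B:
        raise ValueError("size window must be between 10 MB and 500 MB")


def should_archive(elapsed_s: float, buffered_b: int,
                   time_window_s: int = 300, size_window_b: int = 100 * 1024 * 1024) -> bool:
    """Fire when either window is reached, whichever comes first."""
    validate_windows(time_window_s, size_window_b)
    return elapsed_s >= time_window_s or buffered_b >= size_window_b


print(should_archive(120, 150 * 1024 * 1024))  # True  (size window reached first)
print(should_archive(120, 5 * 1024 * 1024))    # False (neither window reached yet)
print(should_archive(300, 0))                  # True  (time window reached with no data)
```

The last case corresponds to the empty blobs that Archive creates when no events arrive within the window.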

That’s it! You’ll soon see blobs being created in the specified Azure Storage account’s container.

The blobs are created with the following naming convention:

{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}

For example, Myehns/myhub/0/2016/07/20/09/02/15. The blobs are in standard Avro format.
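Judging from the example path, each blob name has nine segments: namespace, event hub, partition ID, then year, month, day, hour, minute and second, with zero-padded date and time fields. The sketch below reproduces that pattern, which helps when a downstream batch job needs to locate the archived data for a given partition and time. The function name is illustrative. It builds only the path shown in the post, not a full storage URL, and it makes no assumption about the time zone of the timestamp.

```python
from datetime import datetime


def archive_blob_path(namespace: str, event_hub: str, partition_id: int, when: datetime) -> str:
    """{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}"""
    return "/".join([namespace, event_hub, str(partition_id), when.strftime("%Y/%m/%d/%H/%M/%S")])


print(archive_blob_path("Myehns", "myhub", 0, datetime(2016, 7, 20, 9, 2, 15)))
# Myehns/myhub/0/2016/07/20/09/02/15
```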

If there is no event data in the specified time and size window, empty blobs will be created by Archive.

Archive will be an option when creating an Event Hub in a namespace, limited to one per Event Hub. Its cost will be added to the Throughput Unit charge and will therefore depend on the number of throughput units selected for the Event Hub.

Opting into Archive involves 100% egress of ingested data, and the cost of storage is not included. This means the charge is primarily for compute (hey, we are handling all this for you!).

Next Steps?

Learn all about this new feature here, Event Hubs Archive

Use templates to enable the feature on your Event Hub, Enable Archive using Azure Resource Manager

Check out the price details on Azure Event Hubs pricing.

Let us know what you think about new sinks and new serialization formats.

Start enjoying this feature, available today.


Explore Azure Event Hubs

Learn how Azure Event Hubs captures events and how to scale your processing application.

Learning objectives

After completing this module, you'll be able to:

- Describe the benefits of using Event Hubs and how it captures streaming data.

- Explain how to process events.

- Perform common operations with the Event Hubs client library.

Prerequisites

- You should be familiar with developer concepts and terminology.

- An understanding of cloud computing and some experience with the Azure portal.

- Introduction

- Discover Azure Event Hubs

- Explore Event Hubs Capture

- Scale your processing application

- Control access to events

- Perform common operations with the Event Hubs client library

- Knowledge check

- Summary


You can find Event Hubs samples on GitHub. These samples demonstrate key features in Azure Event Hubs. This article categorizes and describes the samples available, with links to each.

Azure Event Hubs is a scalable event processing service that ingests and processes large volumes of events and data with low latency and high reliability. For a high-level overview of the service, see the "What is Event Hubs?" overview. This article builds on the information in that overview and provides technical and implementation ...

Event Hubs is a fully managed, real-time data ingestion service that's simple, trusted, and scalable. Stream millions of events per second from any source to build dynamic data pipelines and immediately respond to business challenges. Keep processing data during emergencies using the geo-disaster recovery and geo-replication features.

Here's a step-by-step example of a serverless streaming application using Azure Event Hubs and Azure Functions.

Create an Azure Event Hub: start by creating an Azure Event Hub through the Azure ...

How to Use Azure Event Hubs

Using Azure Event Hubs involves three main steps: creating an event hub namespace, creating an event hub, and sending and receiving data. Let's explore each of these steps in detail.

Step 1: Create an Event Hub Namespace

To use Azure Event Hubs, you need to create an event hub namespace, which is a container for ...
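When sending data, producers usually group events into batches. The sketch below shows the idea with plain Python, assuming a 1 MB cap on a batch; it is a simplification, not the SDK's algorithm. Real batches also carry per-event protocol overhead that this sketch ignores, and in the azure-eventhub Python SDK, `EventHubProducerClient.create_batch()` does this size accounting for you. The function name `pack_batches` is hypothetical.

```python
from typing import Iterable, List

# Assumed cap for illustration: 1 MB per batch. Per-event protocol
# overhead is ignored here, so real batches hold slightly less.
MAX_BATCH_BYTES = 1024 * 1024


def pack_batches(events: Iterable[bytes], max_bytes: int = MAX_BATCH_BYTES) -> List[List[bytes]]:
    """Greedily group event payloads so each batch's total size stays within max_bytes."""
    batches: List[List[bytes]] = []
    current: List[bytes] = []
    current_size = 0
    for body in events:
        if len(body) > max_bytes:
            # An event larger than the cap would be rejected, so fail early.
            raise ValueError(f"event of {len(body)} bytes exceeds the {max_bytes}-byte limit")
        if current and current_size + len(body) > max_bytes:
            # Adding this event would overflow the batch: close it and start a new one.
            batches.append(current)
            current, current_size = [], 0
        current.append(body)
        current_size += len(body)
    if current:
        batches.append(current)
    return batches
```

For example, `pack_batches([b"a" * 600, b"b" * 600, b"c" * 300], max_bytes=1000)` yields two batches: the first 600-byte event alone, then the second and third events together (900 bytes).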

Understanding Azure Event Hubs Properties

Before delving into capacity planning, let's understand the key components of Azure Event Hubs. One noteworthy component is the Event Hub itself, a highly scalable data streaming platform designed for real-time data analysis. Azure Event Hubs offers four tiers: Basic, Standard, Premium, and ...


Azure Event Hubs is a cloud native data streaming service that can stream millions of events per second, with low latency, from any source to any destination. Event Hubs is compatible with Apache Kafka, and it enables you to run existing Kafka workloads without any code changes. Using Event Hubs to ingest and store streaming data, businesses ...

Azure Event Hub can process millions of events per second, making it a good fit for our use case.

4. Data Processing & Filtering

Tool: Azure Stream Analytics. Once data arrives in the Event Hub, Azure Stream Analytics ingests the streaming data, processes it in real time based on our predefined query, and sends the processed data to its designated ...
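The predefined query itself isn't shown, so the following is only an illustration of the kind of filter-and-aggregate work a Stream Analytics job might perform. It evaluates a finite list locally rather than a live stream, and the event shape, field names, threshold, and window size are all hypothetical. It is roughly equivalent to a query with a `WHERE` filter and `GROUP BY ... TumblingWindow(second, 10)`.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event shape: (timestamp_seconds, device_id, temperature).
Event = Tuple[float, str, float]


def tumbling_counts(events: Iterable[Event], window_s: int, threshold: float) -> Dict[Tuple[int, str], int]:
    """Count readings above `threshold` per device in fixed, non-overlapping windows.

    Loosely mirrors a Stream Analytics query such as:
      SELECT device_id, COUNT(*) FROM input
      WHERE temperature > 25
      GROUP BY device_id, TumblingWindow(second, 10)
    Results here are keyed by window start; Stream Analytics timestamps
    each window's output at the window end.
    """
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for ts, device_id, temperature in events:
        if temperature <= threshold:
            continue  # filtering step: drop readings that don't match
        window_start = int(ts // window_s) * window_s  # the window containing ts
        counts[(window_start, device_id)] += 1
    return dict(counts)
```

Tumbling windows are contiguous and never overlap, so each event falls into exactly one window. That property is what makes the simple integer bucketing above sufficient.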

These Function Apps share the same App Service Plan and Application Insights instance, but each Function App uses its own Azure Storage account. There are two Event Hub namespaces, each of which contains only one event hub. In this case study, the total message rate is 23,800 messages per second, so the system needs 24 TUs (throughput units), since one TU covers up to 1,000 ingress events per second. By default, the maximum number of TUs is 20.
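The TU arithmetic can be checked with a small helper based on the Standard-tier per-TU ingress limits (up to 1 MB/s or 1,000 events/s, whichever is reached first). The function names are hypothetical. The 20-TU cap comes from the case study's stated default. Splitting the requirement across namespaces is one plausible reading of why the case study uses two namespaces; the source doesn't say so explicitly.

```python
import math


def required_tus(events_per_sec: float, mb_per_sec: float = 0.0) -> int:
    """Throughput units needed for a given ingress rate.

    One Standard-tier TU allows ingress of up to 1,000 events/s or 1 MB/s,
    whichever limit is hit first, so the larger ratio decides.
    """
    return math.ceil(max(events_per_sec / 1000, mb_per_sec / 1.0))


def namespaces_needed(tus: int, max_tus_per_namespace: int = 20) -> int:
    """Spread a TU requirement across namespaces capped at `max_tus_per_namespace`."""
    return math.ceil(tus / max_tus_per_namespace)


tus = required_tus(23_800)
print(tus, namespaces_needed(tus))  # prints: 24 2
```

If events are large, the byte rate rather than the event count becomes the binding limit. For example, `required_tus(500, mb_per_sec=3.2)` returns 4.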

Build event-driven applications that support real-time processing at massive scale. Data is valuable only when there's an easy way to process it and get timely insights from its sources. Azure Event Hub is a big ...

Capture mode. Instead of requiring a separate service to read your data and write it to storage, Event Hubs comes with an integrated solution. When you create an event hub, you can choose where to offload the data. At the time of writing, there are two options: Azure Blob Storage or Azure Data Lake.
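Downstream jobs need to know where Capture puts its files. By default, Capture names blobs `{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}` and writes them in Apache Avro format. The helper below is a hypothetical sketch that rebuilds such a name for a given capture time. Treating the timestamp as UTC is an assumption of this sketch. If you configure a custom naming format, this path will not match.

```python
from datetime import datetime, timezone


def capture_blob_name(namespace: str, event_hub: str, partition_id: str, window_start: datetime) -> str:
    """Build a blob name following Capture's default naming convention.

    {Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}
    Capture writes Avro files, so ".avro" is appended.
    """
    if window_start.tzinfo is None:
        # A naive datetime would be silently treated as local time by astimezone().
        raise ValueError("window_start must be timezone-aware")
    t = window_start.astimezone(timezone.utc)  # assumption: capture paths use UTC
    return (
        f"{namespace}/{event_hub}/{partition_id}/"
        f"{t:%Y}/{t:%m}/{t:%d}/{t:%H}/{t:%M}/{t:%S}.avro"
    )


print(capture_blob_name("mynamespace", "myeventhub", "0",
                        datetime(2017, 12, 8, 3, 3, 17, tzinfo=timezone.utc)))
# prints: mynamespace/myeventhub/0/2017/12/08/03/03/17.avro
```

A batch job can use the prefix up to the hour (for example `mynamespace/myeventhub/0/2017/12/08/03/`) to list only the files for the period it needs to process.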

By integrating Event Hubs Capture with Event Grid, you can now focus on actionable insights. Capture automatically delivers the streaming data in Event Hubs to your Azure Blob storage, with Azure Data Lake Store support announced as coming soon. "Actionable insights sit at the apex of your data pyramid. An insight that drives action is typi...

To create an event hub within the namespace:

1. On the Overview page, select + Event hub on the command bar.
2. Type a name for your event hub, then select Review + create.

The partition count setting allows you to parallelize consumption across many consumers. For more information, see Partitions.
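To see why partition count bounds parallelism, consider the common setup in which each partition has one active reader per consumer group. The sketch spreads partitions round-robin across consumer instances. It is a deliberate simplification: the SDKs' event processor balances ownership dynamically through a checkpoint store, not with this static assignment. The function name is hypothetical.

```python
from typing import Dict, List


def assign_partitions(partition_count: int, consumers: List[str]) -> Dict[str, List[int]]:
    """Give each consumer in one consumer group a disjoint set of partitions, round-robin.

    When there are more consumers than partitions, the extras get nothing,
    so adding consumers beyond the partition count adds no parallelism.
    """
    if not consumers:
        raise ValueError("need at least one consumer")
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for pid in range(partition_count):
        assignment[consumers[pid % len(consumers)]].append(pid)
    return assignment


print(assign_partitions(4, ["a", "b", "c"]))  # prints: {'a': [0, 3], 'b': [1], 'c': [2]}
```

With 2 partitions and 3 consumers, one consumer sits idle. This is why partition count is worth choosing with the expected number of parallel readers in mind.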

Azure Sentinel with Event Hubs - Part 1. 17 January 2022. A common problem for large organizations using Azure Sentinel is handling data ingestion from applications. We show how our engineers have used Azure Event Hubs in a large environment at a global insurance company to control data segregation, event filtering, and volume.

An Azure Function deployed between an Event Hub and Sentinel would typically include a code section that sends the logs to Sentinel, and its underlying Log Analytics workspace, using the Azure Monitor HTTP Data Collector API. This can be a powerful solution when ingesting logs such as AKS (Azure Kubernetes Service) or SQL audit ...
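The part of such a function that is easiest to get wrong is request signing. The HTTP Data Collector API expects an `Authorization: SharedKey <WorkspaceID>:<Signature>` header. The signature is a base64-encoded HMAC-SHA256 of a fixed string-to-sign, keyed with the base64-decoded workspace key. The sketch below computes only that header. The workspace ID and key shown are placeholders. The POST itself (to `https://<workspace-id>.ods.opinsights.azure.com/api/logs?api-version=2016-04-01`, with `Log-Type` and `x-ms-date` headers) is left out. Note that Microsoft has since announced the deprecation of this API in favor of the Logs Ingestion API.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate


def build_signature(workspace_id: str, shared_key_b64: str, rfc1123_date: str,
                    content_length: int, method: str = "POST",
                    content_type: str = "application/json",
                    resource: str = "/api/logs") -> str:
    """Return the Authorization header value for the HTTP Data Collector API."""
    string_to_sign = (
        f"{method}\n{content_length}\n{content_type}\n"
        f"x-ms-date:{rfc1123_date}\n{resource}"
    )
    key = base64.b64decode(shared_key_b64)  # the workspace key is stored base64-encoded
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"SharedKey {workspace_id}:{signature}"


# Placeholder workspace ID and key, for illustration only.
body = b'[{"message": "hello from Event Hubs"}]'
date = formatdate(usegmt=True)  # RFC 1123 date; must match the x-ms-date header sent
auth = build_signature("00000000-0000-0000-0000-000000000000",
                       base64.b64encode(b"not-a-real-key").decode(),
                       date, len(body))
```

`content_length` must be the byte length of the exact body being sent. Computing it from the encoded bytes, not the string, avoids signature mismatches with non-ASCII log content.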

Overview. Azure Event Hubs provides an Apache Kafka endpoint on an event hub, which enables users to connect to the event hub using the Kafka protocol. You can often use an event hub's Kafka endpoint from your applications without any code changes. You modify only the configuration, that is, update the connection string in configurations to ...
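As a concrete illustration of the "configuration only" change, these are the client settings typically used to point a Kafka client at the Event Hubs Kafka endpoint. The keys follow librdkafka naming, as used by the `confluent-kafka` Python package (for example `Producer(cfg)`). The namespace name and connection string are placeholders, and the helper name is hypothetical. The Kafka topic corresponds to the event hub name.

```python
def kafka_config_for_event_hubs(namespace: str, connection_string: str) -> dict:
    """librdkafka-style settings that connect a Kafka client to an Event Hubs namespace."""
    return {
        # The Kafka endpoint listens on port 9093 of the namespace host.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # Event Hubs expects the literal username "$ConnectionString"
        # and the namespace connection string as the password.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


cfg = kafka_config_for_event_hubs(
    "myns",  # placeholder namespace
    "Endpoint=sb://myns.servicebus.windows.net/;SharedAccessKeyName=...;SharedAccessKey=...",
)
```

Because the connection string acts as the password, it should come from a secret store or environment variable rather than being hard-coded as in this sketch.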

Two of the many key scenarios for Event Hubs are long-term data archival and downstream micro-batch processing. Customers typically use compute (Event Processor Host/Event Receivers) or Stream Analytics jobs to perform these archival or batch processing tasks. These, along with other custom downstream solutions, involve significant overhead for scheduling and managing batch jobs.

