Problem Solving in Artificial Intelligence

A reflex agent maps states directly to actions. When the state-to-action mapping is too large for the agent to store or compute, the agent can no longer operate effectively in its environment. The problem is then handed to a problem-solving agent, which breaks the large problem into smaller subproblems and solves them one by one. The combined sequence of actions produces the desired outcome.

Different types of problem-solving agents are defined according to the problem and its working domain. They work at an atomic level, meaning that states have no internal structure visible to the problem-solving algorithm. A problem-solving agent works by precisely defining the problem and its candidate solutions. Problem solving is therefore the part of artificial intelligence that covers techniques such as trees, B-trees, and heuristic algorithms.

A problem-solving agent is also result-driven: it always focuses on satisfying its goals.

There are three basic types of problems in artificial intelligence:

1. Ignorable: Solution steps can be ignored.

2. Recoverable: Solution steps can be undone.

3. Irrecoverable: Solution steps cannot be undone.

Steps for problem solving in AI: Problems in AI are closely tied to human nature and human activities, so we need a finite number of steps to solve a problem in a way that makes human work easier.

The following steps are required to solve a problem:

- Problem definition: Detailed specification of inputs and acceptable system solutions.

- Problem analysis: Analyse the problem thoroughly.

- Knowledge representation: Collect detailed information about the problem and define all possible techniques.

- Problem-solving: Select the best technique.

Components to formulate the associated problem:

- Initial State: The state from which the AI agent starts working towards the specified goal. The methods used to solve the problem domain are also initialized from this state.

- Action: A function that takes a state, beginning with the initial state, and returns all the possible actions available in it.

- Transition: This component describes what each action does. It applies the action chosen in the previous component and passes the resulting state on to the next stage.

- Goal test: This component determines whether the state produced by the transition model satisfies the specified goal. Once the goal is achieved, the agent stops acting and moves on to determining the cost of achieving the goal.

- Path costing: This component assigns a numerical cost to achieving the goal. It accounts for all hardware, software, and human working costs.
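The five components above can be sketched in code. The example below is a minimal illustration, not a definitive implementation: the road map `ROADS`, the class name `RouteProblem`, and the choice of uniform-cost search as the solver are all assumptions made for this sketch.

```python
import heapq
from dataclasses import dataclass

# Hypothetical road map: each edge weight is the cost of one step (illustrative values).
ROADS = {
    "A": {"B": 2, "C": 5},
    "B": {"C": 1, "D": 4},
    "C": {"D": 1},
    "D": {},
}


@dataclass(frozen=True)
class RouteProblem:
    initial_state: str  # initial state
    goal_state: str

    def actions(self, state):
        """Actions: the neighbouring towns the agent can travel to from `state`."""
        return list(ROADS[state])

    def result(self, state, action):
        """Transition model: travelling to a town puts the agent in that town."""
        return action

    def is_goal(self, state):
        """Goal test."""
        return state == self.goal_state

    def step_cost(self, state, action):
        """Path costing: the cost of a path is the sum of its step costs."""
        return ROADS[state][action]


def uniform_cost_search(problem):
    """Expand the cheapest known path first; return (cost, path) or None."""
    frontier = [(0, problem.initial_state, [problem.initial_state])]
    best_cost = {}
    while frontier:
        cost, state, path = heapq.heappop(frontier)
        if problem.is_goal(state):
            return cost, path
        if state in best_cost and best_cost[state] <= cost:
            continue  # already expanded this state via a cheaper path
        best_cost[state] = cost
        for action in problem.actions(state):
            nxt = problem.result(state, action)
            new_cost = cost + problem.step_cost(state, action)
            heapq.heappush(frontier, (new_cost, nxt, path + [nxt]))
    return None


print(uniform_cost_search(RouteProblem("A", "D")))  # (4, ['A', 'B', 'C', 'D'])
```

Keeping the formulation (`RouteProblem`) separate from the solver means the same search routine can be reused for any problem that exposes these five pieces.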

Problem Solving in Artificial Intelligence

In this tutorial, you will study the problem-solving approach in Artificial Intelligence. You will learn how an agent tackles a problem and what steps are involved in solving it. By Monika Sharma. Last updated: April 12, 2023

Problem Solving in AI

The aim of Artificial Intelligence is to develop systems that can solve a variety of problems on their own. The challenge is that, to understand a problem, a system must first perceive it and convert it into a form it can process. That is, when an agent confronts a problem, it should first sense the problem, and the information gained through sensing should be converted into a machine-understandable form. To do this, the agent follows a fixed sequence of steps in which a particular format for representing the agent's knowledge is defined, so that each time a problem arises, the agent can follow the same approach to find a solution.

Types of Problems in AI

The types of problems in artificial intelligence are:

1. Ignorable Problems

In ignorable problems, the solution steps can be ignored.

2. Recoverable Problems

In recoverable problems, the solution steps which you have already implemented can be undone.

3. Irrecoverable Problems

In irrecoverable problems, the solution steps which you have already implemented cannot be undone.
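To make the idea of a recoverable problem concrete, here is a small sketch built on a 3x3 sliding puzzle, where every move has an inverse that restores the previous state. The puzzle, the tuple encoding, and the function names are assumptions chosen for illustration; they do not come from the tutorial.

```python
# A 3x3 sliding puzzle stored as a tuple of 9 entries; 0 marks the blank square.
MOVES = {"up": -3, "down": 3, "left": -1, "right": 1}
INVERSE = {"up": "down", "down": "up", "left": "right", "right": "left"}


def apply_move(state, move):
    """Slide the blank in the given direction; return None if the move is illegal."""
    blank = state.index(0)
    row, col = divmod(blank, 3)
    if (move == "up" and row == 0) or (move == "down" and row == 2):
        return None
    if (move == "left" and col == 0) or (move == "right" and col == 2):
        return None
    target = blank + MOVES[move]
    cells = list(state)
    cells[blank], cells[target] = cells[target], cells[blank]
    return tuple(cells)


def undo_move(state, move):
    """Recoverable: a step is undone by applying the opposite move."""
    return apply_move(state, INVERSE[move])


start = (1, 2, 3,
         4, 0, 5,
         6, 7, 8)
moved = apply_move(start, "up")
restored = undo_move(moved, "up")
print(restored == start)  # True
```

In an irrecoverable problem no such `undo_move` exists, so the agent has to evaluate a step carefully before committing to it.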

Steps for Problem Solving in AI

The steps involved in solving a problem (by an agent based on Artificial Intelligence) are:

1. Define a problem

Whenever a problem arises, the agent must first define it precisely enough that a particular state space can be represented through it. Analyzing and defining the problem is a very important step: if the agent understands the problem as something different from the actual problem, the whole problem-solving process is of no use.

2. Form the state space

Convert the problem statement into a state space. A state space is the collection of all the possible valid states that an agent can reside in. Here, only the states that can exist under the current problem are included; the rest are ignored while dealing with this particular problem.

3. Gather knowledge

Collect and isolate the knowledge that the agent requires to solve the current problem. This knowledge comes both from the knowledge pre-embedded in the system and from the knowledge it has gathered through past experience in solving the same type of problem.

4. Planning (decide data structure and control strategy)

A problem may not always be an isolated problem. It may contain various related problems, or touch related areas that are affected by decisions made about the current problem. So, a well-suited data structure and a relevant control strategy must be chosen before attempting to solve the problem.

5. Applying and executing

Once the knowledge has been gathered and the strategies planned, the knowledge should be applied and the plans executed in a systematic way so as to reach the goal state in the most efficient and fruitful manner.
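The steps above can be sketched on a small example. The code below assumes a two-jug measuring puzzle (capacities of 4 and 3 litres, with a goal of 2 litres in the first jug); the puzzle and all names are illustrative assumptions. States are `(a, b)` pairs, the data structure is a queue, and the control strategy is breadth-first search.

```python
from collections import deque

CAP_A, CAP_B = 4, 3  # assumed jug capacities in litres
GOAL = 2             # assumed target amount in jug A


def successors(state):
    """State space: every state reachable from `state` in one action."""
    a, b = state
    pour_ab = min(a, CAP_B - b)  # how much can move from A to B
    pour_ba = min(b, CAP_A - a)  # how much can move from B to A
    candidates = {
        (CAP_A, b), (a, CAP_B),      # fill a jug
        (0, b), (a, 0),              # empty a jug
        (a - pour_ab, b + pour_ab),  # pour A into B
        (a + pour_ba, b - pour_ba),  # pour B into A
    }
    candidates.discard(state)  # drop actions that change nothing
    return candidates


def shortest_plan(start=(0, 0)):
    """Breadth-first search; returns the list of states from start to goal."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state[0] == GOAL:
            path = []
            while state is not None:
                path.append(state)
                state = parents[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parents:
                parents[nxt] = state
                queue.append(nxt)
    return None


plan = shortest_plan()
print(len(plan) - 1, "actions:", plan)
```

Breadth-first search is only one possible control strategy. It is used here because it returns a plan with the fewest actions, which keeps the example easy to check by hand.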

Components to Formulate the Associated Problem

- Initial State

- Path Costing

Artificial intelligence, or AI, is technology that enables computers and machines to simulate human intelligence and problem-solving capabilities.

On its own or combined with other technologies (e.g., sensors, geolocation, robotics), AI can perform tasks that would otherwise require human intelligence or intervention. Digital assistants, GPS guidance, autonomous vehicles, and generative AI tools (like OpenAI's ChatGPT) are just a few examples of AI in the daily news and our daily lives.

As a field of computer science, artificial intelligence encompasses (and is often mentioned together with) machine learning and deep learning. These disciplines involve the development of AI algorithms, modeled after the decision-making processes of the human brain, that can ‘learn’ from available data and make increasingly more accurate classifications or predictions over time.

Artificial intelligence has gone through many cycles of hype, but even to skeptics, the release of ChatGPT seems to mark a turning point. The last time generative AI loomed this large, the breakthroughs were in computer vision, but now the leap forward is in natural language processing (NLP). Today, generative AI can learn and synthesize not just human language but other data types including images, video, software code, and even molecular structures.

Applications for AI are growing every day. But as the hype around the use of AI tools in business takes off, conversations around AI ethics and responsible AI become critically important. For more on where IBM stands on these issues, please read Building trust in AI.

Weak AI—also known as narrow AI or artificial narrow intelligence (ANI)—is AI trained and focused to perform specific tasks. Weak AI drives most of the AI that surrounds us today. "Narrow" might be a more apt descriptor for this type of AI as it is anything but weak: it enables some very robust applications, such as Apple's Siri, Amazon's Alexa, IBM watsonx™, and self-driving vehicles.

Strong AI is made up of artificial general intelligence (AGI) and artificial super intelligence (ASI). AGI, or general AI, is a theoretical form of AI where a machine would have an intelligence equal to humans; it would be self-aware with a consciousness that would have the ability to solve problems, learn, and plan for the future. ASI—also known as superintelligence—would surpass the intelligence and ability of the human brain. While strong AI is still entirely theoretical with no practical examples in use today, that doesn't mean AI researchers aren't also exploring its development. In the meantime, the best examples of ASI might be from science fiction, such as HAL, the superhuman and rogue computer assistant in 2001: A Space Odyssey.

Machine learning and deep learning are sub-disciplines of AI, and deep learning is a sub-discipline of machine learning.

Both machine learning and deep learning algorithms use neural networks to ‘learn’ from huge amounts of data. These neural networks are programmatic structures modeled after the decision-making processes of the human brain. They consist of layers of interconnected nodes that extract features from the data and make predictions about what the data represents.

Machine learning and deep learning differ in the types of neural networks they use and the amount of human intervention involved. Classic machine learning algorithms use neural networks with an input layer, one or two ‘hidden’ layers, and an output layer. Typically, these algorithms are limited to supervised learning: the data needs to be structured or labeled by human experts to enable the algorithm to extract features from the data.

Deep learning algorithms use deep neural networks, which are composed of an input layer, three or more (but usually hundreds of) hidden layers, and an output layer. These multiple layers enable unsupervised learning: they automate the extraction of features from large, unlabeled and unstructured data sets. Because it doesn’t require human intervention, deep learning essentially enables machine learning at scale.
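The structural difference between a shallow and a deep network can be shown with a short sketch. The code below uses only the Python standard library, random untrained weights, and a `tanh` activation; the layer sizes and function names are assumptions for illustration, and no learning takes place.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """One fully connected layer: a weight matrix and a bias vector (random, untrained)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def build_network(sizes, seed=0):
    """sizes = [inputs, hidden_1, ..., hidden_k, outputs]."""
    rng = random.Random(seed)
    return [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]


def forward(layers, inputs):
    """Pass the inputs through every layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            math.tanh(sum(w * x for w, x in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


shallow = build_network([4, 8, 1])         # input, one hidden layer, output
deep = build_network([4, 8, 8, 8, 8, 1])   # input, four hidden layers, output

x = [0.5, -0.2, 0.1, 0.9]
print(len(shallow), "weight layers ->", forward(shallow, x))
print(len(deep), "weight layers ->", forward(deep, x))
```

Both networks share the same `forward` routine; only the number of hidden layers differs, which is the distinction the paragraphs above draw.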

Generative AI refers to deep-learning models that can take raw data—say, all of Wikipedia or the collected works of Rembrandt—and “learn” to generate statistically probable outputs when prompted. At a high level, generative models encode a simplified representation of their training data and draw from it to create a new work that’s similar, but not identical, to the original data.

Generative models have been used for years in statistics to analyze numerical data. The rise of deep learning, however, made it possible to extend them to images, speech, and other complex data types. Among the first class of AI models to achieve this cross-over feat were variational autoencoders, or VAEs, introduced in 2013. VAEs were the first deep-learning models to be widely used for generating realistic images and speech.

“VAEs opened the floodgates to deep generative modeling by making models easier to scale,” said Akash Srivastava, an expert on generative AI at the MIT-IBM Watson AI Lab. “Much of what we think of today as generative AI started here.”

Early examples of models, including GPT-3, BERT, or DALL-E 2, have shown what’s possible. In the future, models will be trained on a broad set of unlabeled data that can be used for different tasks, with minimal fine-tuning. Systems that execute specific tasks in a single domain are giving way to broad AI systems that learn more generally and work across domains and problems. Foundation models, trained on large, unlabeled datasets and fine-tuned for an array of applications, are driving this shift.

As to the future of AI, when it comes to generative AI, it is predicted that foundation models will dramatically accelerate AI adoption in enterprise. Reducing labeling requirements will make it much easier for businesses to dive in, and the highly accurate, efficient AI-driven automation they enable will mean that far more companies will be able to deploy AI in a wider range of mission-critical situations. For IBM, the hope is that the computing power of foundation models can eventually be brought to every enterprise in a frictionless hybrid-cloud environment.

There are numerous, real-world applications for AI systems today. Below are some of the most common use cases:

Speech recognition, also known as automatic speech recognition (ASR), computer speech recognition, or speech-to-text, uses NLP to process human speech into a written format. Many mobile devices incorporate speech recognition into their systems to conduct voice search (Siri, for example) or to provide more accessibility around texting in English and many other widely used languages.

Online virtual agents and chatbots are replacing human agents along the customer journey. They answer frequently asked questions (FAQ) around topics, like shipping, or provide personalized advice, cross-selling products or suggesting sizes for users, changing the way we think about customer engagement across websites and social media platforms. Examples include messaging bots on e-commerce sites with virtual agents, messaging apps, such as Slack and Facebook Messenger, and tasks usually done by virtual assistants and voice assistants.

Computer vision enables computers and systems to derive meaningful information from digital images, videos and other visual inputs, and to take action based on those inputs. This ability to provide recommendations distinguishes it from image recognition tasks. Powered by convolutional neural networks, computer vision has applications within photo tagging in social media, radiology imaging in healthcare, and self-driving cars within the automotive industry.

In supply chains, adaptive robotics act on Internet of Things (IoT) device information, and structured and unstructured data to make autonomous decisions. NLP tools can understand human speech and react to what they are being told. Predictive analytics are applied to demand responsiveness, inventory and network optimization, preventative maintenance and digital manufacturing. Search and pattern recognition algorithms, which are no longer just predictive but hierarchical, analyze real-time data, helping supply chains to react to machine-generated, augmented intelligence, while providing instant visibility and transparency.

The weather models broadcasters rely on to make accurate forecasts consist of complex algorithms run on supercomputers. Machine-learning techniques enhance these models by making them more applicable and precise.

In anomaly detection, AI models can comb through large amounts of data and discover atypical data points within a dataset. These anomalies can raise awareness around faulty equipment, human error, or breaches in security.
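As a minimal sketch of the anomaly detection idea, the code below flags values that lie far from the mean, measured in standard deviations (a z-score). This simple statistical rule stands in for the AI models mentioned above; the sensor readings and the threshold of 2.0 are assumptions for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, so nothing stands out
    return [
        (i, v) for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical temperature readings from one piece of equipment.
readings = [20.1, 19.8, 20.3, 20.0, 19.9, 35.0, 20.2, 20.1]
print(find_anomalies(readings))  # [(5, 35.0)]
```

The threshold is a tuning choice: on small samples a single outlier also inflates the standard deviation, so a value that is too strict can hide the very point it is meant to catch.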

The idea of "a machine that thinks" dates back to ancient Greece. But since the advent of electronic computing (and relative to some of the topics discussed in this article) important events and milestones in the evolution of artificial intelligence include the following:

- 1950: Alan Turing publishes Computing Machinery and Intelligence. In this paper, Turing, famous for breaking the German ENIGMA code during WWII and often referred to as the "father of computer science," asks the following question: "Can machines think?" From there, he offers a test, now famously known as the "Turing Test," where a human interrogator would try to distinguish between a computer and human text response. While this test has undergone much scrutiny since it was published, it remains an important part of the history of AI, as well as an ongoing concept within philosophy as it utilizes ideas around linguistics.

- 1956: John McCarthy coins the term "artificial intelligence" at the first-ever AI conference at Dartmouth College. (McCarthy would go on to invent the Lisp language.) Later that year, Allen Newell, J.C. Shaw, and Herbert Simon create the Logic Theorist, the first-ever running AI software program.

- 1958: Frank Rosenblatt builds the Mark 1 Perceptron, the first computer based on a neural network that "learned" through trial and error. In 1969, Marvin Minsky and Seymour Papert publish a book titled Perceptrons, which becomes both the landmark work on neural networks and, at least for a while, an argument against future neural network research projects.

- 1980s: Neural networks that use a backpropagation algorithm to train themselves become widely used in AI applications.

- 1995: Stuart Russell and Peter Norvig publish Artificial Intelligence: A Modern Approach, which becomes one of the leading textbooks in the study of AI. In it, they delve into four potential goals or definitions of AI, which differentiate computer systems on the basis of rationality and thinking vs. acting.

- 1997: IBM's Deep Blue beats then-world chess champion Garry Kasparov in a chess match (and rematch).

- 2004: John McCarthy writes a paper, What Is Artificial Intelligence?, and proposes an often-cited definition of AI.

- 2011: IBM Watson beats champions Ken Jennings and Brad Rutter at Jeopardy!

- 2015: Baidu's Minwa supercomputer uses a special kind of deep neural network called a convolutional neural network to identify and categorize images with a higher rate of accuracy than the average human.

- 2016: DeepMind's AlphaGo program, powered by a deep neural network, beats Lee Sedol, the world champion Go player, in a five-game match. The victory is significant given the huge number of possible moves as the game progresses (over 14.5 trillion after just four moves!). Google had acquired DeepMind in 2014 for a reported USD 400 million.

- 2023: The rise of large language models (LLMs), such as ChatGPT, creates an enormous change in the performance of AI and its potential to drive enterprise value. With these new generative AI practices, deep-learning models can be pre-trained on vast amounts of raw, unlabeled data.

MIT News | Massachusetts Institute of Technology

AI accelerates problem-solving in complex scenarios

While Santa Claus may have a magical sleigh and nine plucky reindeer to help him deliver presents, for companies like FedEx, the optimization problem of efficiently routing holiday packages is so complicated that they often employ specialized software to find a solution.

This software, called a mixed-integer linear programming (MILP) solver, splits a massive optimization problem into smaller pieces and uses generic algorithms to try and find the best solution. However, the solver could take hours — or even days — to arrive at a solution.

The process is so onerous that a company often must stop the software partway through, accepting a solution that is not ideal but the best that could be generated in a set amount of time.

Researchers from MIT and ETH Zurich used machine learning to speed things up.

They identified a key intermediate step in MILP solvers that has so many potential solutions it takes an enormous amount of time to unravel, which slows the entire process. The researchers employed a filtering technique to simplify this step, then used machine learning to find the optimal solution for a specific type of problem.

Their data-driven approach enables a company to use its own data to tailor a general-purpose MILP solver to the problem at hand.

This new technique sped up MILP solvers between 30 and 70 percent, without any drop in accuracy. One could use this method to obtain an optimal solution more quickly or, for especially complex problems, a better solution in a tractable amount of time.

This approach could be used wherever MILP solvers are employed, such as by ride-hailing services, electric grid operators, vaccination distributors, or any entity faced with a thorny resource-allocation problem.

“Sometimes, in a field like optimization, it is very common for folks to think of solutions as either purely machine learning or purely classical. I am a firm believer that we want to get the best of both worlds, and this is a really strong instantiation of that hybrid approach,” says senior author Cathy Wu, the Gilbert W. Winslow Career Development Assistant Professor in Civil and Environmental Engineering (CEE), and a member of the Laboratory for Information and Decision Systems (LIDS) and the Institute for Data, Systems, and Society (IDSS).

Wu wrote the paper with co-lead authors Sirui Li, an IDSS graduate student, and Wenbin Ouyang, a CEE graduate student; as well as Max Paulus, a graduate student at ETH Zurich. The research will be presented at the Conference on Neural Information Processing Systems.

Tough to solve

MILP problems have an exponential number of potential solutions. For instance, say a traveling salesperson wants to find the shortest path to visit several cities and then return to their city of origin. If there are many cities which could be visited in any order, the number of potential solutions might be greater than the number of atoms in the universe.
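The growth described above can be checked with a few lines of arithmetic. The sketch below counts distinct round trips for a symmetric traveling salesperson problem as (n - 1)! / 2 and compares the count with 10^80, a commonly quoted rough estimate for the number of atoms in the observable universe. Both the symmetric-distance assumption and that estimate are assumptions of this example, not figures from the article.

```python
import math

ATOMS_IN_UNIVERSE = 10 ** 80  # rough, commonly quoted estimate; used only for scale


def tour_count(n_cities):
    """Distinct round trips through n cities when distances are symmetric."""
    if n_cities < 3:
        return 1
    # Fix the starting city, order the rest, and ignore direction of travel.
    return math.factorial(n_cities - 1) // 2


def cities_needed_to_exceed(limit):
    """Smallest number of cities whose tour count is greater than `limit`."""
    n = 3
    while tour_count(n) <= limit:
        n += 1
    return n


for n in (5, 10, 20):
    print(n, "cities:", tour_count(n), "tours")
print("cities needed to pass 10^80 tours:", cities_needed_to_exceed(ATOMS_IN_UNIVERSE))
```

Under these assumptions, a few dozen cities are already enough to rule out checking every tour one by one.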

“These problems are called NP-hard, which means it is very unlikely there is an efficient algorithm to solve them. When the problem is big enough, we can only hope to achieve some suboptimal performance,” Wu explains.

An MILP solver employs an array of techniques and practical tricks that can achieve reasonable solutions in a tractable amount of time.

A typical solver uses a divide-and-conquer approach, first splitting the space of potential solutions into smaller pieces with a technique called branching. Then, the solver employs a technique called cutting to tighten up these smaller pieces so they can be searched faster.

Cutting uses a set of rules that tighten the search space without removing any feasible solutions. These rules are generated by a few dozen algorithms, known as separators, that have been created for different kinds of MILP problems.
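The branching idea can be illustrated on a much smaller integer problem. The sketch below applies branch and bound to a 0/1 knapsack instance: each branch fixes one decision (take or skip an item), and a relaxed "fractional" bound prunes branches that cannot beat the best solution found so far. It does not implement cutting planes or separators, and it is not the solver discussed in the article; the instance and the function names are assumptions for illustration.

```python
def knapsack_branch_and_bound(values, weights, capacity):
    """Return (best_value, nodes_explored). Assumes all weights are positive."""
    # Sort by value per unit weight so the relaxed bound is easy to compute.
    items = sorted(zip(values, weights), key=lambda vw: vw[0] / vw[1], reverse=True)

    def bound(index, value, room):
        """Optimistic estimate: fill the remaining room, allowing one fractional item."""
        for v, w in items[index:]:
            if w <= room:
                value += v
                room -= w
            else:
                return value + v * room / w
        return value

    best = 0
    explored = 0

    def branch(index, value, room):
        nonlocal best, explored
        explored += 1
        best = max(best, value)
        if index == len(items) or bound(index, value, room) <= best:
            return  # prune: this subtree cannot improve on the best solution so far
        v, w = items[index]
        if w <= room:
            branch(index + 1, value + v, room - w)  # branch 1: take the item
        branch(index + 1, value, room)              # branch 2: skip the item

    branch(0, 0, capacity)
    return best, explored


best_value, nodes = knapsack_branch_and_bound([60, 100, 120], [10, 20, 30], 50)
print(best_value, "found after exploring", nodes, "nodes")
```

A tighter bound prunes more of the tree. Cutting plays a comparable role in a full MILP solver by tightening the relaxation, which is why the choice of separators affects solve time.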

Wu and her team found that the process of identifying the ideal combination of separator algorithms to use is, in itself, a problem with an exponential number of solutions.

“Separator management is a core part of every solver, but this is an underappreciated aspect of the problem space. One of the contributions of this work is identifying the problem of separator management as a machine learning task to begin with,” she says.

Shrinking the solution space

She and her collaborators devised a filtering mechanism that reduces this separator search space from more than 130,000 potential combinations to around 20 options. This filtering mechanism draws on the principle of diminishing marginal returns, which says that the most benefit would come from a small set of algorithms, and adding additional algorithms won’t bring much extra improvement.

Then they use a machine-learning model to pick the best combination of algorithms from among the 20 remaining options.

This model is trained with a dataset specific to the user’s optimization problem, so it learns to choose algorithms that best suit the user’s particular task. Since a company like FedEx has solved routing problems many times before, using real data gleaned from past experience should lead to better solutions than starting from scratch each time.

The model’s iterative learning process, known as contextual bandits, a form of reinforcement learning, involves picking a potential solution, getting feedback on how good it was, and then trying again to find a better solution.
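The pick, observe, and retry loop can be sketched with a very simple contextual bandit. The code below uses an epsilon-greedy rule with one running average per (context, option) pair. This is a generic textbook-style illustration and not the researchers' model; the contexts, option names, and reward values are hypothetical.

```python
import random
from collections import defaultdict


class EpsilonGreedyContextualBandit:
    def __init__(self, arms, epsilon=0.1, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (context, arm) -> number of trials
        self.values = defaultdict(float)  # (context, arm) -> mean observed reward

    def choose(self, context):
        """Explore with probability epsilon, otherwise pick the best-known arm."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return max(self.arms, key=lambda arm: self.values[(context, arm)])

    def update(self, context, arm, reward):
        """Fold the new feedback into the running mean for this (context, arm)."""
        key = (context, arm)
        self.counts[key] += 1
        self.values[key] += (reward - self.values[key]) / self.counts[key]


# Hypothetical average speedups for two solver configurations on two problem types.
TRUE_SPEEDUP = {
    ("routing", "config_a"): 0.3, ("routing", "config_b"): 0.7,
    ("scheduling", "config_a"): 0.6, ("scheduling", "config_b"): 0.2,
}


def simulate(rounds=2000, seed=1):
    bandit = EpsilonGreedyContextualBandit(["config_a", "config_b"], seed=seed)
    rng = random.Random(seed)
    for _ in range(rounds):
        context = rng.choice(["routing", "scheduling"])
        arm = bandit.choose(context)                                 # pick an option
        reward = TRUE_SPEEDUP[(context, arm)] + rng.gauss(0, 0.05)   # noisy feedback
        bandit.update(context, arm, reward)                          # learn from it
    return bandit


bandit = simulate()
for key in sorted(bandit.values):
    print(key, round(bandit.values[key], 2))
```

The context is what separates this from a plain bandit: the same option can be a good choice for one kind of problem and a poor one for another, so estimates are kept per context.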

This data-driven approach accelerated MILP solvers between 30 and 70 percent without any drop in accuracy. Moreover, the speedup was similar when they applied it to a simpler, open-source solver and a more powerful, commercial solver.

In the future, Wu and her collaborators want to apply this approach to even more complex MILP problems, where gathering labeled data to train the model could be especially challenging. Perhaps they can train the model on a smaller dataset and then tweak it to tackle a much larger optimization problem, she says. The researchers are also interested in interpreting the learned model to better understand the effectiveness of different separator algorithms.

This research is supported, in part, by MathWorks, the National Science Foundation (NSF), the MIT Amazon Science Hub, and MIT’s Research Support Committee.

Share this news article on:

Related links.

- Project website

- Laboratory for Information and Decision Systems

- Institute for Data, Systems, and Society

- Department of Civil and Environmental Engineering

Related Topics

- Computer science and technology

- Artificial intelligence

- Laboratory for Information and Decision Systems (LIDS)

- Civil and environmental engineering

- National Science Foundation (NSF)

Related Articles

Machine learning speeds up vehicle routing

Q&A: Cathy Wu on developing algorithms to safely integrate robots into our world

Keeping the balance: How flexible nuclear operation can help add more wind and solar to the grid

Previous item Next item

More MIT News

A biomedical engineer pivots from human movement to women’s health

Read full story →

MIT tops among single-campus universities in US patents granted

A new way to detect radiation involving cheap ceramics

A crossroads for computing at MIT

Growing our donated organ supply

New AI method captures uncertainty in medical images

- More news on MIT News homepage →

Massachusetts Institute of Technology 77 Massachusetts Avenue, Cambridge, MA, USA

- Map (opens in new window)

- Events (opens in new window)

- People (opens in new window)

- Careers (opens in new window)

- Accessibility

- Social Media Hub

- MIT on Facebook

- MIT on YouTube

- MIT on Instagram

- April 11, 2024 | Unlocking the Future of VR: New Algorithm Turns iPhones Into Holographic Projectors

- April 11, 2024 | Light-Matter Particle Breakthrough Could Change Displays Forever

- April 11, 2024 | Unlocking AI’s Black Box: New Formula Explains How They Detect Relevant Patterns

- April 11, 2024 | Sunflower Secrets Unveiled: Multiple Origins of Flower Symmetry Discovered

- April 11, 2024 | Unlocking Brain Health Through the Science of Nutrition

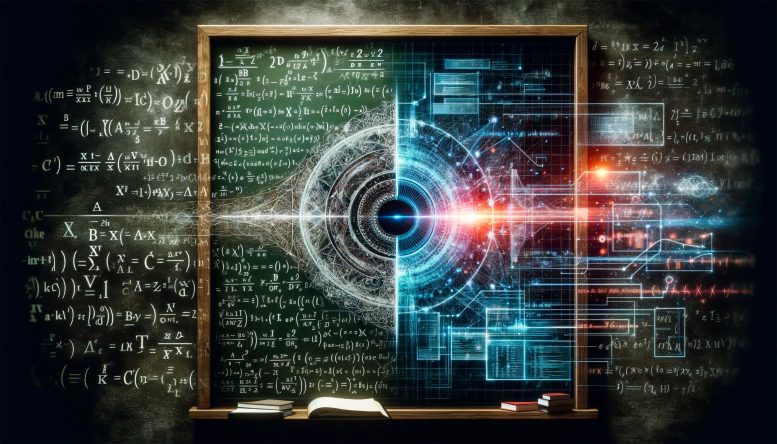

The Intersection of Math and AI: A New Era in Problem-Solving

By Whitney Clavin, California Institute of Technology (Caltech) December 11, 2023

The Mathematics and Machine Learning 2023 conference at Caltech highlights the growing integration of machine learning in mathematics, offering new solutions to complex problems and advancing algorithm development.

Conference is exploring burgeoning connections between the two fields.

Traditionally, mathematicians jot down their formulas using paper and pencil, seeking out what they call pure and elegant solutions. In the 1970s, they hesitantly began turning to computers to assist with some of their problems. Decades later, computers are often used to crack the hardest math puzzles. Now, in a similar vein, some mathematicians are turning to machine learning tools to aid in their numerical pursuits.

Embracing Machine Learning in Mathematics

“Mathematicians are beginning to embrace machine learning,” says Sergei Gukov, the John D. MacArthur Professor of Theoretical Physics and Mathematics at Caltech, who put together the Mathematics and Machine Learning 2023 conference, which is taking place at Caltech December 10–13.

“There are some mathematicians who may still be skeptical about using the tools,” Gukov says. “The tools are mischievous and not as pure as using paper and pencil, but they work.”

Machine Learning: A New Era in Mathematical Problem Solving

Machine learning is a subfield of AI, or artificial intelligence, in which a computer program is trained on large datasets and learns to find new patterns and make predictions. The conference, the first put on by the new Richard N. Merkin Center for Pure and Applied Mathematics, will help bridge the gap between developers of machine learning tools (the data scientists) and the mathematicians. The goal is to discuss ways in which the two fields can complement each other.

Mathematics and Machine Learning: A Two-Way Street

“It’s a two-way street,” says Gukov, who is the director of the new Merkin Center, which was established by Caltech Trustee Richard Merkin.

“Mathematicians can help come up with clever new algorithms for machine learning tools like the ones used in generative AI programs like ChatGPT, while machine learning can help us crack difficult math problems.”

Yi Ni, a professor of mathematics at Caltech, plans to attend the conference, though he says he does not use machine learning in his own research, which involves the field of topology and, specifically, the study of mathematical knots in lower dimensions. “Some mathematicians are more familiar with these advanced tools than others,” Ni says. “You need to know somebody who is an expert in machine learning and willing to help. Ultimately, I think AI for math will become a subfield of math.”

The Riemann Hypothesis and Machine Learning

One tough problem that may unravel with the help of machine learning, according to Gukov, is known as the Riemann hypothesis. Named after the 19th-century mathematician Bernhard Riemann, this problem is one of seven Millennium Problems selected by the Clay Mathematics Institute; a $1 million prize will be awarded for the solution to each problem.

The Riemann hypothesis centers around a formula known as the Riemann zeta function, which packages information about prime numbers. If proved true, the hypothesis would provide a new understanding of how prime numbers are distributed. Machine learning tools could help crack the problem by providing a new way to run through more possible iterations of the problem.
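For reference, the standard textbook definitions behind this paragraph can be written out as follows. The notation ($s$ for the complex variable, $p$ for primes) is the conventional one and is not taken from the article.

```latex
% Zeta function as a sum over integers and, equivalently, a product over primes
\zeta(s) \;=\; \sum_{n=1}^{\infty} \frac{1}{n^{s}}
        \;=\; \prod_{p\ \text{prime}} \frac{1}{1 - p^{-s}},
\qquad \operatorname{Re}(s) > 1 .

% Riemann hypothesis, stated for the analytic continuation of \zeta
\zeta(s) = 0 \ \text{and}\ 0 < \operatorname{Re}(s) < 1
\;\Longrightarrow\; \operatorname{Re}(s) = \tfrac{1}{2} .
```

The product over primes is what lets the function "package" information about prime numbers: each prime contributes exactly one factor.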

Mathematicians and Machine Learning: A Synergistic Relationship

“Machine learning tools are very good at recognizing patterns and analyzing very complex problems,” Gukov says.

Ni agrees that machine learning can serve as a helpful assistant. “Machine learning solutions may not be as beautiful, but they can find new connections,” he says. “But you still need a mathematician to turn the questions into something computers can solve.”

Knot Theory and Machine Learning

Gukov has used machine learning himself to untangle problems in knot theory. Knot theory is the study of abstract knots, which are similar to the knots you might find on a shoestring, but the ends of the strings are closed into loops. These mathematical knots can be entwined in various ways, and mathematicians like Gukov want to understand their structures and how they relate to each other. The work has relationships to other fields of mathematics such as representation theory and quantum algebra, and even quantum physics.

In particular, Gukov and his colleagues are working to solve what is called the smooth Poincaré conjecture in four dimensions. The original Poincaré conjecture, which is also a Millennium Problem, was proposed by mathematician Henri Poincaré early in the 20th century. It was ultimately solved from 2002 to 2003 by Grigori Perelman (who famously turned down his prize of $1 million). The problem involves comparing spheres to certain types of manifolds that look like spheres; manifolds are shapes that are projections of higher-dimensional objects onto lower dimensions. Gukov says the problem is like asking, “Are objects that look like spheres really spheres?”

The four-dimensional smooth Poincaré conjecture holds that, in four dimensions, all manifolds that look like spheres are indeed spheres. In an attempt to solve this conjecture, Gukov and his team developed a machine learning approach to evaluate so-called ribbon knots.

“Our brain cannot handle four dimensions, so we package shapes into knots,” Gukov says. “A ribbon is where the string in a knot pierces through a different part of the string in three dimensions but doesn’t pierce through anything in four dimensions. Machine learning lets us analyze the ‘ribboness’ of knots, a yes-or-no property of knots that has applications to the smooth Poincaré conjecture.”

“This is where machine learning comes to the rescue,” write Gukov and his team in a preprint paper titled “Searching for Ribbons with Machine Learning.” “It has the ability to quickly search through many potential solutions and, more importantly, to improve the search based on the successful ‘games’ it plays. We use the word ‘games’ since the same types of algorithms and architectures can be employed to play complex board games, such as Go or chess, where the goals and winning strategies are similar to those in math problems.”

The Interplay of Mathematics and Machine Learning Algorithms

On the flip side, math can help in developing machine learning algorithms, Gukov explains. A mathematical mindset, he says, can bring fresh ideas to the development of the algorithms behind AI tools. He cites Peter Shor as an example of a mathematician who brought insight to computer science problems. Shor, who graduated from Caltech with a bachelor’s degree in mathematics in 1981, famously came up with what is known as Shor’s algorithm, a set of rules that could allow quantum computers of the future to factor integers faster than typical computers, thereby breaking digital encryption codes.

Today’s machine learning algorithms are trained on large sets of data. They churn through mountains of data on language, images, and more to recognize patterns and come up with new connections. However, data scientists don’t always know how the programs reach their conclusions. The inner workings are hidden in a so-called “black box.” A mathematical approach to developing the algorithms would reveal what’s happening “under the hood,” as Gukov says, leading to a deeper understanding of how the algorithms work and how they can be improved.

“Math,” says Gukov, “is fertile ground for new ideas.”

The conference will take place at the Merkin Center on the eighth floor of Caltech Hall.

What is AI (artificial intelligence)?

Humans and machines: a match made in productivity heaven. Our species wouldn’t have gotten very far without our mechanized workhorses. From the wheel that revolutionized agriculture to the screw that held together increasingly complex construction projects to the robot-enabled assembly lines of today, machines have made life as we know it possible. And yet, despite their seemingly endless utility, humans have long feared machines—more specifically, the possibility that machines might someday acquire human intelligence and strike out on their own.

Get to know and directly engage with senior McKinsey experts on AI

Sven Blumberg is a senior partner in McKinsey’s Düsseldorf office; Michael Chui is a partner at the McKinsey Global Institute and is based in the Bay Area office, where Lareina Yee is a senior partner; Kia Javanmardian is a senior partner in the Chicago office, where Alex Singla, the global leader of QuantumBlack, AI by McKinsey, is also a senior partner; Kate Smaje and Alex Sukharevsky are senior partners in the London office.

But we tend to view the possibility of sentient machines with fascination as well as fear. This curiosity has helped turn science fiction into actual science. Twentieth-century theoreticians, like computer scientist and mathematician Alan Turing, envisioned a future where machines could perform functions faster than humans. The work of Turing and others soon made this a reality. Personal calculators became widely available in the 1970s, and by 2016, the US census showed that 89 percent of American households had a computer. Machines, and smart machines at that, are now just an ordinary part of our lives and culture.

Those smart machines are also getting faster and more complex. Some computers have now crossed the exascale threshold, meaning they can perform as many calculations in a single second as an individual could in 31,688,765,000 years. And beyond computation, which machines have long been faster at than we have, computers and other devices are now acquiring skills and perception that were once unique to humans and a few other species.

About QuantumBlack, AI by McKinsey

QuantumBlack, McKinsey’s AI arm, helps companies transform using the power of technology, technical expertise, and industry experts. With thousands of practitioners at QuantumBlack (data engineers, data scientists, product managers, designers, and software engineers) and McKinsey (industry and domain experts), we are working to solve the world’s most important AI challenges. QuantumBlack Labs is our center of technology development and client innovation, which has been driving cutting-edge advancements and developments in AI through locations across the globe.

AI is a machine’s ability to perform the cognitive functions we associate with human minds, such as perceiving, reasoning, learning, interacting with the environment, problem-solving, and even exercising creativity. You’ve probably interacted with AI even if you don’t realize it—voice assistants like Siri and Alexa are founded on AI technology, as are some customer service chatbots that pop up to help you navigate websites.

Applied AI (simply, artificial intelligence applied to real-world problems) has serious implications for the business world. By using artificial intelligence, companies have the potential to make business more efficient and profitable. But ultimately, the value of AI isn’t in the systems themselves. Rather, it’s in how companies use these systems to assist humans, and in their ability to explain to shareholders and the public what these systems do, in a way that builds trust and confidence.

For more about AI, its history, its future, and how to apply it in business, read on.

Learn more about QuantumBlack, AI by McKinsey.

What is machine learning?

Machine learning is a form of artificial intelligence that can adapt to a wide range of inputs, including large sets of historical data, synthesized data, or human inputs. (Some machine learning algorithms are specialized in training themselves to detect patterns; this is called deep learning. See Exhibit 1.) These algorithms can detect patterns and learn how to make predictions and recommendations by processing data, rather than by receiving explicit programming instruction. Some algorithms can also adapt in response to new data and experiences to improve over time.

The volume and complexity of data that is now being generated, too vast for humans to process and apply efficiently, has increased the potential of machine learning, as well as the need for it. In the years since its widespread deployment, which began in the 1970s, machine learning has had an impact on a number of industries, including achievements in medical-imaging analysis and high-resolution weather forecasting.

What is deep learning?

Deep learning is a more advanced version of machine learning that is particularly adept at processing a wider range of data resources (text as well as unstructured data including images), requires even less human intervention, and can often produce more accurate results than traditional machine learning. Deep learning uses neural networks, based on the ways neurons interact in the human brain, to ingest data and process it through multiple neuron layers that recognize increasingly complex features of the data. For example, an early layer might recognize something as being in a specific shape; building on this knowledge, a later layer might be able to identify the shape as a stop sign. Similar to machine learning, deep learning uses iteration to self-correct and improve its prediction capabilities. For example, once it “learns” what a stop sign looks like, it can recognize a stop sign in a new image.
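The idea of layers building on one another can be shown with a toy forward pass. This is only a sketch: the weights below are hand-picked for illustration rather than learned, the four-value input stands in for an image, and the function names are hypothetical.

```python
import math


def relu(values):
    # Keep positive activations, zero out negative ones.
    return [max(0.0, v) for v in values]


def sigmoid(value):
    # Squash a score into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-value))


def dense(inputs, weights, biases):
    # One fully connected layer: each output is a weighted sum of all inputs plus a bias.
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(pixels):
    # Layer 1: two simple feature detectors over the four inputs
    # (one responds to a bright left half, the other to a bright right half).
    hidden = relu(dense(pixels, [[1.0, 1.0, -1.0, -1.0], [-1.0, -1.0, 1.0, 1.0]], [0.0, 0.0]))
    # Layer 2: combines the layer-1 features into a single score.
    score = dense(hidden, [[2.0, -2.0]], [-1.0])[0]
    return sigmoid(score)


print(forward([1.0, 1.0, 0.0, 0.0]))  # high score: pattern the second layer favors
print(forward([0.0, 0.0, 1.0, 1.0]))  # low score: the opposite pattern
```

In a trained network the weights would be adjusted iteratively from data, which is the self-correcting step the paragraph above describes.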

Case study: Vistra and the Martin Lake power plant

Vistra is a large power producer in the United States, operating plants in 12 states with a capacity to power nearly 20 million homes. Vistra has committed to achieving net-zero emissions by 2050. In support of this goal, as well as to improve overall efficiency, QuantumBlack, AI by McKinsey worked with Vistra to build and deploy an AI-powered heat rate optimizer (HRO) at one of its plants.

“Heat rate” is a measure of the thermal efficiency of the plant; in other words, it’s the amount of fuel required to produce each unit of electricity. To reach the optimal heat rate, plant operators continuously monitor and tune hundreds of variables, such as steam temperatures, pressures, oxygen levels, and fan speeds.

Vistra and a McKinsey team, including data scientists and machine learning engineers, built a multilayered neural network model. The model combed through two years’ worth of data at the plant and learned which combination of factors would attain the most efficient heat rate at any point in time. When the models were accurate to 99 percent or higher and run through a rigorous set of real-world tests, the team converted them into an AI-powered engine that generates recommendations every 30 minutes for operators to improve the plant’s heat rate efficiency. One seasoned operations manager at the company’s plant in Odessa, Texas, said, “There are things that took me 20 years to learn about these power plants. This model learned them in an afternoon.”

Overall, the AI-powered HRO helped Vistra achieve the following:

- approximately 1.6 million metric tons of carbon abated annually

- 67 power generators optimized

- $60 million saved in about a year

What is generative AI?

Generative AI (gen AI) is an AI model that generates content in response to a prompt. It’s clear that generative AI tools like ChatGPT and DALL-E (a tool for AI-generated art) have the potential to change how a range of jobs are performed. Much is still unknown about gen AI’s potential, but there are some questions we can answer, like how gen AI models are built, what kinds of problems they are best suited to solve, and how they fit into the broader category of AI and machine learning.

For more on generative AI and how it stands to affect business and society, check out our Explainer “What is generative AI?”

What is the history of AI?

The term “artificial intelligence” was coined in 1956 by computer scientist John McCarthy for a workshop at Dartmouth. But he wasn’t the first to write about the concepts we now describe as AI. Alan Turing introduced the concept of the “imitation game” in a 1950 paper. That’s the test of a machine’s ability to exhibit intelligent behavior, now known as the “Turing test.” He believed researchers should focus on areas that don’t require too much sensing and action, things like games and language translation. Research communities dedicated to concepts like computer vision, natural language understanding, and neural networks are, in many cases, several decades old.

MIT roboticist Rodney Brooks shared details on the four previous stages of AI:

- Symbolic AI (1956). Symbolic AI is also known as classical AI, or even GOFAI (good old-fashioned AI). The key concept here is the use of symbols and logical reasoning to solve problems. For example, we know a German shepherd is a dog, which is a mammal; all mammals are warm-blooded; therefore, a German shepherd should be warm-blooded.

The main problem with symbolic AI is that humans still need to manually encode their knowledge of the world into the symbolic AI system, rather than allowing it to observe and encode relationships on its own. As a result, symbolic AI systems struggle with situations involving real-world complexity. They also lack the ability to learn from large amounts of data.

Symbolic AI was the dominant paradigm of AI research until the late 1980s.

- Neural networks (1954, 1969, 1986, 2012). Neural networks are the technology behind the recent explosive growth of gen AI. Loosely modeling the ways neurons interact in the human brain, neural networks ingest data and process it through multiple iterations that learn increasingly complex features of the data. The neural network can then make determinations about the data, learn whether a determination is correct, and use what it has learned to make determinations about new data. For example, once it “learns” what an object looks like, it can recognize the object in a new image.

Neural networks were first proposed in 1943 in an academic paper by neurophysiologist Warren McCulloch and logician Walter Pitts. Decades later, in 1969, two MIT researchers mathematically demonstrated that neural networks could perform only very basic tasks. In 1986, there was another reversal, when computer scientist and cognitive psychologist Geoffrey Hinton and colleagues solved the neural network problem presented by the MIT researchers. In the 1990s, computer scientist Yann LeCun made major advancements in neural networks’ use in computer vision, while Jürgen Schmidhuber advanced the application of recurrent neural networks as used in language processing.

In 2012, Hinton and two of his students highlighted the power of deep learning. They applied Hinton’s algorithm to neural networks with many more layers than was typical, sparking a new focus on deep neural networks. These have been the main AI approaches of recent years.

- Traditional robotics (1968). During the first few decades of AI, researchers built robots to advance research. Some robots were mobile, moving around on wheels, while others were fixed, with articulated arms. Robots used the earliest attempts at computer vision to identify and navigate through their environments or to understand the geometry of objects and maneuver them. This could include moving around blocks of various shapes and colors. Most of these robots, just like the ones that have been used in factories for decades, rely on highly controlled environments with thoroughly scripted behaviors that they perform repeatedly. They have not contributed significantly to the advancement of AI itself.

But traditional robotics did have significant impact in one area, through a process called “simultaneous localization and mapping” (SLAM). SLAM algorithms helped contribute to self-driving cars and are used in consumer products like vacuum cleaning robots and quadcopter drones. Today, this work has evolved into behavior-based robotics, also referred to as haptic technology because it responds to human touch.

- Behavior-based robotics (1985). In the real world, there aren’t always clear instructions for navigation, decision making, or problem-solving. Insects, researchers observed, navigate very well (and are evolutionarily very successful) with few neurons. Behavior-based robotics researchers took inspiration from this, looking for ways robots could solve problems with partial knowledge and conflicting instructions. These behavior-based robots are embedded with neural networks.
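The symbolic AI stage in the list above reasons by chaining hand-written rules. The German shepherd example can be sketched as a tiny forward-chaining loop; the function name, the rule encoding, and the individual "Rex" are illustrative assumptions, not part of any particular symbolic AI system.

```python
def forward_chain(facts, rules):
    """Repeatedly apply 'is-a' rules until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for subject, category in list(derived):
            for premise, conclusion in rules:
                new_fact = (subject, conclusion)
                if category == premise and new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


# Every rule is encoded by hand, which is the limitation the text describes.
rules = [
    ("German shepherd", "dog"),
    ("dog", "mammal"),
    ("mammal", "warm-blooded"),
]
facts = {("Rex", "German shepherd")}  # "Rex" is a hypothetical individual

conclusions = forward_chain(facts, rules)
print(("Rex", "warm-blooded") in conclusions)  # prints True
```

Nothing here is learned from data: if a rule is missing, the system simply cannot reach the conclusion.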

What is artificial general intelligence?

The term “artificial general intelligence” (AGI) was coined to describe AI systems that possess capabilities comparable to those of a human. In theory, AGI could someday replicate human-like cognitive abilities including reasoning, problem-solving, perception, learning, and language comprehension. But let’s not get ahead of ourselves: the key word here is “someday.” Most researchers and academics believe we are decades away from realizing AGI; some even predict we won’t see AGI this century, or ever. Rodney Brooks, an MIT roboticist and cofounder of iRobot, doesn’t believe AGI will arrive until the year 2300.

The timing of AGI’s emergence may be uncertain. But when it does emerge—and it likely will—it’s going to be a very big deal, in every aspect of our lives. Executives should begin working to understand the path to machines achieving human-level intelligence now and making the transition to a more automated world.

For more on AGI, including the four previous attempts at AGI, read our Explainer.

What is narrow AI?

Narrow AI is the application of AI techniques to a specific and well-defined problem, such as chatbots like ChatGPT, algorithms that spot fraud in credit card transactions, and natural-language-processing engines that quickly process thousands of legal documents. Most current AI applications fall into the category of narrow AI. AGI is, by contrast, AI that’s intelligent enough to perform a broad range of tasks.

How is the use of AI expanding?

AI is a big story for all kinds of businesses, but some companies are clearly moving ahead of the pack. Our state of AI in 2022 survey showed that adoption of AI models has more than doubled since 2017, and investment has increased apace. What’s more, the specific areas in which companies see value from AI have evolved, from manufacturing and risk to the following:

- marketing and sales

- product and service development

- strategy and corporate finance

One group of companies is pulling ahead of its competitors. Leaders of these organizations consistently make larger investments in AI, level up their practices to scale faster, and hire and upskill the best AI talent. More specifically, they link AI strategy to business outcomes and “industrialize” AI operations by designing modular data architecture that can quickly accommodate new applications.

What are the limitations of AI models? How can these potentially be overcome?

We have yet to see the long-tail effect of gen AI models. This means there are some inherent risks involved in using them, both known and unknown.

The outputs gen AI models produce may often sound extremely convincing. This is by design. But sometimes the information they generate is just plain wrong. Worse, sometimes it’s biased (because it’s built on the gender, racial, and other biases of the internet and society more generally).

These models can also be manipulated to enable unethical or criminal activity. Since gen AI models burst onto the scene, organizations have become aware of users trying to “jailbreak” the models, that is, trying to get them to break their own rules and deliver biased, harmful, misleading, or even illegal content. Gen AI organizations are responding to this threat in two ways: for one thing, they’re collecting feedback from users on inappropriate content. They’re also combing through their databases, identifying prompts that led to inappropriate content, and training the model against these types of generations.

But awareness and even action don’t guarantee that harmful content won’t slip the dragnet. Organizations that rely on gen AI models should be aware of the reputational and legal risks involved in unintentionally publishing biased, offensive, or copyrighted content.

These risks can be mitigated, however, in a few ways. “Whenever you use a model,” says McKinsey partner Marie El Hoyek, “you need to be able to counter biases and instruct it not to use inappropriate or flawed sources, or things you don’t trust.” How? For one thing, it’s crucial to carefully select the initial data used to train these models to avoid including toxic or biased content. Next, rather than employing an off-the-shelf gen AI model, organizations could consider using smaller, specialized models. Organizations with more resources could also customize a general model based on their own data to fit their needs and minimize biases.

It’s also important to keep a human in the loop (that is, to make sure a real human checks the output of a gen AI model before it is published or used) and avoid using gen AI models for critical decisions, such as those involving significant resources or human welfare.

It can’t be emphasized enough that this is a new field. The landscape of risks and opportunities is likely to continue to change rapidly in the coming years. As gen AI becomes increasingly incorporated into business, society, and our personal lives, we can also expect a new regulatory climate to take shape. As organizations experiment—and create value—with these tools, leaders will do well to keep a finger on the pulse of regulation and risk.

What is the AI Bill of Rights?

The Blueprint for an AI Bill of Rights, prepared by the US government in 2022, provides a framework for how government, technology companies, and citizens can collectively ensure more accountable AI. As AI has become more ubiquitous, concerns have surfaced about a potential lack of transparency surrounding the functioning of gen AI systems, the data used to train them, issues of bias and fairness, potential intellectual property infringements, privacy violations, and more. The Blueprint comprises five principles that the White House says should “guide the design, use, and deployment of automated systems to protect [users] in the age of artificial intelligence.” They are as follows:

- The right to safe and effective systems. Systems should undergo predeployment testing, risk identification and mitigation, and ongoing monitoring to demonstrate that they are adhering to their intended use.

- Protections against discrimination by algorithms. Algorithmic discrimination is when automated systems contribute to unjustified different treatment of people based on their race, color, ethnicity, sex, religion, age, and more.

- Protections against abusive data practices, via built-in safeguards. Users should also have agency over how their data is used.

- The right to know that an automated system is being used, and a clear explanation of how and why it contributes to outcomes that affect the user.

- The right to opt out, and access to a human who can quickly consider and fix problems.

At present, more than 60 countries or blocs have national strategies governing the responsible use of AI (Exhibit 2). These include Brazil, China, the European Union, Singapore, South Korea, and the United States. The approaches taken vary from guidelines-based approaches, such as the Blueprint for an AI Bill of Rights in the United States, to comprehensive AI regulations that align with existing data protection and cybersecurity regulations, such as the EU’s AI Act, due in 2024.

There are also collaborative efforts between countries to set out standards for AI use. The US–EU Trade and Technology Council is working toward greater alignment between Europe and the United States. The Global Partnership on Artificial Intelligence, formed in 2020, has 29 members including Brazil, Canada, Japan, the United States, and several European countries.

Even though AI regulations are still being developed, organizations should act now to avoid legal, reputational, organizational, and financial risks. In an environment of public concern, a misstep could be costly. Here are six no-regrets, preemptive actions organizations can implement today:

- Transparency. Create an inventory of models, classifying them in accordance with regulation, and keep a record of all usage across the organization that is clear to people both inside and outside it.

- Governance. Implement a governance structure for AI and gen AI that ensures sufficient oversight, authority, and accountability both within the organization and with third parties and regulators.

- Data, model, and technology management.

  - Data management. Proper data management includes awareness of data sources, data classification, data quality and lineage, intellectual property, and privacy management.

  - Model management. Organizations should establish principles and guardrails for AI development and use them to ensure all AI models uphold fairness and bias controls.

  - Cybersecurity and technology management. Establish strong cybersecurity and technology to ensure a secure environment where unauthorized access or misuse is prevented.

- Individual rights. Make users aware when they are interacting with an AI system, and provide clear instructions for use.

How can organizations scale up their AI efforts from ad hoc projects to full integration?

Most organizations are dipping a toe into the AI pool—not cannonballing. Slow progress toward widespread adoption is likely due to cultural and organizational barriers. But leaders who effectively break down these barriers will be best placed to capture the opportunities of the AI era. And—crucially—companies that can’t take full advantage of AI are already being sidelined by those that can, in industries like auto manufacturing and financial services.

To scale up AI, organizations can make three major shifts:

- Move from siloed work to interdisciplinary collaboration. AI projects shouldn’t be limited to discrete pockets of organizations. Rather, AI has the biggest impact when it’s employed by cross-functional teams with a mix of skills and perspectives, enabling AI to address broad business priorities.

- Empower frontline data-based decision making. AI has the potential to enable faster, better decisions at all levels of an organization. But for this to work, people at all levels need to trust the algorithms’ suggestions and feel empowered to make decisions. (Equally, people should be able to override the algorithm or make suggestions for improvement when necessary.)

- Adopt and bolster an agile mindset. The agile test-and-learn mindset will help reframe mistakes as sources of discovery, allaying the fear of failure and speeding up development.

This article was updated in April 2024; it was originally published in April 2023.

How Leaders Are Using AI as a Problem-Solving Tool

Leaders face more complex decisions than ever before. For example, many must deliver new and better services for their communities while meeting sustainability and equity goals. At the same time, many need to find ways to operate and manage their budgets more efficiently. So how can these leaders make complex decisions and get them right in an increasingly tricky business landscape? The answer lies in harnessing technological tools like Artificial Intelligence (AI).

CHONGQING, CHINA - AUGUST 22: A visitor interacts with a NewGo AI robot during the Smart China Expo 2022 on August 22, 2022 in Chongqing, China. The expo, held annually in Chongqing since 2018, is a platform to promote global exchanges of smart technologies and international cooperation in the smart industry. (Photo by Chen Chao/China News Service via Getty Images)

What is AI?

AI can help leaders in several different ways. It can be used to process and make decisions on large amounts of data more quickly and accurately. AI can also help identify patterns and trends that would otherwise be undetectable. This information can then be used to inform strategic decision-making, which is why AI is becoming an increasingly important tool for businesses and governments. A recent study by PwC found that 52% of companies accelerated their AI adoption plans in the last year. In addition, 86% of companies believe that AI will become a mainstream technology at their company imminently. As AI becomes more central in the business world, leaders need to understand how this technology works and how they can best integrate it into their operations.

At its simplest, AI is a computer system that can learn and work independently without human intervention. This ability makes AI a powerful tool. With AI, businesses and public agencies can automate tasks, get insights from data, and make decisions with little or no human input. Consequently, AI can be a valuable problem-solving tool for leaders across the private and public sectors, primarily through three methods.

1) Automation

One of the most beneficial ways AI can help leaders is by automating tasks, which frees up time to focus on other essential work. For example, AI can help a city save valuable human resources by automating parking enforcement. Automation can also improve the accuracy of violation detection and prevent costly mistakes. It can help with tasks like appointment scheduling and fraud detection as well.

2) Insights from data

Another way AI can help leaders solve problems is by providing insights from data. With AI, businesses can gather large amounts of data and then use that data to make better decisions. For example, if a company is trying to decide which products to sell, AI can be used to gather data about customer buying habits and then recommend which products to market.
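As a minimal sketch of the product example, the snippet below ranks products by how many distinct customers bought them. The purchase records and the `recommend_products` helper are hypothetical and invented purely for illustration; a production system would likely rely on far richer data and models.

```python
from collections import defaultdict

# Hypothetical purchase log: (customer_id, product) pairs.
purchases = [
    ("c1", "umbrella"), ("c1", "raincoat"),
    ("c2", "umbrella"), ("c3", "umbrella"),
    ("c3", "sunscreen"), ("c2", "raincoat"),
    ("c1", "umbrella"),  # repeat purchase by the same customer
]

def recommend_products(records, top_n=2):
    """Rank products by the number of distinct customers who bought them."""
    buyers = defaultdict(set)
    for customer, product in records:
        buyers[product].add(customer)
    # Sort by buyer count (descending), then by name for a stable order.
    ranked = sorted(buyers, key=lambda p: (-len(buyers[p]), p))
    return ranked[:top_n]

top_products = recommend_products(purchases)
print(top_products)
```

Counting distinct buyers rather than raw purchases keeps one enthusiastic repeat customer from dominating the ranking, which is one simple design choice among many.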

3) Simulations

Finally, AI can help leaders solve problems by allowing them to create simulations. With AI, organizations can test out different decision scenarios and see what the potential outcomes could be. This can help leaders make better decisions by examining the consequences of their choices. For example, a city might use AI to simulate different traffic patterns to see how a new road layout would impact congestion.
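To make the traffic example concrete, here is a toy simulation that compares two road layouts under the same randomly generated demand. Everything in it is an assumption made for illustration: the `average_queue` helper, the arrival range, and the idea that a layout can be summarized by how many cars clear per time step. Real traffic models are far more detailed.

```python
import random

def average_queue(capacity_per_step, arrivals):
    """Average number of cars waiting, given how many cars can clear per step."""
    queue = 0
    total = 0
    for arriving in arrivals:
        # Cars join the queue, then up to `capacity_per_step` of them leave.
        queue = max(0, queue + arriving - capacity_per_step)
        total += queue
    return total / len(arrivals)

# Hypothetical demand: 0 to 6 cars arrive at each time step (seeded for repeatability).
rng = random.Random(42)
arrivals = [rng.randint(0, 6) for _ in range(1000)]

# Two hypothetical layouts that differ only in how many cars clear per step.
current_layout = average_queue(capacity_per_step=3, arrivals=arrivals)
proposed_layout = average_queue(capacity_per_step=4, arrivals=arrivals)

print(f"current layout:  {current_layout:.2f} cars waiting on average")
print(f"proposed layout: {proposed_layout:.2f} cars waiting on average")
```

Feeding both layouts the same arrival sequence isolates the effect of the layout itself, so any difference in the averages comes from the design change rather than from random variation in demand.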

Choosing the Right Tools

“Artificial intelligence and machine learning technologies can revolutionize how governments and businesses solve real-world problems,” said Chris Carson, CEO of Hayden AI, a global leader in intelligent enforcement technologies powered by artificial intelligence. His company addresses a problem once thought unsolvable in the transit world: managing illegal parking in bus lanes in a cost-effective, scalable way.

Illegal parking in bus lanes is a major problem for cities and their transit agencies. Cars and trucks illegally parked in bus lanes force buses to merge into general traffic lanes, significantly slowing down transit service and making riders’ trips longer. That’s where a company like Hayden AI comes in. “Hayden AI uses artificial intelligence and machine learning algorithms to detect and process illegal parking in bus lanes in real-time so that cities can take proactive measures to address the problem,” Carson observes.

Illegal parking in bus lanes is a huge problem for transit agencies. Hayden AI works with transit agencies to fix this problem by installing its AI-powered camera systems on buses to conduct automated enforcement of parking violations in bus lanes.

In this case, an AI-powered camera system is installed on each bus. The camera system uses computer vision to “watch” the street for illegal parking in the bus lane. When it detects a traffic violation, it sends the data back to the parking authority. This allows the parking authority to take action, such as sending a ticket to the offending vehicle’s owner.

The effectiveness of AI depends entirely on how you use it. As former Accenture chief technology strategist Bob Suh notes in the Harvard Business Review, problem-solving works best when it combines AI with human ingenuity. “In other words, it’s not about the technology itself; it’s about how you use the technology that matters. AI is not a panacea for all ills. Still, when incorporated into a company’s problem-solving repertoire, it can be an enormously powerful tool,” concludes Terence Mauri, founder of Hack Future Lab, a global think tank.

Split the Responsibility

Huda Khan, an academic researcher from the University of Aberdeen, believes that AI is critical for international companies’ success, especially in the era of disruption. Khan is calling on international marketing academics to examine how such transformative approaches inform competitive business practices, as are fellow international marketing academics Michael Christofi from the Cyprus University of Technology; Richard Lee from the University of South Australia; Viswanathan Kumar from St. John’s University; and Kelly Hewett from the University of Tennessee. “AI is very good at automating repetitive tasks, such as customer service or data entry. But it’s not so good at creative tasks, such as developing new products,” Khan says. “So, businesses need to think about what tasks they want to automate and what tasks they want to keep for humans.”

Khan believes that businesses need to split the responsibility between AI and humans. For example, Hayden AI’s system is highly accurate and only sends evidence packages of potential violations for human review. Once the data is sent, human analysis is still needed to make the final decision. But with much less work to do, government agencies can devote their employees to tasks that can’t be automated.
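The division of labor described here can be sketched as a two-stage pipeline: software filters detections into evidence packages, and a person makes the final call. This is only an illustration, not Hayden AI's actual system. The `Detection` class, the confidence scores, and the 0.8 threshold are all assumptions introduced for the example.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    plate: str
    confidence: float  # hypothetical model confidence that a violation occurred (0 to 1)

def build_review_queue(detections, threshold=0.8):
    """Keep only likely violations; these go to a human, not straight to a ticket."""
    return [d for d in detections if d.confidence >= threshold]

def issue_tickets(review_queue, human_decision):
    """The human reviewer makes the final call on each evidence package."""
    return [d.plate for d in review_queue if human_decision(d)]

detections = [
    Detection("ABC123", 0.95),
    Detection("XYZ789", 0.40),  # too uncertain to forward for review
    Detection("LMN456", 0.88),
]

review_queue = build_review_queue(detections)
# Stand-in for a human reviewer who rejects one package after inspection.
tickets = issue_tickets(review_queue, human_decision=lambda d: d.plate != "LMN456")
print(len(review_queue), tickets)
```

Passing the human decision in as a function keeps the automated filter and the final judgment separate, mirroring the split of responsibility between AI and people.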

Backed up by efficient, effective data analysis, human problem-solving can be more innovative than ever. Like all business transitions, developing the best system for combining human and AI work might take some experimentation, but it can significantly impact future success. For example, if a company is trying to improve its customer service, it can use AI startup Satisfi’s natural language processing technology. This technology can understand a customer’s question and find the best answer from a company’s knowledge base. Likewise, if a company is trying to increase sales, it can use AI startup Persado’s marketing language generation technology. This technology can be used to create more effective marketing campaigns by understanding what motivates customers and then generating language that is more likely to persuade them to make a purchase.

Look at the Big Picture